![Ken Thompson and Dennis Ritchie at a PDP-11. Peter Hamer [CC BY-SA 2.0]](https://hackaday.com/wp-content/uploads/2019/10/1279px-Ken_Thompson_sitting_and_Dennis_Ritchie_at_PDP-11_2876612463.jpg?w=400)

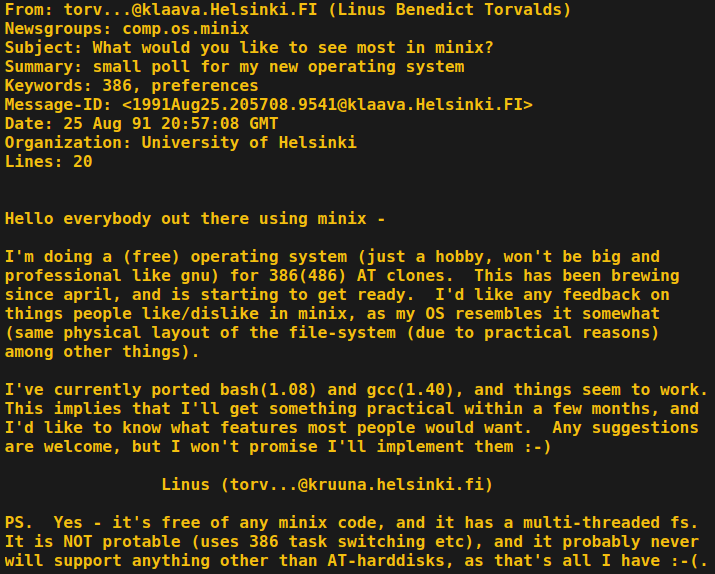

The official answer to that question is simple. UNIX® is any operating system descended from the original Bell Labs software developed by Thompson, Ritchie, et al. in 1969 and bearing a licence from Bell Labs or the successor organisations that own the UNIX® name. Thus, for example, HP-UX as shipped on Hewlett Packard’s enterprise machinery is one of several commercially available UNIXes, while the Ubuntu Linux distribution on which this is being written is not.

The real answer is considerably less clear. It depends on how far you view UNIX as an ecosystem rather than as a matter of heritage, specification compliance, or even user experience. Names such as GNU, Linux, BSD, and MINIX enter the fray, and you could be forgiven for asking: would the real UNIX please stand up?

When You Could Write Off In The Mail For UNIX On A Tape

![You too could have sent off for a copy of 1970s UNIX, if you'd had a DEC to run it on. Hannes Grobe 23:27 [CC BY-SA 2.5]](https://hackaday.com/wp-content/uploads/2019/10/Magnetic-tape_hg.jpg?w=400)

UNIX had by then become a significant business proposition for AT&T, owner of Bell Labs, and by extension a piece of commercial software that attracted hefty licence fees once Bell Labs was freed from its court-imposed obligations. This in turn led developers to seek to break away from its monopoly, among them Richard Stallman, whose GNU project, started in 1983, aimed to produce an entirely open-source, UNIX-compatible operating system. Its name is a recursive acronym, “GNU’s Not UNIX”, which states categorically its position with respect to the Bell Labs original, yet the project provides many software components which, while they might not be UNIX as such, are certainly a lot like it. By the end of the 1980s GNU had been joined in the open-source camp by BSD Net/1 and its descendants, newly freed from legacy UNIX code.

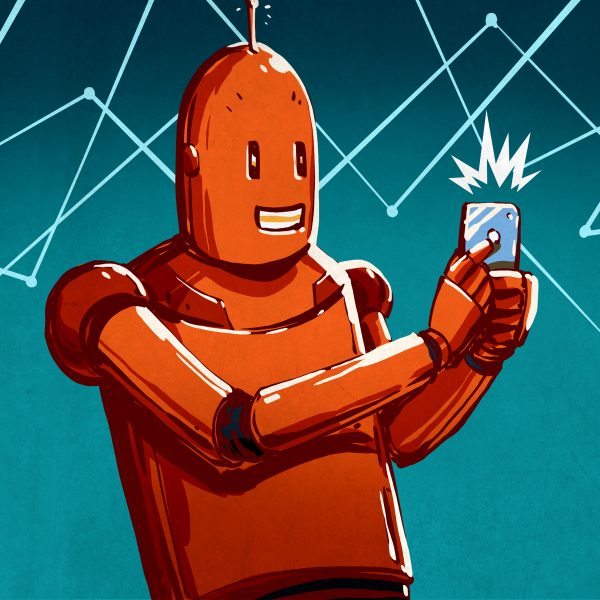

“It Won’t Be Big And Professional Like GNU”

In the closing years of the 1980s Andrew S. Tanenbaum, an academic at a Dutch university, wrote a book, “Operating Systems: Design and Implementation”. Its teaching example was a UNIX-like operating system called MINIX, which was widely adopted in universities and by enthusiasts as an accessible alternative to UNIX that would run on inexpensive desktop microcomputers such as i386 PCs or 68000-based Commodore Amigas and Atari STs. Among those enthusiasts in 1991 was a University of Helsinki student, Linus Torvalds, who, dissatisfied with MINIX’s kernel, set about writing his own. The result, eventually released as Linux, soon outgrew its MINIX roots and was combined with components of the GNU project, in place of GNU’s own HURD kernel, to produce the GNU/Linux operating system that many of us use today.

So, here we are in 2019, and despite a few lesser-known operating systems and some bumps in the road such as Caldera Systems’ attempted legal attack on Linux in 2003, we have three broad groupings in the mainstream UNIX-like arena. There is “real” closed-source UNIX® such as IBM AIX, Solaris, or HP-UX, there is “Has roots in UNIX” such as the BSD family including MacOS, and there is “Definitely not UNIX but really similar to it” such as the GNU/Linux family of distributions. In terms of what they are capable of, there is less distinction between them than vendors would have you believe, unless you are fond of splitting operating-system hairs. Indeed, even users of the closed-source variants will frequently find themselves running open-source code from GNU and other origins.

At 50 years old, then, the broader UNIX-like ecosystem, which we’ll take to include the likes of GNU/Linux and BSD, is in great shape. At our level it’s not worth worrying too much about which is the “real” UNIX, because all of these projects have benefitted greatly from five decades of collective development. But it does raise an interesting question: what about the next five decades? Can a solution for timesharing on a 1960s minicomputer continue to adapt to the hardware and demands of mid-21st-century computing? Our guess is that it will: not in the sense that your UNIX clone in twenty years will be identical to the one you have now, but in that the things that have kept it relevant for 50 years will continue to do so for the foreseeable future. We are using UNIX and its clones at 50 because they have proved versatile enough to evolve to fit the needs of each successive generation, and it’s not unreasonable to expect this to continue. We look forward to seeing the directions it takes.

As always, the comments are open.

Portable, Linus?

B^)

Capable of being absorbed and used by the protovirus. (When is the next episode coming??)

New season Intro:

“My name is Linus Benedict Torvalds. Over three centuries ago, I designed software that forms the backbone of computing to this very day. Regardless of the countless extensions and branches of its version tree, the kernel remains as intended.

And now at long last, it is ready for it’s true purpose.

My name was Linus Benedict Torvalds. The year of Linux has come at last.”

Surely Torvalds would not write something like “for it’s true purpose”.

Being Finnish and so having had to learn English in a methodical way, Torvalds probably would write “for its true purpose”, since he is likely more aware of English grammar than many native English speakers!

While I used Unix in college (early 1980s), it wasn’t really useful for real-time needs. Timesharing has its applications, but controlling a bunch of relays and contactors wasn’t one of them (ha). Where Unix won us over, though, was BSD Sockets and networking. Now we could link our real-time systems, and hackers could break their way in and blow up the world.

Or steal 75 cents’ worth of time and get some military secrets. The Cuckoo’s Egg.

Sventek was back!

“Those computers will never amount to much.”

What complicates it is the legalities. Microsoft bought the source code, but had to call their product Xenix because they weren’t allowed to use “Linux”.

There were a bunch of operating systems that were Unix-like in the eighties. Microware OS-9 never tried to be Unix, but had aspects of it. Others seemed more vague; at a distance I thought they were less about being compatible than about including important concepts from Unix. But one time in alt.folklore.computers I posted about some of them, and Dennis Ritchie replied that Mark Williams’ Coherent operating system was very Unix-like.

It was the grail to seek out, but for a long time the software and the hardware were too expensive for the hobbyist. So I made do with OS-9, never experiencing Unix, so the differences didn’t bother me. Others bought OSes from their computer’s manufacturer; who knows how Unix-like they were.

And every new thing got us wondering if this was it. GNU, Minix, the free BSD, then Linux.

“Microsoft bought the source code, but had to call their product Xenix because they weren’t allowed to use ‘Linux’.”

UNIX. They weren’t allowed to call it UNIX. Because Bell Labs owned the trademark.

Linux didn’t exist when Xenix was a thing.

Sorry, that was a slip; I knew Linux didn’t exist at the time.

KiX from Kyan for Apple II. All the commands and utilities are on disk and pulled in as needed.

AUX for later PowerPC Macs I believe.

Or was it available for Motorolas as well?

68020 and 030 with the PMMU, if I recall. And perhaps the Quadras (68040) too. A/UX 3.1 had some really cool features that would still be welcome today. Commando, for one.

I briefly had a surplus PC with Xenix on it (a friend got it from a company he worked for that was tossing it out).

Does anyone have a link to that extensive UNIX family tree file? It lists all the commercial UNIXes and their offshoots. I spent some time looking but came up with nothing. The one I’m talking about scrolls from right to left for many pages and it’s all black and white text.

Check this out. https://www.levenez.com/unix/

Thanks!

You may also wish to check out the extensive archives at http://www.tuhs.org.

Thanks. Wow, I had forgotten how many our group (about 100 people) used, and I had to administer most of them at one time or another. They were tied to the machines we bought at the time for different projects: SunOS…Solaris…OpenSolaris (even after bankruptcy), AIX (aches and pains, I called it – the worst and most different for sysadmin), HP-UX, Ultrix, OSF/1, NeXTSTEP (only one ‘crazy’ guy bought a NeXT for his personal desktop), later BSD and IRIX. We now use various flavors of Linux depending on the current whims of purchasers :)

>there is “Has roots in UNIX” such as … MacOS

Perhaps you didn’t know, but OSX is actually certified UNIX

https://www.opengroup.org/openbrand/register/

OK, no, I didn’t. So it’s BSD gone full circle.

I mean, BSD is fully POSIX compliant and always has been. The only thing it needs to be UNIX certified is a company to pay for certification, but they always do that under their own branding.

Ex: SunOS (from Sun Microsystems) was derived from 4.2BSD (which included some AT&T code and thus required a UNIX license, but was primarily Berkeley source code/copyrights). Compared to SunOS, MacOS is hardly BSD.

Other interesting examples of this are EulerOS and K-UX, which are both Red Hat derivatives but certified as UNIX 03 compliant. These days, “a commercial UNIX” is an OS that conforms to at least one version of the Single UNIX Specification and has paid for certification. So we have at least one example (MacOS) that is BSD-derived and chose to pay for this route, and examples (EulerOS and K-UX) that are Linux-based and chose to pay for this route.

So the divisions are more like a 2×2 matrix of “blood relatives” vs “close friends” on one side, and “paid certification” vs “mostly conformant but not certified” on the other. Traditionally we’d call blood relatives + paid certification “Commercial UNIX”, blood relatives + not certified (e.g. the BSDs) “UNIX-derived”, and close friends + not certified (e.g. Linux) “UNIX-alike”. But we also have a fourth grouping of “close friends + paid certification”, ranging from z/OS (+USS) to EulerOS.

So where I’m going with this: MacOS and EulerOS would have been called UNIX-derived and UNIX-alike respectively, but have slipped into neighbouring categories via paid certification.

DG/UX :-)

https://en.wikipedia.org/wiki/DG/UX

From my alma mater, Data General (RIP)

The SCO attack on Linux was, in fact, significant – it was more than a “bump”. For several years, the future of Linux was in doubt. An employer made me stop doing ATE and production control stuff on Linux boxen. Some hardware companies ‘paused’ their driver development. But the worst of all was when the corrupting M$ marketers convinced several Cal State University IT managers that there was no legal future possible for Linux, and the public schools subsequently lost hundreds of millions of US $$ to the evil empire. Bill Gates’s facade of philanthropy will never be able to repay, rescind, and repair the damage done to American industry innovation and public school health. He may be a brilliant marketer and competent industrialist, but he and his ilk are a dark smear on the progress of humanity.

After several years of using Red Hat’s stuff (à la Sievers and Poettering), I am again uncertain of the defining characteristics of Unix and/or Linux.

And for what it is worth, the Unix that we used on the PDP11 was a mess. But Slackware eventually emerged to save my computational soul.

My epiphany came when I did a dual install (two hard drives) of Linux and Win 3.1 on a 486 box at work. I wanted to use Linux as an X client on our Unix system, so I could run a schematic capture tool that wouldn’t crash when I opened up a second page (thanks, QEMM). (It was UNIX Viewlogic vs. the Windows version.)

To say the performance difference was significant would be an understatement. And the reliability was a welcome change as well.

Then, the boss came around and asked what I was doing. He was unhappy to learn that I was running Linux on his system (I had bought my own HDD), and instructed me to stop. Luckily, he left shortly thereafter.

I loved Viewlogic; great UI design.

Of course, today it is very simple with Xming and SSH X11 tunnelling through PuTTY.

Windows 10 includes openssh client and (optionally) server, so you don’t even need PuTTY.

I just installed Windows Subsystem for Linux on Win 10, and I can access it directly with Linux. If you work for a Windows-only person, you could say it’s just an app that you downloaded from the Microsoft Store…

It’s a compromise though if you are used to a real Linux.

“corrupting M$ marketers convinced several Cal State University school IT managers that there was no legal future possible for Linux, and the public schools subsequently lost hundreds of millions of US $$ to the evil empire.”

Linux wasn’t really up to the task of supporting a massive deployment across the CA public school system at the time. There was no capable alternative to Active Directory in the Linux world then, and the few commercial tools that were available cost more than the school software licenses from Microsoft.

A Microsoft software assurance license costs about $20-40/yr based on volume, and entitles user to the latest OS, Office, and any needed server CALs.

A free OS is fine, but the OS alone isn’t the major cost in computing. CalState likely wouldn’t have chosen Linux over Windows – they would have rolled any imagined savings in OS license fees, and more, into programmers’ salaries to develop the missing tools needed to properly manage their computing environment.

Too bad UNIX didn’t have any history of working in the corporate world.

But I will say one thing, Microsoft’s poor reliability at the OS level and in the application server space brought us PC virtualization in quick time. This same feature is the one thing which allowed lots of Windows shops to explore Linux and bring up services on Linux inside corporate IT departments at zero to little costs and see how well it ran. Having Microsoft Windows as the host OS often didn’t help the Linux VM’s reliability but cheap discarded boxes quickly got re-purposed to fix that problem. And then Amazon cloud happened.

“There was no capable alternative to Active Directory in the Linux world at the time”

I’m guessing you meant to say Group Policy instead.

Active Directory was a direct (ha) copy of LDAP from Unix. Today, however, AD serves as a mostly fully compatible LDAP directory service.

The thing MS was cheered for was Group Policy, which for a very long time was unmatched by anything. Arguably even today they may still have that claim in the desktop world.

It took over a decade for Linux to get anything similar, and MS was only recently surpassed due to the mobile trend (Apple is the mobile GP king by far).

Not to mention [Kerberos](https://en.wikipedia.org/wiki/Kerberos_(protocol)), which has existed since the 1980s and really is the actual core of how Active Directory works (typical MSFT embrace, extend… etc., but it’s BSD-licensed). And it certainly has groups, SPNs, domains, etc.

Don’t believe me? Open up a cmd.exe and type ‘klist’.

That’s the standard Kerberos command to list your kerberos tickets. Kerberos List.

If you aren’t on a domain, it’ll just say something like ‘Cached tickets (0)’, but if you are on a domain-joined PC it’ll say you have at least a ‘TGT’, or ‘Ticket Granting Ticket’.

LDAP is the ‘lightweight’ form of DAP, which is to do with accessing that directory, which requires the computer doing the accessing to be authenticated to the domain controller… which is to say, to have a ‘Computer’ object account and its ‘keytab’ file for that account (another form of password, essentially).

If you have friendly && Unix-competent IT staff at work (lucky you), you can have them give your Linux machine a domain ‘Computer’ account and then extract and give you its Kerberos keytab file, and then you can configure your machine to respect domain SSO via Active Directory.

Just don’t forget that whilst AD is used for both authentication (proving you are you with a signin) and authorisation (controlling who has access to what) you can use it for just the first if you want, and use a whitelist to let just certain accounts in — without your machine ever actually seeing their password in any way.

No need to give your IT people the keys to root on your machine, but it is useful if you want to host services to less technically literate co-workers.

Set your service up right, and they’ll never need to see a logon page, or be persuaded to pick a special unique password for another special account just for your not-central-IT-controlled service.

But even with no help or support from IT, you can still use Kerberos as a client on your Linux machine: this will let you sign in and make your own ‘TGT’ locally even if your machine’s attempts to talk to the KDC are getting shunned. Just install the relevant utils package for your distro, edit /etc/krb5.conf to set your default ‘domain’ to match what your IT staff named it, and run `kinit` to ‘sign in’.

After which you can do things like connect to Windows shares using Kerberos, e.g. `smbclient -k //server/share`, or even have them mount from an /etc/fstab entry without bad behaviour should your ticket expire.
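For anyone wanting to try the client-only route just described, a minimal /etc/krb5.conf might look something like the sketch below. It is an illustration under assumptions, not a drop-in file: it assumes a hypothetical AD domain named example.com, whose Kerberos realm is conventionally the DNS domain name in upper case (EXAMPLE.COM), and it assumes the domain controllers, which act as the KDCs, can be found through the DNS SRV records AD normally publishes. Substitute whatever your IT staff actually named things.

```ini
# /etc/krb5.conf: minimal client-only sketch.
# EXAMPLE.COM / example.com are hypothetical placeholders.
[libdefaults]
    # The "default domain" mentioned above: the AD domain name, upper-cased
    default_realm = EXAMPLE.COM
    # Locate KDCs (the domain controllers) via DNS SRV records
    # instead of listing them by hand
    dns_lookup_kdc = true

[domain_realm]
    # Map hosts under example.com to the realm
    .example.com = EXAMPLE.COM
    example.com = EXAMPLE.COM
```

With that in place, running `kinit alice@EXAMPLE.COM` (alice being a placeholder account name) should prompt for the domain password and obtain a TGT, and `klist` should then list it, much as the Windows `klist` described above does on a domain-joined PC.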

But if you want your local machine sign-in to work like SSO, you have no choice but to ‘fully’ connect your PC to the domain, including getting IT to run that horrible ‘ktpass.exe’ invocation to extract the krb5.keytab file for your machine… which they’re likely to refuse to do ‘for security reasons’, but really only because they’re ignorant of how their own authentication system works.

I remember it well, I was there reading the blow-by-blow accounts daily. And yes, it was very serious business at the time. But 20 years later? It didn’t change the course of the river, did it.

Only, I submit, because tSCOg ran out of money to pay the lawyers. And even after that, it dribbled on for a while. Microsoft was suspected of paying the bills. It was a blatant scam, with a huge payoff, but not as simple as they thought it would be.

IIRC they went all legal and burned their cash, and then didn’t Novell say “Hang on, we own the rights, not you!”? Trying to remember off the cuff.

I don’t know how accurate it is, but wikipedia has a longish entry about this under “SCO–Linux disputes”.

The Groklaw archives (http://www.groklaw.net/) are still there, and have a lot of details on the SCO shenanigans.

They were not “suspected of paying the bills”, they were found out to have been doing it through a Canadian bank.

I did not know that had been discovered. It was a widely held belief, but no proof, when I was following it. Glad someone came up with the proof.

Of course, Microsoft now *embraces* Linux…

I think MS is evil for putting Lotus out of business. Well, technically Lotus was bought out by IBM. I still use Lotus Approach every single work day. It’s hopelessly obsolete but while I am interested in coding and electronics and all manner of things I do not know how to gin up a real working database frontend. Lotus Approach still works great for everything I need it to do but had it had continuous development since 1997 it could be something really great.

By the way Lotus was the first large company to recognize same sex domestic partners in a significant way. Great company.

I lived in a historic town in Kansas which had streets laid out in the 1870s when it was Indian Land. My house still has the concrete hitching posts (for city folks, that’s for horses) out by the curb and converted gas light fixtures. The sewers and water lines were laid out in the 1880s. The streets were bricked in the 1910s and ’20s, and some are still used with the original brick surfaces. It’s amazing to me how these things are even slightly functional today, with Teslas running around and the old easements originally set in for gas and city steam now used for fiber to the house. Shannon came up (in the late 1940s) with the signal-to-noise relationship we use for modern telecom and data circuits. Clarke came up with the location for all current geostationary satellites in the 40s. I have the same fascination with UNIX. Today’s version of Linux is really derivative from SR4V5 and much different from the basic UNIX of the 70s. But just as it’s evolved, it seems to have solid roots and a good future. Tomorrow may yield a genius or hardware that obsoletes it, but so far it looks like it will be with us for a while. Keep in mind that Ritchie also wrote C, which is what Windows was written in.

Didn’t Microsoft subsequently produce their own incompatible C and C++ compilers? Bill Gates and Steve Ballmer didn’t want Windows developers being able to work with other operating systems, and they went out of their way to control developers, developers, developers. To this day I still question their motivations when I see Microsoft join the Linux steering committees and, recently, the Java steering committee.

If one’s ever “steered” a committee, you’ll know how that works out.

And IBM had their programmers writing in Rex, which was less marketable outside their organization.

And you mean REXX not someone’s name.

Rexx is a scripting language.. not really comparable to C/C++. It is more comparable to Python. We used REXX on OS/2 through the 90s. Rexx was a nice language to use.

We were looking at moving to Windows and/or Linux. I was asked to evaluate IBM’s Object REXX. Its performance was terrible. Scripts I had written to generate test import files, which took a few minutes on classic OS/2, took hours and eventually failed after consuming all the RAM and swap file.

We eventually chose Python.

There is only one compiler that is relevant. That’s the one that the chip manufacturer supplies when they issue a microprocessor. Yeah, I get really grumpy about Microsoft trying to lock down, rebrand and corrupt standards and processes to force incompatibility and obsolescence (read: user has to spend more money).

Even within their own products. Why in the world would a VBA for Excel need to be forward incompatible from one Excel version to the next because of how a file is dimensioned upon opening? I think that one was deliberate to force old VBA scripts to crash and be rewritten.

ARM has their own C compiler and they also manage the ARM GCC port, so which of these is the relevant one?

The one supplied by the chip manufacturer with the processor because that is what you use to port (cross compile) anything (even compilers and operating systems) to that processor.

If you’ve ever had to pick up someone’s mess of Excel VBA (or even your own a few years later), you’d be glad that VBA scripts get broken every few releases, or they’d grow into even more horrific monstrosities.

Every time MS breaks some VBA, there’s a chance for someone to say “shouldn’t this be in a proper relational database?”

I was using excel as an offline terminal and dashboard and shelled out of excel to an executable to do real math. It was distributed to a few hundred computers globally within the company and worked 100% for a year or so. I had to issue 2 versions of the program depending on which version of excel you used. If I remember right it was something like an additional comma in the open file argument (to save the results). Totally stupid. Completely crashed on new version excel boxes. I actually did know better than to use VBA for anything serious and won’t make the mistake again.

Oh, and plug for the Kernighan book…an excellent read.

https://www.amazon.com/gp/product/1695978552

+1. Reading it (slowly) right now, savouring every word and picture.

Another +1, I’m about halfway through it now. Bell Labs must have been such a great place to be.

Fun – that artwork at the top is based directly on my PDP-11/70 re-animation!

https://saccade.com/writing/projects/PDP11/PDP-11.html

I can’t speak for Joe, sadly.

But wow, that’s a very cool project!

And that is the first thing I noticed. Glances over at his own OP PDP11/70 blinking at me :-)

It’s worth noting that at the time when Caldera were stirring up trouble, they owned SCO. The purchase took place in 2001.

SCO at that time had interbred with Microsoft Xenix… and OpenServer 5 very much had a Microsoft-feel to it. It was the only OS I’ve ever used that required you to re-link the kernel and reboot to change IP addresses.

http://www.longlandclan.id.au/~stuartl/images_tmp/sco-fullsize.png

I remember that tape… the first step of porting V6 was to write a version of tar (in Fortran) so we could unpack it.

Please, please put this history in writing — if you haven’t already? It’s computing at a whole different level compared to what we know today.

It’s not really. I know a lot of folks writing fairly deep executables today and a lot of degreed engineers who can’t calculate heat load or pour volume unless there is an app for it.

That’s why Linux is for computers and Windows is for terminals, word processing and entertainment. There is a definite split between programming and “programming”.

I know what you mean. I used to work for a company that manufactured cooling products. I wrote a heat load calculator for the sales team because they were an embarrassment at sizing the proper cooler for the heat load.

https://en.wikipedia.org/wiki/Amdahl_UTS used in house and by many Amdahl Mainframe customers including AT&T.

I wrote and ran programs under UTS at Amdahl. I converted them from EBCDIC to ASCII, wrote them out to floppy disk so I could work on them at home under MINIX and at work again converting them back to EBCDIC to continue development.

Will the original Unix stand up? That’s kind of like asking what the real (original) life form is today.

How about A/UX? That was an odd version of Macintosh System 7.1 that could run UNIX programs on a few models of 68K CPU Macintosh computers. Apple didn’t keep going with that in the 7.5 and later Mac OS versions – only to cannonball into the *NIX pool years later by basing OS X on BSD.

I think you get to call something Unix if it passes the Unix certification tests to use the Unix trademark.

By that measure, Apple is the world’s leading Unix distributor.

You also have to pay a fee, I recall.

Well, I didn’t actually get to UNIX OSes until about 1981, when AT&T needed a tech in Alaska and gave me a jillion tons of free training so I would be able to support their 3B2 systems. I LOVED it! My favorite version is still SVR3.2. Later on I sold thousands of desktop systems running “ESIX” to NASA here in Texas. Other vendors over time were Kodak and SCO. The code was licensed to a lot of people at one time. Cromemco had their own version called “Cromix”. I still have a complete “Xenix” distro for the 386. At that time all the versions came with an incredible amount of documentation which could teach you anything. (I keep it as a reminder of what high standards are possible when a manufacturer really cares to pay attention. In this case, the manufacturer was not Microsoft but AT&T; MS just reproduced the docs and included them with their package.)

What I see missing from today’s OSes is the philosophy behind UNIX development. I loved the idea that every little unit did only one thing and that every little unit could be processed as a file. If I live long enough, maybe I’ll figure out an anti-complexity proof to determine whether this is actually the best way to design an OS.

“…the philosophy behind UNIX development.”

Exactly right. It irks me that people are still creating monoliths. Including monolith dynamic libraries. Why you would create a dynamic load library that had everything except the kitchen sink, is lost on me.

I guess it is unprofitable to develop non-monoliths…

With regard to Microsoft, they have fought against web standards, against Java with their own version, against Flash with their release of Silverlight, and used VB on websites instead of JavaScript, making harmful scripts easy to run just by accessing a site, etc. Thank God they don’t have that power anymore… And yes, I appreciate Bill Gates giving billions away for good causes, but I don’t think it can ever make up for the delay and impact to open source software development and open standards…

You forgot to mention their *gag* Internet Explorer browser which tried to defy standards, even their own, and needed special html code for each version just to run a webpage on it. Good thing all that is over with!

Solaris was open sourced. In 2005.

As an inhabitant of what used to be called “The Bell System” for 42 years (Southern Bell, BellSouth, AT&T), I can attest to the heavy internal use of unix (the name was originally all lower case to contrast it with MULTICS) in many of its various flavors. That included mundane desktop tasks on Fortune systems, back-office support tasks on PDP-11-based systems like SCCS and RMAS used to monitor the performance of telephone switching systems and update their databases, and the operation of the switches themselves after the advent of the #5ESS switch.

Not too shabby for the result of a couple of guys tinkering with a cast-off computer system looking for a way to do text processing.

I’m surprised the comments got this far without one mention of the POSIX standard.

I said POSIX…. 😎

Those couple of guys all happened to have PhDs, but your point is still (maybe even more) valid.

Put a bunch of wicked smart people in a room with nothing to do and you’ll be amazed what comes out.

(Talk about the ideal job…)

People can still get Unix on a tape. For the price of blank media and postage, I’ll send anyone a DDS-2 or an LTO-4 tape with any architecture you like, in the case of the DDS-2, or every architecture, in the case of LTO-4, of NetBSD.

The DDS-2 tape will even boot on architectures that support booting off of tape :)

As confusing as this topic is, the above is slightly incorrect. macOS uses FreeBSD kernel code, but is not BSD. It’s actually a certified UNIX and POSIX OS and belongs in the “real” UNIX family you mentioned. Small reminder that the BSD license allows code reuse for basically any purpose, but that BSD is an operating system and not a kernel. As such, OSes that reuse BSD code are not necessarily BSD unless they also implement the filesystem and binary compatibility, which macOS doesn’t.

I wrote a guide to how Linux, BSD, UNIX, and macOS relate to each other here: https://github.com/jdrch/Hardware/wiki/How-Linux,-BSD,-UNIX,-and-macOS-relate-to-each-other

Thanks, I’ll eventually read it.

And if you boot MacOS in terminal mode, you can see the boot sequence in all its glory (including the famous reference to the Regents.)


Off topic a bit, but in the 90’s the hot ticket was Novell Netware so Windows machines could share drives.

I was working for a Bond company who had the clerks all working in Netware, and then Sun386 Unix servers running a homebrew database we wrote (non-SQL). The killer solution was Beame & Whiteside TCP/IP and NFS for DOS.

But people in NYC on the trading floor could peruse the database using a DOS box over the IP network, We even had DOS boxes locally to do only one thing. They sat and waited for a file to appear on an NFS share, crunched the data, and dropped it where another DOS box saw it, etc, etc. Networking made it all possible. I think there were 10 DOS boxes and only 2 Sun386 servers. But we upgraded those servers every time the clock speeds increased, or new SCSI cards came out.

Anyway, the Unix was always just a big database box, with scripts and programs to prepare/process the data being saved.

No one ever logged-in to it except programmers and system administrators. The unwashed were on Windows.

POSIX

I think I can speak for everyone that I am very glad that Linux eventually did get support for things other than “AT harddisks” (presumably he meant IDE/ATA).