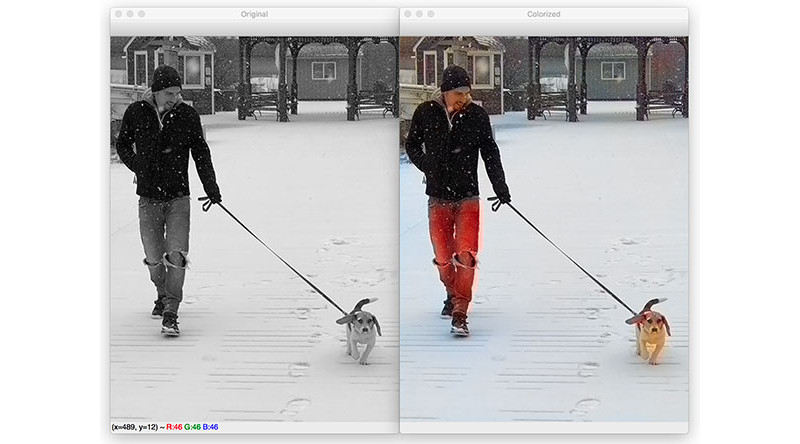

The world was never black and white – we simply lacked the technology to capture it in full color. Many have experimented with techniques for colorizing black and white images. [Adrian Rosebrock] decided to put an AI on the job, with impressive results.

The method involves training a Convolutional Neural Network (CNN) on a large batch of photos that have been converted to the Lab colorspace. In this colorspace, images are made up of three channels – lightness (L), a (red-green), and b (blue-yellow). Lab is used because it corresponds more closely to the human visual system than RGB does, and because it separates brightness from color: a black and white photo is effectively the L channel on its own. The model is trained so that, given a lightness channel as input, it predicts the likely a and b channels. These are then recombined with the original L channel into a colorized image and converted back to RGB for human consumption.
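To make the Lab round trip concrete, here is a minimal single-pixel sketch in plain Python. It is not the code from the project: the conversion uses the standard sRGB/D65 formulas, and `predict_ab` is a hypothetical stand-in for the trained CNN, which in reality works on whole images rather than one pixel at a time.

```python
# Minimal sketch of the L -> (a, b) -> RGB pipeline for a single pixel.
# Assumptions: sRGB input, D65 white point. `predict_ab` is hypothetical
# and stands in for the trained network.

WHITE = (0.95047, 1.0, 1.08883)  # D65 reference white in XYZ
DELTA = 6 / 29


def _srgb_to_linear(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _linear_to_srgb(c):
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055


def _f(t):
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29


def _f_inv(t):
    return t ** 3 if t > DELTA else 3 * DELTA ** 2 * (t - 4 / 29)


def rgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to (L, a, b)."""
    r, g, b = (_srgb_to_linear(v / 255) for v in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def lab_to_rgb(lab):
    """Convert (L, a, b) back to an 8-bit sRGB triple, clamping to range."""
    L, a, b = lab
    fy = (L + 16) / 116
    fx, fz = fy + a / 500, fy - b / 200
    x, y, z = (_f_inv(f) * w for f, w in zip((fx, fy, fz), WHITE))
    r = 3.2404542 * x - 1.5371385 * y - 0.4985314 * z
    g = -0.9692660 * x + 1.8760108 * y + 0.0415560 * z
    bl = 0.0556434 * x - 0.2040259 * y + 1.0572252 * z
    return tuple(
        round(255 * min(1.0, max(0.0, _linear_to_srgb(v)))) for v in (r, g, bl)
    )


def colorize_pixel(gray_value, predict_ab):
    """Keep the lightness of a gray pixel and attach predicted a/b values."""
    L, _, _ = rgb_to_lab((gray_value,) * 3)
    a, b = predict_ab(L)  # hypothetical model call
    return lab_to_rgb((L, a, b))


if __name__ == "__main__":
    print(rgb_to_lab((255, 0, 0)))                    # red has a positive a
    print(colorize_pixel(128, lambda L: (0.0, 0.0)))  # a = b = 0 stays gray
```

Only the a and b values come from the model. The lightness channel passes through untouched, which is why the colorized result keeps the detail and contrast of the original photo.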

It’s a technique capable of doing a decent job on a wide variety of material. Things such as grass, countryside, and ocean are handled particularly well; however, more complicated scenes can suffer from some aberration. Regardless, it’s a useful technique, and far less tedious than manual methods.

CNNs are doing other great things too, from naming tomatoes to helping out with home automation. Video after the break.


Any videos available that have more detail or give a bit more information?

This project is just using Zhang et al.’s algorithm from 2016. Zhang has a website where you can get a lot more information about the algorithm, as well as a Reddit bot that will colorize any image you give it: https://richzhang.github.io/colorization/

Good link. Less than impressive results when running the demo with historical B&W images.

AI? Nah, you just need this smart film, which, like any 5th grader, knows that the sky is blue, the grass is green and people are in the middle… https://trulyskrumptious.wordpress.com/2012/11/29/instant-color-tv-screen/

Looking at the example image above, I have to ask:

1) I’ve never seen red jeans for sale anywhere, and who wears bright red jeans? Is red the most likely color of jeans? Did the input data have an overwhelming number of red-jeans wearers?

2) I’ve never seen a beagle with yellow/orange chest, only a white chest. Is yellow/orange the most likely color of the dog’s chest?

3) The yellow/orange dog casts a yellow/orange shadow?

4) The helicopter in Jurassic Park is blue and white, not yellow and red. https://youtu.be/ghKy8FRhs28?t=23

5) The algorithm miscolors the pink shirt and red-ish head of the pilot.

Basically, the algorithm makes everything of high luminance (whites) yellow, and low luminance (darks) red. I don’t see this as anything but an algorithm that doesn’t work.

“1) I’ve never seen red jeans for sale anywhere…” You’re just too young.

We owe Ted Turner a big thanks. ;-)

The world didn’t turn color until sometime in the 1930s, and it was pretty grainy color for a while, too.

https://www.gocomics.com/calvinandhobbes/2014/11/09

Blue snow, yellow dogs and red jeans, perfect!

I wonder if the difference between the historic B&W pictures and a color picture that’s been de-colorized is causing problems. It’s usually pretty obvious when looking at a picture whether it’s a true B&W or just run through a digital filter. I assume the old B&W film reacted to certain wavelengths more strongly, causing that difference.

B&W film, old or new, responds to some wavelengths more strongly than others. The response depends on the type of film, and using coloured filters is common, e.g. to darken the sky and reduce overall contrast, or to bring out the clouds, or to emphasise foliage, etc.

Any photographer working in B&W with a digital camera will be making the same choice today, just in post-production; software like Lightroom allows you to alter the response to different wavelengths when you do the conversion.

The only B&W photos with a ‘flat’ wavelength response are those made by a simple desaturation to luminance. This isn’t commonly done by photographers, but is done by programmers who don’t realise the nuances of B&W photos. It doesn’t usually look as nice (largely because no thought has gone into it).

However, your ability to spot old photos is probably based more on the grain, contrast, and other factors like the subject.
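The difference between a flat desaturation and a filtered conversion can be sketched in a few lines of Python. The flat weights are the standard Rec. 709 luma coefficients; the red-filter weights are made up purely for illustration and do not model any real film or filter. Applying the weights directly to gamma-encoded 8-bit values is also a simplification.

```python
# Two ways to turn one RGB pixel into gray.
# FLAT uses the Rec. 709 luma coefficients ("simple desaturation").
# RED_FILTER is a hypothetical channel mix, chosen only to illustrate
# how a red filter darkens blue sky; it is not a measured response.

FLAT = (0.2126, 0.7152, 0.0722)
RED_FILTER = (0.9, 0.1, 0.0)  # illustrative assumption


def to_gray(rgb, weights):
    """Weighted average of the R, G, B values, clamped to 0..255."""
    total = sum(weights)
    value = sum(c * w for c, w in zip(rgb, weights)) / total
    return round(min(255, max(0, value)))


if __name__ == "__main__":
    sky = (90, 140, 220)    # a made-up blue sky pixel
    cloud = (235, 235, 240)  # a made-up near-white cloud pixel
    for name, weights in (("flat", FLAT), ("red filter", RED_FILTER)):
        print(name, to_gray(sky, weights), to_gray(cloud, weights))
```

With the red-weighted mix the sky pixel comes out darker while the cloud barely changes, so the two are further apart in the final image. A colorization model trained only on flat desaturations never sees that kind of input.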

I really love the work of Jason Antic and his “DeOldify” deep-learning-based approach: https://github.com/jantic/DeOldify

The examples are amazing: https://github.com/jantic/DeOldify#example-images

Or just go back to true color photography. Not exactly cutting-edge.