Stephen Wolfram, inventor of the Wolfram computational language and the Mathematica software, announced that he may have found a path to the holy grail of physics: A fundamental theory of everything. Even with the subjunctive, this is certainly a powerful statement that should be met with some skepticism.

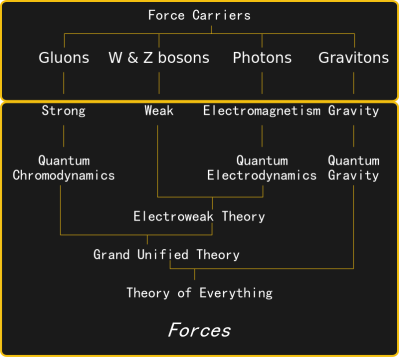

What is considered a fundamental theory of physics? In our current understanding, there are four fundamental forces in nature: the electromagnetic force, the weak force, the strong force, and gravity. Their description is currently split between two frameworks. General Relativity (GR) describes gravity, which dominates physics on astronomical scales. Quantum Field Theory (QFT) describes the other three forces and explains all of particle physics.

The Answer To The Ultimate Question Of Life, The Universe, And Everything

Apart from the incompatibility of QFT and GR, there are still several unsolved problems in particle physics, such as the nature of dark matter and dark energy or the origin of neutrino masses. While these phenomena tell us that the current Standard Model of particle physics is incomplete, they might still be explainable within the existing frameworks of QFT and GR. Of course, a fundamental theory also has to come up with a natural explanation for these outstanding issues.

A Controversial Kind Of Science

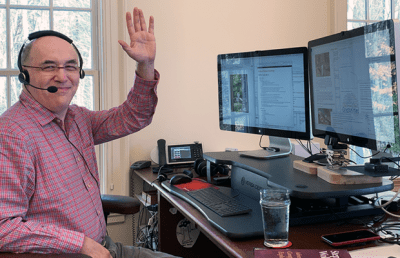

Stephen Wolfram is best known for his work in computer science, but he actually started his career in physics. He received his PhD in theoretical particle physics at the age of 20 and was the youngest person in history to receive the prestigious MacArthur grant. However, he soon left physics to pursue his research into cellular automata, which led to the development of the Wolfram code. After founding his company Wolfram Research, he continued to develop the Wolfram computational language, which is the basis for the Wolfram Mathematica software. On the one hand, Wolfram is obviously a very gifted man; on the other hand, people have sometimes criticized him for being an egomaniac, as his brand naming convention subtly suggests.

In 2002, Stephen Wolfram published his 1,200-page mammoth book A New Kind of Science, in which he applied his research on cellular automata to physics. The main thesis of the book is that simple programs, in particular the Rule 110 cellular automaton, can generate very complex behavior through repeated application of a simple rule. It further claims that such systems can describe all of the physical world and that the Universe itself is computational. The book received mixed reviews: while some found that it contains a cornucopia of ideas, others criticized it as arrogant and overstated. Among the most famous critics were Ray Kurzweil and Nobel laureate Steven Weinberg. It was the latter who wrote:
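The "simple rule, applied over and over" idea is easy to try for yourself. Below is a minimal Python sketch of an elementary cellular automaton (one row of cells, two states, nearest neighbours only) where the rule is given by its Wolfram code: a number from 0 to 255 whose bits list the next state for each of the eight possible three-cell neighbourhoods. The fixed zero boundary and the single live starting cell are assumptions made here for illustration.

```python
def rule_table(rule_number):
    """Map each (left, centre, right) neighbourhood to its next state.

    Bit k of the Wolfram code is the output for the neighbourhood whose
    three cells, read as a binary number, equal k.
    """
    return {
        ((k >> 2) & 1, (k >> 1) & 1, k & 1): (rule_number >> k) & 1
        for k in range(8)
    }


def step(cells, table):
    """Apply the rule once to every cell; cells beyond the row count as 0."""
    padded = [0] + cells + [0]
    return [
        table[(padded[i - 1], padded[i], padded[i + 1])]
        for i in range(1, len(padded) - 1)
    ]


def run(rule_number, width, steps):
    """Evolve a row with a single live cell at its right edge."""
    table = rule_table(rule_number)
    cells = [0] * (width - 1) + [1]
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, table)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    for row in run(110, width=40, steps=20):
        print("".join("#" if c else "." for c in row))
```

Changing the single argument `110` to other rule numbers (30, 90, 184, ...) is enough to see the whole zoo of behaviours that the book catalogues.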

Wolfram […] can’t resist trying to apply his experience with digital computer programs to the laws of nature. […] he concludes that the universe itself would then be an automaton, like a giant computer. It’s possible, but I can’t see any motivation for these speculations, except that this is the sort of system that Wolfram and others have become used to in their work on computers. So might a carpenter, looking at the moon, suppose that it is made of wood.

From Graphs To Hypergraphs

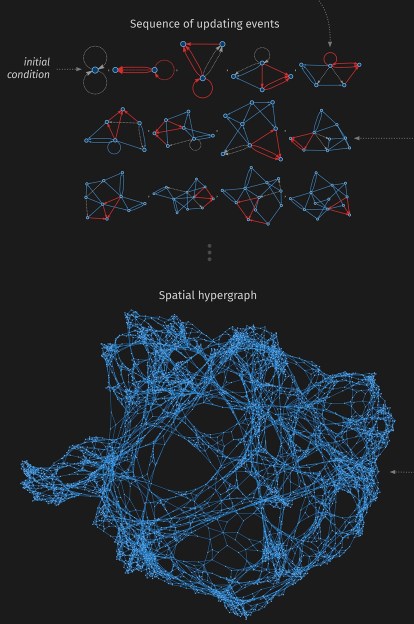

The Wolfram Physics Project is a continuation of the ideas formulated in A New Kind of Science and was born out of a collaboration with two young physicists who attended Wolfram’s summer school. The main idea has not changed: the Universe in all its complexity can be described by a computer algorithm that works by iteratively applying a simple rule. Wolfram recognizes that cellular automata may have been too simple to produce this kind of complexity; instead, he now focuses on hypergraphs.

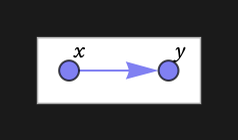

In mathematics, a graph consists of a set of elements that are related in pairs. When the order of the elements in each pair is taken into account, it is called a directed graph. The simplest example of a (directed) graph can be represented as a diagram, and one can then apply a rule to this graph as follows:

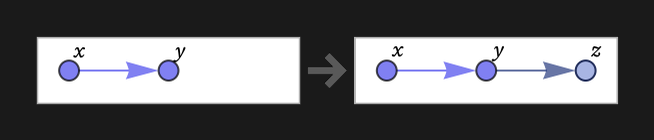

The rule states that wherever a relation matching {x,y} appears, it should be replaced by {{x,y},{y,z}}, where z is a new element. For example, applying the rule once to the single relation {1,2} yields {{1,2},{2,3}}.

By applying this rule iteratively, one ends up with more and more complicated graphs. One can also add complexity by allowing self-loops, rules involving copies of the same relation, or rules depending on multiple relations. When relations between more than two elements are allowed, graphs become hypergraphs.
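The rule {x,y} → {{x,y},{y,z}} is small enough to run by hand, but a few lines of Python make the iteration explicit. This is a minimal sketch, not the project's own tooling. It assumes that in each step every relation is rewritten once. For this particular rule, every relation is its own non-overlapping match, so the order of rewriting does not matter. For rules whose left-hand side involves several relations, the choice of updating order becomes a real issue, and this sketch does not handle it.

```python
from itertools import count
from typing import Iterator, List, Tuple

Relation = Tuple[int, int]


def apply_rule(relations: List[Relation], fresh: Iterator[int]) -> List[Relation]:
    """Rewrite every relation {x, y} as {x, y}, {y, z}, where z is a brand-new node."""
    result: List[Relation] = []
    for x, y in relations:
        z = next(fresh)
        result.extend([(x, y), (y, z)])
    return result


def evolve(initial: List[Relation], steps: int) -> List[List[Relation]]:
    """Return the initial state followed by the state after each update."""
    # New node labels continue from the largest label already in use.
    fresh = count(max(node for rel in initial for node in rel) + 1)
    states = [list(initial)]
    for _ in range(steps):
        states.append(apply_rule(states[-1], fresh))
    return states


if __name__ == "__main__":
    for t, state in enumerate(evolve([(1, 2)], 3)):
        print(t, state)
```

Each update doubles the number of relations, so the state after step t has 2^t relations. Rules with hyperedges (relations of three or more elements) can use the same representation with longer tuples.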

How is this related to physics? Wolfram surmises that the Universe can be represented by an evolving hypergraph where a position in space is defined by a node and time basically corresponds to the progressive updates. This introduces new physical concepts, e.g. that space and time are discrete, rather than continuous. In this model, the quest for a fundamental theory corresponds to finding the right initial condition and underlying rule. Wolfram and his colleagues think they have already identified the right class of rules and constructed models that reproduce some basic principles of general relativity and quantum mechanics.
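If positions are nodes, the natural notion of distance is the number of edges on the shortest path between two nodes. A notion of dimension can then be read off from how fast the number of nodes within distance r grows: roughly as r^d in d dimensions, so d ≈ log2(V(2r)/V(r)). The sketch below checks this estimate on an ordinary square grid and on a line. Both are stand-ins chosen because the answer is known; they are not hypergraphs produced by any of Wolfram's rules.

```python
from collections import deque
from math import log


def grid_graph(width, height):
    """Adjacency lists of a width x height square grid (4 neighbours per site)."""
    adj = {}
    for x in range(width):
        for y in range(height):
            nbrs = []
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    nbrs.append((nx, ny))
            adj[(x, y)] = nbrs
    return adj


def bfs_distances(adj, source):
    """Graph distance (number of edges) from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    return dist


def estimate_dimension(adj, source, r):
    """Estimate d from V(2r) / V(r) = 2^d, where V(r) counts nodes within distance r."""
    dist = bfs_distances(adj, source)
    volume = lambda radius: sum(1 for d in dist.values() if d <= radius)
    return log(volume(2 * r) / volume(r)) / log(2)


if __name__ == "__main__":
    print(estimate_dimension(grid_graph(51, 51), (25, 25), 10))  # square grid
    print(estimate_dimension(grid_graph(81, 1), (40, 0), 10))    # a line
```

On the 51×51 grid the estimate comes out at about 1.93, and on the line at just under 1. The estimates approach the exact values as r grows.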

Computational Irreducibility And Other Problems

A fundamental problem of the model is what Wolfram calls computational irreducibility, meaning that to calculate any state of the hypergraph one has to go through all iterations starting from the initial condition. This would make it virtually impossible to run the computation long enough in order to test a model by comparing it to our current physical Universe.
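Computational irreducibility is easiest to appreciate by contrast with a system that *is* reducible. Rule 90, another elementary cellular automaton in which each cell becomes the XOR of its two neighbours, produces Pascal's triangle modulo 2 when started from a single live cell. Its state at step t can therefore be computed directly from a binomial coefficient, without running the previous steps. For Rule 30 or Rule 110, no such shortcut is known, and simulating every step is the only known method. That is the situation described above. The sketch below checks the Rule 90 shortcut against brute-force simulation. The function names are illustrative.

```python
from math import comb


def rule90_by_simulation(steps):
    """Run Rule 90 (new cell = left XOR right) from one live cell, step by step."""
    width = 2 * steps + 3  # wide enough that the pattern never reaches the edges
    centre = width // 2
    cells = [0] * width
    cells[centre] = 1
    for _ in range(steps):
        cells = [0] + [cells[i - 1] ^ cells[i + 1] for i in range(1, width - 1)] + [0]
    return cells, centre


def rule90_by_formula(t, offset):
    """Shortcut: after t steps the cell at `offset` from the seed equals
    C(t, (t + offset) / 2) mod 2, i.e. Pascal's triangle modulo 2."""
    if abs(offset) > t or (t + offset) % 2:
        return 0
    return comb(t, (t + offset) // 2) % 2


if __name__ == "__main__":
    t = 16
    cells, c = rule90_by_simulation(t)
    shortcut = [rule90_by_formula(t, k - c) for k in range(len(cells))]
    print(cells == shortcut)
```

The formula answers "what is cell k at step t?" in one evaluation. The simulation must run all t steps first, and for an irreducible rule that is the best anyone knows how to do.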

Wolfram thinks that some basic principles, e.g. the dimensionality of space, can be deduced from the rules themselves. He also points out that although the generated model universes can be tested against observations, the framework itself is not amenable to experimental falsification. It is generally true that fundamental physics has long since decoupled from the scientific method of postulating hypotheses based on experimental observations. String theory, too, has been criticized for not making any testable predictions. However, string theory historically developed from attempts to describe the strong nuclear force, whereas Wolfram does not give any motivation for choosing evolving hypergraphs for his framework. That said, some physicists are thinking in similar directions, such as Nobel laureate Gerard ’t Hooft, who has recently published a cellular automaton interpretation of quantum mechanics. In addition, Wolfram’s colleague Jonathan Gorard points out that their approach is a generalization of the spin networks used in Loop Quantum Gravity.

Where Will It All Lead?

On his website, Wolfram invites other people to participate in the project, although it is somewhat vague how this will work. In general, they need people to work out the potential observable predictions of their model and its relation to other fundamental theories. If you want to dive into the topic in depth, there is a 448-page technical introduction on the website, and they have also recently started a series of livestreams through which they plan to release 400 hours of video material.

Wolfram’s model certainly contains many valuable ideas and cannot be simply disregarded as crackpottery. Still, most mainstream physicists will probably be skeptical about the general idea of a discrete computational Universe. The fact that Wolfram tends to overstate his findings and publishes through his own media channels instead of going through peer-reviewed physics journals does not earn him any extra credibility.

I believe that some of the core ideas of “digital physics” are much older.

Konrad Zuse was probably the first who considered ideas along these lines – “Der Rechnende Raum” (“Calculating Space”):

https://en.wikipedia.org/wiki/Calculating_Space

AFAIK, he was able to derive the laws of Special Relativity with his approach, and later, Carl Adam Petri (-> Petri Nets) also did work in this direction. I don’t think they succeeded with Quantum Mechanics, but frameworks like

https://en.wikipedia.org/wiki/Causal_dynamical_triangulation

seem to be in a similar realm.

String theory is not a theory in the scientific sense. It is just mathematics without any falsifiable prediction. Wolfram’s theory seems to be the same. Entertaining, but not physics.

Agreed. He may very well come up with a powerful/useful predictive tool, but that is not the same as describing what the universe actually *is*.

That applies to all theories. You can only know how well they correlate with reality through experimentation; whether they are the same is an entirely different question!

… and we wondered where all the corrupt science and crackpots disappeared… I’m happy flat earthers will keep asking the really hard questions.

This is an area that has interested me for some time, and there are a number of fundamental questions to consider. The first is whether it is possible to describe how the universe actually is, or whether physics is just building models that predict the behavior of the universe to some level of precision. Connected to that is the question of whether the amount of information in the universe is bounded (finite). If it is, then it may be possible to have a discrete model that truly describes how the universe actually is. If not, discrete models may still be effective at prediction, but models based on the concept of continuous space may be more effective, making the Wolfram ideas a dead end.

I have some background in mathematics, and I look at the concept of infinity and its use in calculus applied to “continuous” space as a clever and effective mathematical tool with some significant limitations when applied to the real world (e.g. singularities leading to renormalization). The advantage of discrete models is that they can avoid such issues, and that makes it worth investigating them as models of the physical world.

Graphs are clearly a good starting point, but although I used to be a fan of cellular automata as a possible approach, I am concerned that they treat time separately from space, which makes it difficult to incorporate General Relativity in their models. Graph based models also seem to be conceptually compatible with string theory, where strings may be describable as subgraphs.

One issue with graph based models is how to represent dimensions, and so how connected each node can be to other nodes. One significant problem related to dimensions that I have been working on is that Pythagoras’s theorem doesn’t seem to work in discrete space. This may seem a trivial matter, but any model of space must, at a bare minimum, be able to represent basic geometry, and it is non-trivial to do that with discrete models. That is just the basics. At some point discrete models will have to cover wave functions with their probabilistic nature and the need for complex numbers, plus much more. I think discrete models deserve more attention, but there is a lot of work to be done if they are to compete with existing models.
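The Pythagoras problem mentioned above is easy to reproduce, and it is often called Weyl's tile argument. On a square lattice where distance means "number of steps along edges", sides of length 3 and 4 should give a diagonal of 5. The graph distance comes out as 7 with four neighbours per site and as 4 with eight neighbours per site. Making the lattice finer does not help, because both graph distances scale with the lattice spacing just as the Euclidean one does. The sketch below assumes a small finite lattice; its size is arbitrary.

```python
from collections import deque
from math import hypot

FOUR_NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]
EIGHT_NEIGHBOURS = FOUR_NEIGHBOURS + [(1, 1), (1, -1), (-1, 1), (-1, -1)]


def lattice_distance(start, goal, moves, size=20):
    """Fewest lattice steps from start to goal on a size x size grid (breadth-first search)."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        if (x, y) == goal:
            return dist[(x, y)]
        for dx, dy in moves:
            nxt = (x + dx, y + dy)
            if 0 <= nxt[0] < size and 0 <= nxt[1] < size and nxt not in dist:
                dist[nxt] = dist[(x, y)] + 1
                queue.append(nxt)
    return None  # goal not reachable inside the lattice


if __name__ == "__main__":
    print(hypot(3, 4))                                         # Euclidean: 5.0
    print(lattice_distance((0, 0), (3, 4), FOUR_NEIGHBOURS))   # 7
    print(lattice_distance((0, 0), (3, 4), EIGHT_NEIGHBOURS))  # 4
```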

Science IS building models that predict observations.

I agree, and I suspect that it is meaningless to expect an answer to life, the universe and everything (other than 42). There must be limits to human knowledge of a system of which we are a part, and a tiny part at that. I think the best we can do is just create ever more effective predictive models. To me, the interesting question is whether it is possible to have a discrete model that is self-consistent, comprehensive and which predicts better than the existing calculus based models. It may be almost accidental that physics uses the math that it does, and it has now become so entrenched that physicists can’t contemplate alternative approaches. It is at least worth a try applying discrete models to physics.

Kurt Gödel’s second incompleteness theorem says yes to this.

“… I am concerned that they treat time separately from space which makes it difficult to incorporate General Relativity in their models. Graph based models also seem to be conceptually compatible with string theory …”

In his recent book “Einstein’s Unfinished Revolution”, Lee Smolin argues from first principles that time must be considered fundamental and space emergent, and suggests that a conflict with General Relativity is avoided by mathematically substituting time with position in GR calculations.

Still reading the long article, but I have to comment that you need a guy called “Wolfram” to solve the _really_hard_ problems like this. It shows a lot of mettle too….

…and tungsten cheek

Please, don’t be such a boron this subject!

“WHERE WILL IT ALL LEAD?” indeed. (plumbing the depths, I know…)

I guess when we argon it will reveal that place.

“you need a guy called “Wolfram” to solve the _really_hard_ problems…”

Such as solving the problem of why I wasn’t “Born to be Wild”?

Stephan Wolfram v. Steppenwolf

Hmmm, some people have said that the universe is a simulation and now somebody comes along and says it all can be described by simple computational programs, something to think about.

It’ll take a real quantum computer to solve the problem of quantum mechanics, and it will take quantum “mechanics” to solve problems with quantum computers.

I expect (I don’t know if this is mathematically plausible) that like quantum mechanics, which can seem fragmentary: wave equations, matrices, bras and kets

There are many ways of looking at the same phenomenon and it’s not worth looking into the details too much.

42

Yeah, the question is the tricky part.

“What do you get if you multiply six by nine?”

Decimal or Hexadecimal?

Octal
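For the record, 6 × 9 is 54 in decimal. The only base in which 54 is written "42" is base 13 (4 × 13 + 2). In octal, 54 is written 66, and in hexadecimal it is written 36. A quick check:

```python
DIGITS = "0123456789abcdefghijklmnopqrstuvwxyz"


def to_base(n, base):
    """Write a non-negative integer n in the given base (2 to 36)."""
    if n == 0:
        return "0"
    out = []
    while n:
        n, remainder = divmod(n, base)
        out.append(DIGITS[remainder])
    return "".join(reversed(out))


if __name__ == "__main__":
    product = 6 * 9
    for base in (8, 10, 13, 16):
        print(base, to_base(product, base))
    print(int("42", 13))  # reads "42" back as a base-13 number
```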

To be clear, this is really just “I figured out some new math which might help with physics.” Which isn’t a small thing, mind you! Physics has often been limited by the current state of math. There are several famous examples early in the 20th century: the Dirac delta function, which Dirac basically said “let’s just suppose this exists,” mathematicians said “uh… wtf?” and Dirac’s response was just “whatever, it works, you guys figure it out later,” which they eventually did. There are bunches of examples with QFT and with GR as well.

Except I don’t think it’s actually going to do that, because the problem, fundamentally, between the Standard Model and gravity is that there’s no experimental anchor between the two over gigantic scales. In other words, providing a new *limited* math between QFT and continuous gravity doesn’t help, as there’s no guarantee that with our *current* knowledge, you can actually do that.

And if we *would* get new experimental results (like seeing evidence for supersymmetry, for instance) then there will undoubtedly be multiple different ways to approach the problem. Physics isn’t really at the point where it’s bound by math. It’s bound by experiment right now.
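Dirac's delta is a good example of "use it now, justify it later". The rigorous version treats it as a distribution. A concrete way to picture it is as the limit of ever narrower normalised Gaussians: integrating a smooth f(x) against a Gaussian of width σ gives a value that approaches f(0) as σ shrinks. For f = cos the exact value is exp(−σ²/2), which the numerical sketch below reproduces. The integration window of ±10σ and the point count are arbitrary choices made here.

```python
import math


def gaussian(x, sigma):
    """Normalised Gaussian of width sigma; it tends to the Dirac delta as sigma -> 0."""
    return math.exp(-x * x / (2 * sigma * sigma)) / (sigma * math.sqrt(2 * math.pi))


def smeared_value(f, sigma, n=20001):
    """Midpoint-rule estimate of the integral of f(x) * gaussian(x, sigma)
    over [-10 sigma, 10 sigma], outside which the Gaussian is negligible."""
    a, b = -10 * sigma, 10 * sigma
    h = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * h
        total += f(x) * gaussian(x, sigma)
    return total * h


if __name__ == "__main__":
    for sigma in (1.0, 0.1, 0.01):
        print(sigma, smeared_value(math.cos, sigma), math.exp(-sigma**2 / 2))
```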

A math professor I used to work with told us (~25 years ago) that in the past, in order to get a Ph.D. in math, you had to invent a new math and defend it in your dissertation!

We’re pretty good at manipulating Photons. Let me know when we can manipulate Gluons, W&Z bosons, and Gravitons.

We’ve been manipulating gluons and W/Z bosons for decades now. How do you think nuclear physics works?

If you define manipulating as meaning something like when a 7 year old has been given a tin of old watches by his grampa and has discovered the existence of springs and gears by watching as they fly to far corners of the shed when he smashes one open with a hammer.

Yeah, if springs and gears literally *could not exist* outside of a watch. And the “hammer smashing” was so precise that you were able to convert the springs and gears into a completely different machine inside the watch.

That is an interesting question. Most of the physical phenomena in our world are based on electromagnetic interactions: when we heat a wire it emits photons, a piece of metal reflects them, etc. While photons are massless and have an unlimited lifetime, W and Z bosons are massive and very short-lived (~10^-25 s), so they can only be produced at accelerators. Gluons are massless but cannot be isolated, because they are bound inside hadrons by so-called confinement.
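The ~10^-25 s figure follows from the measured total decay widths via τ = ħ/Γ. The widths below are approximate Particle Data Group values (Γ_W ≈ 2.09 GeV, Γ_Z ≈ 2.50 GeV) supplied here, not taken from the comment, so check them against the current PDG tables before relying on the digits.

```python
HBAR_GEV_S = 6.582119569e-25  # reduced Planck constant in GeV * s

# Approximate total decay widths in GeV (assumed PDG values, see lead-in).
WIDTHS_GEV = {"W": 2.085, "Z": 2.4952}


def lifetime_seconds(width_gev):
    """Mean lifetime from the total decay width: tau = hbar / Gamma."""
    return HBAR_GEV_S / width_gev


if __name__ == "__main__":
    for name, width in WIDTHS_GEV.items():
        print(f"{name}: {lifetime_seconds(width):.2e} s")
```

Both lifetimes come out at roughly 3 × 10^-25 s. Even at nearly the speed of light, such a particle travels only about 10^-16 m before decaying, which is why W and Z bosons are only ever seen through their decay products.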

This gets announced every few years – we keep hoping.

https://www.stephenwolfram.com/media/study-complexity/

Rather than rescuing relativity and quantum mechanics as they stand, rather than an update, what we need is a reboot to actual simplicity

http://www.naturalphilosophy.org/pdf/abstracts/wesley1.pdf

Wow, the Big Bang didn’t happen?! Amazing! And how come your revolutionary ideas aren’t being taken seriously? It’s Big Cosmology, what with them making all those billions of bucks and suppressing the Truth, innit?!

Calm down, nothing like this is said in the paper.

After making Mathematica available on the Raspberry Pi I’ll listen to whatever he has to say.

Thanks again!!

>Still, most mainstream physicists will probably be skeptical about the general idea of a discrete computational Universe.

There is a philosophical/ontological problem with the very idea that the universe is computational, which is the same as “Who created God?”. Computational implies rule-based, which doesn’t explain where the rules came from, which leads to the conclusion that it must be just because – therefore violating its own point…

The question is rather, at which point or scale in physics can you start explaining things in terms of computational rules, and where it turns into “just because” and probabilistic effects – because it can’t really be turtles all the way down.

“There is a philosophical/ontological problem with the very idea that the universe is computational, which is the same as “Who created God?”. Computational implies rule-based, which doesn’t explain where the rules came from”

You have the same problem whether it’s rule based or probability based (where did those probabilities come from, heck where did the choices come from).

You’re thinking of “probabilities” in terms of flipping a coin.

What I’m saying is, you can’t explain it by saying that “it’s math”, because you end up with an explanation that is just turtles on the backs of turtles all the way down. Wherever you find a “fundamental rule”, you have to ask what rule makes it so, and what substrate does the rule exist on? A rule – a pattern of behavior – does not exist before you have something to exhibit that pattern. Existence precedes essence. I don’t think the word “probability” even applies at the fundamental point.

Something must first be without a rule for it to be, and this being the case we are not necessarily restricted to other things becoming the same way, hence the fundamental non-determinism of things like quantum mechanics. Some aspects of reality are not because of anything – they just are.

“restricted to” substitute “restricted from”.

>What I’m saying is, you can’t explain it by saying that “it’s math”, because you end up with an explanation that is just turtles on the backs of turtles all the way down.

Yes I got that, and agree with you. But no matter how you explain it it will be turtles all the way down, and I see no way around it.

>Wherever you find a “fundamental rule”, you have to ask what rule makes it so, and what substrate does the rule exist on?

Similarly wherever you find a fundamental substrate you have to ask by what rule did it come to exist, ie what it exists in or in relation to.

>Existence precedes essence.

Does it? At the most basic level, in order to say something exists, you must have some essence or another in order to differentiate it from what it is not. Essence is existence.

Clearly, either the universe popped into existence in a cloud of impossibility; or it’s been here all the time. Both are equally insane, so how do you know which one to prefer?

>”it’s been here all the time”

This is the same as the first one, or “the universe began last Tuesday”. In all versions the universe just is – no cause, no reason, no rule.

“Wolfram […] can’t resist trying to apply his experience with digital computer programs to the laws of nature. […] he concludes that the universe itself would then be an automaton, like a giant computer. It’s possible, but I can’t see any motivation for these speculations, except that this is the sort of system that Wolfram and others have become used to in their work on computers. So might a carpenter, looking at the moon, suppose that it is made of wood.”

Look at the balls on this guy. He criticizes others for exactly what he does. Carpenters see wood, mathematicians see math. It’s obtuse to think that the universe is actually made of math and not simply described by math just as it is to think the universe is made of hypergraphs and not simply described by hypergraphs. Personally I have no horse in the race. I don’t care how it’s described. It’s not my field, but wow, what an old fart yelling at kids on the lawn, right?

It’s meaningless to say “the universe is made of math” because it’s a contradiction in terms in the first place. It’s saying that the universe is completely abstract rules, but such cannot exist without being represented by some -thing- that is concrete. In other words, it’s just kicking the can down the street by postulating a platform where these rules and this computation may take place. A reality beyond reality that you can never observe.

Or to put it in simpler terms: can you imagine a computer program without imagining the computer that it runs on?

Knuth wrote a series of books on the matter.

On another topic, that’s not the subjunctive.

The biggest problem in saying “the universe is made of math” is that it’s just a point of view. It’s like saying “everything’s a square,” someone handing you a rectangle, and you saying “that’s just a square in deformed geometry.”

Even the laws of physics that everyone’s taught in grade school are, in some sense, a point of view. Physicists *like* conservation of energy, so they parameterize violations of it in such a way to maintain it. Wolfram *likes* cellular automata and so he generalizes them and reshapes them until they describe things the way he wants.

It is a common misconception that being incorrect about something objective involves point of view, but actually, point of view is only relevant for dealing with subjective matters.

That math is an abstract human tool is an objective fact, it is not a matter of opinion.

The “laws of physics” are math formulas believed to be useful at predicting nature, so of course they’re not subjective; they either predict accurately, or they do not.

Wolfram liking an abstraction more or less does not make it more or less abstract.

“The “laws of physics” are math formulas believed to be useful at predicting nature, so of course they’re not subjective; they either predict accurately, or they do not.”

Nope. In fact, this is pretty trivially demonstrated to be wrong. The objectivity of the laws of physics is pretty much injected by fiat. It basically comes down to special relativity: asserting that the Universe is symmetric under Poincaré transformations. No unique time, position, rotation, boost frame, etc. Physicists don’t *want* subjective laws, so they craft them to be objective.

Except consider the most *basic* of those – conservation of energy, or invariance under time translation. From a basic point of view, that’s completely wrong. All observers in the Universe can agree on a unique time, based on the cosmic microwave background, or based on the Hubble flow if you prefer.

But that basic implication means that in a very literal sense, no experiment can actually be completely repeated, and the results of all experiments *are* actually subjective. It depends on how long it’s been since the Big Bang. You might say “well, that’s nonsense, atoms still existed before the CMB, it’s just that the temperature was too high for them to form.” But that’s a preference – it’s equally valid to say that, in some sense, the lower-energy laws of physics *didn’t exist yet*.

Similarly, consider the basic fact of the acceleration of the expansion of the Universe. Literally, every second, portions of the Universe are exiting our cosmic horizon. For us, they *no longer exist*. So any theory which predicts what happens in that portion of the Universe is *untestable*.

So how can we assert that the predictions of what happens in *our* portion of the Universe are identical to what happens in that other, unobservable part? In other words, how can we assert that our statements aren’t subjective? We only do that by fiat.

I should clarify that when I say “injected by fiat” that doesn’t mean those things are somehow *wrong* – like I said, they’re essentially postulates. They’re things that scientists/physicists basically have *agreed* are necessary for testable theories. You have to agree that what you consider the physical laws of the Universe are objective.

But it absolutely does not follow that objective physical laws *can* completely describe and predict the Universe, nor does it follow that if they can, they’re the only way to do so. If an event occurs once and only once in the Universe, the choice of an objective description obviously must be just that – a choice.
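One ingredient of the Poincaré symmetry invoked above, boost invariance, can be checked numerically. A Lorentz boost changes an event's time and position coordinates but leaves the spacetime interval unchanged, so observers in uniform relative motion agree on it. The sketch below uses units with c = 1, the (+, −, −, −) sign convention and a boost along x. The example event and velocity are arbitrary.

```python
import math


def boost_x(event, v):
    """Lorentz boost along x with velocity v (|v| < 1, units with c = 1).

    event is a (t, x, y, z) tuple; y and z are unaffected by a boost along x.
    """
    t, x, y, z = event
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return (gamma * (t - v * x), gamma * (x - v * t), y, z)


def interval(event):
    """Minkowski interval s^2 = t^2 - x^2 - y^2 - z^2 relative to the origin."""
    t, x, y, z = event
    return t * t - x * x - y * y - z * z


if __name__ == "__main__":
    event = (5.0, 3.0, 1.0, 2.0)
    moved = boost_x(event, 0.6)
    print(event, interval(event))
    print(moved, interval(moved))
```

The boosted coordinates differ (here t changes from 5 to 4 and x from 3 to 0), but the interval stays at 11 up to floating-point rounding.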

“Look at the balls on this guy. ”

uhhh, I’d rather not…

Indeed, math has worked for many problems, but to date it has not yet provided us with a theory of everything. Why not let others have a go with their tools, and see where it leads? No need to fight among ourselves over who has the best tools. The universe is what it is, and that will ultimately be the yardstick against which theories will be measured, not the opinions of some smart but big-headed guys.

“So might a carpenter, looking at the tree, suppose that rocks too grow…” And using this knowledge he would have acquired the precious resources to build entire countries. Where then does the modern dude cometh misunderstanding of all things hierarchical and whose misallocations create paradoxes that enslave them to identity politics. You are not the son of the carpenter, you are a thief.

Rocks do grow, e.g. crystals.

I’ve never seen a criticism of Wolfram from a person who could demonstrate that they were more intelligent that he is, he may well be just the smartest fool on the planet but there is currently only proof that he is smart and none that he is a fool.

“who could demonstrate that they were more intelligent that he is”

What the heck kind of comment is this? Did I miss when the world turned into a roleplaying game with an “INT” parameter that everyone can compare against each other? Let me be clear – Wolfram’s claiming that this math can help explain everything in physics. You don’t have to be “more intelligent than he is” to criticize that comment, you just have to be more experienced in a portion of modern physics than he is, and there are *plenty* of people who fit that description.

Are you saying Wolfram’s never proposed anything that someone’s shown to be wrong? Wolfram’s proposed bunches of dumb things previously. It’s even in the article: “Wolfram recognizes that cellular automata *may have been too simple* to produce this kind of complexity” (emphasis mine). The reason why he now “recognizes” this is that physicists rapidly pointed out that the arguments he was making were easily demonstrated to be flat-out wrong with well-established physics (for instance, his views on how entanglement works are easily shown to violate a combination of the Bell inequality and special relativity, and the overall CA stuff in that book is closely linked to loop quantum gravity, which people have been studying for years).

The most common criticism of Wolfram is that he’s *arrogant*. It’s not that the math of what he’s saying is wrong. It’s that he asserts the implications of the math are far larger, without backing it up in the slightest. That’s pretty much a basic definition of arrogance, so it’s not exactly a leap to call him that.
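For readers wondering what "the Bell inequality" refers to here: in its CHSH form, it says that any local hidden-variable model satisfies |S| ≤ 2 for a particular combination S of correlations between two distant detectors. Quantum mechanics predicts up to 2√2 for an entangled pair, and experiments agree with the quantum prediction. The sketch below checks both numbers. The measurement angles are the standard optimal choice and are not taken from the comment.

```python
import itertools
import math


def chsh(e, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return e(a, b) - e(a, b2) + e(a2, b) + e(a2, b2)


# Local deterministic strategies: each side pre-assigns an answer of +1 or -1
# to each of its two settings. Mixtures of these cannot exceed their maximum.
classical_max = max(
    abs(A0 * B0 - A0 * B1 + A1 * B0 + A1 * B1)
    for A0, A1, B0, B1 in itertools.product((1, -1), repeat=4)
)


def singlet(a, b):
    """Quantum correlation of spin measurements on a singlet pair at angles a, b."""
    return -math.cos(a - b)


quantum = abs(chsh(singlet, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4))

if __name__ == "__main__":
    print(classical_max)            # 2
    print(quantum, 2 * math.sqrt(2))
```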

Imagine being the guy watching all this, noticing that the simulation built an emulator of itself.

the size of the universe is easy to predict, it’s just based on the draw distance setting

that being said I always wondered if quantum behaviour was just an example of the system using lazy loading or floating point numbers

Steven Weinberg’s quote is disgustingly ignorant. Maxwell wrote in terms of the technology of his day, clockwork mechanisms. But was the truth in his insights lost?

Maxwell described physical phenomena using formulas.

There is no comparison at all, Wolfram hasn’t even begun work that could describe physical phenomena. He merely supposes, roughly, that using universal logic gates you could model any system, and then asserts that this observation is very important. Even if everything he said was correct, he’d have discovered nothing and it would be up to somebody else after him to actually discover something.

You say that Wolfram supposes that with universal logical gates one could model any system. Why is that a supposition? I mean, what could falsify this claim?
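"Universal" has a precise meaning for logic gates. A gate set is functionally complete if every Boolean function can be wired from it, and the NAND gate alone suffices. The sketch below builds NOT, AND, OR and XOR from NAND and checks their truth tables. Whether arbitrary *physical* systems can be modelled this way is a separate claim, and this completeness result does not settle it.

```python
from itertools import product


def nand(a, b):
    return 1 - (a & b)


def not_(a):
    return nand(a, a)


def and_(a, b):
    return not_(nand(a, b))


def or_(a, b):
    # De Morgan: a OR b == NOT(NOT a AND NOT b)
    return nand(not_(a), not_(b))


def xor(a, b):
    # The classic four-NAND construction.
    m = nand(a, b)
    return nand(nand(a, m), nand(b, m))


if __name__ == "__main__":
    for a, b in product((0, 1), repeat=2):
        print(a, b, and_(a, b), or_(a, b), xor(a, b))
```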

6 * 9 = ?

starting from big-bang( ){decay(decay(unity))}

unity was void

but as our universe is only a part of the result, observation can’t lead to unity

This leaves me with two thoughts..

Either: Where are you keeping all the other stuff then?

Or: Does observing only a part lead to the feeling that the universe doesn’t encompass enough?

Unity is circular here, there isn’t a unity but a sum. It has to be a sum in order to decay. That’s the “bang” part. Unity couldn’t exist anymore when the conditions for the big bang exist. It can’t decay, it has to inflate; then these inflated things can decay.

It appears as unity from where we are standing, just as the sky appears to be a lid over the Earth.

I was unimpressed by ANKOS. Yeah, fractal generating algorithms do weird things, news at -11.

I was very impressed by this. It seems like a James Clerk Maxwell moment. You know, at the time it had been observed and measured that a moving electric field could create a magnetic field, and that a moving magnetic field could create an electric field. And very separately, the speed of light had been measured, but nobody knew what light was. So Maxwell does this thought experiment, suppose I put a charged object on a pole and wave it up and down? Well it’s a moving charge, so it will create a magnetic field. And that magnetic field will be moving, so it will create an electric field, and so forth la-di-da, and that means it’s a wave. And Maxwell asks himself, if I made such a wave, how fast would it propagate? Well all the measurements had been done and when he solved the equations that would eventually be named after him, he got an answer that was very suspiciously close to the measured speed of light.

It would be decades later before Hertz proved that Maxwell’s electromagnetic waves really existed, and that they did move at the speed of light, and more evidence would start to pile up that light was itself an EM wave.

It is amazing that, in a few months, Wolfram has been able to show this graph-based automaton system deriving fundamental things like relativity and quantum behavior, all without even having the exact rule in hand. You could have made the same critique of Maxwell that people are making here of Wolfram: what is it useful for? What does it predict? Well, Maxwell predicted that light would be an EM wave, but at the time that was not something that could be measured or used. Today, of course, it powers our entire world.

Wolfram has, at this very early stage, provided non-maddening, if as yet incomplete, explanations for what happens at the event horizon and within a black hole, what dark matter might be, and why the cosmological constant isn’t necessary in Einstein’s equations. And once we have specific candidate rules in hand, we will probably have lots of predictions to test. Dark matter and dark energy are things. (As I understand it, in Wolfram’s model dark energy is just the computational output of the rule processor expanding and refining the model.) He even explains why we can’t detect the granularity of the universe: as we make ever more granular tests, the granularity of the universe becomes finer, staying ahead of the tools we build, in part because of those tools. That’s not a non-prediction if the overall model is useful (even in an Occam’s Razor way) for other things. It’s significantly less crazy than a lot of things we take for granted in quantum mechanics “just because that’s what the experiments do.”

DEVS – What is DEVS?

This reminds me a lot of Mandelbrot and Julia sets.

I seem to have read similar statements in the book *Gödel, Escher, Bach: An Eternal Golden Braid*.

Has anyone read it?

Mizar is better because it gives a proof.

“Wolfram recognizes that cellular automata may have been too simple to produce this kind of complexity; instead, he now focuses on hypergraphs.”

IMHO, cellular automata are enough to create a full-fledged model of the universe. Please take a look at my research:

http://automaton3d.com/index.html
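For anyone who hasn't seen the cellular automata this thread keeps returning to, here is a minimal sketch of Wolfram's elementary Rule 30 (one of the rules featured in ANKOS) run from a single black cell. It is only an illustration of how a tiny local rule produces complicated output. It assumes a finite row whose out-of-range neighbours are always 0, and `rule30_step` is a hypothetical helper name, not part of any library.

```python
RULE = 30  # Wolfram code: bit k of RULE is the new state for neighbourhood value k


def rule30_step(cells):
    """Apply one update of the elementary rule RULE to a finite row of 0/1 cells.

    Assumption of this sketch: cells beyond either end are permanently 0.
    """
    padded = [0] + cells + [0]
    new_row = []
    for i in range(1, len(padded) - 1):
        # Encode (left, centre, right) as a 3-bit number in 0..7.
        neighbourhood = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        # Look up the matching bit of the rule number.
        new_row.append((RULE >> neighbourhood) & 1)
    return new_row


width = 15
row = [0] * width
row[width // 2] = 1  # start from a single black cell in the middle
history = [row]
for _ in range(7):
    row = rule30_step(row)
    history.append(row)

for r in history:
    print("".join("#" if c else "." for c in r))
```

Seven steps on a 15-cell row is chosen so the pattern just reaches the edges; beyond that, the fixed zero boundary would start to distort it compared with an infinite row.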

Can’t we just call a ‘hypergraph’ a ‘network’ or just a ‘net’ like every other engineering discipline?

Also, ‘hyperedge’ already has a name, too: where you want to disambiguate it from the node, we call it an ‘arc’.

(This comes up in circuit design tools, where everything connected to the same node IS the same node, but you want to talk about a specific line on the schematic diagram. It’s an arc, or even a ‘line’ if you want.)

I think I could accept the ‘hypergraph’ terminology for the part where he starts connecting laterally across different diagram domains. For instance, he introduces the concept of ‘time as configuration evolution’, where a timeline is a contour of connections between the successive graphs produced by a given generation rule.
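To make ‘time as configuration evolution’ a little more concrete, here is a toy sketch that repeatedly applies one rewrite rule of the general kind Wolfram's model uses, `{{x, y}} -> {{x, y}, {y, z}}`, where `z` is a freshly created node. This is a simplified illustration under loud assumptions: every hyperedge here is an ordered pair (so the toy is really an ordinary directed graph), every edge is rewritten in each step, and the function name `step` is hypothetical. The actual project works with general hyperedges and pays careful attention to update order.

```python
from itertools import count


def step(edges, fresh):
    """Rewrite every edge (x, y) into (x, y) and (y, z), with z a brand-new node.

    Hypothetical toy rule; all edges are updated in one step.
    """
    new_edges = []
    for x, y in edges:
        z = next(fresh)          # allocate a new node label
        new_edges.append((x, y))  # keep the matched edge
        new_edges.append((y, z))  # attach the new node to its endpoint
    return new_edges


fresh = count(1)   # node 0 already exists, so new labels start at 1
edges = [(0, 0)]   # initial condition: a single self-loop
for t in range(3):
    edges = step(edges, fresh)
    print(f"step {t + 1}: {edges}")
```

Each printed line is one ‘moment’ of the toy universe; the sequence of configurations, rather than any external clock, is what plays the role of time here.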

In general, the work is pretty neat.

Great theory, well presented. Nice light reading.

It doesn’t surprise me that out of a few tiny rules (and given infinite computer memory and compute resources) you could end up with instances perhaps including all possible universes. We *are* talking ‘infinite’ here.

It’s nice that this theory hangs together so well.

The other ‘limiting speeds’ he comes up with by analogy with c are also nice: these provide targets for actual experimental exploration, if we can ever figure out how that might be done.

But… the whole theory might also just turn out to be useless (in a practical-application sense). Even if you did figure out exactly which rule set leads to our exact universe (or at least to all those with exactly matching physical behaviour), you might never be able to apply it to any practical problem, simply because it is so computationally inefficient that you could never build a computer big enough to run a usefully detailed simulation.

I mean, CFD (computational fluid dynamics) is already expensive: you have to compromise a lot on spatial resolution to apply it at all. But this stuff implies a necessary computational resolution, on a scale far below atomic, that you can’t even ‘catch up with’.

In fact, it might turn out that *proving* any given rule is that of our universe is so computationally expensive as to be practically impossible, or even undecidable.

So, nice, perhaps, but less useful than it at first might seem.

Philosophically, well, sure. Engineering use, though?

I think its greatest value is in the testable predictions it makes, and these mainly concern the possible behaviours and features that a more applicable GUT (grand unified theory) might have. In that sense, it’s more like good mathematics.