In the last few months, most of the world’s population has avoided touching public surfaces as much as possible. Unfortunately, anyone with low vision who relies on Braille signs, relief maps, and audio jacks doesn’t have this luxury, at least not yet.

A group of researchers at Bayreuth University in Germany are most of the way to solving this problem. They’re developing HaptiRead, a mid-air haptic feedback system that can be used as a touchless, refreshable display for Braille or 3D shapes. HaptiRead is based on a Stratos Explore development kit that has a field of 256 ultrasonic transducers. When a person approaches the display, a Leap Motion sensor can detect their hand from up to 2.5 feet away, and the system starts providing information via ultrasound focused into points the hand can feel. Each focus point is modulated with a different frequency to help the reader tell them apart.
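The focusing step can be illustrated with a little geometry. What follows is a minimal sketch of textbook phased-array focusing, not the HaptiRead or Stratos Explore implementation. The 16 x 16 layout, the 10.5 mm transducer spacing, the 40 kHz carrier, and the function names are all assumptions made for illustration. Each emitter gets a phase offset equal to the phase its wave loses in flight, so that all 256 waves arrive at the focus point in step.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C
FREQUENCY = 40_000.0    # Hz, assumed carrier frequency
PITCH = 0.0105          # m, assumed spacing between transducers
GRID = 16               # assumed 16 x 16 layout = 256 transducers


def transducer_positions(grid=GRID, pitch=PITCH):
    """Return (x, y) centers of a square grid centered on the origin."""
    offset = (grid - 1) / 2
    return [((col - offset) * pitch, (row - offset) * pitch)
            for row in range(grid) for col in range(grid)]


def focus_phases(focus, positions, frequency=FREQUENCY, c=SPEED_OF_SOUND):
    """Phase offset in [0, 2*pi) per transducer for a focus at (x, y, z)."""
    wavelength = c / frequency
    fx, fy, fz = focus
    phases = []
    for x, y in positions:
        distance = math.sqrt((fx - x) ** 2 + (fy - y) ** 2 + fz ** 2)
        # Advance each emitter by the phase its wave loses while travelling,
        # so every wave reaches the focus with the same phase.
        phases.append((2 * math.pi * distance / wavelength) % (2 * math.pi))
    return phases


positions = transducer_positions()
# Focus 20 cm above the center of the array (an arbitrary example height).
phases = focus_phases((0.0, 0.0, 0.20), positions)
```

Rendering several dots at once would repeat this calculation per focus point and combine the results, which this sketch does not attempt.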

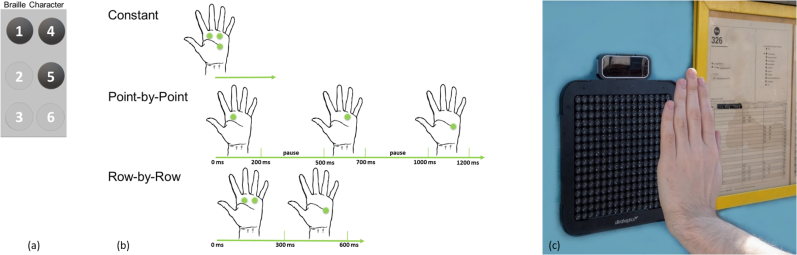

HaptiRead can display information in three ways: constant, which imitates static Braille displays; point by point; and row by row. The researchers claim up to 94% accuracy in trials, with the point-by-point method in the lead. The system is still a work in progress, as it can only do four cells’ worth of dot combinations and needs to do six before it’s ready. Check out the brief explainer video after the break, or read the group’s paper [PDF download].
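To make the three presentation modes concrete, here is a small sketch that turns one six-dot Braille character into a sequence of frames, where a frame is the set of dots rendered at the same moment. The function names, the ordering of dots in the point-by-point mode, and the choice to skip empty rows are assumptions for illustration. The paper may define the modes differently.

```python
# Standard Braille dot numbering: dots 1-3 run down the left column,
# dots 4-6 run down the right column. Map dot number -> (row, column).
DOT_POSITIONS = {1: (0, 0), 2: (1, 0), 3: (2, 0),
                 4: (0, 1), 5: (1, 1), 6: (2, 1)}


def constant(dots):
    """All dots shown at once, like a static Braille display."""
    return [set(dots)]


def point_by_point(dots):
    """One dot per frame, in ascending dot number (assumed ordering)."""
    return [{d} for d in sorted(dots)]


def row_by_row(dots):
    """One frame per non-empty row, top to bottom."""
    frames = []
    for row in range(3):
        in_row = {d for d in dots if DOT_POSITIONS[d][0] == row}
        if in_row:
            frames.append(in_row)
    return frames


letter_d = {1, 4, 5}  # the letter "d" in standard Braille
print(constant(letter_d))        # one frame holding dots 1, 4 and 5
print(point_by_point(letter_d))  # three frames: dot 1, dot 4, dot 5
print(row_by_row(letter_d))      # two frames: dots 1 and 4, then dot 5
```

The sequential modes trade rendering time for fewer simultaneous focus points, which is the trade-off the accuracy figures above are comparing.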

Want to play with refreshable Braille systems? This open-source display uses Flexinol wire to actuate the dots.

Thanks for the tip, [Qes]!

Looked into Braille displays once as a hobbyist project.

The problem is rendering speed. A person reading braille can slide their fingers over text about as fast as a sighted human can read individual letters. For a reasonable Braille display you need to present characters about that fast.

The existing systems I’m familiar with use small piezoelectric rods embedded in rubber, which vibrate individually and “feel” like a bump. A person can read a strip of these quite fast.

I’ve seen several attempts at making a Braille single-char display using, for example, servos to raise and lower the bumps. At about 1 char per second these are entirely useless.

You *might* get by with something like the head of a dot matrix printer – that seems like it would have the necessary speed.

So my question for this project is: how fast can their system render the chars? If it takes the person a moment to feel and interpret each char, then it won’t be very useful as a Braille display.

(Next time I see a dot-matrix printer being thrown out at the dump maybe I’ll snag it and see if the print head can be repurposed as a braille display.)

I learned to read Braille with a display that used tiny solenoids or something to physically raise the plastic rods to indicate dots. I think someone tried to make a 3D printed version of it. Still, the whole assembly must be small enough to be stackable to form a single line. A single character would be useless from a practical point of view, as the user can’t locate a place in the text fast if he/she has to read it one character at a time. Navigating the text would be a chore…

Braille printers work a bit like dot-matrix ones, but are incredibly loud. In my old school there was one that sounded like a machine gun. You need significant force to bump up the paper…

I wonder what the ratio of notices to people who would use the notices is. It seems difficult to scale this approach to many locations (smart sign but no tech requirement on the user to be able to access it). I’ve been thinking recently about a way to guide people onto a point (that could be a notice, a road crossing, bus stop, doorway). BLE could be an initial wider area advertising method with perhaps IR as a way to add orientation as I’d assume trilateration wouldn’t be an option in these cases. If you had some way of focusing the user’s equipment (haptic glove?) the BLE could also just transfer the data content too, so no need to exactly find where the ultrasonic array is and hover your hand at the correct standoff and position.

Or just use something like Google Glass to scan the environment for static QR codes that hold information for the visually impaired and blind, which is read to them via a bone-conducting speaker hidden in the frame behind the ear. Add some IR LEDs that will transmit an ID number on demand (it should be randomly generated each time it’s needed for the purpose) for cameras in the area to track position and send back instructions via BLE or WiFi. These can be in text format, as the TTS system would be part of the glasses. It could also contain some haptic feedback signals to indicate exactly where to stop or turn. And this system could be extended to include navigation, timetable information on bus stops, emergency signaling, navigating to particular entrances, information on stores and buildings one passes by, etc…

IMHO, it would be interesting to see how far you can push the integration of this with already available stuff. The more ways anyone can use it with existing things, the more likely it is to be adopted. A wider audience to sell to also results in less cost per device.

The QR code would be useful for more than the sight impaired.

Some autonomous robots can make use of QR codes to navigate & perform tasks based on the QR code encountered in the robot’s environment.

The concept, beyond just the sight impaired, would also permit a robotic seeing-eye dog (aka Spot), although GPS & geotagging might be more useful.

Things like geotagging could create a richer information environment, with or without the Google Glass & bone-conducting speaker, without overwhelming the blind or visually impaired user (e.g. direct the user to wave the QR reader to get more information).

It’d also be a way to provide any user with extended information or tie-ins to website pages or other online information already available, e.g. web pages with physical maps, existing online information about what’s around you, etc.

It’d be kinda interesting to see this used in a less obtrusive wearable hand/glove format in conjunction with iPhone/Android/Google Glass and/or a bone-conducting speaker. Formats other than a glove, such as an arm or wrist band, would free up the hand(s) for uses other than information processing. Glove restrictions on hand movement notwithstanding, it would kinda allow for other hand uses on the go.

From a portability standpoint, geotagging using the Google Glass/bone-conducting speaker/haptic band or haptic glove could provide the information to the user with almost instantaneous feedback (as in, feel it just as quickly as if one saw it), without the user having to do a non-visual hand/cane spatial search or guess as to where to find relevant information to read.

Gloves or not, there would be fewer restrictions on how and where it can be used. E.g. instead of having to physically sit and/or hold an iPad, one would be able to do the sighted person’s version of reading email while moving around & doing other tasks such as eating, and still get feedback about what’s going on.

Multiple feedback channels, via more than one touch arm band or bone-conducting audio, could provide quicker feedback about what’s going on. Think of it as the visually impaired user’s version of a sighted person’s use of multiple windows on a computer screen. E.g. Google Glass taps AI to provide separate audio/textual information about what’s in front of you: food on a plate, a German shepherd sitting in front of you, etc.

Information overload/distraction notwithstanding, the glasses/haptic feedback device would also be useful for the non-visually impaired to use while on the go, e.g. being less likely to walk into traffic because you are looking down at the iPhone and oblivious to what’s going on in the environment.

Or making use of the geotagging to feel warnings about surroundings.

Geotagging tied to other readily available online information might be more useful than QR codes, i.e. it is already available and in use & doesn’t require extra cost/effort to update or put out.

Putting the “hand reader” onto a t-shirt (aka a braille version of https://techxplore.com/news/2020-06-bioactive-inks-wearable-textiles-conditions.html ) would be more portable and could convey more information than a hand scanner. E.g. while reading the information you are looking at, other areas of the shirt could convey other information, like different areas on a visual screen do.

The braille t-shirt tied in with the already available Google Glass visual translator would get around the need for a visually impaired reader to know where to place a hand: look at it, and the glasses translate what you’re looking at to braille (instead of a foreign language). The braille translation is then sent to the feel-able t-shirt for instant braille feel/read.

Yes, I was thinking of some version of this. Beacons that use QR codes and/or OCR are already available and working (i.e. NaviLens, WayAround, etc.), and are cheaper to implement. I think it would be better to improve the implementation of these and work on improving how they work with current braille displays (they do work with braille displays, they just need some tweaking).

This is stupid and won’t work. Blind people will always have to deal with touching surfaces, even just to find this thing, and braille cells the size of your palm are super inefficient. This seems like another silly tech thing where they didn’t look at what is already there and talk to actual blind people about what would be helpful.

I was thinking of similar issues with this. The photo at the top shows it next to a bus schedule, so presumably it is presenting that information. People who live in the area usually know the bus schedule already; the schedules are aimed more at people who don’t live around there, or perhaps in case someone forgot. So, that being the case, how would someone who can’t see this thing know it’s there waiting to be used? Does it beep? Is it always sending out sounds? If you used some audible sounds to announce this thing’s presence, couldn’t you just use those sounds to announce the information? If just not touching the thing is the goal, what keeps people from having to feel around to find it before they can use it?

Is there an ISO standard covering ability enabling tech like this?

Whatever it becomes, it needs to be a single, good, long-lasting standard like hearing inductive loops, aircraft nav-com, or ELTs: buy once, and no Apple-changing-charge-plugs crap.

My biggest problem with The Hitchhiker’s Guide to the Galaxy was Ford Prefect’s sub-etha signalling ‘thumb’: how can you get a whole galaxy of subversives and free-riders to agree on a single signalling technology and channel?

If we were able to standardize, we would probably have installed AM radio transmitters and record players around anything that required a reader board (not unlike the ‘talking’ highway signs) to enable seeing-different people, but we can’t standardize and barely have braille markings at elevators, even in government buildings. People could have tuned to the ‘special frequency’ for additional information without bothering the normies, starting in the 40s or perhaps when the transistor-enabled pocket radios with reasonable battery life came around. If we could have established a long-term standard and all begun investing in that, nobody would have to worry about zapping braille onto people’s hands now. That, and the user-side tech, if required, has to be cheap, say $1; a pocket radio would be useful to everyone, with maybe an added switch for the ability frequency, and as easy to find as reading glasses or toothbrushes, or a mandated FM radio in a mobile phone.