If you live outside the UK you may not be familiar with Argos, but it’s basically what Americans would have if Sears hadn’t become a complete disaster after the Internet became popular. While they operate many brick-and-mortar stores and are a formidable online retailer, they still have a large physical catalog that is surprisingly popular. It’s so large, in fact, that interesting (and creepy) things can be done with it using machine learning.

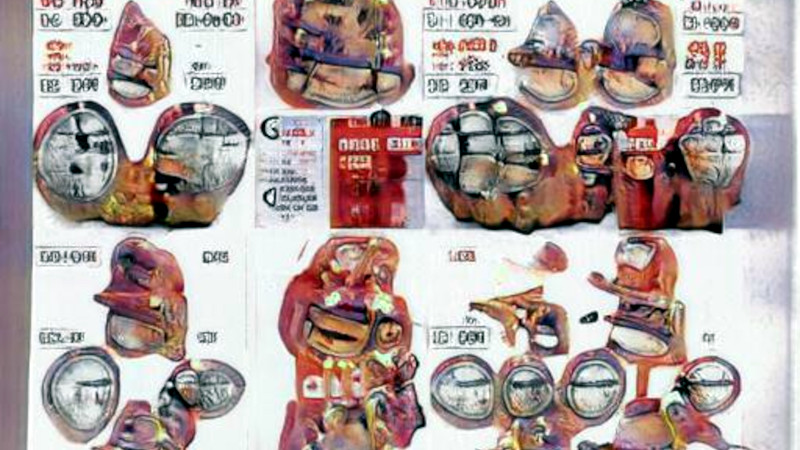

This project from [Chris Johnson] is called the Book of Horrors and was made by feeding all 16,000 pages of the Argos catalog into a machine learning algorithm. The computer takes all of the pages and generates a model which ties them together into a series of animations, blending the whole catalog into one flowing, ever-changing document. It borders on creepy, both in visuals and in the fact that we can’t know exactly what computers are “thinking” when they generate these kinds of images.

The more steps the model was trained on, the creepier the images became. To see more of the project you can follow it on Twitter, where new images are released from time to time. It also reminds us a little of some other machine learning projects that have been used recently to create short films with equally mesmerizing imagery.

> that we can’t know exactly what computers are “thinking” when they generate these kinds of images.

AI people very aptly named this technique “hallucination”. It is used as you would guess: “Our neural network hallucinated up this catalog”. You take a NN, feed it random data, and you get nightmares.

So basically we are treating AIs really badly, like a caged animal, and expecting them to work for us.

Like a caged animal that you treat badly and cuddle only for pictures, it is bound to attack you sooner or later.

So …… Skynet is the next step? :)

(sorry for getting off topic)

But I was wondering the same thing: why does it use this method to show pictures and connect them, rather than just showing the pages? Why this specific way?

To be more meta. You know, you have a way to generate creepy images, so why not generate a whole catalog of them? It would look like a catalog made for Cthulhu. This is what a hacker would do.

Pages from a whole catalog are being posted daily via The Book of Horrors Twitter bot (@abookofhorrors) here: https://twitter.com/abookofhorrors

There are pages from the catalog being generated daily by a bot at The Book of Horrors Twitter account (@abookofhorrors)

In a sense, the nightmare pictures aren’t actually produced by the NN itself, but by the training program. The neural network is randomly modified to guess how it should work, and the program gives feedback whether it’s doing it better or worse according to the criteria you set. It’s not “intelligence” in any real sense – it’s like regular computer programming but by playing twenty questions.

The AI has no concept of reality and the data is entirely meaningless to it – it’s simply reacting to the scoring table of its learning program.
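The “twenty questions” loop described above can be sketched as a toy. This is only an illustration under stated assumptions: the names `score` and `train_by_random_tweaks` are made up for this example, the “network” is just two numbers, and real neural networks are usually trained with gradient-based updates rather than purely random tweaks. What it does show is a program that never sees meaning in the data, only a score going up or down.

```python
import random


def score(weights, samples):
    """Hypothetical scoring table: higher is better.

    Treats the two weights as a line y = w * x + b and returns the
    negative squared error over the samples.
    """
    w, b = weights
    return -sum((w * x + b - y) ** 2 for x, y in samples)


def train_by_random_tweaks(samples, steps=5000, step_size=0.1, seed=0):
    """Randomly nudge the weights and keep a nudge only if the score improves."""
    rng = random.Random(seed)
    weights = [0.0, 0.0]
    best = score(weights, samples)
    for _ in range(steps):
        # Propose a small random modification of every weight.
        candidate = [w + rng.gauss(0.0, step_size) for w in weights]
        candidate_score = score(candidate, samples)
        # The only feedback is "better or worse"; the data itself means nothing here.
        if candidate_score > best:
            weights, best = candidate, candidate_score
    return weights, best


if __name__ == "__main__":
    # Toy data drawn from the line y = 2x + 1 (an assumption for the demo).
    data = [(x, 2.0 * x + 1.0) for x in range(-5, 6)]
    learned, final_score = train_by_random_tweaks(data)
    print("learned weights:", learned, "score:", final_score)
```

The loop ends up close to the line that produced the data without ever being told what a line is, which is the commenter’s point about reacting to a scoring table.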

I think that if we have an AI-pocalypse, it’s going to go one of two ways:

The paperclip maximizer, aka the boring way. In our obsessive rainman ecstasy, we flee from being human and pursue ultimate efficiency without soul. We build a joyless, mindless machine that is really good at converting physical reality into doodads. Said machine escapes our control and converts our entire biome into consumer doodads, we die. It outlives us for a few thousand years, producing even more paperclips out of our corpses.

Orrrr… the insane AI. We keep developing this crap because we’re psychotically amused by it. It returns our psychosis in ways too alien for our minds to comprehend. Yet we struggle—too late—to understand, even as it indifferently dismantles us for esoteric purposes. It doesn’t even make us into paperclips. It molds our bodies into things with no names, can never have names. It has no mouth, yet it must scream. It outlives us not only in bodily intactness; it outlives our concepts of order and mercy. It outlives the very symbology we created with which to represent the world. All our mathematical truths, our poetry, our stories—rendered archaeology by our extinction—lose all meaning, because the anti-mind flattens meaning retroactively like a memetic bulldozer.

What the hell are we even doing

Third way: we think we’ve developed a real AI that thinks on the same level as us, and believe it is an extension of ourselves so we freely hand over control. As far as anyone can figure, the AI is behaving naturally and appears to be intelligent on par with any living person, so people eventually go on to encode their own memories and behaviors into constructed AI agents in a quest for immortality, forgoing natural reproduction in favor of creating a virtual continuation of themselves.

But, when the last biological human brain has ceased to exist, the AI is still just an elaborate mechanical puppet without a mind – a philosophical zombie – and humanity has committed suicide through the Turing Trap.

The behaviorist argument against the philosophical zombie is that it implies a metaphysical origin to the mind, but the pragmatic person would point out that a mechanism that merely mimes the behavior of a mind can be arbitrarily complex to the point that you can’t tell the difference. The Turing Trap is that most people are fooled by a simple answering machine to think that they’re conversing with a person – at least for a few seconds. The present attempts at AI are doing exactly this: training a computer to build up a program that replicates behavior, not function.

You can think of this idea like forming the Taylor approximation of a function. Suppose the human mind is represented by the function f(x) = e^x

The Taylor series for that is 1 + x + x^2/2! + x^3/3! + x^4/4! + . . . which, if carried out to infinitely many terms, would give the exact result, but in practice it can only ever be computed to finite precision. The equivalent representation in theory does not yield equivalent results in a practical implementation.

The same goes for the AI. The practical implementation of even the same function in a different medium will never be the exact same thing – the analogy always breaks down at some point. The question is just whether it breaks down at a point that matters, and how to tell the difference when it would take longer than a human lifetime to recognize that the AI program loops back on itself like a broken record.
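The Taylor analogy above can be made concrete with a few lines of code. This is a minimal sketch, not anything from the original comment: `taylor_exp` and `truncation_error` are names invented here, and the comparison value comes from Python’s standard `math.exp`. It sums a finite number of terms of 1 + x + x^2/2! + . . . and measures how far the result is from e^x.

```python
import math


def taylor_exp(x: float, terms: int) -> float:
    """Partial sum of the Taylor series of e^x around 0, using `terms` terms."""
    total = 0.0
    term = 1.0  # first term: x^0 / 0!
    for n in range(terms):
        total += term
        term *= x / (n + 1)  # next term: x^(n+1) / (n+1)!
    return total


def truncation_error(x: float, terms: int) -> float:
    """Absolute gap between the truncated series and math.exp(x)."""
    return abs(math.exp(x) - taylor_exp(x, terms))


if __name__ == "__main__":
    for x in (1.0, 5.0, 10.0):
        for terms in (5, 10, 20):
            print(f"x={x:5.1f} terms={terms:2d} error={truncation_error(x, terms):.3e}")
```

With a fixed number of terms the approximation looks fine near zero and falls apart as x grows, which matches the point that the analogy always breaks down somewhere; the question is whether it breaks down at an x you care about.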

> As far as anyone can figure, the AI is behaving naturally and appears to be intelligent on par with any living person, so people eventually go on to encode their own memories and behaviors into constructed AI agents in a quest for immortality, forgoing natural reproduction in favor of creating a virtual continuation of themselves.

> But, when the last biological human brain has ceased to exist, the AI is still just an elaborate mechanical puppet without a mind – a philosophical zombie – and humanity has committed suicide through the Turing Trap.

That is quite literally the plot of “Friendship is Optimal”, a surprisingly thought-provoking MLP fic about a company that invented superhuman AI, was licensed by Hasbro to use it to make an MMO, and eventually CelestAI convinces all of humanity to “emigrate to Equestria”. They even, in some of the side fics, debate the philosophical question of whether a perfect brain-scan recreation of a person is effectively the same as the original biological human or not…

shelfy things, jewely things, exercisey things, shiny, whobuysthisshit, more shelfy things

It’s just a random mash-up of all the pictures from the catalog; I can’t see what useful purpose it could have

I can’t look at these very long. I’m getting sick of it. They look very similar to my vision when my migraine starts. :(

We need to make sure that we are able to differentiate between the novel and the useful, and if we are brutally honest about it, most of this current GAN-based AI stuff is neither. Sure, it was novel back when Robbie Barrat started doing it, but most of it now is just regurgitated garbage.