We all have a folder full of images whose filenames resemble line noise. How about renaming those images with the help of a local LLM (large language model) executable on the command line? All that and more is showcased on [Justine Tunney]’s bash one-liners for LLMs, a showcase aimed at giving folks ideas and guidance on using a local (and private) LLM to do actual, useful work.

This is built out from the recent llamafile project, which turns LLMs into single-file executables. This not only makes them more portable and easier to distribute, but the executables are perfectly capable of being called from the command line and sending to standard output like any other UNIX tool. It’s simpler to version control the embedded LLM weights (and therefore their behavior) when it’s all part of the same file as well.

This is built out from the recent llamafile project, which turns LLMs into single-file executables. This not only makes them more portable and easier to distribute, but the executables are perfectly capable of being called from the command line and sending to standard output like any other UNIX tool. It’s simpler to version control the embedded LLM weights (and therefore their behavior) when it’s all part of the same file as well.

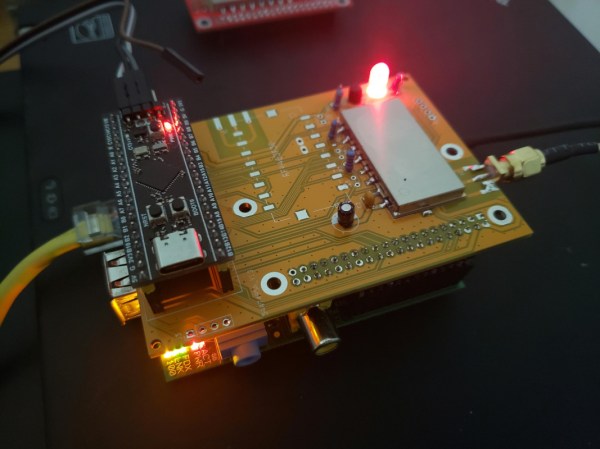

One such tool (the multi-modal LLaVA) is capable of interpreting image content. As an example, we can point it to a local image of the Jolly Wrencher logo using the following command:

llava-v1.5-7b-q4-main.llamafile --image logo.jpg --temp 0 -e -p '### User: The image has...\n### Assistant:'

Which produces the following response:

The image has a black background with a white skull and crossbones symbol.

With a different prompt (“What do you see?” instead of “The image has…”) the LLM even picks out the wrenches, but one can already see that the right pieces exist to do some useful work.

Check out [Justine]’s rename-pictures.sh script, which cleverly evaluates image filenames. If an image’s given filename already looks like readable English (also a job for a local LLM) the image is left alone. Otherwise, the picture is fed to an LLM whose output guides the generation of a new short and descriptive English filename in lowercase, with underscores for spaces.

What about the fact that LLM output isn’t entirely predictable? That’s easy to deal with. [Justine] suggests always calling these tools with the --temp 0 parameter. Setting the temperature to zero makes the model deterministic, ensuring that a same input always yields the same output.

There’s more neat examples on the Bash One-Liners for LLMs that demonstrate different ways to use a local LLM that lives in a single-file executable, so be sure to give it a look and see if you get any new ideas. After all, we have previously shown how automating tasks is almost always worth the time invested.