Cameras are getting smarter and more capable than ever. They can run embedded machine vision algorithms and pull off tricks far beyond what a serial camera and microcontroller board could manage, and the upcoming Vizy aims to be smarter and easier to use still. Vizy is the work of Charmed Labs, and this isn’t their first foray into accessible machine vision: Charmed Labs are the same folks behind the Pixy and Pixy 2 cameras. Vizy’s main goal is to make object detection and classification easy, with thoughtful hardware features and a browser-based interface.

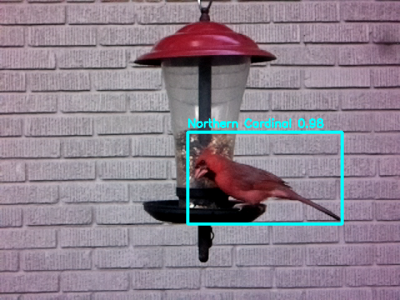

The usual way to do machine vision is to get a USB camera and run something like OpenCV on a desktop machine to handle the processing. But Vizy leverages a Raspberry Pi 4 to provide a tightly-integrated unit in a small package with a variety of ready-to-run applications. For example, the “Birdfeeder” application comes ready to take snapshots of and identify common species of bird, while also identifying party-crashers like squirrels.

The demonstration video on their page shows off using the built-in high-current I/O header to control a sprinkler, repelling non-bird intruders with a splash of water while uploading pictures and video clips. The hardware design also looks well thought out; not only is there a safe shutdown and low-power mode for the Raspberry Pi-based hardware, but the lens can be swapped and the camera unit itself even contains an electrically-switched IR filter.

Vizy has a Kickstarter campaign planned, but like many others, Charmed Labs is still adjusting to the changes the COVID-19 pandemic has brought. You can sign up to be notified when Vizy launches; we know we’ll be keen for a closer look once it does. Easier machine vision is always a good thing, because it helps free people to focus on clever ideas like machine vision-based tool alignment.

Cool to see this sort of thing becoming more accessible. Reminds me of https://xkcd.com/1425/

Glad to see it wasn’t just me.

Funny though, in that comic he claimed “research team and five years.” It’s been just about six. I suppose even imaginary projects run late.

Flickr made this 1 month after the comic:

https://code.flickr.net/2014/10/20/introducing-flickr-park-or-bird/

“Easier machine vision is always a good thing, because it helps free people to focus on clever ideas like machine vision-based tool alignment.”

Always? I’m sure there are plenty of dystopian stories that assume such technology.

I made almost this exact thing a year ago. TL;DR: I scrapped it and moved to better hardware because the RPi 4 is too slow.

Their web interface is far more refined, and it has very neat features like text messaging and OCR, but otherwise it looks extremely similar. Under the hood, I think it’s damn near identical.

I used a Raspberry Pi 4 with 4 GB of RAM and ran TensorFlow with MobileNet SSD. It’s slooooooow. Like, unusably slow. I tried optimizing it to death, but there’s only so much you can squeeze out of that hardware. From the video, they’re achieving framerates similar to what I was seeing. What you can’t see in the video is the 5-second delay from frame capture through TensorFlow inference to video out.

I ended up scrapping it and buying a few Jetson Nanos. They work great. I’m getting 20-30 fps now, and I don’t have to use quantized models, so the accuracy is better. Lag is 1 second or a little less. NVIDIA Jetsons are great, but I think there’s even more interesting hardware now. The Khadas VIM3 Pro looks very interesting, but I think it requires quantized models as well.

Have you tried a Vizi-AI? It has real power, and it includes a Node-RED app to act on the inference output really quickly.

That sounds like a great feature, but there’s still a hard limit on CPU horsepower. Adding Node-RED to the Raspberry Pi 4 isn’t going to speed up a 5-second inference delay. It might work well for a very slowly changing system, but probably not for electrocuting a certain species of bird, as another commenter suggested.

Deer. Paintball. Turret.

It’s easy to recognize a cardinal even in black-and-white video, but let’s see if it can tell an invasive starling from the many similar dark birds. If it passes that test, then wire up individually addressable HV perches for the doomed bird. Many bird lovers would like to feed only our native birds. A discriminating bird feeder indeed! Cats could eat the fallen starlings.

So then what are you going to get rid of the cats with? Gorillas?

There is no way the AI is even close to accurate enough to be able to accomplish that. If you watch a live video of the classification it jumps all over the place, even on near static images. It’ll outline the same item and call it a person, then a chair, then a suitcase… You’d end up with a big pile of every kind of dead bird under the feeder, maybe with a slightly higher percentage of the invasive species you’re targeting.

Do you have a link to such a video? I couldn’t find one. They do say it can identify species, but as always, their saying so isn’t proof that it’s consistent enough, nor does it tell us how big a difference it needs. Especially if the difference is something like the color under the wing or the shape of the head or tail, the AI would have to know that it can’t make an identification when it hasn’t seen the distinguishing part of the bird. And even if that were possible, it would need samples of each species in the first place.
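On the point about the classifier needing to know when it can’t tell: one common, if crude, approach is to abstain whenever the top score falls below a threshold. Here is a minimal Python sketch, assuming the detector returns per-species probabilities for a single bird crop. `decide_species` and the 0.8 threshold are hypothetical, not part of Vizy or TensorFlow.

```python
from typing import Dict, Optional


def decide_species(scores: Dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the top-scoring species, or None if the classifier isn't confident.

    Hypothetical helper: `scores` is assumed to hold per-species probabilities
    for a single bird crop (e.g. a softmax output); 0.8 is a placeholder threshold.
    """
    species, confidence = max(scores.items(), key=lambda item: item[1])
    # Abstain rather than guess when no class clearly wins, e.g. when the
    # distinguishing markings aren't visible in this frame.
    return species if confidence >= threshold else None


print(decide_species({"starling": 0.55, "grackle": 0.40, "cardinal": 0.05}))  # None
print(decide_species({"starling": 0.92, "grackle": 0.06, "cardinal": 0.02}))  # starling
```

Raw softmax scores are often overconfident, so a threshold like this only approximates “I haven’t seen the identifying markings”. It doesn’t replace having training samples for each species.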

No, it’s just any AI. They all do it. I’ve been developing with TensorFlow for a while now, and if you’re doing visual object classification, that’s just a thing you have to live with.

Take any example video on YouTube, and understand that these videos have been cherry-picked. They still jump around like crazy. A car might turn into a skateboard for a couple of frames, then go back to being a car. Then turn into a fridge for a few frames. Then disappear. Then be a car again. They just all do it.

https://www.youtube.com/watch?v=4eIBisqx9_g

Yeah, the detection systems still seem to lack the awareness that a person can’t magically turn into a chair in a couple of frames (well, I guess it depends on the frame rate, but you know, in a normal scenario), whereas a seated person can “become” a chair by leaving the area, just a lot more slowly, etc.
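The missing temporal awareness described above can be roughly approximated by smoothing each object’s label over the last few frames. Below is a minimal Python sketch of a sliding-window majority vote. It assumes some tracker already associates detections of the same object across frames. `LabelSmoother`, the window size, and the vote count are hypothetical choices made for illustration, not any library’s API.

```python
from collections import Counter, deque
from typing import Deque, List, Optional


class LabelSmoother:
    """Majority vote over one tracked object's most recent per-frame labels.

    Hypothetical helper for illustration; not part of Vizy, TensorFlow, or OpenCV.
    """

    def __init__(self, window: int = 5, min_votes: int = 3) -> None:
        # deque(maxlen=...) silently drops the oldest label once the window is full.
        self.history: Deque[str] = deque(maxlen=window)
        self.min_votes = min_votes

    def update(self, label: str) -> Optional[str]:
        self.history.append(label)
        best, votes = Counter(self.history).most_common(1)[0]
        # Only report a label once it dominates the recent window;
        # otherwise return None ("not sure yet").
        return best if votes >= self.min_votes else None


# Raw per-frame labels for one object, flickering like the car example above.
raw_labels: List[str] = ["car", "car", "skateboard", "car", "fridge", "car", "car"]
smoother = LabelSmoother(window=5, min_votes=3)
smoothed = [smoother.update(label) for label in raw_labels]
print(smoothed)  # [None, None, None, 'car', 'car', 'car', 'car']
```

With a 5-frame window and 3 required votes, the one-frame “skateboard” and “fridge” flickers are never reported. The trade-off is a few frames of delay before any label appears at all.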

My wife and I want a camera to watch and keep track of birds at the feeder.

I wonder if using various filters would improve the recognition rate? Plumage looks different under ultraviolet, for example.