Caring for elderly and vulnerable people while preserving their privacy and independence is a challenging proposition. Reaching a panic button or calling for help may not be possible in an emergency, but constant supervision or camera surveillance is often neither practical nor considerate. Researchers from MIT CSAIL have been working on this problem for a few years and have come up with a possible solution called RF Diary. Using RF signals, a floor plan, and machine learning, it can recognize activities and emergencies through obstacles and in the dark. If this sounds familiar, it's because it builds on previous research by CSAIL.

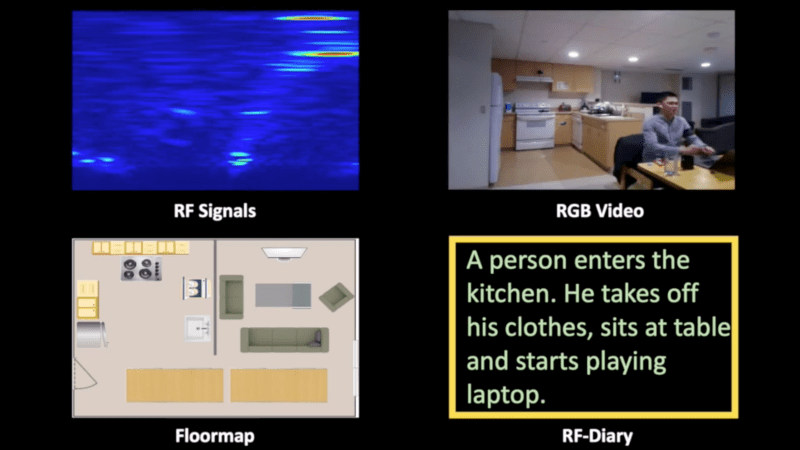

The RF system is effectively a frequency-modulated continuous-wave (FMCW) radar that sweeps across the 5.4-7.2 GHz band. Its limited resolution does not allow it to recognize most objects, so a floor plan supplies the size and location of features such as rooms, beds, tables, and sinks. This information helps the machine learning model interpret activities in the context of their surroundings. Training an effective activity captioning model requires thousands of training examples, and no such data set currently exists for RF radar. Massive video data sets are available, however, so the researchers used a "multi-modal feature alignment training strategy" that let them draw on video data to refine their RF activity captioning model.
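To get a feel for what a 5.4-7.2 GHz sweep can and cannot resolve, here is a minimal sketch using the textbook FMCW relations: range resolution ΔR = c / (2B), wavelength λ = c / f, and target range R = c·f_b·T / (2B) from the beat frequency f_b and sweep time T. The 1.8 GHz bandwidth follows from the band quoted above; the sweep time and beat frequency in the example are hypothetical values chosen for illustration, not parameters from the RF Diary work.

```python
# Back-of-the-envelope numbers for an FMCW radar sweeping 5.4-7.2 GHz.
C = 299_792_458.0  # speed of light in m/s

F_START_HZ = 5.4e9
F_STOP_HZ = 7.2e9
BANDWIDTH_HZ = F_STOP_HZ - F_START_HZ  # 1.8 GHz swept bandwidth


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest range separation (m) between two reflectors: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def wavelength(freq_hz: float) -> float:
    """Free-space wavelength (m) at a given frequency: c / f."""
    return C / freq_hz


def range_from_beat(beat_hz: float, sweep_time_s: float, bandwidth_hz: float) -> float:
    """Distance (m) to a reflector from the measured beat frequency.

    The chirp slope is B / T and the round-trip delay is 2R / c, so the beat
    frequency is f_b = (B / T) * (2R / c), which rearranges to R = c * f_b * T / (2B).
    """
    return C * beat_hz * sweep_time_s / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    print(f"range resolution: {range_resolution(BANDWIDTH_HZ) * 100:.1f} cm")
    print(f"wavelength: {wavelength(F_STOP_HZ) * 100:.1f}-{wavelength(F_START_HZ) * 100:.1f} cm")
    # Hypothetical 1 ms sweep and 60 kHz beat tone, for illustration only.
    print(f"target range: {range_from_beat(60e3, 1e-3, BANDWIDTH_HZ):.2f} m")
```

Run as a script, this reports a range resolution of about 8.3 cm and wavelengths of roughly 4.2-5.6 cm, which is why a floor plan is needed to supply object-level context the radar cannot see on its own.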

There are still some privacy concerns with this solution, but the researchers did propose some improvements. One interesting idea is for the monitored person to give an “activation” signal by performing a specified set of activities in sequence.
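The activation idea can be pictured as a small state machine over the stream of recognized activities. The sketch below is purely hypothetical: it assumes the captioning model emits discrete activity labels with timestamps, and the class name, labels, and `max_gap_s` timeout are illustrative inventions, not part of the researchers' proposal.

```python
from dataclasses import dataclass, field
from typing import Optional, Sequence


@dataclass
class ActivationDetector:
    """Hypothetical gate: monitoring starts only after the person performs
    `sequence` in order, with at most `max_gap_s` seconds between steps."""
    sequence: Sequence[str]
    max_gap_s: float = 30.0
    _index: int = field(default=0, init=False)
    _last_time: Optional[float] = field(default=None, init=False)

    def feed(self, activity: str, t: float) -> bool:
        """Consume one recognized activity at time t (seconds); return True
        when the full activation sequence has just been completed."""
        # Too long since the last matched step: start over.
        if self._last_time is not None and t - self._last_time > self.max_gap_s:
            self._index = 0
            self._last_time = None
        if activity == self.sequence[self._index]:
            self._index += 1
            self._last_time = t
            if self._index == len(self.sequence):
                self._index = 0
                self._last_time = None
                return True
        elif activity == self.sequence[0]:
            # An out-of-order first step restarts the match from step one.
            self._index = 1
            self._last_time = t
        # Any other (unrelated) activity is ignored.
        return False


if __name__ == "__main__":
    detector = ActivationDetector(["wave", "sit down", "stand up"], max_gap_s=30)
    events = [("walk", 0), ("wave", 5), ("sit down", 12), ("stand up", 20)]
    print([detector.feed(a, t) for a, t in events])
```

Ignoring unrelated activities between steps tolerates noisy recognition; a stricter design could reset on any unexpected label, trading convenience for fewer accidental activations.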

Radar is a complex but fascinating topic, and we've seen a number of excellent projects in the field, including a bicycle-mounted radar that can be used to generate aerial images and a Doppler radar module designed from first principles.

Thanks [Qes] and [Adam Conner-Simons] for the tip!

"A person enters the kitchen. He takes off his clothes, sits at table and starts playing laptop."

I don't know about you, but that line sounds super creepy to me. Sitting naked at a table while playing laptop…

Heck of a screensaver.

Like a banjo… https://www.youtube.com/watch?v=Ie5Vj2PVPVQ

As long as he’s not playing with his joystick!

Make sure you're gentle! Wouldn't want to snap a banjo string.

It's worrying that this could become refined enough to determine what someone is typing on a keyboard. In theory, you could just leave a small device anywhere in a room of any layout, and as long as the target is the only one typing, the information relayed would be all that is needed to determine what they are typing.

I don't think so. The wavelengths of the frequencies used wouldn't allow you to resolve features as small as fingers, even if the sensors and machine learning were up to the task.

This is brilliant work and badly needed. The number of tragic stories I have heard about people who were found a bit late is shocking. So many people live alone these days, and some are so forgotten that their bodies are not found for months. Anything that can provide them with a technological guardian angel is a very good project indeed!

Why not just use a very-low-resolution camera? No privacy concerns, more mature technology.

or a few strategically placed motion sensors