Parallel computing is a fairly complex subject, and something many of us only have limited hands-on experience with. But breaking up tasks into smaller chunks and shuffling them around between different processors, or even entirely different computers, is arguably the future of software development. Looking to get ahead of the game, many people put together their own affordable home clusters to help them learn the ropes.

As part of his work with decentralized cryptocurrency, [Jay Doscher] recently found himself in need of a small research cluster. He determined that the Raspberry Pi 4 would give him the best bang for his buck, so he started work on a small self-contained cluster that could handle four of the single board computers. As we’ve come to expect given his existing body of work, the final result is compact, elegant, and well documented for anyone wishing to follow in his footsteps.

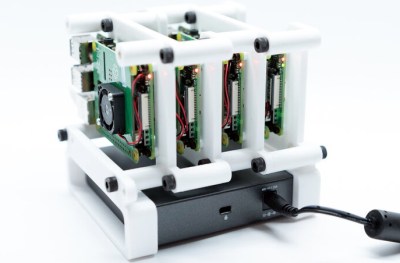

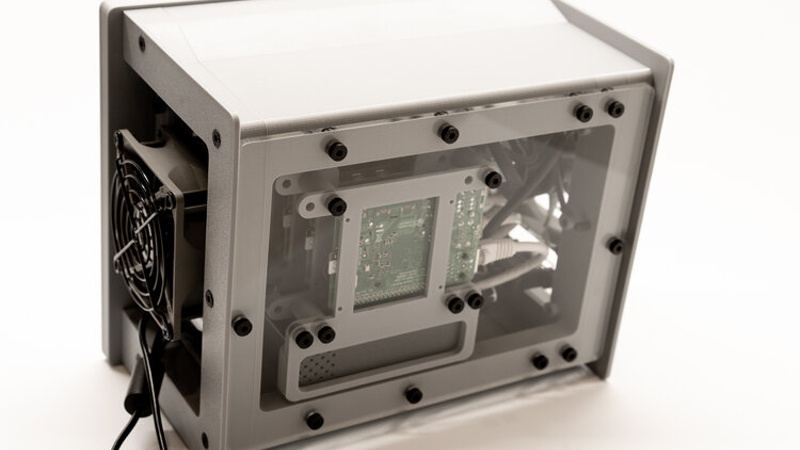

Outwardly the cluster looks quite a bit like the Mil-Plastic that he developed a few months back, complete with the same ten-inch Pimoroni IPS LCD. But the internal design of the 3D printed case has been adjusted to fit four Pis with a unique staggered mounting arrangement that makes for a unit considerably more compact than others we’ve seen in the past. In fact, even if you didn’t want to build the whole Cluster Deck as [Jay] calls it, just printing out the “core” itself would be a great way to put together a tidy Pi cluster for your own experimentation.

Thanks to the Power over Ethernet HAT, [Jay] only needed to run a short Ethernet cable between each Pi and the TP-Link five-port switch. This largely eliminates the tangle of wires we usually associate with these little Pi clusters, which not only looks a lot cleaner, but makes it easier for the dual Noctua 80 mm fans to circulate cool air inside the enclosure. Ultimately, the final product doesn’t really look like a cluster of Raspberry Pis at all. But then, we imagine that was sort of the point.

Of course, a couple of Pis and a network switch is all you really need to play around with parallel computing on everyone’s favorite Linux board. How far you take the concept after that is entirely up to you.

Got to wonder how annoying the fans on those PoE HATs are going to be.

I’ve got three of them, and when they’re all on it’s really irritating. The board should have been designed with a larger fan; there’s plenty of room if it had been laid out a bit differently.

Add this to your /boot/config.txt:

```
dtparam=poe_fan_temp0=55000,poe_fan_temp0_hyst=5000,poe_fan_temp1=66000,poe_fan_temp1_hyst=5000
dtparam=poe_fan_temp2=71000,poe_fan_temp2_hyst=5000,poe_fan_temp3=73000,poe_fan_temp3_hyst=5000
```

I got it from here:

https://www.raspberrypi.org/forums/viewtopic.php?t=221639
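The poe_fan_tempN values are trip temperatures in millidegrees Celsius (55000 = 55 °C), and each poe_fan_tempN_hyst is how far the temperature has to fall back below that trip before the fan slows down again. As a rough mental model only (a simplified sketch, not the PoE HAT driver's code; on a real Pi the stepping is done by the Linux kernel's thermal framework, which may move one level per polling interval rather than jumping), the four trips give five fan levels that behave roughly like this:

```python
# Trip points copied from the dtparam lines above: (temperature, hysteresis),
# both in millidegrees Celsius. This is a simplified model, not the driver.
TRIPS = [(55000, 5000), (66000, 5000), (71000, 5000), (73000, 5000)]


def next_level(temp_mc: int, level: int) -> int:
    """Return the new fan level (0 = off, 4 = fastest) for one temperature reading."""
    # Step up through every trip the temperature has reached.
    while level < len(TRIPS) and temp_mc >= TRIPS[level][0]:
        level += 1
    # Step down only once the temperature is below (trip - hysteresis).
    while level > 0 and temp_mc < TRIPS[level - 1][0] - TRIPS[level - 1][1]:
        level -= 1
    return level


level = 0
history = []
for reading in [50000, 56000, 52000, 49000, 72000, 74000, 67000, 60000]:
    level = next_level(reading, level)
    history.append(level)
print(history)  # [0, 1, 1, 0, 3, 4, 3, 1]
```

Note the third reading: at 52 °C the fan stays on at level 1 even though it is below the 55 °C turn-on point, because it hasn't yet dropped below 55 − 5 = 50 °C. That gap is what stops the fan from rapidly cycling on and off around a single threshold.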

The fans on each side of the enclosure aren’t speed controlled; I have that listed in my article as a to-do, since the Noctuas are running at full speed and are a little loud for a desk. If you’re using the bare internal frame, the config above should be fine.

Design and print an air duct for one bigger, slower fan and lose the individual fans.

I was leaning that way, though I prefer passive cooling where possible. Perhaps the Pi PCBs could be put on the same plane, so that large passive heatsinks fit easily through the PoE HATs’ fan openings. Or the rack mounting points could be made from aluminium/copper sheet and/or heatpipe components to carry the heat away to a large passive heatsink on the side panel.

However, this is a really neat-looking and functional solution, built from off-the-shelf parts that make it easy to build, so it’s a winner in my book. I’ve been meaning to build a cluster for a while, just to play with, and this is a neat and relatively cheap way of doing so (and since the Pi 4 is actually quite high-performance, it should get real use, not just serve as a learning tool).

I challenge you to design it! I think you may find it more satisfying, challenging, and problematic to do so while making something accessible to others. “Design and print” may be a good place for you to start.

Obligatory: “Imagine a Beowulf cluster of these!”

But seriously: nice and neat looking packaging.

This is a genuine question from someone who knows very little about cluster computers:

What do you run on them and how?

I assume each one has its own SD card and one has the master image or whatever to run the rest, but what OS do you use and what do you run on it?

Does it behave like an Ubuntu server?

Retropie?

I just don’t know enough to understand more than the cool factor.

It really depends on the workloads. For distributed computing you need to make sure that there’s a way to divide up a complex task for each node or worker. I can really only speak to how I understand the Millix transaction process, where the Millix currency processes very small transactions (hopefully) very fast. This differs from Bitcoin, which requires some computational work to process a transaction, and that work gets harder with more nodes competing.

This deck was built to let four nodes work independently: each one is still processing the same shard, but each one adds to the computational power of the Millix network.

Other systems could easily be used on this same hardware platform, though: there are four nodes, and one has an extra SSD to share files with the other nodes if needed.
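To make “divide up a complex task for each node or worker” concrete, here is a generic split/compute/combine sketch. It is not how Millix works, and it runs on a single machine: Python’s multiprocessing.Pool supplies four worker processes standing in for the four Pis, and the sum-of-squares task is an arbitrary example. On a real cluster the same pattern would usually be spread across machines with a tool such as MPI, which isn’t shown here.

```python
from multiprocessing import Pool


def split_range(start: int, stop: int, parts: int) -> list[tuple[int, int]]:
    """Divide [start, stop) into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(stop - start, parts)
    chunks = []
    lo = start
    for i in range(parts):
        # The first `extra` chunks take one leftover item each.
        hi = lo + size + (1 if i < extra else 0)
        chunks.append((lo, hi))
        lo = hi
    return chunks


def work(chunk: tuple[int, int]) -> int:
    """The per-worker task: a sum of squares over one chunk (arbitrary example)."""
    lo, hi = chunk
    return sum(n * n for n in range(lo, hi))


if __name__ == "__main__":
    chunks = split_range(0, 1_000_000, 4)  # one chunk per "node"
    with Pool(processes=4) as pool:
        partials = pool.map(work, chunks)  # scatter the chunks, gather results
    total = sum(partials)  # combine step
    assert total == sum(n * n for n in range(1_000_000))
    print(total)
```

The work splits cleanly here because each chunk is independent. Workloads where nodes must constantly exchange intermediate results are much harder to distribute, which is why the answer to “what do you run on it?” really does depend on the workload.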