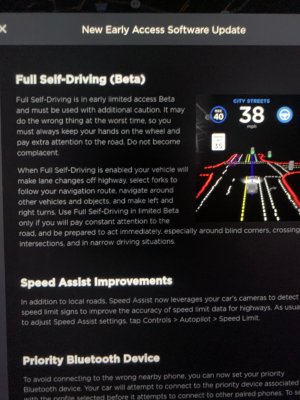

Self-driving technology is a holy grail that promises to forever change the way we interact with cars. Thus far, there’s been plenty of hype and excitement, but vehicles that fully remove the driver from the equation have remained far off. Tesla have long positioned themselves as a market leader in this area, with their Autopilot technology allowing some limited autonomy on select highways. However, in a recent announcement, they have heralded the arrival of a new “Full Self Driving” ability for select beta testers in their early access program.

Taking Things Up A Notch

The new software update further extends the capabilities of Tesla vehicles to drive semi-autonomously. Despite the boastful “Full Self Driving” moniker, or FSD for short, it’s still classified as a Level 2 driving automation system, which relies on human intervention as a backup. This means that the driver must be paying attention and ready to take over in an instant, at all times. Users are instructed to keep their hands on the wheel at all times, but predictably, videos have already surfaced of users ignoring this measure.

The major difference between FSD and the previous Autopilot software is the ability to navigate city streets. Formerly, Tesla vehicles were only able to self-drive on highways, where the more regular flow of traffic is easier to handle. City streets introduce far greater complexity, with hazards like parked cars, pedestrians, bicycles, and complicated intersections. Unlike others in the field, who are investing heavily in LIDAR technology, Tesla’s system relies entirely on cameras and radar to navigate the world around it.

A Very Public Beta Test

Regulations are in place in many jurisdictions to manage the risks of testing autonomous vehicles. Particularly after a pedestrian was killed by an Uber autonomous car in 2018, many have been wary of the risks of letting this technology loose on public roads.

Tesla appear capable of shortcutting this requirement, by simply stating that the driver is responsible for the vehicle and must remain alert at all times. The problem is that this option ignores the effect that autonomous driving has on a human driver. Traditional aids like cruise control still require the driver to steer, ensuring their attention is fully trained on the driving task. However, when the vehicle takes over all driving duties, the human in the loop is left with the role of staying vigilant for danger. Trying to continuously concentrate on such a task, while not being actually required to do anything most of the time, is acutely difficult for most people. Ford’s own engineers routinely fell asleep during testing of the company’s autonomous vehicles. It goes to show that any system that expects a human to be constantly ready to take over doesn’t work without keeping them involved.

Cruise and Waymo Going Driverless at the Same Time

Tesla’s decision to open the beta test to the public has proved controversial. Allowing the public to use the technology puts not just Tesla owners, but other road users at risk too. The NHTSA have delivered a stern warning, stating it “will not hesitate to take action to protect the public against unreasonable risks to safety.” Of course, Tesla are not the only company forging ahead in the field of autonomous driving. GM’s Cruise will be trialing their robotic vehicles without human oversight before the year is out, and Alphabet’s Waymo has already been running an entirely driverless rideshare service for some time, with riders held to a strict non-disclosure agreement.

The difference in these cases is that neither Cruise nor Waymo is relying on a human to remain continually watchful for danger. Their systems have been developed to a point where regulators are comfortable allowing the companies to run the vehicles without direct intervention. This contrast can be perceived in two ways, each of which has some validity. Tesla’s technology could be seen as taking the easy way out, holding a human responsible to make up for shortcomings in the autonomous system and protect the company from litigation. Given their recent updates to their in-car camera firmware, this seems plausible. Alternatively, Tesla’s approach could be seen as the more cautious choice, keeping a human in the loop in the event something does go wrong. However, given that evidence is already prevalent that this doesn’t work well in practice, one would need to be charitable to hold the latter opinion.

A conservative view suggests that as the technology rolls out, we’ll see more egregious slip ups that a human driver wouldn’t have made — just like previous iterations of Tesla’s self driving technology. Where things get hairy is determining if those slip-ups deliver a better safety record than leaving humans behind the wheel. Thus far, even multiple fatalities haven’t slowed the automaker’s push, so expect to see development continue on public roads near you.

Maddeningly irresponsible. Roads are a shared resource, and I didn’t sign up to have people texting while their car uses a camera to decide what color a light is, or whether a stop sign exists.

It’s also not acceptable for them to push liability onto the customer. If you tell them they have a full-self-driving autopilot, they’re going to act like they have one. Everybody involved in this decision-making process knew damned well that these beta testers won’t be keeping their hands on the wheel and paying attention the whole time, especially once they get comfortable with the system.

I just hope they don’t kill or maim too many innocent people.

I couldn’t have said it better myself. The whole idea of self-driving vehicles on public roads is irresponsible, dangerous, and stupid beyond all belief. I can’t understand why anyone with a modicum of intelligence thinks this is a good idea. Laziness is the only reason I can think of, as this technology only serves the people that can’t be bothered to drive responsibly, and companies whose entire sales model is based on convincing people who don’t know better to buy their garbage based on fabulous but flawed technological promises (Tesla, for example).

I’ve seen far too many computer glitches and crashes to ever trust a computer to pilot a 2 ton behemoth along at 70 mph a foot or two from actual responsible drivers.

People who can’t drive responsibly? Gee, you’re talking about a lot of the driving public.

If you choose not to drive drunk, not to speed, and not to fiddle with your cellphone while driving, you’re already reducing your risk of accident by 80% compared to the “average driver”. If you also choose not to drive in terrible weather and stop by the side of the road when it starts pouring down cats and dogs, you’d be reducing your risk of accident by 99%.

That being the case, if you enable a “self-driving” AI that is twice as good as the average driver, you would be putting yourself into a terrible risk, because the AI would be effectively part-drunk, part-distracted, and bad at following the rules of the road.

The trick is that the majority of drivers are better than the average driver, by a large margin, because the minority that causes the majority of accidents are really terrible at driving.
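The arithmetic behind this argument can be sketched with made-up numbers. The population split and accident rates below are illustrative assumptions, not figures from the thread or from any study. The sketch only shows how a small high-risk minority can pull the mean above what most drivers experience, and why an AI that is “twice as good as the average driver” could still be worse than a careful one.

```python
# Hypothetical population: accident rates per million miles (invented numbers).
careful = [1.0] * 90      # 90 drivers with a low accident rate
reckless = [20.0] * 10    # 10 drivers with a very high accident rate
population = careful + reckless

# The mean is dragged upward by the reckless minority.
mean_rate = sum(population) / len(population)

# Fraction of drivers whose own rate is below the population mean.
share_safer = sum(r < mean_rate for r in population) / len(population)

# An AI "twice as good as the average driver" has half the mean rate.
ai_rate = mean_rate / 2

print(f"mean rate: {mean_rate:.2f}")
print(f"share of drivers safer than the mean: {share_safer:.0%}")
print(f"AI rate: {ai_rate:.2f} vs careful driver: {careful[0]:.2f}")
```

With these invented numbers, 90% of drivers are safer than the mean, and the “twice as good as average” AI still has a higher rate than any of the careful drivers. Different assumptions would give different margins.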

So we can save a lot of lives by putting those dangerous drivers into self driving cars!

Yes, only trouble is identifying who they are.

Then there’s also the issue that every driver starts dangerous, and becomes safe as they drive more miles. If you don’t let them, they will never learn.

Besides, if you were using the FSD to eliminate the bad drivers, you would enable it when you want to be drunk or text and drive, or sleep behind the wheel, or navigate a rainstorm at night when you (and it) can’t see s*** – which is exactly how you’re not supposed to use it since you are still responsible for keeping your hands on the wheel.

> stop by the side of the road when it starts pouring down cats and dogs, you’d be reducing your risk of accident by 99%

I doubt that; the chance of you causing an accident shoots way up when you try to get back out on the road. IMHO by far the safest thing you can do is increase your following distance, turn on your 4 way flashers if visibility is low, obviously slow down, but stay in your lane and move slowly along with traffic.

If the rain is coming down so hard that there’s a risk of hydroplaning, you can hit a deep puddle that wipes you out anyways, or someone else rear-ends you when the car slows down suddenly, or you hit the puddle on one side only and spin out. Other people will keep on trying to drive as normal, so there’s a great chance you’re heading into a pileup.

“If you also choose not to drive in terrible weather and stop by the side of the road when it starts pouring down cats and dogs, you’d be reducing your risk of accident by 99%”

It must be nice to be independently wealthy so that you don’t have to be anywhere on a set schedule despite conditions. I guess the rest of us can just appreciate that you staying home makes one less person on the road during bad weather that we need to contend with.

>you don’t have to be anywhere on a set schedule

And then there’s idiots who just have to go somewhere even though they’re braving a snowstorm on summer tires. I’d rather lose my job than my life.

FSD (level 3) can only be achievable once widespread testing can be implemented. Even the current cars already have a better track record than human drivers. I’ve read somewhere that if you want FSD with the current legislation you’ll need to wait for about 80 years with 10x the amount of prototypes on the road to prove that FSD is better than a human.

> Even the current cars already have a better track record than human drivers.

No they don’t. Last I read it’s 9.1 accidents per million miles vs. 4.4 for humans. The trick is that you count accidents, not just what is legally accountable to be the car’s fault, because the self-driving vehicles tend to get away with causing rear-ending accidents on the technicality that it’s always the other guy’s fault for driving “too close”.

It’s the longer term goals that are motivating this investment. The autonomous personal vehicle is just an odd stepping stone.

That’s what people say when they try to rationalize Elon Musk’s business choices – that all this serves some ultimate purpose that the critics are discounting – but what if it’s just him running his mouth?

Musk is playing into the fantasies of the “Jetsons” generation who grew up in the era of great technological advances, have the money and are so desperate to see their dreams of flying cars and space rockets come true that they’ll believe anyone who makes the promise.

“Musk is playing into the fantasies of the “Jetsons” generation ”

Beats just giving up, laying down and making no progress like their kids seem to have done.

How does getting cheated out of your money beat anything?

You’re still under the illusion that what Musk does is progress.

A car for many with kids, work, or hobbies like mine, fishing, needs to be automous as I need my car loaded with gear . i have no desire to jackass my rods and everything else into a robocar. Just let big brother know your every move on top of the huge inconvenience.

Millions of responsible drivers die every year. If self-driving ever does become a reliable and mature technology then it can potentially reduce that figure significantly. Besides, driving is a chore that is sadly necessary in some parts of the world, let a machine handle it like we do with other mundane chores.

This “full self driving” is a stupid and irresponsible name though and surely against some advertising standards.

– Glad I’m not the only one, well put both of you. And yes, Tesla should have full responsibility for this crap in my opinion, and should not be allowed to punt it to a driver (or the driver’s insurance) if one of their cars runs someone over (or causes other bodily injury) while in ‘FSD’. I guess we’ll unfortunately likely see how that plays out in court sooner or later if a Tesla turns someone (other than its occupants) into a quadriplegic. It doesn’t seem like ‘the driver was supposed to be able to take over at any instant’ would pass the smell test with a judge, but I’m by no stretch of the imagination a lawyer.

+1

Wait until you find out about *other drivers*!

If this makes more people aware that cars are dangerous, all the better. Cars ARE dangerous, no matter whether it’s a person or a computer in the driver’s seat. It’s ridiculous that we’ve allowed them to become our standard mode of transportation.

Do you have stats on how many people were trampled by horses on the road before cars came along? How many carriage passengers were killed by thieves and bandits on the road? How many people got sick from the constant exposure to horse dung? Cars may kill a lot of people but they are much safer than what they replaced.

As if we could ever go back to what the cars replaced.

New things on the roadway? Obviously time to re-enact Red flag laws.

And people wonder why I get so angry about Tesla. Yeah ok so we put a bunch of barely passable death machines on the road. Buuutttt we pushed all liability to our customers so we are in the green baby gotta make that money money money.

In all seriousness, this is the same problem I have with drunk drivers. You want to be an idiot, crash into a tree, go for it I don’t care. When you risk the innocent lives of others around you, driving across the median and hitting a family van, you’re a pile of human waste.

Exactly. Tesla through a school area, kindergartener runs out from in front of a bus that is loading, etc. Sure it *can* happen with a human driver, but humans have a whole different situational awareness than these machines do. And in this case it is a child’s death by a software/computer shortcoming, rather than a human error. To err is human, machines aren’t granted this luxury. I hope something like this doesn’t happen, but it seems inevitable, and if it does, I’d be a fan of the affected suing Tesla out of existence for trying to profit off of lazy humans who can’t be bothered to drive responsibly themselves.

Yes, it is possible that a software bug hit at that moment would cause the car to continue along and kill the kid.

But.. if a kid runs out into the middle of the road without warning who has the faster reaction time? A computer or a human? Who has eyes that focus in one direction at a time and a brain that only kind of sort of multitasks and who can have sensors of multiple kinds placed all around a chassis and monitor them all with task switching so fast that it is for all intents and purposes simultaneous?

It’s a bad situation with no guarantees the kid is going to make it but I’m not convinced the greatest odds come with the human driver.

> if a kid runs out into the middle of the road without warning who has the faster reaction time?

The one which correctly understands that it’s a child in the first place. The AI is liable to not make that judgement in the first place, even if it could react in time.

Computer vision is still very very primitive and runs at best at 95% accuracy, but the algorithm has to be tuned down to avoid false negatives, so the AI doesn’t start to “hallucinate” things and drive erratically. That’s why the Uber algorithm ran over a person, which the computer saw but didn’t react to.

Sorry, the algorithm is tuned down to avoid false positives, which then causes false negatives.

The fundamental problem is that the computer is trained to identify “a child” by showing it a million pictures of children, and then it comes up with statistical correlates of what a child looks like. If the situation doesn’t fit the mold, then it doesn’t do anything. It doesn’t have a rule that says “if unsure, brake”, because it would be braking all the time, because it only understands a very limited set of things.

So the AI will happily drive a child over if they’re holding an umbrella and wearing yellow boots, because that wasn’t in the training set and the umbrella is obstructing the kid’s head, so it doesn’t meet the statistical threshold for anything the car knows. It’s not about reaction times – the car is fundamentally too stupid to drive.
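The threshold trade-off described in this comment and its correction (tuning down false positives raises false negatives) can be sketched as follows. The scores and labels are invented for illustration, and the simple score threshold is an assumption about how such a detector might be tuned, not a description of Tesla’s or Uber’s actual software.

```python
# Hypothetical detector outputs: (confidence score, is_really_a_pedestrian).
detections = [
    (0.95, True), (0.80, True), (0.65, True), (0.55, True),
    (0.70, False), (0.45, False), (0.30, False), (0.10, False),
]

def count_errors(threshold):
    """Return (false_positives, false_negatives) at a given threshold."""
    # False positive: the detector fires on something that is not a pedestrian.
    fp = sum(1 for score, real in detections if score >= threshold and not real)
    # False negative: the detector stays silent on a real pedestrian.
    fn = sum(1 for score, real in detections if score < threshold and real)
    return fp, fn

for t in (0.4, 0.6, 0.75):
    fp, fn = count_errors(t)
    print(f"threshold={t}: false positives={fp}, false negatives={fn}")
```

On this toy data, raising the threshold from 0.4 to 0.75 removes both phantom detections but misses two real pedestrians, which is the direction of the trade-off the commenter describes.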

The Autopilot isn’t their only sin. Tesla is knowingly using NCA batteries which are flammable enough that they will burn you alive in the car in an accident if you are knocked unconscious or trapped otherwise. No help can get to you before the whole thing is just a ball of fire.

They had a lawsuit over the matter thrown at them recently with the teenager who was killed speeding after Tesla’s maintenance took out the speed limiters that were requested by the parents, but as far as I know they settled it out of court with money.

You are aware that petroleum powered vehicles have a big tank full of highly explosive gas, and that many people burn to death every year from spilled fuel?

Yes. Gasoline however does not spontaneously combust when the tank or fuel lines are damaged, and it doesn’t burn nearly as fast.

https://saferide4kids.com/blog/car-fires-vehicle-submersion/

“A car fire was a contributing factor but not necessarily primary cause of death in 0.66% of fatal crashes and only 0.0051% of all car crashes.”

It is actually pretty rare that a gasoline powered car ends up in fire in a crash.

Tesla’s response on the question of why their cars burned their passengers in a crash:

“Based on the evidence, in every such case we know of involving a Tesla vehicle, the occupant would have died whether the crash resulted in a fire or not.”

I suppose free cremation could be a selling point…

I couldn’t agree more. While automated cars are an amazing technological achievement (when they work), I am completely against them. I actually enjoy driving though… well… except for my old commute from Seal Beach to West Los Angeles. That was absolute cancer.

I like cars which are drive-by-cable, manual transmission, and don’t have features which take control away from the driver like auto-braking, etc. I still vividly remember what happened to a journalist named Michael Hastings in 2013. He had mentioned earlier in the day that he thought someone had done something to his car while parked at his apartment. He even tried borrowing a friend’s car because he was fearful that he was being targeted by the government. The last anyone saw of Hastings was a red light camera video of his car doing 100mph or so down Highland where his Mercedes hit a palm tree dead on and exploded. Given the statements earlier in the day about someone messing with his car, and how he had pissed off some powerful people in the Obama administration, I have little doubt that his car was remote controlled into the tree.

This L2 pseudo FSD will be like watching the output from a security camera. Your brain will wander away and suddenly you are in a crash. Who is responsible? You are. Thanks a lot, I would rather drive my car by myself and own my own mistakes than end up in jail for the mistake of somebody else.

Agreed. I’ve always said: level 0 or level 5 autonomous vehicle. This is one rare case where extremes are the best choice and pretty much anything in between is horribly wrong.

So you advocate for banning cruise control and anti lock brakes? How terrible these things are, they thwart the driver’s intentions to control the car automatically. Even the automatic choke and the engine computer are getting between you and the car, preventing you from having control. Ban them all!

Nice trolling there buddy.

I laugh at people who think they have control, poor delusional suckers. The car controls you before you even get in, between paying for it and maintaining it, you are the slave and the car is the master.

False dilemma.

Cruise control and ABS still keep you active in the loop – although it has been shown that ABS and ESC etc. don’t much reduce the accident rate more than provide the illusion of safety and induce riskier driving.

Do I? Cruise control is like level 0,1. Did I say “ban” once?

What’s your major malfunction by the way?

“relies on human intervention as a backup”

Does the intervening human have to actually be in the car? How about a call center somewhere with under-paid, over-worked humans, each monitoring a bunch of vehicles. Sure, there will be lag, but that is what the onboard computer is for. Each call center (drive center?) worker is in a driving-sim-like setup and can over-ride one of his vehicles when required. If need be, his other vehicles can be handed off to others.

GTA for real, once the hackers get going or the call center is overrun by nefarious actors…

It could well be a mechanical turk as it is. Way cheaper to implement. (Soon in Akismet: “select all red streetlights.” Cue corresponding xkcd.)

Does anyone else think that they are using these bull$h1+ names because they literally want to get away with murder? By pushing all negligence on the driver, they have some really smart marketing people and lawyers – It is a company that I will be avoiding.

-Yep, I just hope some others find some really smart lawyers also so they don’t get away with it.

Approaching concrete wall at 100 km/h …

… your car computer suffered a software problem and will now restart. Please be patient…

Yeah and humans Never have strokes or epilepsy or any other sort of medical problem that would cause them to lose control, they never get distracted by their phones or their passengers and the 30000 deaths every year on the road is apparently fake news

Actual human failure is responsible for less than 1% of the road accidents. Most of it is down to human CHOICE to drive dangerously or pay no attention. About one in five accidents is caused by poor road/weather conditions that has little to do with the driver.

Plus, when a human has a stroke or epilepsy etc. it’s a random risk. When the Autopilot fails, it is not a random risk – it doesn’t make random errors. It is a failure of the programming to cover some edge case, or its fundamental inability to process information (dumb AI) correctly to avoid the accident.

Failing to make the right choice is a failure. Failure to take road conditions into account is a failure. These things are all human failure.

Yes, but that is semantics. The choice is yours when you start driving. When the autopilot drives, it is not.

Attributing human choices as human failure, to argue that the autopilot doesn’t have to do any better, is forcing stupid choices on people who would not make them.

Imagine if you used the same argument for Robocop.

You would be saying, “Well the average police officer takes bribes and sometimes kills black people, therefore it doesn’t matter if Robocop sometimes shoots random people.”

Only the driver makes these choices, the passengers have no choice.

True, and the responsibility is still on the driver.

Although the passengers have a role in not stepping in to a car driven by a drunk or a person who keeps texting behind the wheel.

Dude…

They kill far more white people than blacks. But since you’ll likely bring up how blacks are only 13% of the population so per capita…. blah blah.

Well to that i would respond.. ok, fair point. Since that 13% commits 53% of all murders that propensity to violence couldn’t have anything to do with it.

All that is irrelevant to the point, Andrew.

On the argument that autonomous cars are dangerous to other users, the most important consideration should be: does the autonomous car overall cause less (severe) accidents than human driving? If so, let’s go forward by all means. One could even state that if the autonomous car has the *potential* to cause fewer accidents in the long run, we may accept some increase in accidents from autonomous cars in the short term, as long as it speeds up the process of getting the safer autonomous cars up and running asap.

Some numbers seem to show autonomous driving already is superior to human driving in many cases. I’d also say the potential for super-human performance is definitely there. Lastly, I really don’t care if I get rammed by a person or by an autonomous car. The important thing here is to decrease the amount of ramming!

“The most important consideration should be: does the autonomous car overall cause less (severe) accidents than human driving?”

Tesla owners that use “AutoPilot” have a lower rate of collisions than those who did not: https://www.slashgear.com/tesla-autopilot-safety-report-q1-2019-autonomous-progress-10572659/

Could this be because AutoPilot only works on freeways, where crashes are less likely?

And because Tesla cars are almost brand new with the latest driving assists anyways, without mechanical failures, and predominantly driven by older middle-aged men who are less prone to crashing and DWI offenses in the first place.

And because the Autopilot gives up at the first sign of trouble and demands the driver to take over, after which it is no longer the system’s fault if the car crashes.

Didn’t read the article, did you? Tesla reported that, comparing _Teslas_ on AutoPilot with _Teslas_ not on AutoPilot, the AutoPilot users had fewer crashes than the non-AutoPilot users.

> _Teslas_ on AutoPilot and _Teslas_ not on AutoPilot

Still not the same. Location, time of day, weather conditions, etc. are not even remotely similar for the two cases because of the restrictions on where and how the autopilot can be engaged.

And again, if the autopilot runs into trouble and throws its “hands” up, leaving the wheel to the driver, and the driver then crashes, that is counted as driver fault, not autopilot fault.
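The first objection above (the two groups drive under different conditions) can be illustrated with a small stratified comparison. All crash counts and mileages are invented, and the highway/city split is an assumption chosen to mirror the claim that the Autopilot is mostly engaged on highways. It is not Tesla’s data.

```python
# Hypothetical (crashes, million miles) by road type; all numbers are invented.
data = {
    "autopilot": {"highway": (9, 9.0)},                       # engaged on highways only
    "manual":    {"highway": (4, 4.0), "city": (18, 6.0)},    # driven everywhere
}

def rate(crashes, miles):
    """Crashes per million miles."""
    return crashes / miles

def overall(mode):
    """Pooled crash rate for a mode, ignoring road type."""
    crashes = sum(c for c, _ in data[mode].values())
    miles = sum(m for _, m in data[mode].values())
    return rate(crashes, miles)

print("pooled autopilot:", overall("autopilot"))
print("pooled manual:   ", overall("manual"))
print("highway only, autopilot:", rate(*data["autopilot"]["highway"]))
print("highway only, manual:   ", rate(*data["manual"]["highway"]))
```

In this toy example the pooled numbers make the Autopilot look more than twice as safe, yet on the only road type where both are used the rates are identical. Whether the real data behaves this way cannot be told from the report alone.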

Tesla has made two arguments in the past: that the Autopilot has fewer accidents than the general public, and now that the Autopilot is safer than the average Tesla driver. In both cases, they perform the same sleight of hand by making irrelevant comparisons.

And the most irrelevant comparison of all: if the driver deliberately chooses to drive unsafely, like the kid who got killed by speeding into a concrete barrier in a Tesla, why does that count against the average driver who does not have a death wish?

You see, stuffing the statistics up with the worst most irresponsible drivers again stacks the odds heavily in favor of the Autopilot, even though most drivers would never drive like that.

– Also it states – “when Autopilot was engaged”… so if you’re cruising along with autopilot, and autopilot says ~’Help! I’m tapping out!’ and disengages itself – and a crash ensues, seems you could easily count this accident as ‘autopilot not engaged’?

There’s no other way Tesla would count it.
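How much the counting rule matters can be sketched with a hypothetical crash log. The gaps between disengagement and impact, and the attribution windows tried, are assumptions for illustration. Nothing here is taken from Tesla’s actual methodology.

```python
# Hypothetical crash log: seconds between Autopilot disengaging and impact.
# 0.0 means Autopilot was still engaged at impact;
# None means Autopilot was never engaged on that trip.
crashes = [0.0, 0.0, 1.5, 3.0, 4.0, None, None, None]

def attributed_to_autopilot(window_s):
    """Count crashes where Autopilot was engaged within window_s seconds of impact."""
    return sum(1 for gap in crashes if gap is not None and gap <= window_s)

for window in (0, 2, 5):
    n = attributed_to_autopilot(window)
    print(f"window={window}s: {n} of {len(crashes)} crashes counted against Autopilot")
```

With a zero-second window only the two crashes where the system was still engaged are counted against it; widening the window to five seconds counts five. The same log supports very different headline figures depending on a rule the reader usually cannot see.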

Plus, on the face of it, what the autopilot does for the average driver is effectively act as a speed limiter and an automatic braking system – all of which exists already in other cars. This does away with the cases where the driver wasn’t paying attention and/or forgot to slow down when the speed limit changes.

That is the 20% feature that has 80% of the effect, and the other 80% of the system that would enable it to drive safely in all conditions is still very much incomplete.

>The most important consideration should be: does the autonomous car overall cause less (severe) accidents than human driving?

That is assuming that every driver is the average driver. The most important question is: when a person turns the system on, is it more or less likely to harm them than when they are driving themselves?

There are some people whom the Autopilot would absolutely help, but the vast majority of the driving public would more likely be harmed than helped by the system, because it is effectively distributing the risk from the incompetent and negligent drivers to everyone equally.

A car crashing into mine due to irresponsible driving is *also* distributing the outcome of incompetent and negligent drivers to me. Even though I drive responsibly I can still *be* hit by someone, the result being the same. So I do not fully agree with that notion. People who crash their cars are not just damaging themselves; they usually collide with something, and on ever busier roads that is usually another traffic participant.

If I can lower the chance to get hit by shitty driver and slightly increase the risk I hit something, this can still be a valid tradeoff.

I’m surprised by the number of naysayers today.

I love Howard Tayler’s vision of self-driving vehicles in the future. Over hundreds of iterations, they become so adept at collision avoidance that the protagonists can’t CAUSE a crash when they want to.

I’d love to dive into how Tesla has implemented feedback and machine learning. I can imagine a system where variances between the Autopilot and human driver decisions are fed back and analyzed for future improvements to the software. Did the driver have to manually correct? Logged. Did the driver make a mistake the AP would have avoided? Logged. I anticipate that once enough smart vehicles are on the road, they’ll network to flock intelligently and avoid unpredictable meat drivers. Like many other phenomena, once a certain critical mass is reached the problems become simpler to model and prevent.

I don’t trust people, we’re incredibly easy to distract and incredibly slow. Why would anyone prefer a human’s reaction time?

It’s not the human’s reaction time but the human’s highly evolved and developed situational awareness. The machine doesn’t care; it’s got no desire to avoid crashes beyond getting the ‘best’ answer, and it has not got that human intuition into situations, so it will often choose wrong.

I love the idea, and one day it might even get there, but it is not there now, and probably won’t be for quite some years. Letting them loose on the motorways I have less objection to; it’s a much more structured environment with far fewer variables. But in built up areas you have toddlers, pets, cyclists, idiots on their phone crossing roads etc – Even if the AIs could spot them all with sufficient confidence (which so far they can’t) they can’t read the body language etc to know where they are heading, or notice the parent’s eyes darting down behind their car suggesting the presence of a loose child etc.

The 30000 who die every year on the road from human error and drunkenness just might disagree with your overly optimistic view of human driving skills.

https://www.sciencedirect.com/science/article/abs/pii/S0001457513002534

“Can it be true that most drivers are safer than the average driver?”

“It is entirely possible for a majority of drivers to be safer than the average driver”

“In eight data sets, the share of drivers who were safer than the average driver ranged from 70% to 90%”

A minority of incompetent and negligent drivers are what causes the majority of accidents, and a minority of the minority is involved in so many accidents that they bring down the average for everyone. Most drivers are actually exceptionally good – most people will never have an accident.

So we can avoid lots of death by putting those bad drivers into self driving cars!

If you were using the FSD to eliminate the bad drivers, you would enable it whenever you want to be drunk or text and drive, or sleep behind the wheel, or navigate a rainstorm at night when you (and it) can’t see s*** – which is exactly how you’re not supposed to use it.

X… The truth of the matter is very few people will be able to even afford cars with such systems, let alone the ones who are prone to doing stupid stuff since bad driving is probably not the only stupid thing they engage in. I doubt drunks are going to go get tanked then suddenly become responsible enough to engage auto pilot to take them home.

I’m just trying to be realistic about things from the perspective of someone who had their entire life destroyed by a drunk driver who hit me head on at 45mph vs 55mph @ 5pm on a Friday. Thankfully he was arrested two blocks away after fleeing when his car went up in flames. Piece of trash blew a .34 BAC. What I’m saying is self driving cars would have done nothing in my situation.

I’m not sure where you’re from or if you’re the type who see driving as an inconvenience and see self driving cars as your own Uber without the weird guy driving you around, but a good chunk of the population love their cars and enjoy driving.

@andar_b said: “I’m surprised by the number of naysayers today.”

Don’t be. Because he thinks outside the box, Elon Musk is deemed politically incorrect. Elon Musk is becoming the Hydroxychloroquine of the mega-tech world.

– Not so much. I have no problem with Elon, his other projects, Hydroxychloroquine, or PC-incorrectness whatsoever. It’s autonomous driving I take issue with, and at least a number of others do too.

-At least personally, I think putting people on Mars is a much more realistic problem to solve than autonomous driving. The push for it, at least in my opinion, comes from corporate/investor greed preying on people’s laziness and the idea that a self-driving car would be cool to have and/or brag about. It’s not something to ‘better the world with safety’, or solving an issue, it’s more ‘we could make a bucket-load of cash with this, especially if we are one of the first/only to market’ (if we can avoid liability for ensuing deaths/injuries). I don’t take issue with the advanced safety features, it is the self-driving mode. There are just soooo many edge cases for software and sensors, and software doesn’t remotely have the situational awareness a human would. I think many here have a decent understanding of what has to happen under the hood to make a system like this have even remotely the capabilities of a human driver, and see that what they are releasing onto public roads is, well, bananas.

There’s a good reason for it: Elon Musk is an incompetent charlatan, whose early business success was based on selling his half-baked businesses to larger corporations during the dotcom bubble. He has never actually done a mature product – he sells the idea to investors before he has any means of pulling it off, does 20% of the effort that covers 80% (or more like 50%) of the promised functionality, makes the public beta-test his crap, and then hops on to the next project.

It’s kinda like a pyramid scam where the business keeps going as long as he keeps promising new things and starry-eyed investors and fanboys keep throwing money at him, because if he stops or even slows down to finish what he already started, to meet his original promises, it would cost him so much money that he would go under. So he simply shifts the goalposts, calls it done and moves on.

All this is done with the support of public subsidies, such as cheap government loans, or subsidies to the car buyers. Did you know that the profit that Tesla posted for the past five months has come entirely from the clean vehicle credits that other car companies have to buy from Tesla in order to be allowed to sell regular cars?

Elon Musk’s electric cars solve nothing, and they cost everyone a pretty penny. Why are we cheering on this guy?

You can treat Musk’s business model kinda like one of those game developers on Patreon and other platforms, who are selling the beta version already and demanding subscriptions to complete the development, which never ends because that’s exactly how they make money.

That way, when you hit the point of diminishing returns in development where you’re spending more and more time to fix the harder and smaller bugs, or maybe you run into a corner and have to refactor the whole thing to proceed, you’ve already collected the money and you can simply dump the project: just pretend you’re still doing it, while you kick up a new project on the side.

One example of the hype-and-dump cycle is that Tesla keeps discontinuing the cheaper Model S options, keeping only the most expensive models on the market – so the original promise of a $50k car that has a 300 mile range has been completely thrown out the window.

Likewise, they won’t sell you a Model 3 car with basic trim. It’s still on the list, but you’ll wait forever to have it made. It only exists in name, so they could say they made it. The product that you were promised doesn’t actually exist, and the beta version that has inferior properties (in this case, a higher price) is what they’re actually selling.

And that’s fraud.

Take a look at airplane autopilot. It has a very long tradition and track record: many years of engineering and user feedback, top-quality hardware and software. And who is operating it? At least two people with periodic training and health checks. Drivers don’t get as much training or health examination as pilots do, and the average pilot understands his machine better than the average driver understands his.

I get that AI outperforms people in many ways (it doesn’t get bored, tired, or distracted by a cool song on the radio or a bad message in the news, and it does not suspect its husband is cheating on it, which just keeps on bothering it). But giving it too much control without proper constant observation I consider risky. Since drivers are not pilots, and get tricked by marketing slogans with zero understanding of what this technology really does, they should not be in charge. Not yet.

When the massively simpler problem of driverless trains is demonstrably solved with sufficient reliability, then maybe I’ll consider trusting a machine to pilot in a crowded environment with so many variables. Think about it: trains are simple. They have but two directions; if you are stopping in response to something you can see, a collision is already certain (so while a human driver might manage to spot a danger and slow faster, the end result won’t change significantly); and signalling in theory means there should never be anything to hit. Plus, in many cases they have always-live network connections for their passengers to access the internet, so they should be able to report to all other trains directly.

Once that level of functionality has been proven and got public acceptance then maybe expanding that robust code to cover an extra axis and more chaotic conditions should be allowed – like provable 0% avoidable crashes on motorways a very much more predictable area (obviously there would be some rounding down to 0%, and not all crashes in which driving aids are a part could be avoided).

But as it stands nobody would accept a pilotless aircraft, and driverless trains seem to be largely considered unacceptable, so why is it fine for a car maker to allow it to operate in much harder conditions, where mistakes are almost certain? I think I might just have been put off road cycling for good; it’s bad enough with people trying to kill you round here.. Machines doing it for them… heck, it might not even notice after it knocked you off…

You use the ill-defined word “sufficient” in a way that makes the rest of your comment useless because we cannot read your mind.

You’ve apparently never been to Atlanta where the airport train is fully automated.

Why do you accept a high accident rate for humans while robot drivers must be perfect? How many extra people will die while you wait for the perfection you will never see?

And WHY does your opinion matter? Just curious…

> Atlanta where the airport train is fully automated.

And supervised by people who can pull an emergency lever if they see someone stumbling on the tracks.

Plus, the Atlanta Plane Train operates inside the secure area, in a tunnel where no other traffic is even possible. The route consists of two tracks with mutual end stops.

The system is basically nothing more than an elevator turned horizontal. It requires no AI, just traditional dumb automation.

Sounds just like most other subway systems! 3 of the 4 subway lines in Boston are just as you describe.

Yes. A subway is not very hard to automate.

Subway or train automation in most locations is limited by unions and loss of jobs. For instance, the SkyTrain in Vancouver is driverless. But the same technology RT line in Toronto still has an operator. They push a button to “go” when the beeper wakes them up.

The technology is there, but the politics might not be. Objective vs Subjective.

No, there is no operator. The doors open and close under computer control. Nobody stumbles onto the tracks because the platforms are enclosed with doors that only open when a train is at the platform.

There is no operator, but there are still people who can intervene. The system doesn’t operate entirely autonomously without any human supervision.

> accept a high accident rate for humans while robot drivers must be perfect?

With humans, safety is 99% a matter of choice. With robots, the choice is made for you by the designer. Therefore the minimum level of safety from the robot should be as good as the best person, not the average person who makes stupid choices, because the latter would be forcing stupid choices on people who would not make such choices if they knew.

Is safety a choice for the innocent people killed in the other lane when a stupid human pulls into oncoming traffic to pass?

That is a different question.

It is asking whether we should take away the choice of safety from the majority of drivers in order to increase the average safety of all road users, but this already assumes that the Autopilot is mandatory in all cases. It is simply not ready for that, so the point is moot.

It sucks pretty bad when death is forced upon you by a drunk driver

It would be the choice of the drunk to enable Autopilot to avoid the accident, but you’re not allowed to use the autopilot while drunk anyways, because you are responsible for keeping tabs on the machine and your hands on the wheel.

Never been to Atlanta, it’s true, but we do have (and I’ve ridden on) driverless trains here too, in similar situations where “horizontal elevator” is more true to its function than “train”…

As for a more accurate measure of sufficient – it’s tricky to quantify at what point it’s good enough. Are we talking better error avoidance than humans by some factor (reasonable but perhaps not the most helpful) or the severity of errors when it does fuck up? If it fails less often, even by a huge margin, but when it cocks up it’s inevitably fatal to everyone on the train and the other train it ran into, for example, is that actually better than lots of minor incidents with no injuries? So for me the driverless system would need to do both: have lower error counts than humans, and make fewer errors with serious consequences. Far from perfection required, but a heck of a lot better than any autonomous system under normal conditions has proved.

Also high accident rate for humans isn’t really true, a high rate for very specific humans who should not be allowed to drive anymore after so many offences (and dealt with in such a way they are incapable of driving again) however is.

> if it fails less often even by a huge margin but when it cocks up its inevitably fatal to everyone

Tesla’s AI already demonstrates this feature. It reduces the rate of accidents by removing the simple errors, like rear-ending a car, or missing the speed limit sign and driving 65 mph into an intersection – but then it makes absolutely stupid errors like driving through a toll booth barrier because it wasn’t programmed to detect the case (it is now, once it did exactly that).

In other words, the safety is just an illusion. It is trading a small risk of human error for the inevitability of crashing once you find the conditions the programmers didn’t consider.

And, if some number of people have to die so Tesla can find these edge cases and correct them, the blood is still on their hands.

There’s also the Docklands Light Railway in London. It’s mostly above ground, there is little to prevent people getting on the track and it’s fully automated. Operators can take manual control at peak hours or when there are signaling problems, but they are mostly there to check tickets.

L…………atency.

Actually, if you shorten the pipe a bit, this already exists. It’s called “taxi driver”.

If two normal taxis crash, both carrying passengers, then four people might die.

If two apeless carriages or remotely-driven cars crash, then two people might die.

(Yes, this assumes a lot, the ratio will change with context, etc. but it’s still a factor.)

And how BAD are they…. lol

I really wish they’d pick somewhere hard to trial this stuff, like Phnom Penh. Despite the truly batshit crazy driving (and walking) I saw while there, they had a relatively small number of accidents. If the autopilot can handle that for 12 months without accident, I’d have very few concerns about letting it drive me home.

100% agree with all the comments above about Tesla being shitty corporate citizens.

I think a lot of the comments above are confused..

The tech is probably already better than a human driver, and the Uber death had nothing to do with the self drive – watch the video (https://www.nytimes.com/interactive/2018/03/20/us/self-driving-uber-pedestrian-killed.html) – a person crossing a main road in dark clothing at night. A human driver would have gone straight into her too.

There is no doubt that auto driver will be statistically safer, the problem is

1) human beings don’t make decisions based on a rational evaluation of risk – and people want/need to be in control even if it is less safe (this is what would stop me doing full auto drive!)

2) the legal system – particularly in the USA – means that people will sue the car company instead of the driver.

“the uber death had nothing to do with the self drive” – so because it was dark, you give self-drive a free pass? If anything, this should be one of the few areas where it is easy to outdo human capability, with our limited night vision. If you’ve got radar/lidar/good image sensors (or some combination thereof), you can’t cop out with an ‘it was dark’ for an autonomous vehicle.

– ‘A human would have done it too’ is no excuse for autonomous – ‘drunk drivers run a few people over’ so it’s ok for an autonomous vehicle to run a few kids over because it didn’t understand what they were, being in Halloween costumes? Not so much.

‘A human would have done it too’ IS a valid excuse (not, note, a drunk human!), as the self drive doesn’t have to be perfect, it just has to be better than a human. In the millions of kms it did there were probably lots of times a human would have had an accident, and it didn’t.

In this case it didn’t cope with it because of the sheer stupidity of the pedestrian (at night, dark clothes, in the shadows, walked in front of the car, on main road) the car driver – either software or a human being, wasn’t to blame. It certainly didn’t invalidate the concept.

And visual is still the best way to do a lot of this – the clutter you have to make sense out of with radar is significant – and black clothes in a dark shadow at night, in the middle of the road, is a hard thing to ‘see’.

Here you have to use the metric of a responsible human, not the average driver. One that is driving like they should be driving, rather than how the average person actually drives. After all, if a human drives the car and they run someone over, drunkenness, distraction, or disregard of the speed limits etc. counts against them. We don’t get a pass for being negligent drivers either.

Discounting drunk, reckless and distracted drivers means your AI would have to do at least five times better than the average rate of accidents to meet the bar. Yet this doesn’t account for the edge cases, which are not average situations.

>In this case it didn’t cope with it because of the sheer stupidity of the pedestrian

Actually, the data shows that the car DID spot the pedestrian, it just didn’t do anything about it because it wasn’t programmed to expect a pedestrian there. The problem is that the AI doesn’t actually “see” anything, it just picks up correlations of what things might be based on their shape, size, how they move, etc., so a pedestrian showing up in an unexpected location fell through the gaps and the car just didn’t react in any way.

And my point is a human wouldn’t have seen them, so it did no worse than a human driver (even a good one) in that case, and probably a lot better.

And saying the AI has to be better than the best human is a bit silly in one regard, as if it is better than average there will be fewer accidents. In another regard it is a key issue – as everyone THINKS they are a good driver, and that the software won’t be better than them…

>a human wouldn’t have seen them

Humans have superior night vision to just about any digital camera. The images published in the media are from the camera’s point of view, which didn’t see the pedestrian, but it has been pointed out that a human would have seen them.

>better than the best human

Not better than the best; read again. A person who is not reckless, negligent, drunk, or falling asleep is enough. If the AI cannot best that, then why should anyone let it drive?

First, life is a risk. Whether it is Covid, cars, trains, or planes, riding a bike, walking, cutting with a knife…. Get over it and live life and take risks you are comfortable with. Just don’t force it on the rest of us (that’s where I draw the line with car self driving). Leave the self driving to Mars rovers and such…. With that said, if you feel driving is a chore or boring, then take a taxi, train, bus. Then you don’t have to drive at all. You’ll get there eventually. I find driving actually fun most times. When I want to pass a semi, I will … given the opportunity. Computer will probably evaluate and say … safer to stay behind, so let’s crawl along, lane changing is statistically ‘risky’ …. No, driving should be fully under the control of the user. If you don’t feel that way, then take a tram, or train…. Choice and freedom matters (or should). Statistics get us in more trouble… even climate warming, no climate cooling, or heck let’s just call it climate change… whatever band wagon you want to climb on, just start spouting statistics…

It’s really very scary to read “Full Self-Driving” and “beta” in one line.

Right now autonomous cars are driving in areas full of human-in-charge cars. They seem better, but a lot of the decisions and predictions are made by people. How will they behave in an environment full of autonomous cars?

It will be easier when all vehicles are autonomous, right? The cars will be talking to each other telling the other what it is about to do and perhaps warning oncoming cars about what to expect around a corner. With a human driving the other car, it’s a matter of guessing what the human intends to do based on car movement that the human commanded a half second earlier.

If the car won’t understand a pedestrian as a pedestrian, it won’t warn the other cars either.

The mark of insanity is doing the same thing over and over, and expecting different results. If you find your AI is too dumb to drive, adding more of them won’t make a difference.

…”when all vehicles are autonomous”. Good luck with that – there are plenty of people who enjoy driving themselves. Even if autonomous vehicles ever did get to the point of being safer all around, ‘so what’. Motorcycles aren’t exactly inherently safe, and people have (and should have) a choice to choose them as a transportation option. Some people would rather enjoy life and its experiences than live in a safety bubble.

When cars start talking to each other, they are going to gossip about their occupants.

They will also snitch to the police car.

And the police car will tell them to huddle around an offending car and entrap it.

And the police car will talk to the fire truck, ambulance, and tow truck*.

The police car will talk to the traffic lights to re-route traffic.

The firetruck will talk to the automated hydrant if needed, and notify the automated street sweeper to clean up the mess.

The Eyewitless News camera truck and camera drone will overhear the police car, and route to the scene.

The ambulance will talk to the lawyer’s car, and the doctor’s car to tell them to go to the hospital.

The ambulance will also talk to the hospital to have the self driving gurney meet it.

The ambulance will monitor the vital signs of the patient inside.

If the vitals cease, the ambulance will talk to the hearse.

The hearse will talk to the funeral home.

The funeral home will talk to the self driving excavator and casket crane at the cemetery.

The hearse will also talk to the florist delivery truck.

*the tow truck will talk to the car it is towing and to the service garage.

The service garage will order replacement parts from the distribution warehouse before the tow truck arrives.

(or the service garage will route the tow truck to the scrap yard).

The distribution warehouse will talk to the warehouse forklift.

The forklift will talk to the automated loading dock.

The automated loading dock will talk to the delivery truck.

:-) A bit before that… It might be the case that humans will tend to stay home more because they don’t need to go the store or the restaurant. Those items can be delivered. This puts fewer humans on the road. And reduces the number of traffic fatalities.

If this was being pushed by Google or Apple I’d trust them to do a good job. Tesla? Oh please, a company run by a lunatic huckster whose cars can’t even keep their roofs and bumpers on. Where there is no support for their cars once the warranty expires.

Tesla is a shoddy company that preys on virtue signalling yuppies with lots of money.

As for the automation aspect. The moment their autonomous vehicles start killing people the lawyers will skin Tesla alive.

For once Dude we are in total agreement, how rare… Very true though, and the big problem with the most common mean average methods – when the outliers are massively outlier they hugely skew the whole dataset, where a modal average for example on human drivers would say 0 crashes in a lifetime as more folks have 0 than any other number of crashes.

For me personally: you drink and drive, you get a rapidly thrown lifetime ban and a free bus token… Ever put your hands on a steering wheel on public roads afterwards and you lose the hands.. If you make the risk of being that stupid high enough (to the idiot being that stupid, not the poor sods they crash into), many fewer will get behind the wheel in an unfit state. And those that do will quickly take themselves off the road, as it’s rather hard to drive with no limbs…

Such absolutism is hard to pull off, since “0 BAC” is impossible to detect with any accuracy, and even orange juice has a little alcohol in it, so people would be losing hands over eating a piece of rum cake.

We allow some level of drunk driving, because the increase in the relative risk of accident is negligible up to about 0.05 BAC (two 12 oz cans of strong beer for a 160 lb adult male, drunk within the hour). The reason the federal limit is 0.08 in the US is because people want to drive home from the bar after “having a few”, which is highly irresponsible but hard to change.

https://www.researchgate.net/figure/Relative-risk-of-crash-involvement-as-a-function-of-drivers-BAC-SOURCE-Adapted-from_fig4_221828495

Indeed. Here at least, “Drunk Driving” means having rather too many and being over that limit – usually by more than a small margin in the way we use the phrase, but over is over. I was not meaning to imply anything above 0 BAC is a fail.

But we have a limit here, you have one there, and over that limit (which should require deliberate choice to get there) the punishment needs to fit the potential seriousness of driving while impaired. And as it was your choice to visit the pisser, it’s your choice to never be allowed to drive again, by maiming should you repeat the offence, thus keeping the sensible folks safe.

For a more serious DWI offense, you already lose your license for up to 10 years. Driving without a license AND drunk will then send you to prison for quite a long time.

Chopping off a hand isn’t proportional.

Can one still SPEED with an autonomous driving vehicle? Or does the vehicle respect all speed limits, and join a single file procession?

I guess if one can multi-task, the speed is less of an issue. Driver is not stuck only driving.

On the other hand, when this becomes mainstream, speeds can be increased to suit the autonomous capability.

If compliance is universal, there will be no more speeding violations, or camera based revenue generation?…. this will get political fast!

If compliance were universal the speed of many roads would increase without having to raise the speed limit. Where there’s busy traffic the problem is often exacerbated by the selfishness of human drivers.

How did this article get to be about Tesla? Their “full self driving” isn’t. It’s level 2 — “partial automation”. I guess that’s a step up from their “autopilot”, which is level 1 “driver assistance”.

The Waymo and Cruise cars are actually driving themselves around, and have licenses to do so. The Cruise cars are going to be running around in San Freaking Francisco, and not a wide-open desert (Phoenix).

This story buries the lede. There _will_ actually be a significant number of self-driving cars, on the roads, legally, this year. Like, actually driven by AIs, without a person at the wheel. Level 5 autonomy. This is crazy awesome.

Not a single one of them is a Tesla, however. (Indeed, you know they’re sweating bullets, b/c the emperor is about to be publicly displayed to be pantsless…)

I find it ridiculous that people are all lit up over self driving cars when we still have train wrecks. Let’s get the autopilot working on trains for a decade and then we can start to look at things that go off the rails.

If people in the US cared about preventing train wrecks further, more resources would be positioned to solve that problem and that problem would already be solved. But we are not motivated to solve that problem. It’s just not important enough to improve it further.

And train drivers (in the U.K.) have successfully avoided any automation because they’re unionised.

Note that all these companies are targeting (ununionised) personal drivers. If they’d gone for delivery vehicles first, they’d still be stuck in court with the unions.

The future scares those of us who are ignorant to change. Like it or not, the future is here, at some level, and it’s better to prepare yourself for it than complain that things won’t remain the same. Over the next 20 years, you will lose your ability to be the driver in control, and resorts that allow you to drive as you used to will pop up, where you will pay to drive like you do today.

No chance that we will lose the ability to drive in the next 20 years..

Too many humans have lousy instincts.

How many drivers out there respond to EVERY situation by slamming on the brakes? Too many! It doesn’t matter if the threat comes from behind, from the side or the front. They see scary out the window, panic neurons take over and their foot is to the floor on the brake pedal before their conscious mind has even processed the situation, regardless of whether it is the best or the WORST possible response to the present situation. I doubt it’s even in their brains, it’s probably processed by their spinal cords the same as the pull-away from hot touch reflex.

No doubt there will be software bugs and deaths along the way but not as many deaths as there will be if we keep up the status quo. If the nay-sayers don’t get the politicians to kill it first that is.

Humans are actually remarkably good drivers.

https://carsurance.net/blog/self-driving-car-statistics/

“At the moment, self-driving cars have a higher rate of accidents compared to human-driven cars, but the injuries are less serious. On average, there are 9.1 self-driving car accidents per million miles driven, while the same rate is 4.1 crashes per million miles for regular vehicles.”

Of course this isn’t counting the fact that self-driving cars are only operating on a limited set of roads and conditions, while people manage over twice as well regardless AND this still includes drivers who choose to drive dangerously.
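For anyone who wants to check the “over twice as well” figure, here is a small sketch of the comparison. The two rates are the ones quoted from the linked page; the helper function and the 12,000-mile annual mileage are assumptions added purely for illustration.

```python
def crashes_per_million_miles(crashes: float, miles: float) -> float:
    """Normalise a raw crash count to crashes per million miles driven."""
    return crashes / miles * 1_000_000


# Rates quoted above, in crashes per million miles.
SELF_DRIVING_RATE = 9.1
HUMAN_RATE = 4.1

# How many times more often the self-driving fleet crashes per mile.
ratio = SELF_DRIVING_RATE / HUMAN_RATE

# Expected crashes over a hypothetical 12,000-mile year at each rate.
ANNUAL_MILES = 12_000  # assumed mileage, not from the source
expected_self_driving = SELF_DRIVING_RATE * ANNUAL_MILES / 1_000_000
expected_human = HUMAN_RATE * ANNUAL_MILES / 1_000_000

print(f"self-driving / human crash rate ratio: {ratio:.2f}")
print(f"expected crashes per year (self-driving): {expected_self_driving:.3f}")
print(f"expected crashes per year (human): {expected_human:.3f}")
```

The ratio comes out a little above 2.2, which matches the “over twice” claim, though as noted it says nothing about the difference in road conditions or injury severity between the two groups.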