The RISC-V architecture is inexorably inching from its theoretical origins towards the mainstream, as what could once only be done on an exotic FPGA can now be seen in a few microcontrollers as well as some much more powerful processors. It’s exciting because it offers us the prospect of fully open-source hardware on which to run our open-source operating systems, but it’s more than that. RISC-V isn’t a particular processor core so much as a specification that can be implemented at any of a number of levels, and in its simplest form can even be made real using 74-series logic chips. This was the aim of [Robert Baruch]’s LMARV-1, which caused a stir a year or two ago but then went on something of a hiatus. We’re pleased to note that he’s posted a video announcing a recommencement of the project, along with a significant redesign.

We’ve placed the video below the break, and it’s much more than a simple project announcement. Instead, it’s an in-depth explanation of the design decisions and the physical architecture of the processor. It amounts to a primer on processor design, and though it’s a long watch we’d say you won’t be disappointed if your interests lie in that direction.

We first covered the LMARV-1 back in early 2018, so we’re glad to see it under way again and we look forward to its continued progress.

I was following his previous series. I’m glad to see him start up again.

Go big!

Let’s have a 128-bit word size!

Or perhaps a 1-bit word size! Okay, maybe not. Really, word size is a matter of convenience. It’s a decision we make, balancing how many bits we really need to express the things we often need to express against the cost of computation for things that don’t need as many bits. In conventional CPUs, particularly von Neumann architectures, what started to make 32 bits too cramped was the amount of memory we could fit into practical computers, both in how much needs to be accessible from a single function and in how much can fit in a mass storage device. Even in the 1960s, word sizes of 32-36 bits were necessary, so it’s remarkable that it was only around the turn of the century that we outgrew this. That’s around 40 years, so maybe we’ll need 128 bits in a couple of decades, but until then, I’m not going to insist on making every instruction twice as long just for those things I’ll eventually need to express.

https://github.com/olofk/serv

Could one build this serial RISC V out of transistors?

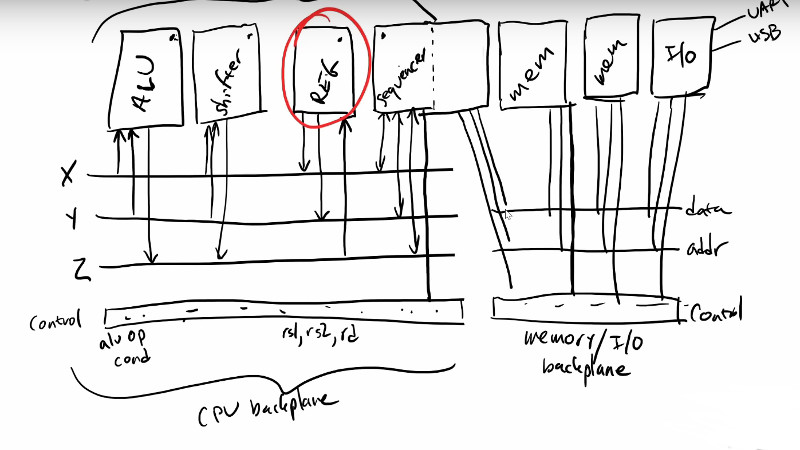

Certainly. You just have to implement each of his chips as transistors. Tricky, and perhaps harder to verify, but doable. In this video, [Robert] describes implementing the register module, where he realizes that he would need 128 of a certain chip to multiplex the flip-flops and get the triple access to the registers (two reads and one write in each clock cycle) required by the architecture. His extremely well-described solution is to use two memory chips: one serves the first read, the other serves the second read, and each write goes to both memories, which keeps their contents synchronized. That was a big optimization for the sake of chip count, but it would NOT apply at the transistor level. There it would be a big de-optimization, since it doubles the amount of actual memory needed. So at the point where he says “that alone would take 128 chips, which I am not going to do”, when implementing in transistors you actually have to implement all 128 of those gates, each of which has multiple transistors. So yes, certainly doable, but as with any 32-bit CPU, it’s going to take a lot of transistors, a lot of time, a lot of space, and a lot MORE time to actually get to work.
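To make the two-memory trick concrete, here is a minimal behavioural sketch in Python. It is not [Robert]’s actual design: the class name `MirroredRegisterFile`, the `cycle` method, and the choice to perform reads before the write within a cycle are all assumptions made for illustration. The only RISC-V fact it relies on is that register x0 always reads as zero.

```python
class MirroredRegisterFile:
    """Hypothetical model: two single-read memories kept in sync
    to give two reads and one write per clock cycle."""

    def __init__(self, num_regs=32, width=32):
        self.mask = (1 << width) - 1
        self.bank_a = [0] * num_regs  # serves read port 1
        self.bank_b = [0] * num_regs  # serves read port 2

    def cycle(self, rs1, rs2, rd=None, value=0):
        """Simulate one clock cycle: read rs1 and rs2, optionally write rd."""
        # Each bank handles exactly one read, so both reads can
        # happen in the same cycle (assumed to precede the write).
        out1 = self.bank_a[rs1]
        out2 = self.bank_b[rs2]
        # The single write goes to BOTH banks so they never diverge.
        # Writes to x0 are dropped because x0 is hardwired to zero.
        if rd is not None and rd != 0:
            self.bank_a[rd] = value & self.mask
            self.bank_b[rd] = value & self.mask
        return out1, out2


rf = MirroredRegisterFile()
rf.cycle(0, 0, rd=5, value=123)
rf.cycle(0, 0, rd=6, value=456)
print(rf.cycle(5, 6))  # reads x5 and x6 in a single cycle
```

The cost is visible in the constructor: every register is stored twice, which is cheap when a memory chip is one package but expensive when every stored bit is a handful of discrete transistors.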

Having said that, the Digital Equipment Corporation PDP-10 (later DECsystem-10) was originally implemented in the mid-1960s using transistors, on small cards called Flip-Chips (similar to the IBM 1401’s SMS cards), which plugged into backplanes, forming the functions that would later be handled by integrated circuits: gates and flip-flops, typically one or two gates or one flip-flop on each card. The “Ten” was a 36-bit machine designed for multitasking, and a major player in the time-shared computer business in the 1970s and 80s, although by that time it used a better, faster, cheaper CPU implementation in TTL chips. And yes, it had a bunch of registers (though fewer than the RISC-V), and yes, it was fairly big. If I remember correctly, it had three cabinets six feet tall and three feet wide for the CPU alone, which weighed in at nearly a ton, and then a few other cabinets for memory and I/O controllers.

So yeah, it can certainly be done, in the sense that it HAS been done, although not exactly at the home workshop level. A 32-bit architecture may be a bit ambitious to build from discrete transistors.

Thanks for your informative reply :-)

That is a serial RISC-V that I referenced; FWIW, it is supposed to synthesise in under 800 LUTs.

Maybe it’s one for if I ever get a pick’n’place machine for Xmas…

Sure. Two things, though:

1) LUTs are efficient for FPGAs because they are very versatile, but they take a lot of transistors to implement. A LUT is basically a small ROM, which makes it totally flexible, but it never takes fewer transistors than the equivalent optimized logic circuit, and this gets worse as the LUTs get bigger.

2) Serializing saves a lot of transistors in the arithmetic and logic, but nothing at all in registers – every bit of every register has to have its own flip-flop that takes at least six transistors, because each bit still has to be stored separately, even if it’s in a shift register instead of a set of D-type flip-flops. If you look at chip photos, they are dominated by two things: the register array, and for microcoded CPUs, the microcode ROM.
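Point 1 can be illustrated with a small Python sketch that models a LUT as a stored truth table. The helper names `make_lut` and `lut_read` are made up for this example, and the model only counts stored bits; it says nothing about the actual transistor count of any real FPGA cell.

```python
def make_lut(func, num_inputs):
    """Precompute the truth table of func: one stored bit per input combination."""
    table = []
    for index in range(2 ** num_inputs):
        # Unpack the table address into individual input bits (LSB first).
        bits = [(index >> i) & 1 for i in range(num_inputs)]
        table.append(func(*bits) & 1)
    return table


def lut_read(table, *inputs):
    """Evaluate the LUT: the inputs simply form an address into the table."""
    index = sum(bit << i for i, bit in enumerate(inputs))
    return table[index]


# A 4-input XOR and a 6-input AND, each stored as a lookup table.
xor4 = make_lut(lambda a, b, c, d: a ^ b ^ c ^ d, 4)
and6 = make_lut(lambda *bits: int(all(bits)), 6)

print(len(xor4), len(and6))  # stored bits needed by each table
```

Every extra input doubles the table, no matter how simple the function is. A 6-input AND needs only a few gates as dedicated logic, yet as a LUT it occupies the same 64 storage bits as the most complicated 6-input function.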

This is why there was so much innovation in memory technologies in the 1940s and 50s, when ferrite core arrays, magnetic drums, electrostatic drums, storage CRTs, and delay lines were all developed for one reason: to reduce the number of tubes (or later, transistors) needed for fast temporary storage of intermediate results. Even Babbage’s Difference Engine was made up mostly of registers, although these were able to add as well as store values. So yes, serialization saves transistors, but not as many as you’d hope, and you then pay for that in speed. That isn’t a big deal for a discrete transistor CPU, since speed isn’t usually the first concern, but also consider the extra complexity that serialization adds when you have to debug the design. Even a fully simulated serial design is naturally going to take longer to get right.
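The trade-off can be sketched in a few lines of Python. This is a generic bit-serial adder, not the SERV implementation; the function name `serial_add` and the cycle counter are assumptions for illustration. The whole datapath is one full adder plus a carry flip-flop, reused once per bit.

```python
def serial_add(a, b, width=32):
    """Add two width-bit values with a single full adder, one bit per clock.

    Returns (sum modulo 2**width, clock cycles used).
    """
    carry = 0   # the one carry flip-flop
    result = 0  # stands in for the result shift register
    cycles = 0
    for i in range(width):
        bit_a = (a >> i) & 1
        bit_b = (b >> i) & 1
        # One full adder: sum bit and carry-out for this bit position.
        sum_bit = bit_a ^ bit_b ^ carry
        carry = (bit_a & bit_b) | (carry & (bit_a ^ bit_b))
        result |= sum_bit << i
        cycles += 1
    return result, cycles


print(serial_add(5, 7))
```

The arithmetic logic shrinks to a single bit slice, but a 32-bit add now takes 32 clocks instead of one, and the operands and result still need 32 storage bits each, which is the part that serialization does not save.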

Modern CPU architectures take advantage of the fact that while registers are still the most expensive part of a CPU (by chip area), they’re still cheap enough to justify making more of them available to the programmer, which tends to speed up programs because of the reduced need for juggling data around between registers and main memory. So building a discrete modern CPU out of transistors is just going to be expensive.

That’s called simulated dual read.

Okay, that sounds like a suitably descriptive name for it. I’d never heard of this, but then, I’ve never considered implementing registers in quite this way!

It’s such a shame that the RISC-V foundation has become a “big boys only” club. Secret mailing lists and working groups, and no access unless you’re a large silicon vendor. My guess is companies like WD are happy to pull in the best designs if it means not paying fees to ARM, but don’t want to cede any kind of control.

The Libre SoC project moved recently from RISC-V to the OpenPOWER foundation for exactly those reasons. Their European government grants mandate complete transparency and they couldn’t even find out the process for how to propose extensions, much less get them looked at.

IBM has done a much better job stewarding things, moving the OpenPOWER Foundation under the Linux Foundation and providing strong patent troll defences. There’s a nice tutorial on downloading and modifying one of IBM’s POWER cores to add instructions here:

https://www.talospace.com/2019/09/a-beginners-guide-to-hacking-microwatt.html

The Talospace blog has a lot of other interesting news too for those unfamiliar with modern POWER systems.

The shirt off my back for an edit button. We have a rogue italics tag.

Sigh. Nothing is great enough that it can’t be brought low by greed. Of course, they could fork their own variant, but then they’d have to support it and its software chain. That’s the economics of the situation – if you’re a big player and are willing to support the development and maintenance, you get to have a bigger say. TANSTAAFL, as Heinlein would say.

Well, the upside is they’ve found a much better home with OpenPOWER, where they’re receiving far more support. Really everything you could ask for, from patent troll protection to free CPU designs.

Last I checked, they were working on taping out a test chip about now.

Because, of course, the great thing about open standards is that there are so many to choose from.

Do you have schematics for the power supply and clock ?