Imagine that you’re serving on a jury, and you’re given an image taken from a surveillance camera. It looks pretty much like the suspect, but the image has been “enhanced” by an AI from the original. Do you convict? How does this weigh out on the scales of reasonable doubt? Should you demand to see the original?

AI-enhanced, upscaled, or otherwise modified images are tremendously realistic. But what they’re showing you isn’t reality. When we wrote about this last week, [Denis Shiryaev], one of the authors of one of the methods we highlighted, weighed in in the comments to point out that these modifications aren’t “restorations” of the original. While they might add incredibly fine detail, for instance, they don’t recreate or restore reality. The neural net creates its own reality, out of millions and millions of faces that it’s learned.

And for the purposes of identification, that’s exactly the problem: the facial features of millions of other people have been used to increase the resolution. Can you identify the person in the pixelized image? Can you identify that same person in the resulting up-sampling? If the question put before the jury was “is the defendant a former president of the USA?” you’d answer the question differently depending on which image you were presented. And you’d have a misleading level of confidence in your ability to judge the AI-retouched photo. Clearly, informed skepticism on the part of the jury is required.

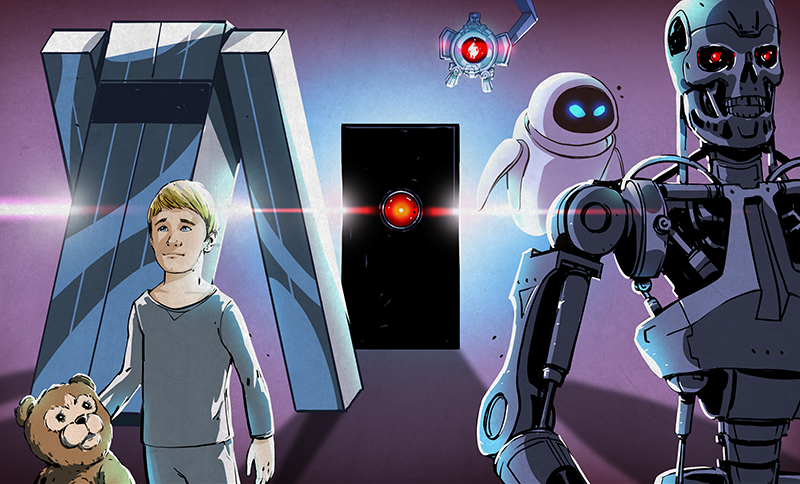

Unfortunately, we’ve all seen countless examples of “zoom, enhance” in movies and TV shows being successfully used to nab the perps and nail their convictions. We haven’t seen nearly as much detailed analysis of how adversarial neural networks create faces out of a scant handful of pixels. This, combined with the almost magical resolution of the end product, would certainly sway a jury of normal folks. On the other hand, the popularity of intentionally misleading “deep fakes” might help educate the public to the dangers of believing what they see when AI is involved.

This is just one example. Keeping the public interested in and educated on the deep workings and limitations of the technology that’s running our world is more important than ever before, but some of the material is truly hard. How do we separate the science from the magic?

“is the defendant a former president of the USA?” LOL

Yes, he was famous for being the lead singer in the hit songs “Peaches” and “Lump”.

Can’t wait until it all starts in a couple of months …

Yep, should be interesting to see Hunter & Joe in jail for taking bribes from China.

Do you think this comment was appropriate for this blog?

Ooh, what a great example.

I said it once and I’ll say it again: you see Obama because you already know it’s a picture of Obama. People are good at seeing what they want to see once we’ve decided to see it.

For example, if you see a banana in a picture, it looks yellow even if the actual wavelength of light coming off of it is green – because the brain expects to see a yellow banana and adjusts the perceived color balance accordingly.

The point the article is making is based on this false notion. The AI algorithm constructs a plausible face that fits the small amount of data you have. The human perception distorts the data to match what the person has already decided it should be.

Squint your eyes to blur the image till you can no longer see the squareness of the pixels in Obama’s face, and the two start to look remarkably alike. That’s how much actual information there is. If you didn’t guess it was Obama already, but you did know someone who looks like the computer generated image, you could just as well guess it was him.

But that’s the PROBLEM. The enhancement takes away the doubt about what you’re seeing – you lose the perception that it’s not a reliable picture. If I see a picture at the resolution of the “before” picture, I would say, “that looks like Obama”, but I would also be aware that I was seeing limited data. With the “enhanced” version, because it looks like a high-resolution photo, I don’t have any doubt when I say “That’s not Obama”.

There is no doubt for people who say “That’s definitely Obama”. That’s the entire point of the argument: it’s supposed to be obvious beyond doubt that the computer is mistaken.

Which is the point that I’m making: you know it’s Obama, so you see it without doubt. You lose the sense of unreliability anyways, because your brain alters the data you see to match your pre-conception about it anyhow. You are definitely sure that this is Obama even though pixelating the picture of the computer generated man could produce the exact same pixels.

So how do you know it’s a picture of Obama and not the other guy? You don’t, so take a look at the picture and try to see it not as Obama. Can you do it? Seems impossible, doesn’t it?

This is btw. the same effect as how in languages, the brain tries to match all the sounds it hears into the native language, so you may actually hear different sounds than what the other person is saying because your brain is “rounding it up”. It takes a lot of unlearning to actually hear what is being said, because your brain is constantly trying to shoehorn the information into what it expects to hear.

So the false sense of certainty is already built-in. Having a computer generated image that looks “too real” is no worse, and it can actually help with a lot of biases where people fill in details according to their expectations rather than what the data actually supports.

I disagree. While people would say “That’s definitely Obama”, if you told them it wasn’t, they WOULD look at the picture again, and then say “then whoever it is sure has a similarly-shaped face”. The only reason our doubt is reduced is that this is a famous person. It’s like the picture of Lincoln done in only a few dozen pixels – you say, Oh, I see it, it’s Lincoln. But if you showed them an alternative picture that it resolves to, then they would think it looked like that.

By the way: The Obama picture is clickbait. I don’t believe it was enhanced by a neural network – I think this was done by hand, looking for pictures of people who looked like the original, or it may have been done with a “make me look like this famous person” app just by morphing. I got suspicious because in the “after” picture, he isn’t wearing a white shirt. So I tried pixelating the “after” picture to the same level as the Obama picture, and it looked nothing like Obama, nor does it look anything like the “before” picture. In fact, if you just scale down this picture to 1/8 size (the size of the pixelization), they are very clearly different people. It’s a pretty poor algorithm if the output doesn’t even map back to the original data.
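That “map back” check is easy to sketch. The following is a minimal illustration, assuming grayscale images stored as nested lists and simple block averaging as the pixelation step; the function names and the sample values are hypothetical and are not taken from the images in the article.

```python
def downscale(img, k):
    """Average each k-by-k block of a 2D list of gray values."""
    out = []
    for by in range(0, len(img), k):
        row = []
        for bx in range(0, len(img[0]), k):
            block = [img[y][x]
                     for y in range(by, by + k)
                     for x in range(bx, bx + k)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def consistency_error(low_res, candidate, k):
    """Mean absolute difference between the low-res input and the
    candidate high-res image after it is scaled back down by k."""
    small = downscale(candidate, k)
    diffs = [abs(a - b)
             for row_low, row_small in zip(low_res, small)
             for a, b in zip(row_low, row_small)]
    return sum(diffs) / len(diffs)


# Hypothetical 2x2 "pixelated" input and two 4x4 candidate outputs.
low_res = [[20, 210], [100, 60]]
faithful = [
    [10, 30, 200, 220],
    [30, 10, 220, 200],
    [100, 100, 50, 70],
    [100, 100, 70, 50],
]
unfaithful = [[200] * 4 for _ in range(4)]

print(consistency_error(low_res, faithful, 2))    # 0.0
print(consistency_error(low_res, unfaithful, 2))  # 107.5
```

A low error only says the candidate is consistent with the pixels; it does not say the candidate is the right face. A high error, though, is a fair reason to distrust the pairing.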

Dude: I don’t want to pick on you, but I’ve heard that many times, that people lose the ability to hear sounds that aren’t part of their native language. But is there any evidence of this? I think it may be the very opposite of that: they GAIN the ability to differentiate between sounds in their native language that are infinitesimally close to each other in actual harmonic content. If you listen to vowels that are allegedly distinct from each other, in isolation, that is, not in words, it is almost impossible to distinguish some of them from each other. And even aside from this, sometimes we THINK we hear sounds that just aren’t there, because they are there in the spelling, but silent! For example, a ‘t’ sound that is closer to ‘d’, (called a ‘flap’). Take the words “city” and “water”. Most Americans pronounce these more like “ciddy” and “wadder”, and don’t even notice until they hear someone from Britain actually pronounce the ‘t’. And even then, they don’t really know what was different, just that it was a “funny” pronunciation. I noticed when I was in Australia that there they pronounce the numbers thirteen through nineteen with a flap, sounding like ‘thirdeen’ through ‘ninedeen’.

I am fully aware that there are sounds used in French that I have a hard time distinguishing, but I attribute that to the fact that these sounds fall within the range of sounds that in English all mean the same thing. Sorry not to have an example of this one, but my point is, we can actually HEAR the difference, it’s just that we group together sounds that different English speakers use for the same symbols. I know we can hear the difference because this is one of the things that makes up regional accents. We can hear the difference, but we know what they are saying without having to think too hard about it.

It’s kind of like colors: there are many shades of pink, and we can discriminate many shades of pink from each other, but it isn’t apparent because we don’t have that many NAMES for the shades of pink.

Everyone looking at the pixelated image of a man clearly of black descent sees Obama because he is hugely well known and probably the only face they know that looks even remotely like it. But if they know an Obama lookalike personally, they are more likely to see that person (unless the clothes give it away). Which is why, when you have blurred suspect images from CCTV etc., you will always ask the victim and/or their friends and relatives, then the local community – we are good at fitting limited data to spot the face we know, and with that context the odds are good that the person it might be is actually who it is.

In a trial or police investigation, a high degree of similarity can get you looked at, if you are unfortunate. But if you were clearly miles from where you are supposed to have been, etc., it’s instantly clear that the guy in the blurry image who looks like he might be you isn’t. It’s pretty much impossible to convict off just poor footage – you would need heaps of other circumstantial evidence or verifiable proof to make one blurred shot that happens to look like a suspect into ‘real’ evidence.

The AI takes all that away – if it’s used and happens to match the suspect, nobody will suspect it could be wrong, as it looks flawless. But it clearly isn’t – for one thing, if that image pair is to be believed, it turns a black guy really, really white, which no human would do with that image. A human could well guess a little darker or lighter than his real skin tone, but not enough to change from African-style dark tones to pure bloody white! At worst the human might look at it and guess it’s an Asian or Middle Eastern style darker tan…

You are quite correct that humans are great at trying to fit what was said/seen into their normal, which can be useful when dealing with partial data, but it’s not the whole story. A human with incomplete data will say it looks or sounds like x, and think it was, until they have better data. But that doesn’t mean they won’t correctly identify the actual target later if given other inputs (and usually we know when we didn’t see/hear it properly: we filled in the blanks based on past experience, but are not that certain we saw/heard it right in the first place).

This AI system takes all that away: the result is too clearly a particular person, and is basically always going to be completely wrong (the potential number of matching faces is infinite, and it’s giving exactly one very specific face – the odds of being even close are not good).

It’s the same reason most plant identification books still use drawn/painted images and not photographs – photographs show too much extraneous detail that identifies that specific specimen of the species, whereas the artist takes only the universal, important details, creating an instantly recognisable template for all specimens of that plant. If you created that kind of artist’s sketch of Obama and of the white guy the AI generated there would be differences, but hold either of them up to a real photo and the human would see the match… But they won’t see the match when the basics are all wrong: the AI has given the wrong skin colour, and the shapes and relative positions of the eyes, nose and ears are all wrong – close, but wrong. So because the AI image looks real, the two can’t possibly be the same person, as our highly developed facial matching skills rightly reject it. If that weren’t so, identical twins would rarely be separable – you would need a scar, for example, to distinguish them. But ask anybody who really knows such duplicates: even though you might not know how, you know which is which after a while (at least as long as you keep in touch so your face map is updated). I grew up with triplets for friends, and a pair of twins too, and always knew which was which over the years we kept in touch. They could fool others for a while, if they tried, but I always knew, and everyone else who knew them would still figure it out relatively quickly.

They don’t lose the ability – it’s more like the rabbit and duck illusion. Unless you concentrate really hard, you only see the rabbit or the duck.

> they WOULD look at the picture again, and then say

“…no, that’s definitely Obama.”

> I don’t believe it was enhanced by a neural network

Case in point. You’re trying your best to rationalize because YOU see the first picture as Obama and you can’t shake it off.

Please don’t tell me what I’m thinking.

NO, I don’t believe it because of objective faults in the pairing of the two pictures: 1) the person in the first picture is clearly wearing a white shirt and jacket, while the second is wearing a black T-shirt, and 2) if you pixellate the second picture to the same level as the first, the two pictures don’t look at all alike. I am NOT trying to rationalize why I see the first picture as Obama – that is just a fact, and there is enough data in the pixellated picture to support that. I am just stating that any algorithm that would produce the second picture from the first is doing a very bad job. The SHAPES of the faces don’t even match. Try it yourself. Take the pair of pictures and view them at 1/8 size.

As one who looked at the pictures before reading the article I can tell you that I easily recognized the pixelated image as Obama before reading anything in the article to tip me off. The point of the article stands as far as I’m concerned.

The algorithm used could have generated thousands of different plausible faces that match the pixelated image with high likelihood. The fact of the matter is that the pixelated image is missing information. No algorithm can truly restore that missing information. It can only guess, and that guess can be wrong or misleading.
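A toy example makes the missing-information point concrete. This is only a sketch, assuming pixelation is a plain block average over grayscale values; `pixelate`, `face_a`, and `face_b` are made-up names and numbers for illustration.

```python
def pixelate(img, k):
    """Average each k-by-k block of a 2D list of gray values."""
    out = []
    for by in range(0, len(img), k):
        row = []
        for bx in range(0, len(img[0]), k):
            block = [img[y][x]
                     for y in range(by, by + k)
                     for x in range(bx, bx + k)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Two different 4x4 "faces" (hypothetical gray values).
face_a = [
    [10, 30, 200, 220],
    [30, 10, 220, 200],
    [100, 100, 50, 70],
    [100, 100, 70, 50],
]
face_b = [
    [20, 20, 210, 210],
    [20, 20, 210, 210],
    [80, 120, 60, 60],
    [120, 80, 60, 60],
]

print(face_a == face_b)                            # False
print(pixelate(face_a, 2) == pixelate(face_b, 2))  # True
```

Both inputs collapse to the same four pixels, so nothing in the pixelated version can tell you which one you started with. An upscaler has to pick one, and the pick comes from its training data rather than from the evidence.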

On the other hand, stacking multiple frames of a video to get enhanced resolution is a good way to enhance image quality because each frame potentially adds new information. I’ve had some fun playing around with that before.
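As a rough illustration of why stacking helps, here is a sketch that averages many noisy captures of the same scene. It assumes the frames are already perfectly aligned and that the noise is independent Gaussian noise, which real video will not satisfy exactly; it shows only the noise-averaging part, not sub-pixel super-resolution. All names and numbers are hypothetical.

```python
import random


def stack_frames(frames):
    """Average aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


def mean_abs_error(a, b):
    """Mean absolute difference between two equal-length pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


rng = random.Random(0)  # fixed seed so the run is repeatable

# A hypothetical "true" scene of 256 gray values.
truth = [rng.uniform(0, 255) for _ in range(256)]

# 64 noisy captures of that scene (noise standard deviation 20).
frames = [[v + rng.gauss(0, 20) for v in truth] for _ in range(64)]

single_err = mean_abs_error(frames[0], truth)
stacked_err = mean_abs_error(stack_frames(frames), truth)

print(stacked_err < single_err)  # True
```

The difference from the AI approach is where the extra detail comes from: every frame is a new measurement of the same scene, so the average moves toward what was actually there.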

+1

I think you may be arguing about the wrong thing here. Eliot is not writing about the pixelated image; he is writing about using AI “enhancement”. He is writing about the “enhanced” image. If the pixelated image is offered as evidence it would be easy for the defence to say, “That could be anyone.” With the “enhanced” image it looks like someone!

Seems like you don’t know how comments sections work.

An MRI scan can detect the difference between seeing an image for the first time and recognizing an image.

The hypothetical example of this was showing an image of a murder scene. The MRI can tell the difference between someone seeing that image for the first time, and someone recognizing the image as something they’ve seen before (due to the extra high-level processing that comes from familiarity).

Reportedly, the CIA uses this. A suspect sits before a computer screen and is shown a series of seemingly random images. Included in the images is a view of the office that was robbed, and the MRI can detect that the person was in that office when they had no security clearance to do so.

That sort of thing.

There was also a trial of a policeman a couple of years ago, where the prosecution used FMRI in an attempt to show that the defendant was racist. The claim was made – by experts – that his thinking process could be read accurately by the brain scans, and because he was racist the crime should be elevated to the status of “hate crime”, with extra punishment.

(Not reporting a 3rd hand account here, I actually read about the trial and some of the claims made.)

(I totally wish I was on the jury of that trial, because I would have done jury nullification in a heartbeat. We can’t allow prosecutors to use phrenology-style mind reading to tell us what the defendant was thinking at the time.)

Oxytocin will make you trust someone, and it’s absorbed from the air (but has a very short effect – less than a minute). Reportedly, the CIA and FBI puts this in the HVAC systems of their interrogation rooms, to make the suspect trust the interviewer. This is using chemical means to extract a confession from someone who would not otherwise have confessed, but there’s no way to prove that this method was used.

Parallel construction is a technique where the police get inadmissible evidence, and then back-construct a different technique that would make the evidence admissible. For example, tell a different officer “be at a certain intersection, look for a specific vehicle, stop it and do a search”. The 2nd officer is not involved with the 4th amendment violations, so the evidence is considered admissible.

Media suppression can be used to hide a crime perpetrated by a political candidate, taking the transgressions out of the public eye so that there is no outrage or calls for prosecution. For example, a laptop containing child pornography with a paper trail, E-mails and texts from multiple cell phones, confirmation from more than one recipient of the E-mails, eye-witness testimony from an ex-partner, and financial records including amounts, banks, and dates of transfer can be completely ignored by the media, commentary can be suppressed by search engines, and online discussion can be demoted or outright banned. (This, despite the evidence implicating a high-ranking politician in 3 federal high-sentence crimes based on clear statute wording and explicit evidence.)

Lots and lots and lots of technology will have a big impact on justice, and we as a society need to sort it out.

On the flip side, so many alternative facts and conspiracy theories (some even suggesting collusion and corruption of ALL the doctors, politicians, and judges in the world) are getting shared by top leaders, and people actually start believing them. This is leading to an ever-increasing number of politicians getting swayed by the popularity of these conspiracy theories and perpetuating the same falsehoods. This erodes trust in current justice system and make people turn to echo chambers.

“This erodes trust in current justice system and make people turn to echo chambers.”

Good, honestly. Having a universal mass culture is a bad thing; let’s break up the monopoly.

Death to the tyranny of reality!

“Reportedly, the CIA uses this. A suspect sits before a computer screen and is shown a series of seemingly random images. Included in the images is a view of the office that was robbed, and the MRI can detect that the person was in that office when they had no security clearance to do so.”

Yeah, that’s so much simpler than putting a lock on the door and using a surveillance camera like every 7-11 everywhere.

“I read about” ? I’m throwing the “thirdhand account” flag on that play.

Far too many “reportedlys”. Reportedly, the FBI sprays Bat Love Gas into the room [and it doesn’t affect the interrogators?].

Probably the tough part is getting your suspect to submit to an MRI. It’s kind of hard to do surreptitiously.

Also, please leave your political poison at the door, before coming here.

The flipside of this is also interesting: Our brains are neural nets and incredibly good at filling in missing details. This is based on a given person’s lifetime experiences, hence analogous to the training of these artificial neural nets. So, this analogy is food for thought on how unreliable and biased eye witnesses can be.

Yes, and our brains are similarly capable of coming to the wrong conclusion. Which is one of the reasons eyewitness testimony is less than perfectly reliable.

Malcolm Gladwell’s book “Blink” is an interesting read about the subconscious brain’s ability to make split-second determinations. Sometimes they tend to fare better than more considered decisions, and sometimes they unfortunately are the wrong conclusion. In some cases it’s similar to (and probably operates with) confirmation bias. Sometimes the results can be really tragic. This all happens in the subconscious, so it’s very difficult (impossible maybe) to be objective about the accuracy of your own assessments.

I think there is ample possibility for compounding effects as AI gets thrown into the mix. Image enhancement AI is one thing, but I don’t think it would be a stretch to also feed it parameters from eyewitness accounts like race and age, so that it’s no longer simply an image processing program but also compromised by the same biases and misinterpretations as the humans that provided the eyewitness accounts.

I’ll also point out that when I first saw the pixelated image, I was 100% sure that was Barack Obama. After seeing the second image, I don’t see it the same way. I still know consciously that it is him, but after visually associating the second image to it, my perception is altered—maybe enough to alter my perception of reasonable doubt were I on a jury. Does that mean that my own ability to process and recognize the subject is great and the AI fake is simply making me doubt? Or does that mean it’s actually not that great and I’m just associating it with something familiar, and I should not be so confident in myself?

So, according to you, ‘almost nobody’ ~= 300,000 people in the U.S. alone.

Also, who cares if someone “only” has a few more years to live, but “dies early” from complications related to covid? Who are you to qualify someone else’s life, regardless of its length? Everyone has a finite lifespan, and it isn’t the same for everyone. If someone were to murder a 90 year old man would you defend them with your same reasoning (i.e. the victim was living on borrowed time already, so the murderer shouldn’t be punished as severely)?

While statistics are great and all, 300,000 people don’t magically cease to matter because they make up less than 0.1% of the U.S. population. Just like the LGBTQ community doesn’t magically cease to matter because they make up “only” ~5% of the population (at least). What percentage of the population has to die before you’d consider covid a threat?

(I’m assuming you’re in the U.S. based on your use of English and general attitude regarding covid, so I focused my numbers there. Feel free to correct me if I’m wrong.)

Agreed. Anyone who tries to pass off that “they’re almost dead anyway” argument has really drunk the Kool-Aid. And that’s giving the benefit of the doubt. In reality, to be able to say that is to confess your utter lack of humanity.

If Foldi-one has any particle of good within him, this would be the place to recant his horrible, horrible statement.

Or what about another example, where the scrutiny of a court and protective skepticism of a defense attorney isn’t there, such as when police are trying to establish probable cause for an otherwise impermissible search or seizure? In that case you wouldn’t ever have protection for the accused as the only driving effort would be by law enforcement to establish cause for a search warrant.

Sounds like AI professionals could be making * big town money* for testifying in courts for defense lawyers. If probable cause is thrown out then….

The question is whether law enforcement officers will be fully honest about (or even aware of) image modifications from AI, or even just pixelation errors, leading to the warrant as a basis for a trial.

What makes this a serious problem is that while human brains make similar mistakes when filling in the blanks of insufficient sensory data, human brains don’t produce photo-realistic renderings of their misinterpretations. If a human witness identifies a suspect, on cross-examination the defense could ask “how far away were you from the suspect?” When looking at an AI-enhanced image, the question may not even come to mind, because the interpretation looks like a perfectly clear photograph.

Only tangentially related, but the South Park guys put out a deepfake web series on YouTube called “Sassy Justice” [1]. For a few of the people in the episode they even generate a fake voice. They are well done enough that I could absolutely see myself getting fooled, and even for a couple of these with people I wasn’t familiar with I wasn’t so sure. Cool stuff. Zuckerberg the dialysis king [2] is hysterical and worth watching if you don’t have time for the full episode.

[1] https://youtu.be/9WfZuNceFDM

[2] https://youtu.be/Ep-f7uBd8Gc

What I find interesting about the example is the racial bias. It would seem the algorithm was trained with a data set that is predominately biased towards Caucasian skin types. The picture is obviously Barack Obama as found here: https://www.biography.com/.image/t_share/MTE4MDAzNDEwNzg5ODI4MTEw/barack-obama-12782369-1-402.jpg

Yes, the lighting lends a lighter tone to his skin, but the algorithm chose to interpret that as shadowing on the face rather than skin tone.

While the neural net was “programmed” by “seeing” data, it misses some of the more obvious classification characteristics that a programmed approach wouldn’t miss.

The weird irony is that in situations like this, the neural net’s racial bias could actually work for black people, if such a neural network were employed to analyze surveillance footage. Then black people would become essentially invisible to the police.

It’s actually rather hard to tell the difference between a light black person and a tan white person, because there isn’t any. Race is a social construct.

Ah, yes, a social construct. If we all stopped thinking white and black were different, then “white” blond parents would give birth to kids with tanned skin and dark, frizzy hair, and “black” parents would give birth to kids with light skin and straight, blond hair.

Honestly, this is the stupidest thing I’ve read in ages.

Yes, racism is evil.

Yes, there’s variation between skin tones in “white” and “black” groups, and an overlap between the extremes of these. And there are no clear delimiters between these groups.

That does not make race a social construct. Racial groupings, perhaps, but not race.

Any more than “height” is a social construct. Grouping people into “Tall” and “short”, sure, but not “height”.

The reason why race isn’t a scientific category is because it doesn’t predict anything. You can have a person who is “black” and they can have a skin tone that is indistinguishable from “white”. This is because the racial category is socially determined, not according to their biology.

Go to Brazil and watch as black people call themselves white, and white people call themselves black – because it’s a sociopolitical class. It’s the same thing in the US, only with slightly different rules – like one drop and you’re it. Just because dark skinned parents give birth to dark skinned children doesn’t give a fundamentally social category any biological or scientific merit.

Where does the hill start and the valley end? What do you call people living there?

More to the point, “race” isn’t a useful concept scientifically because it actually relies on differences that are only outwardly visible, like skin tone, or differences that do not depend on the individual – like their ethnicity. For these reasons, two people put together in the same “race” may not have anything to do with each other, genetically, ethnically, socially…

Race isn’t a scientifically valid concept because it lacks the power to predict anything. You can place an individual into a race by various criteria, but you can’t predict what the individual is like out of the assumption of that race because it is so ill defined. I.e. you can’t know which of the various arbitrary and mostly socially constructed criteria apply to this individual.

“Where does the hill start and the valley end? What do you call people living there?”

So by your own conclusion, this means hills and valleys are meaningless concepts, right?

” The picture is obviously Barack Obama as found here: https://www.biography.com/.image/t_share/MTE4MDAzNDEwNzg5ODI4MTEw/barack-obama-12782369-1-402.jpg”

I’m not disagreeing with the point of your post, but I would not say that is the original picture. The pixelated picture has a blue background and the picture you linked to has a white background. Also, the pixelated picture shows the shirt collar being much lighter than the background, and in the picture you linked the shirt looks a shade darker than the background.

I think racial bias is a hard sell here. Barack Obama’s mother is Caucasian and his father is African. If it had generated an image that appeared to be a black man, I could make the exact same argument that it was biased because it failed to identify that a half-white man was a white man.

FYI, exchanging the identities is a very useful thought exercise when talking about racial and other biases. It can help separate what we feel from what is rational.

Also I’m curious what you think the more obvious classification characteristics are (in this particular image). If you hadn’t previously seen a similar portrait of Obama would you really say “oh that’s obviously a black guy?” Trying to be objective, I’d probably have said he’s maybe Latino or middle eastern ethnicity.

Well, fingerprints, lie detectors and DNA had to go through the same process. The courts will determine whether they will accept this as “evidence” or not; we’ll just have to wait and see.

Let’s just hope not too many wrongfully convicted suffer before a ‘resolution’ is found.

Lousy example. The pixelated and ‘enhanced’ images are similar but not the same subject. Pixelated has a white collar. Ignoring that, it makes no difference since fact and fiction now carry the same weight in the media or in court. Media now cares only about advertising revenue. Truth is irrelevant. Entertainment value is important. Juries are not selected for competence or lack of bias. They are selected for stupidity and conformance to some politically correct composition. Equal numbers of male/female, black/white, Asian, Hispanic, transgender, martian, marsupial, etc. Ever been called for jury duty? If you graduated from high school they try to dismiss you.

This very thing was addressed in the Kevin Costner/Gene Hackman movie “No Way Out” from the 80’s; reconstructing an image, it’s all in the hands of the programmer. They can make it look like a person, or they can make it look like a car – no matter what the source material is.

Wait. The AI didn’t catch that he was wearing a white shirt?

I am interested to know the source of the images. It’s not clear in the article how the second image was come by. It’s implied that some neural net created or selected it from the original photo, but it doesn’t say. It could also simply be a demo based on convenient photos.

I suspect it was found by an image-matching algorithm, not by a reconstruction (I won’t use the word “enhance”; too much baggage). Or simply someone noticed the images look similar and faked it.

If it was done using image matching, then it was done poorly. If you view the pair of images scaled down to 1/8 size (which is the level at which the pixelation on the upper image was done), you will see that the facial SHAPES don’t match.
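For anyone who wants to try that 1/8-scale check themselves, here is a minimal sketch in plain Python. It assumes grayscale images stored as lists of rows and uses simple block averaging as the pixelation step; the actual pixelation used for the article's image is not stated, so that is an assumption. The names `downscale` and `mean_abs_diff` and the two synthetic test images are hypothetical, made up for illustration.

```python
def downscale(img, factor=8):
    """Block-average a 2D grayscale image (a list of rows) by `factor`."""
    h, w = len(img), len(img[0])
    out = []
    for by in range(0, h - h % factor, factor):
        row = []
        for bx in range(0, w - w % factor, factor):
            block = [img[y][x]
                     for y in range(by, by + factor)
                     for x in range(bx, bx + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def mean_abs_diff(a, b):
    """Average per-pixel absolute difference between two same-sized images."""
    total = sum(abs(pa - pb)
                for ra, rb in zip(a, b)
                for pa, pb in zip(ra, rb))
    return total / (len(a) * len(a[0]))


# Two synthetic 16x16 images with very different fine detail (assumed data).
flat = [[100] * 16 for _ in range(16)]
checker = [[50 if (x + y) % 2 == 0 else 150 for x in range(16)]
           for y in range(16)]

full_res_gap = mean_abs_diff(flat, checker)                      # large
low_res_gap = mean_abs_diff(downscale(flat), downscale(checker))  # zero
```

The point cuts both ways. If a candidate "enhanced" image does not match the pixelated one after downscaling, it is not even consistent with the evidence. If it does match, that still proves little, because very different full-resolution images (here, a flat gray square and a checkerboard) can collapse to the same low-resolution pixels.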

It was a GAN. https://en.wikipedia.org/wiki/Generative_adversarial_network

A first stage network takes the pixels as a seed and tries to make a face, while a second network tries to tell if it’s a generated face or a real picture. Repeat until it converges, and you have a model that’s very good at tricking your discriminator.

It’s not “matched” to the pixels per se, but it used them as the seed.
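The generator-versus-discriminator loop described above can be sketched in a few lines. This is a toy, not the method used for the image in the article: it assumes one-dimensional "images" (single numbers), a linear generator, a logistic discriminator, and random noise as the seed instead of pixels. Every name and parameter here (`train_toy_gan`, `steps`, `lr`, the real-data distribution) is hypothetical.

```python
import math
import random


def sigmoid(t):
    # Clamp the input so math.exp cannot overflow.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(steps=2000, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on 1-D data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b
    w, c = 0.0, 0.0  # discriminator: p(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # assumed "real photo" distribution
        z = rng.gauss(0.0, 1.0)     # the seed fed to the generator
        fake = a * z + b

        # Discriminator step: ascend log d(real) + log(1 - d(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: ascend log d(fake), i.e. try to fool the
        # freshly updated discriminator.
        d_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - d_fake) * w
        a += lr * grad_fake * z
        b += lr * grad_fake
    return (a, b), (w, c)


(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
```

Note what the generator's update rewards: only the discriminator's verdict on whether the output looks real. Nothing in this loop measures whether the output resembles whoever was behind the seed.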

That being the case, this would be a completely invalid way of identifying people. If the algorithm’s sole objective is to convince another algorithm that it is looking at a real photograph, then all it would need to do is submit a real photograph, unrelated to the seed. But even if it does actually follow some path that is actually related to the seed, the only thing this would guarantee is that PEOPLE looking at the resulting bitmap would conclude that it was a real photograph, NOT necessarily a photograph of the person in the seed. This is exactly the problem I posed in a different thread in these comments: by removing doubt that we are seeing a real photograph, the algorithm erroneously, or even maliciously (depending on the programming) presents an image meant to deceive the viewer into overestimating the quality of the photo. Clearly I’m missing something here. Or not, depending on what side you’re on: if the AI really DID process that low-res picture of Obama, and came up with that result, most viewers would never identify the person in the processed photo as Barack Obama. Either way, no litigant would be able to use such images in court without well-justified objections from their opponents.

Thanks for the explanation.

As an illustration, I think the generated image works perfectly. Misapplied, neural nets are capable of producing incriminating evidence for an innocent person, and that’s the problem.

But imagine if such a tool were applied to an image of someone with a really unique feature like a strange piercing or a tattoo. The presence of something like that would probably make it more difficult to trick the second network into thinking it’s real, so it might remove it in an attempt to better fool it. In that case it’s actually removing information.

And that makes total sense when you see how much of Obama’s distinctive qualities were removed from the seed image to produce the output. Which is why it looks nothing like him. In other words, it’s not random variation produced by the neural net simply trying to fill in gaps—it’s actively removing anything unique to the original subject.

It can be argued that an enhanced image is the visual equivalent of hearsay and therefore is inadmissible as evidence.

Even further, how are police drawings of suspects allowed? You’ve got witnesses telling a police sketch artist what the person looked like, and then the drawings are literally generated based on this account.

I don’t think that police sketches ARE allowed in court. As I understand it, these are used only by police in FINDING a suspect, not in court. In court, you ask the witness on whose responses the police sketch was made, not the sketch.

In the same way, sure you could use an enhanced photo to ask people “have you seen this guy?”, but if as a result you bring in a white guy who looks nothing like Obama, and then compare him to the low-res photo taken by the security camera, you’re going to realize that your case is pretty weak, because all you’re going to be able to take into court is that security camera image.

They are used to narrow down the number of suspects to be investigated by objective means; on their own they are just as worthless.

Should you demand to see the original?

Not only the “original” (whatever that means in an electronic age), but also the complete and documented chain of custody.

This is what will probably keep this from ever being seen in court. Once the jury sees the 12 pixels that are actually all that were captured of the suspect, they won’t pay much attention to the 8×10 color glossies with the circles and arrows and a paragraph on the back of each one. Because blind justice.

The question is whether the jury is ever told it’s been enhanced.

I’ll bet in China they’re not. Especially when their government already publishes photoshopped images of the Australian army to the international public arena, where they’re subjected to actual scrutiny.

No, it never gets that far, because the defense by then knows that there is such a thing, and asks for the original photograph, then argues that the enhanced image would be prejudicial because while it looks like a photograph, it is actually a rendering made by an algorithm, and therefore not evidence. This would be roughly equivalent to the testimony of polygraph charts.

I mean, you can get anything you want…

..excepting Alice.

Sounds good to me!

Conversely, there are times when computer-controlled vision is our friend. ‘Expert’ witnesses, that is, LE personnel, have the same issues of memory and image interpretation as a citizen witness or juror.

To wit, my vehicle records data from two cameras, and some accelerometers, and some other stuff, and was used to dismiss a ticket with charges serious enough to cause license suspension. A sheriff’s deputy had issued a ticket to my nephew that not only would be difficult to do per Newtonian physics, but should not have been observable per his reported position. I do not believe that the officer had intentionally provided incorrect information, but that he was up against human limitations vs his LE experience. The officer later admitted that he was (internally) convinced of a legitimate citation, and was happy that the magistrate immediately dismissed the case.

Humans cannot trust their memory where vision and hearing are the principal elements.

Not really, Dude, “race” can predict a huge array of things, because the reason folks have similar skin colour is the same reason you can be more prone to this or that cancer, alcohol sensitivity, asthma, diabetes, allergies – who you’re descended from changes your genes, which changes your susceptibility to life’s little gifts…

For exactly the reasons you point out, “enhanced” images should never be allowed as evidence in a criminal trial.

The “enhancement” is actually “thinking” for the juror. You might as well let an AI determine the defendant’s guilt or innocence.

And why stop there? Why not determine who is going to commit a crime in the future, and incarcerate them preemptively?

+1 Excellent point.

That’s definitely Josh Meyers, doing a spot-on impression of Obama.

This is no different than a number of other pseudo-science claims put forth over the decades. FBI Bullet Lead Analysis has been proven baseless, as have a number of other techniques claimed over the years. Unless the prosecution can put forth a randomized test, where they don’t use pre-selected photos, I’m going to consider it snake-oil.