It was a trope all too familiar in the 1990s — law enforcement in movies and TV taking a pixellated, blurry image, and hitting the magic “enhance” button to reveal suspects to be brought to justice. Creating data where there simply was none before was a great way to ruin immersion for anyone with a modicum of technical expertise, and spoiled many movies and TV shows.

Of course, technology marches on and what was once an utter impossibility often becomes trivial in due time. These days, it’s expected that a sub-$100 computer can easily differentiate between a banana, a dog, and a human, something that was unfathomable at the dawn of the microcomputer era. This capability is rooted in the technology of neural networks, which can be trained to do all manner of tasks formerly considered difficult for computers.

With neural networks and plenty of processing power at hand, there have been a flood of projects aiming to “enhance” everything from low-resolution human faces to old film footage, increasing resolution and filling in for the data that simply isn’t there. But what’s really going on behind the scenes, and is this technology really capable of accurately enhancing anything?

An Educated Guess

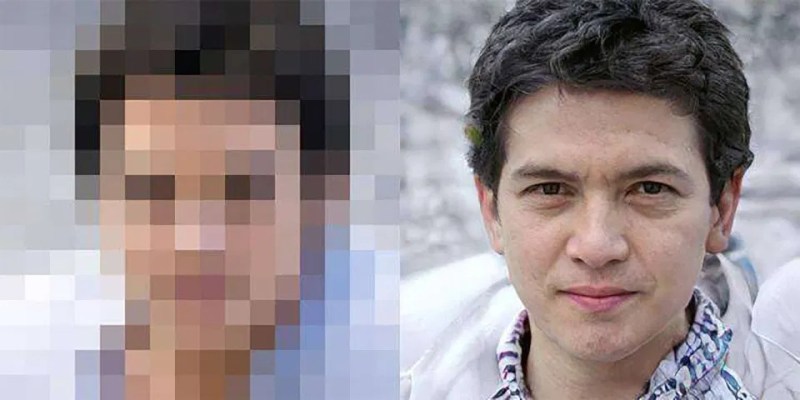

The important thing to note about this technology is that it’s merely using a wide base of experience to produce what it thinks is appropriate. It’s not dissimilar from a human watching a movie, and guessing at the ending after having seen many similar tropes in other films before. There’s a high likelihood the guess will be in the ballpark, but no guarantee it’s 100% correct. This is a common thread in using AI for upscaling, as explained by the team behind the PULSE facial imaging tool. The PULSE algorithm synthesizes an image based on a very low-resolution input of a human face. The algorithm takes its best guess on what the original faces might have looked like, based on the data from its training set, checking its work by re-downscaling to see if the result matches the original low-resolution input. There’s no guarantee the face generated has any real resemblance to the real one, of course. The high-resolution output is merely a computer’s idea of a realistic human face that could have been the source of the low resolution image. The technique has even been applied to video game textures, but results can be mixed. A neural network doesn’t always get the guess right, and often, a human in the loop is required to refine the output for best results. Sometimes the results are amusing, however.

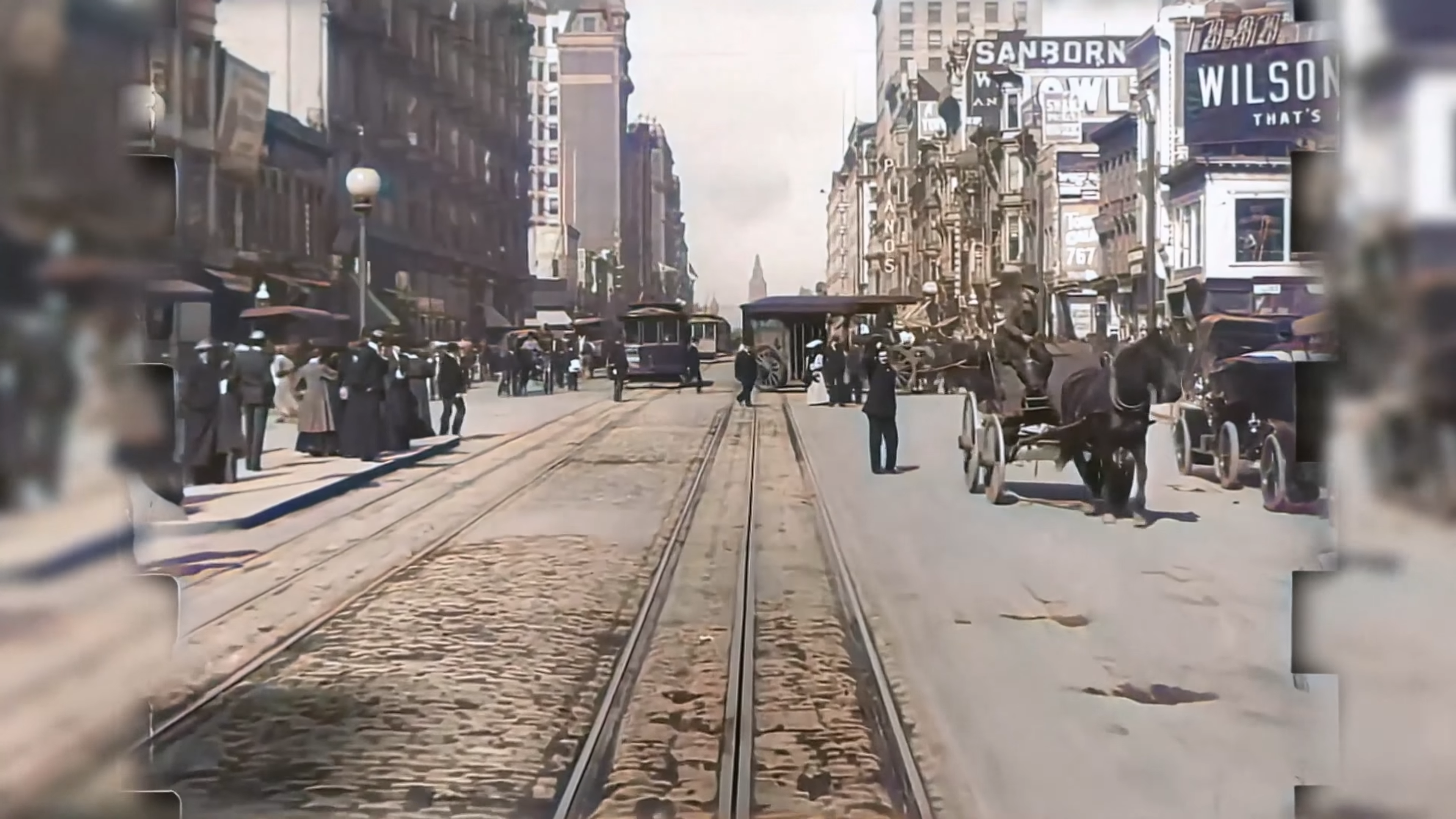

It remains a universal truth that when working with low-resolution imagery, or black and white footage, it’s not possible to accurately fill in data that isn’t there. It just so happens that with the help of neural networks, we can make excellent guesses that may seem real to a casual observer. The limitations of this technology come up more often then you might think, too. Colorization, for example, can be very effective on things like city streets and trees, but performs very poorly on others, such as clothing. Leaves are usually some shade of green, while roads are generally grey. A hat, however, could be any color; while a rough idea of shade can be gleaned from a black and white image, the exact hue is lost forever. In these cases, neural networks can only take a stab in the dark.

Due to these reasons, it’s important not to consider footage “enhanced ” in this way as historically relevant. Nothing generated by such an algorithm can be definitively trusted to have basis in truth. Take a colorized film of a political event as an example. The algorithm could change subtle details such as the color of a lapel pin or banner, creating the suggestion of an allegiance with no basis in fact. Upscaling algorithms could create faces with an uncanny resemblance to historical figures that may never have been present at all. Thus, archivists and those who work on restoring old footage eschew such tools as anathema to their cause of maintaining an accurate recording of history.

Genuine perceived quality is also an issue. Comparing a 4K upscaled film from Paris in 1890 simply pales in comparison to footage shot with a genuine 1080p camera in New York in 1993. Even a powerful neural network’s best guess struggles to measure up against high quality raw data. Of course, one must account for over 100 years worth of improvement in camera technology as well, but regardless, neural networks won’t be replacing quality camera gear anytime soon. There’s simply no substitute for capturing good data in high quality.

Conclusion

Applications do exist for “enhancement” algorithms; one can imagine the interest from Hollywood in upscaling old footage for use in period works. However, the use of such techniques for purposes such as historical analysis or law enforcement purposes is simply out of the question. Computer fabricated data simply bears no actual connection to reality, and thus can’t be used in such fields to seek the truth. This won’t necessarily stop anyone trying, however. Thus, having a strong understanding of the underlying concepts of how such tools work is key for anyone looking to see through the smoke and mirrors.

Link to futurism site refuses to load with an adblocker enabled.

Welcome to the future.

Works fine with uBlock and Anti-Adblock Killer

Just need to start downloading your anti-adblocker detector blocker software soon.

Works for me and I have only loaded with the default blacklist enabled. What ad blocker are you using?

I have only loaded > I have only uMatrix loaded

Welcome to the future, where you don’t just get to take content people created on your own terms without compensating them.

>However, the resulting output is a fabrication, and not necessarily one that matches the original face in the low-resolution

This is extremly dangerous. Image the wrong person going to jail because of “enhanced” footage of some security camera. :-/ It’s like finding collisions on cryptographic secure hashes, you do not know if you found the original data or “just” a collision.

I am not sure if this statement is true, but i think improving pictures also has to do with Shannon-Nyquist: If your samplerate (resolution) is too low you will definitively loose data.

Someone ought to present “counterexamples” .. i.e., examples of original images, pixelated, then “enhanced” incorrectly .. to show that this is *in fact* fabrication, not recovery. At a quick glance, I don’t see that being done.

Something similar was done recently when it was found that a similar program did not know how to handle nonwhite people and then whitewashed them. So people ran it with low res images of people of color and shared the results. I think one of the most famous examples was with Obama

https://twitter.com/osazuwa/status/1274448630183845888

https://cdn.vox-cdn.com/uploads/chorus_image/image/66972412/face_depixelizer_obama.0.jpg

We already do that by witness testimony, where the police artist draws a picture of the suspect. The only difference here is that it’s recognizably a drawing and not a photo.

That has a massive psychological effect on the jury. That’s why the new digital suspect pictures aren’t super realistic. They’re deliberately vague enough that you can use it to recognise the real person.

If anything, it’s less likely that the algorithm would produce the correct face out of all the possible faces that fit the data, which would make the jury identify the photo-realistic version as not the accused because of the added details that distract the viewer to believe that this is a photo of a real person who looks distinctly different. In other words, it’s like a hash function. You’re very unlikely to find the password knowing only the hash code, but you can always find the hash code by the password.

What you can do is generate a few candidates and compute an average of them, which gives you a sort of generic face that looks synthetic – the only trouble there is that a generic average face looks pretty much like anyone, which is the problem that suspect sketches have in the first place – and yet they are used.

The value is to eliminate the case where the person looks remarkably different from the sketch. If the algorithm happens to generate the face of some completely unrelated real person, the police isn’t going to drag them up to court just on that evidence alone in the first place.

The police most definitely will pick up a person who doesn’t look like the suspect simply because the computer told them to. The police are idiots who can’t find better jobs. The computer is a magic box that never lies. Put those two together and you always end up with disaster.

https://www.npr.org/2020/06/24/882683463/the-computer-got-it-wrong-how-facial-recognition-led-to-a-false-arrest-in-michig

https://www.nytimes.com/2020/06/24/technology/facial-recognition-arrest.html

The police most definitely will pick up a person who doesn’t look like the suspect simply because the computer told them to. The police are idiots who can’t find better jobs. The computer is a magic box that never lies. Put those two together and you always end up with disaster.

https://www.npr.org/2020/06/24/882683463/the-computer-got-it-wrong-how-facial-recognition-led-to-a-false-arrest-in-michig

https://www.nytimes.com/2020/06/24/technology/facial-recognition-arrest.html

So I can separate the text from the links, and don’t have to wait for moderation. Interesting. Get ready for long broken collections of comments. Time to get all twitter up in this bitch.

>”They never even asked him any questions before arresting him. They never asked him if he had an alibi. They never asked if he had a red Cardinals hat. They never asked him where he was that day,”

They just up and arrested him without no other supporting evidence or cause of suspicion. Now, I think this is a case of trying to roll the blame on the software instead of racist and incompetent policemen. They didn’t need the facial recognition software to pick up a random black dude and arrest him.

> HaD don’t care of your opinion

Well, as long as you don’t criticize HaD or its editors, or present some viewpoints they don’t like. In that case they come back couple weeks later and remove your comment in silence. Wipe the slate of history like in 1984.

Akismet seems to flag comments for moderation when they have a high link-to-text ratio, b/c they look like spam.

But don’t fret, we clean out the moderation queue fairly frequently.

I think it definitely has everything to do with Nyquist sampling, the algorithms are entirely just guessing, like a human artist would if hired for the same task.

Crypto collisions, when the but strings are long enough, can be made so uncommon that it’s more likely that a cosmic rays flips the output bit that says whether it’s a match or not. Or that a perfectly sane person misreads the match, or decides to lie in court about it, and nobody checks them.

UUIDs would have about one single collision, somewhere in the world, if everyone on earth generated something like hundreds of millions, and those are only 128 bits. For quantum resistance, longer hashes are regularly used.

This assumes no flaws in the algorithms, the data fed to the hash (oh look, we only used the first 8 digits of your phone number…), and no malicious actor deliberately generating collisions.

This is not enhancement, so much as combing through a database of existing images and automatically Photoshipping them into a new picture that would match the original. No police department in their right mind would use this as an investigative tool, and a prosecutor would get laughed out of court for entering it as evidence.

A malicious deep fake as a frame-up job could be another story, but if you already have blurry security footage that looks vaguely like the person to frame, you don’t need a high tech option. A competent liar in the witness box would work just as well.

Ahem…

https://en.wikipedia.org/wiki/Facial_composite

>In the last two decades, a number of computer based facial composite systems have been introduced; amongst the most widely used systems are SketchCop FACETTE Face Design System Software, “Identi-Kit 2000”, FACES, E-FIT and PortraitPad. In the U.S. the FBI maintains that hand-drawing is its preferred method for constructing a facial composite. Many other police agencies, however, use software, since suitable artistic talent is often not available.

These “automatically photoshopped” images are used especially in the UK where,

> in extensive field use EFIT-V has shown a 40% naming rate over an 18-month period with 1000 interviews.[25] The EvoFIT system has been similarly evaluated in formal police field-trials.[26] These evaluations have reported a much higher naming rate for EvoFIT composites but, using the latest interview techniques, a suspect arrest rate of 60%. This latest police field trial has also indicated that an EvoFIT directly leads to the arrest of a suspect and then a conviction in 29% of cases.[27]

The system works by showing the witness a bunch of pictures and having them select whether it looks more or less familiar, until the result converges to something that resembles the person they saw. This is exactly what the “enhancement” AI does with the low resolution image – it finds other faces that when combined match the fuzzy version.

In other words, the police is already using this technology. Only, the AI is a mechanical turk.

When you say “no poilice department”, we are talking about the kind of people people who, in the past, have bought dowsing rods to detect explosives.

@ some guy said: “This is extremely dangerous. Image the wrong person going to jail because of “enhanced” footage of some security camera. :-/ It’s like finding collisions on cryptographic secure hashes, you do not know if you found the original data or “just” a collision.”

On the contrary. If this sort of thing becomes widespread, even if you are caught on camera robbing a bank you can’t be convicted – the perfect defense is: “Oh yeah? Prove that’s really me on the recording!”.

You talk about Shannon-Nyquist, you are thinking rationally and scientifically.

That is so old-school, you have to get onboard the hypster(ia) about ML/IA/DL to understand 2020 and beyond.

Today Shannon-Nyquist have been disrupted by ML who can now claim be better than Information Theory, after all that’s old stuff none of the people doing ML cared to learn, as it gets in the way of demos.

Nothing to see here, we have to go back to watch TV, that is where science is

fast forward, and i’m humming a song while working, someone uploads the footage to youtube and it gets blocked because the humming was enhanced back to some copyrighted song.

GANs are really a thing, but if this would be anything near true, we’d use this method to massively compress data in a lossy way and then magically recover it from 3-4 bits.

the whole outcome depends on how you train your network, and most likely the people in the training dataset will be jailed :-)

>the use of such techniques for purposes such as historical analysis or law enforcement purposes is simply out of the question

Why? This is equivalent to forensic sketch art, which is done from way less information by on plausible guesses by the artist.

If you take a fuzzy CCTV footage and “enhance” it, someone might recognize it as a particular person.

The main problem is this “enhancing” already picks one particular person, or possibly an averaging of a few people, but it looks like a definitely, specific person when it isn’t. A good police sketch, on the other hand, should communicate “We’re looking for someone who more or less looks like this”.

You can deliberately make it look more artificial if you want to.

Its not just looking photo real or not. The human artist rendering somebodies description can see in the witness reactions when they are close, which ratios are and are not right, what shape the eyes should be – so you should end up with a very identifiable image – in the same way those drawings and paintings for plant identification are usually better than a photo – all the key elements are where they need to be, but none of the noise of the real world provides distractions.

Where guessing from fuzzy CCTV based on nothing but ‘people usually look like this, so this is what that blur should look like’ type guess work is going to make a person that looks more real than the sketch but likely looks nothing at all like the subject unless that subject happens to be of the same ethinicity and age as the training model – the CCTV enhance for example isn’t going to give somebody the kink of a broken nose just backfilling on what it thinks people should look like – as people don’t tend to have a broken nose that has healed crooked, It will probably give them a more bulbous nose instead. Now with enough frames from enough angles you can statistically crunch all the blurred details and reconstitute a high confidence correct image, as that broken nose will cast shadows that across those hundreds of images let you know its badly asymetrical, will be caught in profile multiple times so you can say the nose is this long with confidence, as in every blurry image it is within this range of possible length so the middle of that pile somewhere is a small area where every image’s nose length errors overlap – giving a reasonably precise length.

The point is that you don’t necessarily have eye-witness testimonies, so you wouldn’t know of a crooked nose anyhow. Making a best guess out of a CCTV frame works better than just having a pixelated image to show around.

Not sure I would agree Dude – humans are pretty good at noticing this could be that based on incomplete data.. A computer generation will take away clues humans use to do this and give them something that looks however the computer thinks it should. By removing the traits that suggested who it really is to that human they won’t have a clue – at least unless it guesses correctly and leans into those traits.

>humans are pretty good at noticing this could be that based on incomplete data

Humans are pretty good at seeing what they want to see. The computer extracts only what is actually supported by the data. We have all sorts of “autocorrect” features in perception, where take our perceptions of the world and insert our expectations, like seeing a banana as yellow even if the actual color of light reaching your eye is green.

The Obama picture is actually a good example. You see Obama because you already know it is. Squint your eyes and the two images actually do look identical.

Humans are pretty good at seeing what they want to see. The computer extracts only what is actually supported by the data.

I suspect the problem will be that while jurors and witnesses immediately understand that a forensic sketch is just a sketch, they’ll view a synthetic photo as a true representation. Heaven help you if the algorithm regards your photo as being like one in the training dataset.

Like that time David Schwimmer stole beer in the UK.

Furthermore, using the technique for “historical analysis” is no different from artists trying to sculpt facial features on skulls of pre-historic people.

We are already confusing ourselves with actors stand in for historical people in films and TV. Wouldn’t it be better to use a computer generated reconstruction of e.g. Stalin rather than someone who just looks somewhat alike?

Enhancing for use in court is manipulative — it makes it seem like there’s more certainty about what the person looks like than there actually is.

I’m not sure how police sketches are even admissable: wouldn’t they be hearsay? Because the drawing is something that someone made based on something that someone else said about how the person looks.

Anyway, if you look at the examples of famous people, or people whose faces you already recognize, you can figure out the problem here really quickly. You’ll recognize a person you know from the pixellated source image, but when the AI “enhances” it, enough information can be changed/added that the person is no longer recognizable, even though you know who it is.

If anything, this works the opposite way of what should be required of evidence in court.

For example: the Twitter link above to President Obama: He’s totally recognizable in pixels, but the AI turns him into a higher-resolution someone-else, where you would never say that was him.

From an entertainment standpoint, this tech is amazing. In particular I’m thinking of Peter Jackson’s movie “They Shall Not Grow Old”, where he used tech like this on 100 year old footage from WWI. It may not be historically accurate with regard to exact colors and such, but he took film that was really not watchable by a modern audience because of its original and age-related defects, and brought it to life again. It was a truly amazing feat of compelling storytelling, even if it wasn’t true restoration in the archival sense.

In many ways its a good archival restoration too – its got inaccuracies from history, but so does the original source because of the technology that took the image, and damage taken in its years since then. But ‘fixing’ it much like translating a book takes something lacking to your audience and makes it understandable. You still get all the meaning the original creator of the work was trying to convey, the story they wanted to tell, and whatever was in the image.

That said if you are going to do that to historic footage it really needs as a subchannel/tacked on the end/picuture in picture the original exactly as it was when you processed it – so future processing still has the most original data to look at, and the source material is preserved as well as possible.

The main issue is where the algorithm regards something in the original as an error or artifact and replaces it with something else, like changing a rose into a daffodil. For historical accuracy then, you wouldn’t want that to happen because even things that seem irrelevant, like what kind of a flowers are growing in your garden, may be very significant.

I am sure it is only a matter of time before people start gaming the algorithm to produce the exact opposite image, or NFS images.

Seems like it would be good for some niche entertainment. Take a decent photo of yourself on your phone or webcam or whatever. Downsample it into a pixelated mess. Then use this algorithm for a laugh to see what you “look like”. If someone made a web app, I’d give it a try.

I prefer to see it as an imagination prosthesis, or virtual beer goggles.

you can’t create data that isn’t there, but some people will believe that you can. By pixelating, some of the data is lost for good, it is one way. I imagine it would be possible to calculate some kind of mathematical goodness-of-fit between candidate images that are already known and the pixelated one, and I also imagine it is possible to use multiple different pixelated images of the same person (from different angles and positions) to recreate additional detail to a certain extent.

That would somewhat depend on how its pixelated. In theory data can be preserved despite being processed, but only in certain situations – in much the same way deleting and even an overwriting of data on your HDD doesn’t definitively stop it being recoverable – you really have to overwrite it a fair few times to blur it beyond recovery, its possible some methods will leave behind enough data to fill in with very low (or even no) loss after enough sanity check post processing.

If the method is known, it may have flaws that make undoing it trivial – perhaps around the image compression, maybe in how it chooses the block colour, and maybe it leaves telltale markings in some fashion, or even has rows of real image bordering each block – nothing the eye would see, but very very useful data for reconstruction.

Even when it doesn’t have ‘easy’ flaws if the new image is not a big enough change from the original image there is enough real data to give good confidence on all the guessed pixels, potentially to the point you won’t have recovered the source image, but a recognizable image looking more like the source after its been through the touch up filters to remove camera noise etc. Even more so if its a blurred video – all those extra angles that a moving target gives on top of the multiple frames of the ‘same’ image, enough statistical work and you can theoretically at least start to pick the details out.

Never going to be the magic enhance of bad TV though, far to much computer time would be needed and done right there shouldn’t be enough solid data let points for a good restoration. At least without millions of frames to crunch – so video blurring version should create say 30 sprites for different face directions and pick the best fit each frame so there is massively less data to look at – at 24fps those 30 sprites are like having just over one second of data to extrapolate from, and you only have a few sprites on similar angle, where in a live video you will have at least 60 odd frames with nearly identical content pre-bluring but that show differences in the blur – and probably get that same angle again seconds later for heaps of frames too…

Maybe that sort of TV enhance magic would be possible IFF the camera’s CCD and LENS were well classified and sensitive enough that you can find the slight aberrations from its imperfections in other parts of the image – if the lens happens to throw a faint ghost of the area of interest elsewhere on the sensor and you know exactly where that will be you can take that and the CCD’s usual noise levels to have a good guess on more detail in the area of interest. Add in enough of those and enough images you can perhaps make it work.

“Infer” just doesn’t have the same ring to it.

Hello, Denis here (I have done those video upscales).

Im a regular reader of hackaday and was pleased to see my name mentioned here, thank you.

I want to add few points regarding mentioned problems:

You’re comparing modern raw video and Paris one upscaled with the same technique, it is a bit wrong, because now I’m training models with modified version of ESRGAN and dataset used there do not contain any “modern” data. Basically dataset influence the result In upscale tasks mostly. Paris video was done on a “low quality” input, just a compressed YouTube video, that is why results also not so great, but because of those experiments we are aware of limitations and we have ways to fix those issues a bit.

Upscale task do not “change” much inside a footage, I could say that it redraw and add some pixels, but compared to the colorization task, those “redrawing” almost unnoticeable and could be still used to represent historically accurate data.

But facial restoration (GAN based) and Colorization – two additional tasks that already could not be considered as historically accurate and I always mentioning that to my viewers. It is a modern viewing experience, but it is based mostly on datasets and on that fact how well GAN training performed for a models used in that enhancement.

For sure, it is impossible to use those GAN techniques, for example, in a law enforcement. But for modern aesthetics of an old film, it is could be more enjoyable to watch, even being historically not correct.

Algorithms will keep evolving, in the next 5 years we will have almost indistinguishable colorization (in my opinion), it is matter of time and people who doing those GAN-stuff should be clear with their audience that this data is artificial – that is why me or https://neural.love do not call our results “a restoration”, but more like “an enhancement”.

Thanks for sharing Denis, very impressive work on your part!

now if only this got put into a doom/wolf3d/etc port to make them look great again :P just imagine a engine that could make near photo-realistic beauty out of 90’s pixels…

Can’t wait till they are using this to unscramble passwords people have blurred / pixelated in videos. Bet if you knew the font you could decode it using a brute force reverse hash.

FYI use the FBI standard BLACK BAR of RNADOM LENGTH.

I worked with some early Neural network software and my first takeaway was this:

There is an old trope in SCIFI movies that goes “Computers don’t make mistakes, they have errors.”

Neural Network algorithms actually give the vomputer the ability to make mistakles in addition to having errors

lol; I’m sure ‘vomputer’ was merely an adjacent-key typo, but I like it a lot!

I thought mistakles was also good.

Ah, yes, that lesser-known Greek philosopher noted for his erroneous rhetoric.

Video forensic analysts have been able to “enhance” video for quite a while; at least when it meets certain criteria. What they can do is the equivalent of “frame stacking”, very similar to what astronomers use to improve photos.

The way it works is If the frame rate is high enough, and there is a slight relative motion between the subject and the camera, they can determine from the rest of the pixels changing when a particular point in the scene crosses a pixel threshold. This works when the subject is a live human that’s standing still, or if the camera is handheld. It doesn’t work when the subject moves more than a fraction of a pixel per frame, which happens if the subject is running, or if it’s a typical security camera storing only one or two images per second.

Another enhancement technique is the reassembly of an image from a set of images. I’ve seen footage where a vehicle was on the road passing a gas station. The gas station had an indoor security camera facing the road, but the view was obstructed by posters taped to the window. The camera picked up slivers of the image in the gap between posters. The forensic analyst was able to grab the slices and piece them together to get a full picture of the vehicle.

The analyst also used the same technique from a homeowner’s door camera to piece together the image of a car leaving the scene of a murder in a nearby suburb. A gas station had footage of the victim’s credit cards being used to purchase gasoline an hour or so after the crime. The reconstructed image of the car matched that of the car at the gas pump, placing the murderer fleeing the vicinity of the crime moments after it had happened.

Theoretically pixelated video should be easier to reconstruct than still images, depending on the subject matter. A slow side-scrolling video with fairly consistent lighting would act as a scanner, so the average values of a group of pixels between two frames would eventually reveal as much detail as the framerate allowed.

I believe that’s how folks like NASA can extract so much detail from three pixels worth of observations, by taking hundreds or thousands of photos of those same three pixels over time and averaging things out. Not sure if that technique is considered AI or just a plain algorithm.

Hopefully AI will eventually be able to use its ‘intelligence’ to figure out which algorithm can extract the maximum amount of detail encoded in the video depending on each scene presented to it.

There’s a huge difference between extracting accurate precise data and fuzzy logic, so hopefully any AI that’s developed can be instructed to perform archival, forensic, entertainment, artistic reconstruction etc to suit the task.

I guess we’re in for a new form of ‘uncanny valley’ when restoring old movies. An actor who appeared in hundreds of movies will have a more detailed pixel map of their likeness than the one-time background of a particular movie, so an actor could look photo-realistic against a horrible blurry background. This already happens with some TVs, where the edge dection software brings moving objects into focus only to disappear into the background when they stop moving. It’s very distracting, but fascinating.

All of the stupid legalese discussion aside, I could really use this for a picture I took with my Razr of my mom and her now dead cat. I would love to have that picture in (better) glory. Had zero luck on my own with my meager ‘shop skills and even tried to hire a couple of pros to clean it up but they either required an obscene amount of money for 10 mins of work or they said they couldn’t do it. Gonna give it a shot anyway. Thanks for the article HaD.

Placing the card and a similar car. That’s what you know. You can infer, reasonably, the events were related but for all you know it was a room mate going on a beer run and was allowed to borrow the ‘new wheels’ and it needed gas so he took the offered car.

Keep your facts in the fact bucket, opinions in the opinion bucket and have the grace to see that we always like our opinion in the fact bucket but expect nobody else to do that.

Cool stuff. Also shows that speed of traffic in San Francisco hasn’t changed in 114 years.