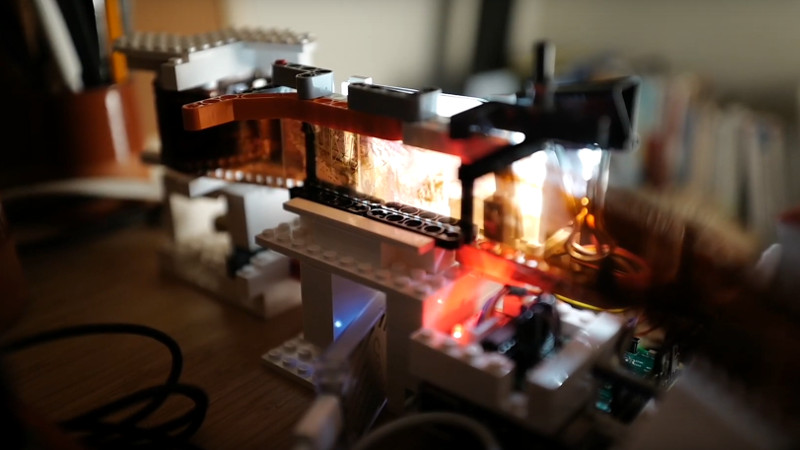

There was a time, during the early years of mass digital photography, when a film scanner was a common sight. A small box usually connected to a USB port, it had a slot for slides or negatives. In 2020 they’re a rare breed, but never fear! [Bezineb5] has a solution in the shape of an automated scanner using a Raspberry Pi and a mechanism made of Lego.

The Lego mechanism is a sprocket feeder that moves the film through the field of view of an SLR camera. The software on the Pi runs in a Docker container, and features a machine learning approach to spotting frame boundaries. This is beyond the capabilities of the Pi, so it is offloaded to a Google Coral accelerator.

The whole process is automated, with the Pi controlling not only the Lego but also the camera, to the extent of retrieving the photos from it to the Pi. There’s a smart web interface to control everything, making the process (if you’ll excuse the pun) a snap. There’s a video of it in action that you can see below the break.

We’ve featured many film scanner projects over the years; one that remains memorable is this 3D printed lens mount.

Via r/raspberry_pi.

Oh, there’s plenty of film left to scan. For my parents’ 30th anniversary, I found and had professionally scanned the negatives from their wedding album, and got reprints made. They came out WAY better and sharper than the crappy faded colors of the original prints they had in the albums. I also got an ultra high res scan of a 100 year old negative (someone had the forethought to stick it in the back of the picture frame with a tiny print) of a photo of a historic building my dad owns. Blown up to 8×10, the super sharp black and white detail left in the film was impressive.

Well, it’s cheaper than a Mongoose, but not as good as a Valoi, or even a Pixl-Latr in terms of light diffusion or masking! I also would worry about scratches…

And scanners for 120/220 film are even rarer. I might just play around with this.

Be aware that color negatives have low contrast from the digital camera’s point of view, so you end up with fewer steps from light to dark than with a dedicated film scanner. For properly exposed negatives this is not a real problem, but for over- or underexposed negs it is. Changing exposure times on the digital camera does not alter that. It just shifts the same few steps on the “digital” contrast scale. This becomes very apparent when trying to correct it in GIMP or Photoshop.

Black and white fares better, and of course slide film does have the full dynamic range of digital cameras.

I have experimented with this, using my EOS 5D as the digital camera and later the Pi hi-res camera and an enlarger lens. It turned out that my old Nikon LS-30 had way more color info but less resolution, and could handle under- or overexposed negs better than the digital camera.

I can feel myself ageing at how slow that process is.

That’s the whole point. You can take your significant other on a walk while the scanning continues.

I feel like finding the border of an image should be well within the machine learning capabilities of even an early Raspberry Pi. Doing it procedurally wouldn’t take too much programming, and would be fast on even the slowest first-generation Raspberry Pi.
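
To make the procedural idea concrete, here is a minimal sketch in plain Python. It is not what [Bezineb5]'s project does (that uses a machine learning model); it only illustrates the simpler alternative suggested above. It assumes a backlit negative strip photographed as a grayscale image, where the unexposed gaps between frames show up as brighter columns than the frames themselves. The function names, the threshold, and the minimum gap width are all hypothetical and would need tuning on real scans.

```python
def column_means(image_rows):
    """Average each column of a 2D list of grayscale pixel values (0-255)."""
    height = len(image_rows)
    return [sum(column) / height for column in zip(*image_rows)]


def find_frame_gaps(column_brightness, threshold, min_width=3):
    """Return (start, end) column ranges whose brightness is >= threshold.

    Assumption: inter-frame gaps are brighter than the frames when the
    negative is backlit. Runs narrower than min_width are ignored as noise.
    The end index is exclusive.
    """
    gaps = []
    run_start = None
    for i, value in enumerate(column_brightness):
        if value >= threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_width:
                gaps.append((run_start, i))
            run_start = None
    # Close a run that extends to the right edge of the image.
    if run_start is not None and len(column_brightness) - run_start >= min_width:
        gaps.append((run_start, len(column_brightness)))
    return gaps


# Synthetic strip: gap, frame, gap, frame, gap (values are made up).
row = [240] * 4 + [80] * 10 + [240] * 4 + [80] * 10 + [240] * 4
strip = [row for _ in range(5)]
gaps = find_frame_gaps(column_means(strip), threshold=200)
print(gaps)
```

On the synthetic strip this reports the three bright bands, and the frames are the spans between consecutive gaps. A real strip with dense highlights, uneven backlight, or dark scenes bleeding into the frame edge would defeat a fixed threshold, which may be part of why a learned detector was chosen.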

Yeah but then he couldn’t use the snazzy Cloud features and thus would have much reduced buzz word bingo value! ;-)

Using Cloud stuff increases your buzzword powa! It is quite cool though!