It seems like within the last ten years, every other gadget released has had some sort of heart rate monitoring capability. Most modern smartwatches can report your BPM, and we’ve even seen some headphones with the same ability hitting the market. Most of these devices use an optical measurement method in which skin is illuminated (usually by an LED) and a sensor records changes in skin color and light absorption. This method is called photoplethysmography (PPG), and it has even been implemented (in a simple form) in smartphone apps that generate their data from video of your finger covering the phone camera.

The basic theory of operation here has its roots in an experiment you probably undertook as a child. Did you ever hold a flashlight up to your hand to see the light, filtered red by your blood, shine through? That’s exactly what’s happening here. One key detail that is hard to perceive when a flashlight is illuminating your entire hand, however, is that the amount of blood in the tissue rises and falls with each heartbeat, so the skin absorbs slightly more or less light from moment to moment. By observing the frequency of that light-dark change, we can back out the heart rate.

This is exactly how [Andy Kong] approached two methods of measuring heart rate from a webcam.

Method 1: The Cover-Up

The first detection scheme [Andy] tried is what he refers to as the “phone flashlight trick”. Essentially, you cover the webcam lens entirely with your finger. Ambient light shines through your skin and produces a video stream that looks like a dark red rectangle. Though it may be imperceptible to us, the color changes ever-so-slightly as your heart beats. An FFT of the raw data gives us a heart rate that’s surprisingly accurate. [Andy] even has a live demo up that you can try for yourself (just remember to clean the smudges off your webcam afterwards).
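To make the FFT step concrete, here is a minimal sketch of how a heart rate could be pulled out of a brightness signal. It is not [Andy]'s code: the function name `estimate_bpm`, the 40 to 200 BPM search band, the Hann window, and the synthetic test signal are all assumptions made for illustration. The input is assumed to be one number per video frame (for example, the mean pixel value of the covered-lens image).

```python
import numpy as np


def estimate_bpm(signal, fps, min_bpm=40.0, max_bpm=200.0):
    """Estimate heart rate from a 1-D brightness signal sampled at `fps` Hz.

    The band limits are illustrative assumptions, not values from the article.
    """
    samples = np.asarray(signal, dtype=float)
    samples = samples - samples.mean()              # remove the DC offset
    windowed = samples * np.hanning(len(samples))   # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed))
    freqs_bpm = np.fft.rfftfreq(len(samples), d=1.0 / fps) * 60.0

    # Only consider frequencies that could plausibly be a heartbeat.
    band = (freqs_bpm >= min_bpm) & (freqs_bpm <= max_bpm)
    if not band.any():
        raise ValueError("signal too short to resolve the requested band")
    return float(freqs_bpm[band][np.argmax(spectrum[band])])


# Synthetic stand-in for a webcam trace: a faint 1.2 Hz pulse plus noise.
fps = 30.0
t = np.arange(600) / fps
rng = np.random.default_rng(0)
fake_signal = 120.0 + 0.5 * np.sin(2 * np.pi * 1.2 * t) + 0.2 * rng.standard_normal(t.size)
print(estimate_bpm(fake_signal, fps))
```

Restricting the search to a plausible band is one simple way to keep slow lighting drift and high-frequency sensor noise from being mistaken for a pulse.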

Method 2: Remote Sensing

Now things are getting a bit more advanced. What if you don’t want to clean your webcam each time you measure your heart rate? Well, thankfully, there’s a remote sensing option as well.

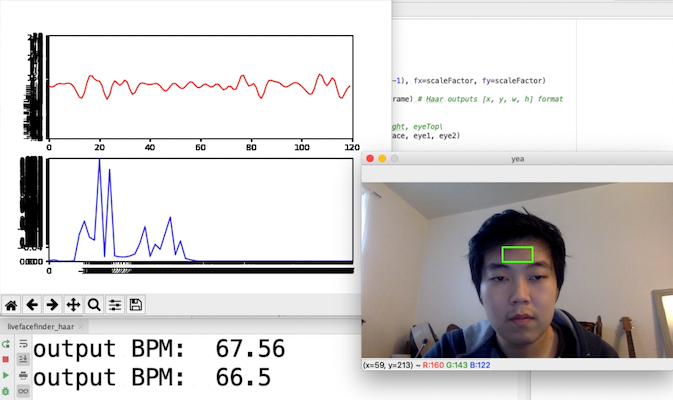

For this method, [Andy] is actually using OpenCV to measure the cyclical swelling and shrinking of blood vessels in your skin by measuring the color change in your face. It’s absolutely mind-blowing that this works, considering the resolution of a standard webcam. He found the most success by focusing on fleshy patches of skin right below the eyes, though he says others recommend taking a look at the forehead.
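As a rough illustration of the "patch of skin below the eyes" idea, the sketch below turns a face bounding box into a cheek-level region and averages the green channel inside it. The helper names `cheek_roi` and `mean_green`, and the fractions that place the patch within the face box, are assumptions chosen for the example rather than values taken from [Andy]'s script. The face box is assumed to be in the `(x, y, w, h)` form that OpenCV face detectors return, and the frame is assumed to be in OpenCV's default BGR channel order.

```python
import numpy as np


def cheek_roi(face_box, top=0.50, bottom=0.70, left=0.20, right=0.80):
    """Return (y0, y1, x0, x1) for a band of skin below the eyes.

    The fractional offsets are illustrative guesses, not tuned values.
    """
    x, y, w, h = face_box
    y0, y1 = y + int(round(top * h)), y + int(round(bottom * h))
    x0, x1 = x + int(round(left * w)), x + int(round(right * w))
    return y0, y1, x0, x1


def mean_green(frame_bgr, roi):
    """Average the green channel (index 1 in BGR) over the region."""
    y0, y1, x0, x1 = roi
    patch = frame_bgr[y0:y1, x0:x1, 1]
    if patch.size == 0:
        raise ValueError("empty region of interest")
    return float(patch.mean())


# Stand-in for one webcam frame and one detected face.
frame = np.zeros((100, 100, 3), dtype=np.uint8)
frame[..., 1] = 50
roi = cheek_roi((10, 20, 40, 50))
print(roi, mean_green(frame, roi))
```

Collecting one `mean_green` value per frame gives the kind of time series that a frequency analysis can then be run on.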

Every now and then we see something that works even though it really seems like it shouldn’t. How is a webcam sensitive enough to measure these minute changes in facial color? Why isn’t the signal uselessly noisy? This project is in good company with other neat heart rate measurement tricks we’ve seen. It’s amazing that this works at all, and even more incredible that it works so well.

Pretty much all the technologies have been developed to create a Star Trek style medical bed where a person just has to lie down and all their vitals can be sensed without any direct contact.

As long as the error rate is low enough I’m down for using it. Would be nifty to integrate it all into an overhead baby monitor. The commercial contact vitals sensors really only serve to severely anger most infants, which throws off the data. Would be nice to be able to see all that data without disturbing the infant.

Those radar breathing sensors seem interesting.

Lol, the massive word in the title forced me to turn my phone to landscape so I could read all of it. I would add it to my vocabulary, but even with my level of English literacy I had to take a moment to say it correctly.

Next step deployed at airports and train stations to …. Hello 1984, you have taken an extra 36 years to arrive, but boy did the book fail to do you justice.

Not quite yet. In 1984 Winston Smith was told if he wants an image of the future to imagine a boot stamping on a human face forever, but they’re still working on making the face like it.

This line has stuck with me since I read the book at school. In a terrifying way. So I have minimal social media presence and suspect I have paranoia.

I’m pretty sure it has been deployed for years.

I remember this being a thing back in the iPhone 3 days. Those face scanners at the airport surely do more than just photograph you.

Using video feeds to detect heart rate was impressively demonstrated by Hao-Yu Wu et al. in 2012. Check out https://people.csail.mit.edu/mrub/evm/ for code and video examples.

Perhaps a longer averaging time between FFT results would calm my beating heart, which was swinging between 60-80. Or maybe I’m still excited by Christmas?
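On the averaging point: with a plain FFT, adjacent frequency bins are 60 / T BPM apart for a window of T seconds, so a short window can only report coarse steps. The sketch below shows that arithmetic along with one possible way to steady the readout, a running median over recent estimates. The names `bpm_bin_spacing` and `BpmSmoother` and the history length of 5 are assumptions for illustration; the window length used by the live demo is not stated here.

```python
from collections import deque
from statistics import median


def bpm_bin_spacing(window_seconds):
    """Spacing between adjacent FFT bins, in BPM, for a window of this length."""
    return 60.0 / window_seconds


class BpmSmoother:
    """Running median over the most recent BPM estimates (hypothetical helper)."""

    def __init__(self, history=5):
        self._recent = deque(maxlen=history)

    def update(self, bpm):
        self._recent.append(bpm)
        return median(self._recent)


print(bpm_bin_spacing(6.0))    # a 6-second window resolves steps of 10 BPM
print(bpm_bin_spacing(30.0))   # a 30-second window resolves steps of 2 BPM

smoother = BpmSmoother(history=5)
for raw in [60, 80, 70, 72, 71]:
    smoothed = smoother.update(raw)
print(smoothed)
```

A longer window sharpens the frequency resolution but responds more slowly to real changes in heart rate, so the right trade-off depends on the use.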

I’m sure advertising agencies would love to use this for measuring engagement.

Minority Report.

– Yep – I have a hard time seeing this being used for good… anyone can easily monitor your pulse – boarding a plane, employer during interviews (salary negotiations / etc?), advertising as you say, etc…. Good for people who have no business knowing your pulse/interest/excitement level, but not good enough for someone who needs to (medical/etc) know it, where there are better alternatives.

Run it against newsreels of politicians.

For good done.

You can actually see the pulsing variation in colour with the naked eye if you use a green light source/LED and put it behind the edge of the thin web of skin between your thumb and forefinger in low ambient light conditions.

I did this a couple of years ago when I was adapting Pulsesensor.com’s pulsesensor for a remote unit using Bluetooth to pass the sensor data to an app on my Android phone. I was doing a lot of experimenting, and at one point wondered just how faint the variations were, so I tried it out, and it was surprisingly clear.

Next step: use this to mine user reaction data to advertisements.

Eulerian Video magnification

http://people.csail.mit.edu/mrub/vidmag/

One could see if the microphone picks up the swish-swish of blood moving around. And how about thermal changes.

I’m thinking about the easiest and least noticeable method of jamming a cam trying this on you. How about face lighting that constantly shifts intensity at random, minor increments?

use make up

Ski mask.

And now on to pulse oximetry using a camera with IR and visible response?

I wonder how well this copes with makeup.

High SPF sunscreen has stuff in that’s also used in radar absorptive materials, so if we assume it’s effective from VHF to UVC, you can preserve your electromagnetic emissions privacy with that if you really lard it on :-D

Wonder how well it works on dark skinned people.

Also, how well does it work for those with irregular heart beat (e.g. atrial fibrillation).

Could be handy to have something that could screen for afib (for those that don’t know they have it.) However since there is no regular pattern to the heart beats, you can’t use beat frequency to do noise reduction.

This could be a handy addition to virtual doctor visits, which are the style at the time, and sure it is not as accurate as an ECG but then again a finger on the wrist isn’t either.

Amazing technology. Might even be interesting to try if there were actually some basic instructions for installing it for those who aren’t Python proficient. I got as far as some chart displaying. It seems to try to access the web cam when I close it before it pukes out some incomprehensible error message.. I have ZERO idea what a “Harr cascade” is or where to get it.

Exception in thread Thread-1:

Traceback (most recent call last):

File “/usr/local/Cellar/python@3.9/3.9.1_2/Frameworks/Python.framework/Versions/3.9/lib/python3.9/threading.py”, line 954, in _bootstrap_inner

self.run()

File “/usr/local/Cellar/python@3.9/3.9.1_2/Frameworks/Python.framework/Versions/3.9/lib/python3.9/threading.py”, line 892, in run

self._target(*self._args, **self._kwargs)

File “/Users/jcw/Downloads/face_tracking_ppg.py”, line 133, in readIntensity

face = getFace(frame)

File “/Users/jcw/Downloads/face_tracking_ppg.py”, line 51, in getFace

faces = face_cascade.detectMultiScale(gray, 1.3, 5)

cv2.error: OpenCV(4.4.0) /private/var/folders/nz/vv4_9tw56nv9k3tkvyszvwg80000gn/T/pip-req-build-wv7rsg8n/opencv/modules/objdetect/src/cascadedetect.cpp:1689: error: (-215:Assertion failed) !empty() in function ‘detectMultiScale’

Traceback (most recent call last):

File “/Users/jcw/Downloads/face_tracking_ppg.py”, line 179, in

ROI = frame[bb[0]:bb[1], bb[2]:bb[3], 1]

TypeError: ‘int’ object is not subscriptable

Ignore this. It wasn’t supposed to be posted. I’ve flagged it, so hopefully it’ll get deleted.

Any clues how to get this running? No idea if it’s supposed to be Python2 or Python3. ‘pip’ is for Python 3, installed openCV, etc, but it barfs with some error that I have no idea what it means.

$ python3 face_tracking_ppg.py

Traceback (most recent call last):

File “/Users/jcw/Downloads/face_tracking_ppg.py”, line 179, in

ROI = frame[bb[0]:bb[1], bb[2]:bb[3], 1]

TypeError: ‘int’ object is not subscriptable

$
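For anyone hitting the earlier `!empty()` assertion in `detectMultiScale`: in OpenCV that message generally means the cascade classifier was never loaded, usually because its XML file was not found. A Haar cascade is a pre-trained detector (here, for faces) stored as an XML file. The sketch below shows one way to load it, assuming the `opencv-python` pip package, which ships the stock cascades and exposes their folder as `cv2.data.haarcascades`. The function name `load_face_cascade` is hypothetical, and how the original script locates its cascade file is not shown in this thread.

```python
import os


def load_face_cascade(filename="haarcascade_frontalface_default.xml"):
    """Load a stock Haar cascade and fail loudly if it is missing.

    Assumes the opencv-python wheel, which bundles the cascade XML files.
    """
    import cv2  # imported here so this file can be read without OpenCV installed

    path = os.path.join(cv2.data.haarcascades, filename)
    cascade = cv2.CascadeClassifier(path)
    if cascade.empty():
        # Without this check the failure only shows up later, inside
        # detectMultiScale, as the "!empty()" assertion.
        raise FileNotFoundError(f"could not load Haar cascade from {path}")
    return cascade
```

Checking `empty()` right after construction turns the cryptic assertion into an error that names the missing file.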

I had to add additional delay because apparently the main loop doesn’t wait for image data to be set by a thread. Add “time.sleep(3)” under the line with t1.start(). I also had to remove the whole plot/animation thing because I couldn’t get it to work under Windows – it just blocked on plt.show().

But anyway – it works, crashes if I look at the webcam in a wrong way (that could be fixed) and most of the time reports half the BPM I expect. Also, it deviates a lot from the BPM reported by a finger pulse oximeter.
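As an alternative to a fixed `time.sleep(3)`, the main loop could wait until the reader thread has actually produced data. Below is a minimal sketch of that pattern using `threading.Event` from the standard library. The `FrameReader` class and the `fake_camera` stand-in are hypothetical and do not mirror the structure of the original script; a real version would read from the webcam instead.

```python
import threading
import time


class FrameReader:
    """Background reader that signals when the first frame is available."""

    def __init__(self, read_frame):
        self._read_frame = read_frame      # callable returning a frame or None
        self.latest = None
        self.ready = threading.Event()     # set once the first frame arrives
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()

    def _run(self):
        while not self._stop.is_set():
            frame = self._read_frame()
            if frame is not None:
                self.latest = frame
                self.ready.set()
            time.sleep(0.01)               # avoid spinning at full speed


def fake_camera():
    """Stand-in for a webcam read; always returns the same placeholder."""
    return "frame-data"


reader = FrameReader(fake_camera)
reader.start()
if reader.ready.wait(timeout=5.0):         # block until data exists, or give up
    print("first frame:", reader.latest)
else:
    print("camera produced no frames")
reader.stop()
```

Waiting on the event returns as soon as the first frame lands, and the timeout gives a clear failure path if the camera never delivers one.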

Thanks for the reply. Still doesn’t work right, at least on a Mac. I have to close the graph window before the webcam window appears, then it gets a lock on my face, but without the graph, there’s no heartbeat output.

Seems like no matter what OS I’m on, if it involves Python, it’s a miracle if it runs at all.