The Raspberry Pi is a fine machine that appears in many a retrocomputing project, but its custom Linux distribution lacks one thing. It boots into a GNU/Linux shell or a fully-featured desktop GUI rather than, as proper computers should, to a BASIC interpreter. This vexed [Alan Pope], who yearned for his early days of ROM BASIC, so he set out to create a Raspberry Pi 400 that delivers the user straight to BASIC. What follows goes well beyond the Pi, as he takes something of a “State of the BASIC” look at the various available interpreters for the simple-to-code language. Almost every major flavour you could imagine has an interpreter, but as is appropriate for a computer from Cambridge running an ARM processor, he opts for one that delivers BBC BASIC.

It would certainly be possible to write a bare-metal image that took the user straight to a native ARM BASIC interpreter, but instead he opts for the safer route of running the interpreter on top of a minimalist Linux image. Here he takes the unexpected step of using an Ubuntu distribution rather than Raspberry Pi OS; this is done through familiarity with its quirks. Eventually he settled upon a BBC BASIC interpreter that allowed him to do all the graphical tricks via the SDL library without a hint of X or a compositor, meaning that at last he had a Pi that boots to BASIC. Assuming that it’s an interpreter rather than an emulator, it should be significantly faster than the original, but he doesn’t share that information with us.

This isn’t the first boot-to-BASIC machine we’ve shown you.

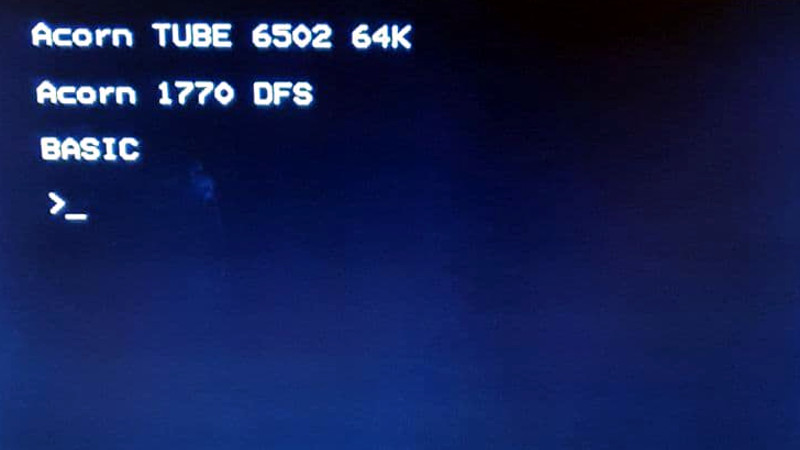

Header image: A real BBC Micro BASIC prompt. Thanks [Claire Osborne] for the picture.

I like the Raspberry Pink Look of RaspberryPi BASIC: https://www.highcaffeinecontent.com/rpi/

When the open firmware gets HDMI, HDMI audio and USB (in 2030 or so), I would love to flash it to the EEPROM of an RPi 4.

Abstraction is like onions. It has layers.

He starts off saying he doesn’t want to use emulation to get an old interpreter to work. So instead he finds a BASIC interpreter that’s written in Python. An interpreted interpreter. Facepalm.

I guess the good news is, he should get authentic 80s performance from that.

Oops – didn’t read far enough.

Interpreted interpreter like TI-99/4 and 4A console BASIC. From BASIC to GPL (Graphics Programming Language) to 9900 machine code. Extended BASIC was much faster due to skipping GPL and tokenizing many common commands, which reduced program size in storage and RAM.
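As a rough illustration of why tokenizing shrinks a stored program, here is a minimal Python sketch. The token table and byte values are hypothetical (each real BASIC used its own), and the splitter ignores quoted strings, so treat it as a toy rather than a model of any particular machine.

```python
# Hypothetical token table: real BASICs each assigned their own byte values.
TOKENS = {"PRINT": 0x80, "GOTO": 0x81, "FOR": 0x82, "NEXT": 0x83}


def tokenize(line: str) -> bytes:
    """Replace known keywords with one-byte tokens; copy everything else."""
    out = bytearray()
    for word in line.split(" "):
        if word in TOKENS:
            out.append(TOKENS[word])          # keyword becomes a single byte
        else:
            out.extend(word.encode("ascii"))  # variables, numbers, etc.
        out.append(0x20)                      # restore the separating space
    return bytes(out[:-1])                    # drop the trailing space


source = "PRINT X"
packed = tokenize(source)
print(len(source), "characters ->", len(packed), "bytes")
```

The interpreter also gains speed, since it can dispatch on a single byte instead of re-parsing the keyword text on every pass.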

It’s *BASIC*. Why complain about trivial things like interpreter recursion? And it’s still going to be orders of magnitude faster than the original machine, because you have a very warped idea of Python’s performance.

Python is roughly one to two orders of magnitude slower than compiled C++. Modern hardware makes the performance of Python acceptable, but if you need speed then you still should be reaching for a compiler.

Which really does go back to pelrun’s question. There’s no need to worry about that small a performance difference for an RPi interpreting BASIC.

If you need reliability too you should reach for a compiler; they bring up all those annoying typos which an interpreted language doesn’t notice until it reaches that bit of the code, hours after it started running.

THIS is my biggest issue with Python. Some interpreters pre-translate the source code into some sort of byte code, giving them the chance to find blatant errors like this, and to catch most syntax errors, but not Python, or at least, not CPython.

If you think it’s the “compiling step” that catches syntax errors you need to revisit your language design textbook. Oh, and Python absolutely does compile source to bytecode before execution, contrary to your statement. And you should know that the old BASICs do so too (although in a more rudimentary fashion).
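The distinction being argued over can be seen with Python's built-in `compile()` and `exec()`. This is a small sketch, with a made-up `check` helper and made-up snippets: a syntax error is rejected when the source is compiled to bytecode, before anything runs, whereas a misspelled variable name compiles cleanly and only fails when that line executes.

```python
def check(source: str) -> str:
    """Compile a snippet, then run it, and report the stage at which it fails."""
    try:
        code = compile(source, "<snippet>", "exec")  # source -> bytecode
    except SyntaxError as err:
        return f"compile time: {type(err).__name__}"
    try:
        exec(code, {})                               # run the bytecode
    except NameError as err:
        return f"run time: {type(err).__name__}"
    return "ok"


syntax_typo = "total = (1 + 2\nresult = total\n"  # unclosed parenthesis
name_typo = "total = 3\nresult = totl + 1\n"      # misspelled variable name

results = {
    "syntax": check(syntax_typo),
    "name": check(name_typo),
}
print(results)
```

So both commenters have a point: the bytecode step does catch malformed syntax up front, but name typos survive until the offending line is reached.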

Abstraction is love, abstraction is life

Until eventually, it’s just turtles, all the way down.

Abstraction is wonderful: it lets you hide the fact that you’re a shit programmer. Why write code that actually solves the problem you were set when you can write a layer that does nothing useful (except bring in the pay checks) and get other people to actually write the real code for you :-)

I would love a machine that booted to Comal.

But really what I loved was when I had a SuperPat: one machine, and I could boot to APL, BASIC, FORTRAN, PASCAL, Cobol. I see no reason why more computers today are so limited in their basic boot options.

I want computers to be more like IBM mainframes where there is a hardware hypervisor so you can install multiple operating systems at once, and you can migrate OS images to new hardware without shutting them down.

I think there’s options like that that are exactly like IBM mainframes, you have to rent them… some cloud platforms from MS and the usual suspects.

It’s not “exactly” like an IBM mainframe if it lives in the cloud. Indeed your solution won’t work at all if your data has to stay on premises.

This is HaD. We can do that.

Please provide a link to your hardware hypervisor implementation

Erm, no, IBM mainframes had hardware support for their hypervisor, just like modern X86 machines have hardware support, but not a hardware hypervisor (my first commercial role was working on an IBM 3090 using VM/MVS, the mainframe team discovered that they could nest VM inside of VM etc).

There aren’t too many bare metal hypervisors around today, but you can get a free copy of VMware vSphere for private use.

We have software hypervisors that can do exactly what you’re asking for.

I am running IOTstack, which is a simplified wrapper of many projects using docker, I would say that is the closest thing to VMs in a box like the RPi.

SuperPat or SuperPet?

Technically most modern machines boot to the GUI shell. You can however configure them to boot to captive applications, like a BASIC interpreter or other language environment if you wish.

Wow someone else did this too? I’m launching bwBASIC at boot in Armbian on an OrangePi Zero, inside a Myntz tin, connected via composite video to a TV… Gotta get that whole ’80s feel. ;-) And just for fun I added an espeak and NeoPixel interface.

@Earl Colby Pottinger you could set up a virtual console with each of those languages.

@X I don’t know about the IBM way… but it sounds like KVM is awful close to what you want.

Now if you want that real 80’s goodness, add a Votrax SC01 chip to create synthetic speech…

No, no, no. For the BBC, you need the TMS5220 and a TMS6100 PHROM with Kenneth Kendall’s (UK TV news broadcaster) voice.

SP0256 for the win!

I think I still have one in a blister pack at home.

How is KVM “awful close to what I want”??? I can tell you right now that your mind-reading skills are poor at best.

It’s x86 only, which is obsolete in 2021, might as well be VAX, just not interesting any more. It needs an otherwise pointless copy of Linux running under it. It’s a waste of resources that accomplishes nothing and simply introduces more opportunities for security issues. You can’t upgrade this OS without bringing down all of the other operating systems on the computer. The Whole Point of hardware virtualization is that you can keep your applications running continuously with no downtime.

Quote from the RedHat web site:

“KVM virtualization on ARM systems is not supported by Red Hat, not intended for use in a production environment, and may not address known security vulnerabilities.”

Yeah again, please tell me how this is “awful close to what I want”…

How would you do a security upgrade on a hardware hypervisor?

I did preface that with “I don’t know about the IBM way…” In truth, like you, I’m very opinionated about my preferred computing environment. IBM has never produced anything I’ve wanted to use as a computer. So I’ve not bothered to follow their endeavors and would have never been able to afford the machine of which you speak.

Apologies, I confess I do not have “mind reading skills”. Just thought I’d throw it out there in the hopes it would be helpful. And obviously it was not. :-D

FWIW: I think you’ll have a hard time convincing the rest of the industry that x86 architecture is obsolete. As much as I’d love it if it were…. I’m sure it has at least a couple of more years in the running. :-D

And thank you for the laughs!

Not quite:

https://stackoverflow.com/questions/41893314/virtualization-architecture-on-mainframe-z-architecture

Virtualization on ARM.

https://www.researchgate.net/publication/331566686_Virtualization_on_TrustZone-Enabled_Microcontrollers_Voila

But does it boot as fast as a BASIC computer from the ’80s?

Unless it can have the ‘READY>’ prompt flashing by the time my finger leaves the power button, it is simply not good enough.

And I’ll also bet that you have to “shut down” – like every damn computer OS seems to have required since DOS was put into the ‘obsolete’ category.

1980’s microcomputers with their entire OS in ROM. “Boot time? What’s that?”

The old fogies here have apparently forgotten that their CRT displays took a long time to warm up, probably longer than it takes a modern machine to boot up.

80s monitors may have been CRT based, but the CRT was usually the only vacuum tube in the box. Warm up times were only about 5 seconds. Chances were good that by the time your hand traversed the space from the monitor power switch to the computer power switch, that warm up time was nearly half over. Most of the machines I recall using would have a visible, legible display when the prompt appeared, and would be settled in before you could finish typing the command to load your game of choice.

Now, if you were stuck using the old family television, that 550W space heater, gawd help you. Turn on the TV, go pour yourself a mixing bowl of cereal, you’d probably be able to see something by the time you got back.

“Warm up times were only about 5 seconds”

Speak for yourself, actual professionals at the time (like me) were using enormous Sony Trinitron monitors that took almost a minute for the colors to settle in properly.

Welcome to 2021! Ubuntu boots up on my computer in 5 seconds.

Gotta call BS here. You’re exaggerating in the extreme in both cases. Show us a video of your Ubuntu going from power on to desktop in five seconds, or it never happened. And 5 seconds may be an exaggeration, but it was well under ten seconds for TVs made in the 80s to show a picture.

A $2500 22″ Sony Trinitron monitor is NOT a TV, can’t display a TV image. It only has 3 BNC connectors on the back for RGB input. It was the State of the Art in video technology in the 1980s, accurate color for publishing professionals. It takes a Long time to warm up.

And Yes I just fired up an ubuntu virtual machine on my fancy new Mac and it’s 5 seconds from power up to login prompt.

Nope. Still calling BS. As I said, video or it didn’t happen. And I don’t mean splash screen covering up all of the messages. You’re obviously leaving out something important here.

I’d say about 3 seconds, don’t tell me you paid full retail for a monitor with a broken heater? LOL

Also, there’s a significant difference between a consumer TV/monitor, and a pro monitor for high res graphics. Fast start was a big selling point for consumer monitors.

Probably not many computer monitors, but consumer TVs in the 80’s used to run the heater during standby so when the user pressed ‘on’ it would come alive almost instantly.

I’m with BrightBlueJim: BS. As far as I’m concerned, pressing ‘go’ on a VM is not booting. I’d pretty confidently bet that when you *boot your hardware*, the BIOS takes considerably longer than 5 seconds before it even starts looking for an OS!

Pretty much a non-issue in reality. Most monitors from that era took around 5 seconds or so. Even the big 19″ CAD monitors came up fast.

OSs like WinNT and Linux took much longer. Remember, back in the early ’90s, 25 MHz 386s with 8 MB of RAM and an IDE HDD were the norm. Heck, you couldn’t even run a modern OS on them considering how bloated Windows and Linux have gotten.

Also an issue in some models that persisted into LCD monitors might have been sluggish mode switching. If your board put up the video bios string, then the bios splash screen, then the boot options on short timeout, then the bios summary, then started loading the OS, a sluggish switcher could be black all the way through to the OS loading.

CRT?

http://hpmuseum.net/display_item.php?hw=55

This was my first computer and the display was on *instantly* because it was individual LEDs. Rocky Mountain BASIC in ROM, with extended commands in plug-in ROM.

LATE 80s home computers had their OS in ROM, if you’re talking about the 16 bit machines. The only 8 bit machines that can be said to have an OS were booting from floppies. This was not the “80s experience”.

Commodore 64, TI-99/4A, various Atari systems. All ready to roll instantly with the flip of a switch. With the TI you could plug in the Editor/Assembler cartridge and then load games from disk that had been ripped from cartridges. The disk system was a lot faster than Commodore’s 1541. Pretty much everything but a cassette tape was faster than the 1541.

The TI benefited from having a much larger cartridge library than other home micros – then TI picked up the idiot ball and tried to lock out all 3rd party cartridges with the final revision of the computer shortly before dropping the axe.

I run Linux, uptime 306 days. Booting, what’s that?

“Required”??? Huh??? So you are apparently unaware that one can boot up Ubuntu from the installer disk, run programs from the desktop and just turn it off when you are done with no ill effects?

Yeah you could boot Linux from CDROM way back, IMS over 25 years ago when I first played with it. Pretty really useful. Way before Ubuntu existed.

That’s not the point though. With the systems that booted to BASIC you didn’t need to learn about the complexities of OSs like Linux to do anything. That would be a nice option to have on a Raspberry for noobs who want to learn to code.

You don’t need to learn the complexities of OSs like Linux to do anything now.

Based on this and other comments you’ve made, it seems like you have missed the whole point. Yes, of course you can use a computer without knowing anything about how it works. Just like you can get on an airliner and fly around the world without taking a single flight lesson. The point that you are missing is that for many of us, the 1980s “boot to BASIC” machines were simple enough that we could UNDERSTAND HOW THEY WORK. You can’t really understand what’s going on, when the user interface is 37 levels deep in abstraction.

Now, I’m an old person, and I lived through much of the evolution of computers, from mainframe computers that only clergy were allowed to share air with, to phones that could handle guidance for a moon landing while also showing a movie. The late 1970s and early 80s were a magic time, because for most people, this was the first time when computers became accessible to non-priests. And not only that, but because nobody was really sure what they were going to be used for, ALL of them came with a way for the owner to program them. We learned to program in BASIC, and when we figured out that there was a lot more power than that available, we learned to program in the assembler for our particular CPUs. But BASIC was the spark, the initial Aha! The what-it-meant to control a computer.

But then came the 16/32 bit CPUs, and the worst thing ever, as far as hobbyists could see. The dark ages. In order to make computers more reliable, and to make them capable of doing multiple tasks simultaneously, memory protection was introduced, and THE VIRTUAL MACHINE. Very quickly, programming computers was shifted back to the high priests, because every device that connected to a computer became a “shared resource” that an “operating system” had to manage. Yeah, BASIC was still there for a while, but emphatically missing were the “peek” and “poke” instructions that had given us absolute dominion over our computers, because these were inconsistent with the notion that only a Master Control Program could be trusted with the devices that we had bought with our hard-earned cash.

It got even worse, when simple interfaces like the printer port and the RS-232C serial port, artifacts from a more primitive time, were obliterated, replaced by a much harder to control interface called the “universal” serial bus.

But no dark age lasts forever, and after ONLY A GENERATION, we got our computers back, in the form of microcontrollers. Computers too small to cram an operating system into, that once again, a single human brain could understand. It took a while for everybody to notice – I was working with the 68HC11 and Microchip and Atmel micros in the late 90s – but two devices were introduced in the next decade that brought us a second enlightenment: first the Arduino, then the Raspberry Pi. The Arduino gave us the bare metal again, but held our hand a little. BASIC had been universally panned over the previous generation, so Arduino brought us C++ with enough libraries to keep us from ever having to actually learn C++, and then Raspberry Pi brought us a computer that worked almost like a “real” computer.

From what I’ve seen with young people, some of them are getting that “aha!” moment today, and it brings hope to my heart. And even if due to my training I’m still not sure that BASIC is really the best option, and not really that big a fan of Python, I can see that there is a HUGE benefit to lowering the barrier to entry. “Sometimes there’s so much beauty in the world, I feel like I can’t take it, like my heart’s going to cave in.”

So telling us that “we already have things to do all that” is really an insensitive thing to say to all of this. It smacks of elitism (“what, you don’t have a degree in software engineering?”) and suggests that there are forces that wish to impose on us a new dark age. Don’t be surprised if there is some push-back.

Long post Jim, and I’m with you every step… except for the last one.

Arduino, yes: easy to use, easy to learn, and bare-metal.

But although the Pi might also be easy to use, it is not a bare metal machine, and if you want to know how it works – you’d better brush up (and be prepared to spend some serious effort doing so). There have been some attempts to wrest it away from having to run Linux (MM-BASIC), but from my understanding, none got there.

Modern machines can hibernate or sleep, so the time between power and having a prompt can actually be less than that (a modern laptop can be up and running before you finish opening the lid).

In Basica we trust.

Tho the RS6000 & SP2 were fun

I learned BASIC in high school on a Research Machines 380Z back in 1978, and later when I could afford it, I built a ZX81 from a kit.

BBC BASIC has been around for 40 years, and has been running on ARM devices for close to 35 years, thanks to the lifetime involvement of R.T. Russell

http://www.bbcbasic.co.uk/index.html

If I buy a new washing machine, I don’t need a working knowledge of Linux, or to be familiar with GitHub, to load the latest software in order to wash my clothes.

A Pi 400 that boots direct to a baremetal BASIC would be ideal for newcomers to computing.

Once they grow tired of BASIC, they can move on.

It would also be a lot smaller and faster than emulating an 80×86 in python and running MS BASIC.

Well done to Popey (Alan Pope) for persevering with this, but as a computer professional, with some foresight from the Raspberry Pi Foundation, it could have been made a lot easier for him – and a lot easier for every kid that got a Pi 400 for Christmas.

I run a version of BBC BASIC on my Amstrad NC200 – again, a RT Russell build :-)

But I think it’s best that this isn’t the default, although an optimised image once the author has time to speed up the boot would be cool.

Also, some colour, you don’t need to replicate the drab BBC BASIC editor!

Yes and no. Friends don’t let friends program in BASIC. But using Cortex-A machines to do straight-to-the-editor is overkill. MicroPython or CircuitPython on a Cortex-M from a terminal is the holy grail today. Terminal? No, I don’t mean a terminal emulator running on a PC. I’m talking about a microcontroller-based thing that connects a display and keyboard on one side to a serial port on the other. Operating systems dribble. It takes three minutes for my PHONE to go from power up to doing its job, for crying out loud. How the oil-shale FRAKK did we get here?

” Friends don’t let friends program in BASIC.”…

BS BS BS…

A true friend would do his best to make programming look easy, to encourage his friend to try. He wouldn’t make it look like an impossible task (only an egotistical ahole would do that to make himself look superior). BASIC is great as an introduction. It is very forgiving when you make mistakes and doesn’t take the keyboard away from you and make you feel like a loser. Write a program in C, make a small mistake and chances are the program crashes without any indication of what went wrong… great for self esteem, great way to help you progress, great way to motivate you. But hey at least your friend thinks you must be so much more clever than him.

I never mentioned C. Like the Raspberry Pi Foundation, I advocate for Python as a gentler introductory language that you can use for real work, that you don’t have to un-learn bad habits from.

I am waiting for it to boot into TRSDos or DOSPlus…..😁

PR#6

The only way this could be any better is by adding a rubbery chiclet keyboard with a key for each BASIC command.

RISC OS Pico already does that.

It should boot to Forth, or Lisp, or Scheme. Tempting prospect, really.

Why is everyone focusing on “boot speed”?

The real strength of BASIC in ROM is the bullet proof environment and ease of use that it gives you. Ok so you write a program on a machine that has BASIC in ROM and your program tries to write past the end of an array. The interpreter stops and flags the error and then lets you investigate at the source code level. What happens if you do that in C on a machine that doesn’t have a debugger ready and waiting to jump in, or if you don’t have the experience to use such a tool? What happens with a BASIC program when it seems to just go out to lunch? Do you reach for the break key, have a look around, maybe print a few variables and do some single stepping? What sort of support do you need on a micro controller to be able to do that with a program written in C?
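The same behaviour is easy to show in any bounds-checked interpreter. The sketch below uses Python as a stand-in for ROM BASIC (an assumption made for illustration, with hypothetical names): a loop writes one element past the end of a five-element list, the interpreter stops with an error that names the problem, and the data written so far is still there to inspect.

```python
def fill(values, count):
    """Write squares into the first `count` slots of `values`."""
    for i in range(count):
        values[i] = i * i   # raises IndexError once i runs past the end
    return values


scores = [0] * 5            # room for five entries only
try:
    fill(scores, 6)         # deliberately one write too many
    outcome = "no error"
except IndexError as err:
    outcome = f"stopped: {err}"

print(outcome)              # the interpreter reports what went wrong
print(scores)               # the five valid writes are intact and inspectable
```

In C, the equivalent out-of-bounds write is undefined behaviour, and without a debugger attached there is often no indication of where things went wrong.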

How many novice BASIC programmers have tried a bit of code then gradually added to it watching the results as they go, learning by doing and gaining a firm understanding? How many of them have progressed on to assembler in search of greater speed because they’ve been encouraged by their efforts? How many of these people then found it easy to move to C because of their understanding of the underlying assembly code?

Anybody that says “It is practically impossible to teach good programming to students that have had a prior exposure to BASIC: as potential programmers they are mentally mutilated beyond hope of regeneration.” is a ***SHIT*** teacher

You are right, boot *speed* is not that important, except if you are doing something real.

I started out in the mid-80s just like you say – writing a simple BASIC program and expanding on it, getting into assembly, finally going to uni to learn other languages.

Microcomputers and microcontrollers have diverged considerably since they were pretty much born together in the late 70s. Microcomputers had an OS (started with BASIC in ROM) and did “non-real” things like ray-tracing, word processing and games. Microcontrollers did “real” things, like CNC machines, assembly lines and building HVAC.

But now the line is blurring, and microcomputers with bloated OSs are now fast enough even with all their bloat to be given ‘real’ tasks. And that is why we are now lumbered with TVs that take longer to boot than an old-school TV with a vacuum tube.

Some readers may recall in the late ’90s, manufacturers of VCRs were in an all-out war to make their machines the fastest to go from off to playing a tape when you put it in. Now to do the equivalent you can make a coffee in the interim!

I focus on boot speed because most of the stuff that an operating system is doing when it is starting up has nothing to do with what I want to do with that computer. Would you accept an automobile that wouldn’t let you put it in gear for 30 seconds after you turned it on? Most people wouldn’t. And this is something that has gotten worse over time, not better. To the point where Windows 10’s default is to not shut down at all, but just hibernate when you tell it to shut down. Which is a problem if you’re dual-booting your machine, because it means that the filesystems haven’t been unmounted when you reboot to another OS. If you like waiting for lengthy boot sequences, knock yourself out, staring at hundreds of lines of initializations scrolling faster than you can read. Doesn’t do a thing for me.

As for the “Friends don’t let friends program in BASIC”, that was a joke. I started out in BASIC myself. Learning BASIC is no more damaging than learning how to program a programmable calculator. There are languages that call themselves “BASIC” that encourage writing modular code, and I have no problem with these. Please calm down.

Unfortunately most people seem to be going for cars that ‘won’t let them do’ these days – cars are becoming dumbed down like everything else in this nanny state… won’t start without foot on brake, handbrake won’t apply unless in park or foot on brake (both sometimes), won’t change out of park without foot on brake again. Next thing I won’t be able to accelerate unless my foot is on the damn brake!

And while I’m whinging, I get it that you might not be able to just pull the plug on your computer because your filesystem might get trashed, but filesystems that handle just that use case have been around for a *long* time. It’s just that M$ didn’t invent them, and because they are a dominant player everyone else has to use their crap – and pay M$ licence fees for the privilege!!

“I get it that you might not be able to just pull the plug on your computer because your filesystem might get trashed, but filesystems that handle just that use case have been around for a *long* time”…

Repairing the file system is one thing but this does not automatically fix the data in it. Consider the situation where several tasks are writing data to various files. The OS buffers the data and writes it out at some future time. Maybe before the task that tried to write it is allowed to continue, maybe several seconds (or tens of seconds) after it has been allowed to continue. This might be because the OS is organising the disc writes based on where the physical read/write heads are actually positioned (this helps prevent unnecessary head movement and improves performance) or it might be because some very high priority task needs to access the disc in preference to the task that has just tried to do a write (maybe doing a virtual memory swap). Then there is the lag between the OS writing the data to the drive and the drive actually writing it to the surface of a disc (which is further complicated when using shingled drives). So although you might be able to easily fix the meta data on the drive, the actual data is another story. Shutting down the computer is by far the best way to prevent actual data loss.
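For a program that cares about a particular write, the usual mitigation is to push the data through each buffering layer explicitly. The following Python sketch (with a hypothetical `durable_write` helper and file name) flushes the language-level buffer and then calls `os.fsync` to ask the operating system to write its cached copy to the device. As the comment above notes, the drive's own cache can still sit between that request and the physical medium, so this narrows the window rather than closing it.

```python
import os
import tempfile


def durable_write(path: str, data: bytes) -> None:
    """Write data and ask the OS to push it to the storage device."""
    with open(path, "wb") as f:
        f.write(data)         # lands in Python's user-space buffer
        f.flush()             # hand the bytes to the OS page cache
        os.fsync(f.fileno())  # request that the OS write them to the device


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "journal.bin")  # hypothetical file name
    durable_write(target, b"important record\n")
    with open(target, "rb") as f:
        readback = f.read()

print(readback)
```

Without the `flush` and `fsync` calls the write would still usually succeed, but the data could sit in memory for seconds, which is exactly the window in which pulling the plug loses it.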

BTW ever wondered why sometimes WinBlows writes data to a drive faster when it is run in a VM rather than a physical PC? The answer is because sometimes WinBlows is synchronising its write to the physical drive so that the data is guaranteed to be on the drive before it continues, but when running on the VM the hypervisor is caching the writes in memory while telling WinBlows that the write has completed.

It seems like you’re arguing both sides of the issue! (No worries – I often do that.)

That’s the thing with operating systems, as they evolve: a) they require time to initialize themselves in order to be prepared to do literally anything, and b) are often doing things you don’t know about. Which is why many people still like to have a separate camera and a separate calculator, even though their phones *can* do the functions of those devices. So essentially, we’re talking about a standalone “programming appliance” that in some respects is better than using your do-everything computing devices.

“It seems like you’re arguing both sides of the issue!”…

It was not my intention to do so. I was trying to stop a misconception from spreading further. If people rely on file system repair instead of shutting down their systems properly then sooner or later they will have huge trouble.

Yes I understand your point regarding a standalone “programming appliance” but even a PC that needs to boot from a disc could easily do this. One of the biggest problems with a modern OS is that when it boots up it needs to look around to see if any of its hardware has changed. Think about all the components inside a PC, motherboard, CPU, RAM, graphics card, NIC, drive controller – even the BIOS! Then there are things outside like the network, servers, monitor, scanner, printer, camera. Devices inside and outside need to be identified and initialised, responses need to be received, conflicts need to be resolved, bad hardware needs to be isolated and worked around. Get rid of this flexibility and you will have much faster boot times. Then you have a*hole software companies that insist on “phoning home” to check for updates, licences and even just report back statistics so that they can target you with advertising. Again get rid of this and your boot time will decrease. The point ***I’m*** trying to make is that YOUR “programming appliance” doesn’t need to be a bare metal micro booting off ROMs to fulfil your high speed boot requirements / development cycle. You could boot from FreeDOS on an SSD if you wanted to which is extremely fast or from FreeBSD (again on an SSD) which is a little slower but provides a bullet proof environment with decent compilers, debuggers and network support. I’ve just timed a low end PC I have here (for you – I don’t normally need to reboot it). It uses a J3455 CPU running at 1.5GHz with 8GB of RAM and an SSD. It takes about 17 seconds to boot up AND if a program that is under development crashes it doesn’t need to be rebooted.

Correct me if I’m wrong but are you using WinBlows and Linux and using these as your yardsticks? Get yourself a cheap dedicated PC to run FreeBSD and you’ll be happy that you did :-)

Yes, I understand that there are reasons for every microsecond of that boot process, BECAUSE it’s a do-everything machine. But I don’t need ANY of that. You know that PCs have boot code in ROM because without that, the computer doesn’t even know there’s a disk drive. And in the earliest floppy-based systems, that was enough, because you could put enough code in ROM to recognize the disk drive and read its first sector, and from that you could understand the filesystem, and so on. THIS is the point I’m trying to stop at. You are suggesting that I use a system that goes a few steps further, but I say, WHY? This was enough for me in 1989, and it’s actually still enough for me. Everything is bigger, so in a microcontroller I can have enough program in place to run both an interpreter AND a basic SD based “operating system”. I put that in quotes because it wouldn’t qualify as an OS today, but I question that all of the progress made since that point is even useful to me TODAY.

Okay, so a crashing program won’t require rebooting. So with my primitive (proposed) system, I turn it off, turn it back on, and I’m back in my editor in three seconds. So all those layers of abstraction you insist on saved me a whole three seconds. If that, since if my program hangs, I either have to wait for something to time out, or kill the process, or something else. And if for some reason my program ate up all available memory, that something else is going to take almost as long as rebooting, and I really, really, REALLY won’t want to hold down the power button to turn it off, even though this is STILL sometimes necessary, for the reasons you describe with file system “repairs”.

You’re saying that it’s worth those extra layers, and I’m saying, no, it’s not. But I guess we’ve both made our points by now.