These days, nearly everyone communicates through some kind of keyboard, whether they are texting, emailing, or posting on various internet discussion forums. Talking over the phone is almost outmoded at this point. But only a few decades ago, the telephone was king of real-time communication. It was and still is a great invention, but unfortunately the technology left the hearing and speaking-impaired communities on an island of silence.

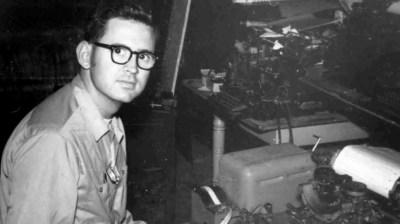

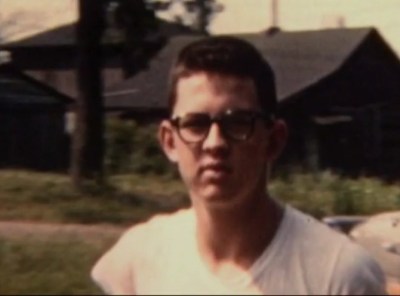

Engineer and professor Paul Taylor was born deaf in 1939, long before cochlear implants or laws requiring testing and early identification of hearing impairment in infants. When he was three, his mother sent him by train to St. Louis to live at a boarding school called the Central Institute for the Deaf (CID).

Here, he was outfitted with a primitive hearing aid and learned to read lips, speak, and use American Sign Language. At the time, this was the standard plan for deaf and hearing-impaired children: attend such a school for a decade or so and graduate with the social and academic tools they needed to succeed in public high schools and universities.

After college, Paul became an engineer and in his free time, a champion for the deaf community. He was a pioneer of Telecommunications Devices for the Deaf, better known as TDD or TTY equipment in the US. Later in life, he helped write legislation that became part of the 1990 Americans with Disabilities Act.

Paul was diagnosed with Alzheimer’s in 2017 and died in January of 2021 at the age of 81. He always believed that the more access a deaf person had to technology, the better their life would be, and spent much of his life trying to use technology to improve the deaf experience.

Learning to Speak Without Hearing

Soon after three-year-old Paul started school at CID, he met a little girl named Sally Hewlett who would one day become his wife. Along with their classmates, they spent the next several years learning to speak by holding their hands to the teacher’s face to feel the vibrations of speech, then touching their own faces while mimicking the movement and sound.

Paul’s father died while he was still in school. His mother moved to St. Louis to be with her son so he could still attend, but live at home. She took the opportunity to study at CID and she became an accredited teacher for deaf children. When it was time for high school, Paul and his mother moved to Houston, where she started a school for the deaf, and he enrolled in public school for the first time. Paul had no interpreter, no helper of any kind.

In a 2007 documentary made by the Taylors’ youngest daughter, Paul tells a story about an experience he had in high school. There was a nice looking girl in his class, and he wanted to know more about her, so he asked a different girl who she was. When that girl offered to give Paul the first girl’s telephone number, he stopped in his tracks, realizing at that moment how different he was because he couldn’t use the phone like all the other kids. The experience stuck with him and helped drive his life’s work.

Phones for All

After high school, Paul completed his bachelor’s degree in chemical engineering at the Georgia Institute of Technology in 1962 and moved back to St. Louis to earn a master’s degree in operational research at Washington University. In the meantime, Sally, who had gone to high school in St. Louis, earned her bachelor’s degree in home economics and returned to CID to teach physical education, religion, and home economics. When Paul learned that Sally was living in town, he got in touch with her immediately. They started dating and were engaged six months later.

Paul took Sally to the 1964 World’s Fair in Queens, New York for their first anniversary. They marveled at AT&T’s Picturephone and wished the future would arrive sooner so they could easily talk from anywhere by reading each other’s lips. By day, Paul was an engineer at McDonnell Douglas and later, Monsanto. At home he was a different kind of engineer, devising ways to help raise their three hearing children. After their first child was born, Paul built a system that would blink the lights in the house to let them know the baby was crying.

He also did whatever he could to help the deaf community by volunteering his time. The phone problem still bothered him greatly. When he noticed an old Western Union teletype machine from WWII just sitting around collecting dust, he got the idea to turn it into a new kind of communication tool.

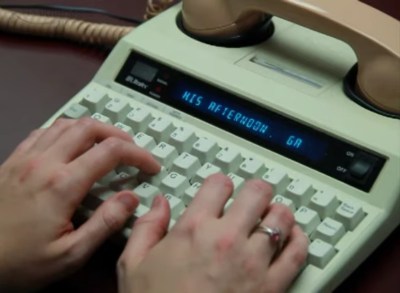

Around the same time, a deaf physicist named Robert Weitbrecht was developing an acoustic coupler that would transmit teletype signals over consumer phone lines. Paul got Weitbrecht to send him one and created one of the first telecommunication devices for the deaf (TDD). With one of these devices on each end of the phone line, anything typed on one would be printed out on the other. Paul worked with Western Union to get these old teletypewriters into the hands of hearing and speaking-impaired people, and convinced AT&T to create a relay service to use them as well.

Paul started a non-profit organization to distribute these early TDDs to other deaf St. Louisans. He asked a local telephone wake-up call service to help out, and built one of the first telephone relay systems in the process. Although both parties needed a TDD to be able to communicate, this was a big step in the right direction.

Paul also did a lot of work to keep the machines humming for the people who depended on them. Teletypewriter manuals were helpful, but were awfully dense reading material for the layman. Paul organized a week-long workshop to create a picture-rich manual called Teletypewriters Made Easy to help people repair and maintain three common models of teletypewriter. Paul discusses his personal history with TDD development in the video below.

A Loud Voice for the Deaf Community

In 1975, Paul was offered a position at the National Technical Institute for the Deaf at Rochester Institute of Technology, so the Taylor family moved to upstate New York. Paul became a computer technology professor and chairman of the Engineering Support team. He stayed there for the next 30 years before retiring.

During this time, he advocated for a national, operator-assisted telephone relay service through which deaf and hearing-impaired people could communicate with anyone, whether or not the other person had a TDD.

The idea was that the deaf person would use a TTY to call an operator, who would get the other person on the line and relay messages back and forth between the two parties by typing out what the voice caller said and reading aloud what the TDD user typed in response. Paul took a two-year leave of absence from teaching and worked directly with the FCC to write regulations that became part of the guidelines prescribed in the 1990 Americans with Disabilities Act (ADA).

Learning How to Hear

At the age of 65, Paul and Sally decided to get cochlear implants after a lifetime of silence. Their youngest daughter Irene made a documentary about their experience called Hear and Now, which is embedded below. It’s an interesting firsthand look into the process, which is not the instant cure that the internet may have led you to believe. The implant can’t be activated until the swelling from surgery goes down, which takes about a month. And it can take years for the brain to get used to the new sensory information and begin to distinguish relevant sounds from background noise.

Although TTY/TDDs are falling out of use thanks to the videophone-enabled text messaging devices in most people’s pockets, their influence on communication lives on in shorthand now used in our everyday messages — OIC, PLS, and THX are older than you might think.

Thanks for the tip, [Zoobab].

I have severe hearing loss and was very dependent on a loaner TDD (thanks to a non-profit) for talking to people with difficult voices in the 1990s. Helped me get through early job searches after finishing school. Some people I called, including friends, lost their patience with the time it takes to use a relay service where you type to live operators who communicate with those who hear. Thankfully, email and internet messenger became very popular as back-ups around that time. Now I have a cochlear implant and almost always use voice on the phone. Thank you for a life-saving invention, Paul!

Pretty damn cool. I knew about TDD, but not the background. Makes you thankful for iPhones and The Internet (not to mention cochlear implants).

You’d think Alexander Graham Bell would have worked on something like this, considering he invented the telephone and worked with the deaf all his life.

“At the age of 65, Paul and Sally decided to get cochlear implants after a lifetime of silence.”

I thought that deaf adults couldn’t benefit from cochlear implants as their brains hadn’t learned to “hear” during their youth? Maybe that only happens in totally deaf people and not those who are just profoundly hard of hearing?

The cochlear implant experience of Paul and Sally is the main focus of the documentary that Kristina linked to. Worth a watch.

Learning to hear after a lifetime of deafness is possible. There are several causes of deafness, and as long as the cochlea and auditory nerve are not damaged, the nerve can be stimulated or “woken up” when an implant triggers the nerve cells in the cochlea. It’s true that deaf people hearing for the first time will often say “What’s that sound?” when they hear a bird chirp. It’s quite a miracle for them.

Next time you have to deal with /dev/ttyUSB0, think about this guy!

Wait, is that the same thing?

No. “TTY” is a generic abbreviation for “TeleTYpe”, which was the manufacturer of both 5-level (Baudot) and 8-level (ASCII) machines. Baudot was the earlier code, used (primarily) by Western Union (for telegrams and TELEX) and the military. ASCII came in the 60s and was used for the Bell System’s Teletypewriter Exchange (TWX) service…and got picked up by DEC as a cheap user terminal for their PDP series of computers.

As the older Baudot Telex machines were replaced by newer ASCII TWX machines in industry, the Baudot machines became available cheaply. Ham radio and TDD were the primary “markets” for these.

There’s a LOT of history behind Teletypes…google “Teletype History”.

Teletypes were built in Chicago by a subsidiary of Western Electric. They were always a product of The Bell System, though they weren’t advertised as such. Their development is related to that of the paper tape “stock tickers” you see in really old movies, and came about because skilled telegraphers were expensive and scarce. A totally automatic replacement that worked over the same lines (thus, “current loop” interface) as telegraph keys and sounders was cheaper, faster and more confidential.

Early UNIX *might* have had the ability to support the 5-level Baudot code. I’m not definite about this. It’s not only a 5 bits vs 8 bits thing, there are different bit rates involved and Baudot has two modes, “LTRS” and “FIGS” and a hardware relay in the machine that switches between the two, triggered by a pair of control characters.

On the computer side, there are hardware issues. The UARTs that convert serial to parallel data used to support character lengths from 5 to 8 bits, but the newer UARTs don’t, so it’s difficult (but not impossible) to send Baudot data from a PC. It can be done, but it requires some fiddling (and possibly an Arduino): https://heepy.net/index.php/USB-teletype

Antron – Check this out if you haven’t seen it yet, ‘curious marc’ on youtube connects an old baudot teletype from 1930 to linux: https://www.youtube.com/watch?v=2XLZ4Z8LpEE

The sound alone is worth the watch.

My memory says most UARTs were 8 bit. Or maybe standalone UARTs could be 5 bit. I do remember that the MOS Technology 6551 could be set to 5 bits, and could be used with an external baud rate generator, perfect for Baudot. That seemed unique in the age of UARTs that ran on the bus.

Of course, one could do a software UART, maybe at 45 baud all would be fine. I used a Radio Shack color computer for ten years, it had a software UART for the serial port, which I used with the printer. That would slow the multitasking OS-9 down to a crawl.
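As a rough idea of what a software UART has to produce for a 5-bit machine, here is a small Python sketch that lays out one character frame as line levels and durations. The specifics are assumptions rather than anything from the comment above: 45.45 baud and 1.5 stop bits are the figures commonly quoted for US Baudot teletypes, 1 stands for mark (idle) and 0 for space, and the function names are made up for illustration. Actually toggling a pin with this timing is left out.

```python
# Sketch: build one asynchronous 5-bit frame as (level, bit_times) pairs.
# Assumed convention: 1 = mark (idle line), 0 = space.


def frame_bits(code, stop_bits=1.5):
    """Return the start bit, five data bits (LSB first) and the stop element."""
    if not 0 <= code < 32:
        raise ValueError("code must fit in 5 bits")
    frame = [(0, 1.0)]                       # start bit is a space
    for i in range(5):
        frame.append(((code >> i) & 1, 1.0))  # data bits, least significant first
    frame.append((1, stop_bits))             # stop element is a mark
    return frame


def frame_duration_ms(baud=45.45, stop_bits=1.5):
    """Duration of one complete character frame in milliseconds."""
    bit_times = sum(duration for _, duration in frame_bits(0, stop_bits))
    return 1000.0 * bit_times / baud


print(frame_bits(0b00001))
print(round(frame_duration_ms(), 1), "ms per character")
```

With these assumed settings a frame is 7.5 bit times long, which works out to roughly six characters per second, slow enough that bit-banging the line in software is plausible.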

When TinyBASIC was a thing, some people modified it to use with a Baudot printer. But it lacked a bunch of characters that an ASCII printer had.

I have a relative who is deaf and utilizes the TDD via relay to communicate with me. She was fascinated with me learning Morse code but wondered how I could talk to someone with just “clicking a key.” I built her a code light and we practiced Morse code together with light and buzzer on both ends. Now, she has her license (via handicap waiver) and is a General Class operator as well. We talk via RTTY on the weekends and meet up when we can in the summer time. It’s what we take for granted that makes us dependent on other means. I met Professor Taylor in Buffalo many years ago, and totally enjoyed the quick 5 minutes I got to “talk” to him. He smiled when I signed “Thank You,” but said, “Keep talking, your dialect is hard to see.” Funny man, and great man who brought a better way for those with disabilities to communicate.

Recently I read an article about how much Polish Sign Language is different from “regular” Polish. I knew that sign language grammar is simplified, but did not realize that for many deaf people Polish is a secondary language and that even some adults have problems — they tend to use simplified grammar in writing.

The article doesn’t mention this at all, so does it ever happen with American Sign Language? English grammar is much simpler than Slavic languages so common sense suggests it’s much easier for a deaf American to use English in writing properly, but could any native speaker/signer shed some light on this?

Like spoken languages, sign language (SL) has its variants by region and country. There are nuances in SL that can change the meaning of a sentence, much as the way we speak can. Researchers have studied sign language and found that it is rich in its own way and deaf people come up with variations unique to their regions and even families. SL does not require every word to be spelled out, so to a non-SL speaker, it can look like a series of sentences missing prepositions and articles, but signers get it. This is true of SL in every country. As for writing it down, it really is dependent on the signer’s education. I have American English signers as friends and I’ve noticed how their writing can be a bit different, but it really depends on how they were educated and what they do for a living. There are signers who write impeccable English and there are those who don’t, just like with English speakers.

Thanks!

Seeing Voices: A Journey Into the World of the Deaf is a 1989 book by neurologist Oliver Sacks. The book covers a variety of topics in Deaf studies, including sign language, the neurology of deafness, the history of the treatment of Deaf Americans, and linguistic and social challenges facing the Deaf community.

Not sure about others, but American Sign Language was developed in France and generally uses French word order. So instead of subject-verb-object, it’s subject-object-verb. My wife interprets in church and noticed that Latin word order is the same as ASL. Same with leaving out the articles.

My mother passed away recently aged 102. She left behind 2 complete Cochlear Americas Nucleus CP910 systems. I think they are state-of-the-art for those who already have a 22 channel implant. I have no use for them, and they are just gathering dust. As they are “medical equipment”, I cannot sell them but I think I can pass them on to anyone who can make use of them. I offer them free of charge with no warranties to anyone with a legitimate use, one system to a customer. Contact me – clarelove@comcast.net

Do those systems include the electrode that was either removed from your mother or never installed? Your best bet is to reach out to Cochlear Americas https://www.cochlear.com/us/en/connect/contact-us to talk about your options. Perhaps they have a way to get the implants to those unable to afford them or have no insurance. The electrode has to be matched to the processor and if your mother still has her electrodes inside her, Cochlear Americas will have to provide new matching electrodes. My condolences on the loss of your mother and hope she benefited from the implants as long as she had them.

My brother-in-law was born deaf, and cannot speak. I’ve thought about the ways in which we learn to form speech…we hear our parents, and we hear our own sounds, and through repetition and parroting, eventually we get it right. Is it this feedback that trains our vocal cords? I think it is…but a deaf person cannot use sound feedback in order to train his or her vocal cords. I was motivated to write these thoughts when I read at the beginning of this article that Paul Taylor used to feel the vibration on his teacher’s face to feel the words…the vibration of the spoken words. But what about other stimuli…visual, for instance? Consider a laptop computer running a program which outputs not only the vibrations from programmed words and turns the sounds from the user into vibrations they can feel, but also creates a visual pattern in response to a particular sound such as a letter of the alphabet, or words, to help children to speak who can’t hear. I envision that children’s ‘books’ for the speech and hearing impaired, programmed onto a laptop designed for this purpose, might help to improve lives. I hope someone smarter than I will find merit in this idea and create the tools to make this work.

Peace.

Neat, will read into some more. One of those topics where I’m amazed more work hasn’t gone into less morbid devices and instead in wireless designs. More reason to have an extremely thorough impact and risk assessment study of the wireless effects to narrow down optimal electromagnetic spectrum ranges for use.

Then again, guessing the nefarious are the root cause obstructing R&D or implementations since they don’t want their wireless concealed assault weapons exposed that in a more human, less Pan Troglodyte trait, World the wireless devices capabilities can be used as remote life support and medical devices for healthcare.