Making unobtainium graphics cards even more unobtainable, [VIK-on] has swapped out the RAM chips on an Nvidia RTX 3070. This makes it the only 3070 in the world to work with 16 GB.

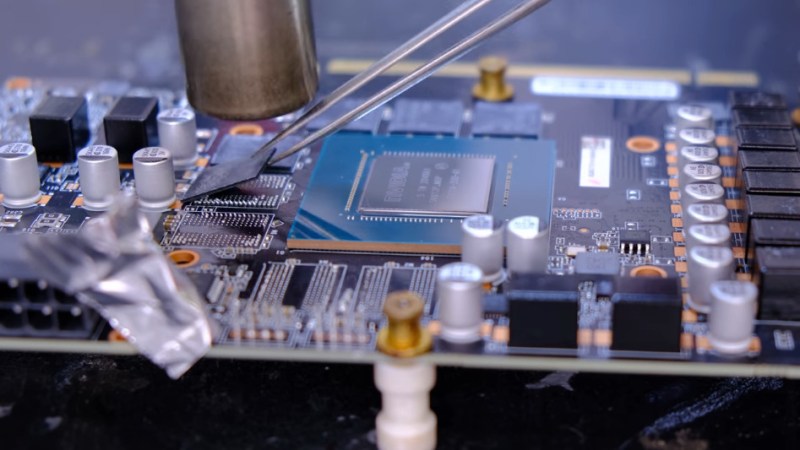

If this sounds familiar, it’s because he tried the same trick with the RTX 2070 back in January but couldn’t get it working. When he first published the video showing the process of desoldering the 3070’s eight Hynix 1 GB memory chips and replacing them with eight Samsung 2 GB chips, he had hit the same wall: the card would boot and detect the increased RAM, but was unstable and would eventually crash. Helpful hints from his viewers led him to use an EVGA configuration GUI to lock the operating frequency, which fixed the problem. Further troubleshooting (in a YouTube comment written in Russian, with a machine translation) showed that the “max performance mode” setting in the Nvidia tool also works to stabilize the card.

The new memory chips don’t self-report their specs to the configuration tool. Instead, a set of three resistors is used to electronically identify which hardware is present. The problem was that [VIK-on] had no idea which resistors did what, or what the different configurations accomplished. It sounds like you can just start changing zero ohm resistors around to see the effect in the GUI, as they configure both the brand of memory and the size available. The fact that this board is not currently sold with a 16 GB option, yet the configuration tool has settings for it when the resistors are correctly configured, is kismet.
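
Trial and error is feasible here because three straps allow only a handful of combinations. The sketch below enumerates them under a loud assumption: each of the three positions is treated as simply populated (with a zero ohm resistor) or empty, giving 2^3 = 8 IDs. The real strap encoding on the 3070 is not documented here, so `HYPOTHETICAL_TABLE` and its entries are illustrative placeholders, not Nvidia’s actual mapping.

```python
from itertools import product

# HYPOTHETICAL lookup: strap ID -> (memory vendor, GB per chip).
# Placeholder entries for illustration only, NOT Nvidia's real encoding.
HYPOTHETICAL_TABLE = {
    0b000: ("Hynix", 1),
    0b011: ("Samsung", 2),
}

def strap_id(r1: bool, r2: bool, r3: bool) -> int:
    """Pack three populated/empty resistor positions into a 3-bit ID (assumed binary straps)."""
    return (r1 << 2) | (r2 << 1) | int(r3)

def enumerate_straps():
    """Yield every strap combination with its (possibly unknown) meaning."""
    for bits in product((False, True), repeat=3):
        sid = strap_id(*bits)
        yield bits, sid, HYPOTHETICAL_TABLE.get(sid, "unknown")

for bits, sid, meaning in enumerate_straps():
    print(bits, sid, meaning)
```

Eight combinations is few enough to try one at a time and watch what the configuration tool reports, which is essentially the approach described above.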

So did it make a huge difference? That’s difficult to say. He runs some benchmarks in the video, and both Unigine 2 Superposition and 3DMark Time Spy results are shown. However, we didn’t see any tests run prior to the chip swap, and a before-and-after comparison would have been the key to characterizing the true impact of the hack. That said, reworking these chips with a handheld hot air station and working your way through the resistor configuration is darn impressive, no matter what the performance bump ends up being.

I’d hate to go through all that, then realize “oops, I forgot to characterize it beforehand”.

It might be worth changing the resistor configuration back to the original, to determine the performance difference when half the RAM is disabled.
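
Whether the baseline comes from the original resistor configuration or from a stock card at matched clocks, the comparison works the same way: several runs per configuration, then the change in mean score weighed against run-to-run noise. A minimal sketch; the scores are placeholders in arbitrary units, not results from the video.

```python
from statistics import mean, stdev

def percent_change(before: list[float], after: list[float]) -> float:
    """Change in mean score, in percent (positive means the second set scored higher)."""
    return (mean(after) - mean(before)) / mean(before) * 100.0

def run_to_run_noise(scores: list[float]) -> float:
    """Coefficient of variation in percent; deltas smaller than this are likely noise."""
    return stdev(scores) / mean(scores) * 100.0

# Placeholder scores in arbitrary units, NOT results from the video.
half_ram = [100.0, 101.0, 99.0]
full_ram = [100.5, 100.0, 101.5]
print(f"change: {percent_change(half_ram, full_ram):+.2f}%")
print(f"noise:  {run_to_run_noise(half_ram):.2f}%")
```

If the change is within the noise figure, the extra RAM isn’t making a measurable difference in that benchmark.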

It adds so much RAM that I wouldn’t be surprised if most games don’t see a difference, although it may be useful for cryptocurrency mining, especially once the mining dataset (the DAG) grows to the point that 8 GB isn’t enough RAM.

It reminds me of when I bought a toilet seat and its bolts didn’t match the holes in the bowl. I tried drilling the bowl with an SDS drill at the lowest possible RPM, but it still shattered to bits. In the end I had to buy a new loo and a new seat. The seat that didn’t match was hanged on the wall and used as a target holder for indoor airgun shooting at home.

Sorry to be “that guy”, but it’s “hung” in this case. “Hanged” is indeed the past tense for when a person has been put to death by hanging, but for inanimate objects you should use “hung”.

You’re giving grammar points to a guy who uses toilet seats as decoration and regularly shoots airguns indoors? heh…. You should tell him which fork is the salad fork next ;)

LOL You made my day ! :-)

Over the years, I’ve found it very, very rarely paid to get the highest-RAM version of a card for game performance. It usually works out that by the time games NEED that much, the actual GPU is 2 or 3 generations behind, and it’s not lack of RAM making things slow. Even many years later, when you run retro games from the card’s generation, the 8MB Rage doesn’t feel any faster than the 4MB Rage, the 128MB GF3 doesn’t feel any faster than the 64MB GF3, and the 2GB 9600 GT doesn’t feel any faster than the 1GB 9600 GT. There are some exceptions: when a new type of RAM was particularly expensive, some cards shipped with “halved” RAM versus what was intended to be standard in order to meet price points, and some of those tend to be on the crippled side. Crypto mining is a different kettle of worms/can of fish, though.

That was back then. Nowadays games ship with 4K texture packs, and those require a ton of memory. More RAM on the GPU doesn’t make it faster; it just lets you use bigger, nicer-looking texture packs.
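
For a sense of scale, a texture’s VRAM footprint is width × height × bytes per texel, and a full mipmap chain adds roughly a third on top. The sketch below assumes a square power-of-two texture and uses the standard sizes for uncompressed RGBA8/RGBA16F and the BC1/BC7 block-compressed formats that GPUs sample directly; real engines may add padding, stream mip levels, or use other formats.

```python
# (block edge in texels, bytes per block) for a few common formats.
FORMATS = {
    "RGBA8": (1, 4),     # uncompressed, 8 bits per channel
    "RGBA16F": (1, 8),   # uncompressed, 16 bits per channel
    "BC7": (4, 16),      # block compressed: 16 bytes per 4x4 block
    "BC1": (4, 8),       # block compressed: 8 bytes per 4x4 block
}

def texture_bytes(size: int, fmt: str, mipmaps: bool = True) -> int:
    """VRAM for a square power-of-two texture, optionally with a full mip chain."""
    block, block_bytes = FORMATS[fmt]
    total = 0
    side = size
    while True:
        # Ceiling division: tiny mip levels still occupy a whole block.
        blocks_per_side = -(-side // block)
        total += blocks_per_side * blocks_per_side * block_bytes
        if not mipmaps or side == 1:
            break
        side //= 2
    return total

MIB = 2**20
for fmt in FORMATS:
    print(f"4K {fmt}: {texture_bytes(4096, fmt) / MIB:.1f} MiB with mips, "
          f"{texture_bytes(4096, fmt, mipmaps=False) / MIB:.1f} MiB without")
```

By this arithmetic a single uncompressed 4K RGBA8 texture is 64 MiB (about 85 MiB with mips), while BC7 brings it down to 16 MiB, so both resolution and compression choice show up directly in VRAM usage.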

Ehhh, that’s not true at all lol. RE2 Remake and RE3 Remake are just two examples I can think of that used all the VRAM on my 2080 Ti FTW3 Ultra, and that was when it was essentially new.

So there are absolutely games that will take advantage of more VRAM.

The issue with adding VRAM is how wide the memory bus is. You might have more RAM available, but if the bus can’t transfer enough data, then it’s essentially useless in gaming and minimally beneficial in productivity.
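
Bus width and capacity are separate knobs. Peak bandwidth is bus width times per-pin data rate, so with the RTX 3070’s 256-bit bus (eight 32-bit GDDR6 chips) at 14 Gbps, doubling the capacity of each chip doubles VRAM but leaves bandwidth where it was. A sketch, assuming the swapped-in chips run at the stock data rate:

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

CHIPS = 8
CHIP_IO_BITS = 32       # each GDDR6 package has a 32-bit interface
DATA_RATE_GBPS = 14.0   # RTX 3070 stock memory speed; assumed unchanged after the swap

def card(gb_per_chip: int) -> dict:
    return {
        "capacity_gb": CHIPS * gb_per_chip,
        "bandwidth_gb_s": bandwidth_gb_s(CHIPS * CHIP_IO_BITS, DATA_RATE_GBPS),
    }

print(card(1))  # {'capacity_gb': 8, 'bandwidth_gb_s': 448.0}
print(card(2))  # {'capacity_gb': 16, 'bandwidth_gb_s': 448.0}
```

The same formula shows why widening a bus matters: at a fixed data rate, going from 32 to 64 bits doubles peak bandwidth.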

Lastly, we don’t need tests from the unmodified card. We can just compare it to the same card that hasn’t been modified. Just make sure you set the clocks the same and you’re good to go.

I have been a computer animator professionally for close to 8 years, and while that is different from game dev or playing games, there is some overlap. I am inclined to disagree with “not true at all”; I would revise it to “sometimes not true.” They are correct that 4K textures take up significantly more RAM. That’s why computer animators are dying right now that we can’t get our hands on a 3090 for a reasonable price: historically, Quadro was the line designed for maximum RAM, but the 3090 supports NVLink and comes with 24 GB, which was a welcome alternative to upgrading to $6-8k (MSRP) cards.

OK, I am breaking topic. Back to the point. It is worth mentioning that even significantly compressed 4K maps still take up around 500 KB. These are what you may find on leaves. No, I am not saying every leaf gets its own 4K map. You use one 4K map that has all of a tree’s leaves on it, because it takes up less RAM than loading each leaf individually. You will likely start hitting the 1-2 MB mark for less compressed, more complex textures on character faces, ground, houses, etc.

Let’s also remember that LOD saves on VRAM but also has to do the work of compressing the graphics, which, depending on the game, may be the GPU’s domain or the CPU’s. That is up to the developers and the engine they use, but it may be taking up resources as well if it is momentarily caching the larger image to compress and resize it.

Now let’s push it one step further, because we have been assuming color maps this entire time, and those are significantly smaller than normal or bump maps because they do not need the same bit depth. So now we go to RGB16 normal, bump, and displacement textures. These will cost you an arm and a leg. When I work with 4K textures, these usually top 50 MB each, but more compressed versions for games may get down to 10 or 15 MB.

In games these days we most commonly use color, roughness, specular, normal, bump, displacement, alpha, and emission maps. Knowing that, let’s do some math:

Assume we are in a particularly complex scene with 40 high-detail assets visible and, say, 100 low-detail 4K assets, and assume 3 LODs of exponentially lower detail as objects move back in the scene. Add up all the maps at average sizes, based on the bit depths of the maps typically used, and that puts us at around 3 GB of graphics RAM dedicated to textures alone. That may not seem like a lot, but remember we also need to run the scripts, physics engines, and lighting engines on top of that. 3 GB just for textures is a lot more than it was a decade ago.
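
The shape of that estimate can be written down, even though the per-map sizes are the part that varies most between projects. A sketch under stated assumptions: the eight map types listed above, two groups of assets, and each extra LOD at half the resolution of the previous one (a quarter of the memory) with the whole LOD chain resident. The sizes in the example are made-up placeholders, not the commenter’s figures.

```python
# The eight map types from the list above.
MAP_TYPES = ("color", "roughness", "specular", "normal",
             "bump", "displacement", "alpha", "emission")

def lod_chain_factor(levels: int) -> float:
    """Size of a LOD chain relative to LOD0, assuming each level halves resolution."""
    return sum(0.25 ** i for i in range(levels))

def scene_texture_mb(groups, levels: int = 3) -> float:
    """groups: iterable of (asset_count, {map_type: size_in_MB}) pairs."""
    total = 0.0
    for count, map_sizes in groups:
        per_asset = sum(map_sizes[m] for m in MAP_TYPES)
        total += count * per_asset * lod_chain_factor(levels)
    return total

# Placeholder sizes only: 16-bit maps are given larger sizes than 8-bit ones.
SIXTEEN_BIT = {"normal", "bump", "displacement"}
high = {m: (10.0 if m in SIXTEEN_BIT else 2.0) for m in MAP_TYPES}
low = {m: (2.0 if m in SIXTEEN_BIT else 0.5) for m in MAP_TYPES}
print(f"{scene_texture_mb([(40, high), (100, low)]) / 1024:.2f} GB")
```

Swapping in measured map sizes from a real project is the only way to turn this into an actual budget; the structure just shows how asset count, map count, bit depth, and LODs multiply together.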

Now, I do recognize this is a perfect-storm scenario of a “complex” scene or map, but all of this adds up. At least games have an LOD system. I have to sort of work around that issue manually, and my 3D scenes typically need 10-12 GB to render.

You’re not wrong.

I should have been more clear when I said productivity. I was thinking purely consumer/average user level. I wasn’t including things like game dev, special effects and many other jobs/applications for use of the hardware.

If I were you, I would want as much power and memory as I could get my hands on too. I was leaning more towards stuff like streaming, basic editing for something like YouTube, and some Photoshop here and there.

I don’t know why I just used so many words to say I agree with you again lol.

mining takes ram? i thought it just calculated a pseudo-random number, did a hash on it, and then compared the hash to the goal. i thought the point of the gpu was to be able to try many hashes in parallel, but i can’t imagine it’s so many that ram is a consideration?
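
The model in this question does describe Bitcoin-style proof of work, and for that scheme RAM really is a non-issue. A toy sketch, assuming double SHA-256 over a made-up header plus a 4-byte nonce and a target far easier than any real network’s:

```python
import hashlib

def double_sha256(data: bytes) -> bytes:
    """SHA-256 applied twice, as in Bitcoin's block hashing."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def mine(header: bytes, target: int, max_nonce: int = 2**32):
    """Try nonces in order until the hash, read as an integer, falls below target."""
    for nonce in range(max_nonce):
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "little") < target:
            return nonce, digest
    return None  # no nonce in range met the target

# Toy target: about 1 in 4096 hashes qualifies, so this finishes quickly.
HEADER = b"toy block header"
TARGET = 2**(256 - 12)
nonce, digest = mine(HEADER, TARGET)
print(nonce, digest.hex())
```

The only state is the header and a counter, which is why schemes like this run out of compute long before they run out of memory.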

otoh, i can’t imagine how consumer-grade gpus can compete with mining-specific asics or even fpgas since the gpu has to be able to do so much more. sure they’re fast but you’d think the custom solution would be faster per dollar? what edge would nvidia have over an asic there? so there must be things i don’t know about this.

This post brought to you from 2013, courtesy of the Wayback Machine… You’re a little out of date, Greg. SHA-2 ain’t the only game in town.

> i thought the point of the gpu was to be able to try many hashes in parallel, but i can’t imagine it’s so many that ram is a consideration?

If you’re trying to store the DAG on system RAM during GPU mining you’re going to have a bad time. To add some nuance to your understanding, on most proof-of-work schemes the hash needs to include more data than that pseudo-random number.
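
Ethash, the algorithm Ethereum used at the time, is the usual example: each GPU must hold a multi-gigabyte dataset (the DAG) that grows every 30,000-block epoch. A sketch of the size calculation following the published Ethash specification (about 1 GiB at epoch 0, plus 8 MiB per epoch, trimmed so the number of 128-byte rows is prime):

```python
EPOCH_LENGTH = 30_000          # blocks per epoch
DATASET_BYTES_INIT = 2**30     # 1 GiB at epoch 0
DATASET_BYTES_GROWTH = 2**23   # 8 MiB added per epoch
MIX_BYTES = 128                # width of one DAG row in bytes

def is_prime(n: int) -> bool:
    """Simple trial division; fast enough for numbers of this size."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True

def dag_size(block_number: int) -> int:
    """Full DAG (dataset) size in bytes for the epoch containing block_number."""
    epoch = block_number // EPOCH_LENGTH
    size = DATASET_BYTES_INIT + DATASET_BYTES_GROWTH * epoch - MIX_BYTES
    # The spec trims the size until the number of rows is prime.
    while not is_prime(size // MIX_BYTES):
        size -= 2 * MIX_BYTES
    return size

for epoch in (0, 384, 385, 897):
    print(epoch, f"{dag_size(epoch * EPOCH_LENGTH) / 2**30:.3f} GiB")
```

By this formula the DAG first exceeds 4 GiB at epoch 385 and 8 GiB at epoch 897; in practice cards tend to run out somewhat earlier, since the driver and display also need some VRAM.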

> sure they’re fast but you’d think the custom solution would be faster per dollar? what edge would nvidia have over an asic there?

Simple: it takes time to manufacture a new ASIC design, and some blockchains have algorithms specifically designed to hurt ASIC efficiency, usually by requiring access to large amounts of memory at very high speed, which GPUs are great at but which is more difficult for ASIC designers.

Normal encryption, decryption, and hashing don’t require much memory, but brute forcing hash collisions, as is done to generate a new block in a blockchain, can trade between processing power and memory usage. For an n-bit hash, a table of 2^n strings and their hashes could be generated, containing a string for every possible hash value, effectively eliminating the need for any computing power to find collisions. It isn’t an all-or-nothing tradeoff; rainbow tables allow a reduction in processing power with the use of a smaller table.
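
The memory-heavy extreme of that tradeoff can be shown at toy scale. The sketch truncates SHA-256 to a small number of bits (an assumption to keep the table tiny; real hashes are far too wide for this) and precomputes a table, after which finding an input for a given hash value is a dictionary lookup rather than a search. It is a plain lookup table, not a rainbow table; a rainbow table would store only chain endpoints, trading some of that memory back for computation.

```python
import hashlib

def trunc_hash(data: bytes, bits: int) -> int:
    """Top `bits` bits of SHA-256, as a toy stand-in for a small hash."""
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)

def build_table(bits: int, num_inputs: int) -> dict[int, bytes]:
    """Precompute hash -> input for inputs 0..num_inputs-1 (the memory-heavy step)."""
    table: dict[int, bytes] = {}
    for i in range(num_inputs):
        msg = i.to_bytes(8, "big")
        table.setdefault(trunc_hash(msg, bits), msg)
    return table

def find_preimage(table: dict[int, bytes], target: int):
    """Constant-time lookup instead of brute force; None if the table missed this value."""
    return table.get(target)

BITS = 12
table = build_table(BITS, 8 * 2**BITS)  # oversample so almost every value is covered
target = trunc_hash(b"some message", BITS)
msg = find_preimage(table, target)
print(len(table), "entries; input found:", msg)
```

The table costs memory proportional to 2^BITS, which is exactly why this only works for toy hash widths.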

The Burstcoin cryptocurrency does take it to an extreme, using a proof-of-storage system, instead of proof-of-work. It’s still looking for collisions, but using tables to make the computational power minimal but scaling with the available storage. This creates a blockchain that is just as secure, but uses much less electricity.

Would be handy for running beefy neural networks. Something the 20 series still beats the 30 series on.

Or they could compare the 16GB benchmark to an 8GB version online?

(e.g. https://www.videocardbenchmark.net/gpu.php?id=4283 )

That’s what I would do.

Those results would only be truly valid if they had the same memory bus.

>This makes it the only 3070 in the world to work with 16 GB.

Pretty sure that Nvidia would have some samples in their lab(s) for testing/evaluation if they have jumpers and provisions to accommodate this.

I guess maybe the carrier board is fundamentally the same between the 3070 and 3080, just with a different die, memory, power management tuning, and config resistor settings.

They also have different configurations ready for market segmentation, trying to match AMD’s pricing/offering. And they have done that, wiping out a whole slew of SKUs in the confusion after AMD’s 16GB models.

It would be naive for HaD to claim that they don’t try out the different configurations in their labs. Clickbait points are silly.

Just because the manual doesn’t say it is supported doesn’t mean it won’t work. The old IBM 5150 PC only officially handled 640K of memory. By installing IBM memory cards, setting the memory card DIP switches for undocumented memory addresses, and changing the DIP switches on the motherboard, you could get a usable 1 MB of memory on these systems with newer versions of DOS. It also worked with older DOS versions if a custom driver was loaded in config.sys.

Dang, I wish I could forget the chore of setting up high memory in a DOS based PC.

Never did get it to work on my old XT, though my 386DX-33 did have full use of its 4mb of RAM after I got done fiddling with it.

Worse yet, for a time I worked as the IT guy for a company that ran a bunch of 386 workstations on Novell 3.12 and Windows 3.11… Ugh.

Similarly, my new Thinkpad laptop only officially supported 16GB of RAM. But, Intel’s documentation said the max supported RAM for the CPU was 32GB. I decided to try out 32GB, as I’d read that Lenovo only tested on what RAM was available when the product was released, and 32GB sticks weren’t available at the time. And, the 32GB stick worked.

What Thinkpad is that? I’m very much in the market for one at the moment

I have 64 GB of RAM and a 4 TB NVMe drive in my AIO, which was documented as supporting only 16 GB max. You are right: now that bigger modules are available, it still works.

Would be interested to see if a mod would work on older cards like the GTX780 (3GB)

I was thinking the same thing. I had done some (limited) research once the run on GPUs started, and was considering modding some of the 4GB cards to accommodate a larger DAG file.

Crypto miners are already ready to hoard them all, so RIP graphics market for the gamers. It will never return to normal again.

If we are telling old-timer stories… I remember circa 1995 when I soldered extra RAM onto my lower-cost S3 Trio32 video card, which also widened its DRAM interface from 32-bit to 64-bit (effectively transforming it into a Trio64). It was noticeably faster, and more memory was better…

Although games hardly use that amount of VRAM, this could be sooo useful for machine learning… Even for the “modest” models nowadays you need absurd amounts of memory, 12GB+.
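
A common rule of thumb for why models outgrow 8 GB so quickly is to count bytes per parameter. The sketch assumes fp32 weights (4 bytes each) or fp16 weights (2 bytes) for inference and, for fp32 training with the Adam optimizer, weights plus gradients plus Adam’s two moment buffers (16 bytes per parameter). It ignores activations, framework overhead, and mixed-precision tricks, so real usage is higher.

```python
# Rule-of-thumb bytes per parameter (lower bounds; activations not included).
BYTES_PER_PARAM = {
    "fp32_inference": 4,
    "fp16_inference": 2,
    "fp32_adam_training": 16,  # weights + gradients + two Adam moments, 4 bytes each
}

def model_memory_gib(num_params: float, mode: str) -> float:
    """Lower-bound memory estimate in GiB, excluding activations and overhead."""
    return num_params * BYTES_PER_PARAM[mode] / 2**30

for mode in BYTES_PER_PARAM:
    print(f"1B params, {mode}: {model_memory_gib(1e9, mode):.1f} GiB")
```

By this estimate, a model of around half a billion parameters already needs roughly 7.5 GiB just for its training state, before any activations, which is where the jump from 8 GB to 16 GB starts to matter.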