We normally think of atomic clocks as the gold standard in timekeeping. The very definition of a second — in modern times, at least — is 9,192,631,770 periods of the radiation corresponding to the transition between the two hyperfine levels of the ground state of a stationary cesium-133 atom at a temperature of 0K. But there is a move to replace that definition using optical clocks that are 100 times more accurate than a standard atomic clock.

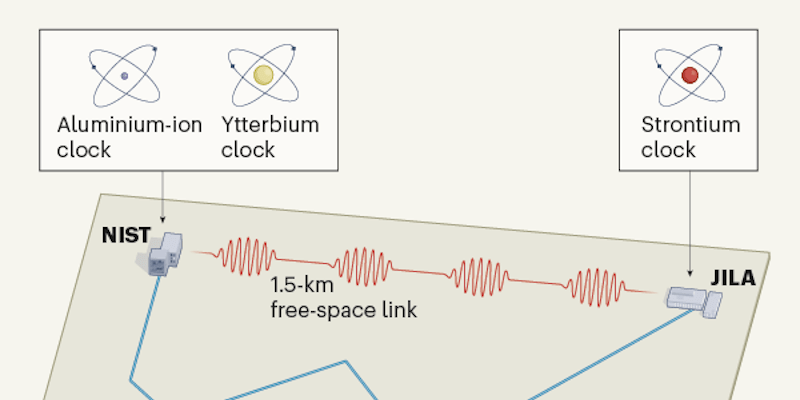

In recent news, the Boulder Atomic Clock Optical Network, otherwise known as BACON, compared times from three optical clocks and found that the times differed a little more than they had predicted, but the clocks were still amazingly accurate relative to each other. Some of the links used optical fibers, a method used before. But there were also links carried by lasers aimed from one facility to another. The lasers didn’t work during a snowstorm, but when they did work, the results were comparable to the optical fiber method.

A standard atomic clock takes a beam of atoms that are in two states and uses a magnetic field to remove all the atoms in one state. The remaining atoms are subjected to a microwave signal which causes some of the atoms to flip to the opposite state. Another magnetic field removes one state and the remaining atoms are counted. When the microwave signal exactly matches the material’s resonance frequency — 9.192631770 GHz, in the case of cesium — the number of atoms changing state will be at a maximum, so you know when you’ve hit the right frequency.
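The tuning step described above, sweeping the microwave frequency and watching for the peak in flipped atoms, can be sketched in a few lines. This is a toy model only: the Lorentzian response shape, the 100 Hz linewidth, and the function names `flipped_fraction` and `find_resonance` are illustrative assumptions, not a description of how any real cesium beam tube behaves.

```python
CESIUM_HZ = 9_192_631_770  # cesium-133 hyperfine frequency in Hz (9.192631770 GHz)


def flipped_fraction(detuning_hz, linewidth_hz=100.0):
    """Toy Lorentzian response: fraction of atoms that flip state.

    detuning_hz is the microwave frequency minus the true resonance.
    The 100 Hz linewidth is an assumed value chosen for illustration.
    """
    half_width = linewidth_hz / 2.0
    return half_width**2 / (detuning_hz**2 + half_width**2)


def find_resonance(span_hz=1000.0, step_hz=0.5):
    """Sweep around the nominal frequency and return the best guess in Hz."""
    steps = int(span_hz / step_hz)
    detunings = [-span_hz / 2 + i * step_hz for i in range(steps + 1)]
    # The detuning that flips the most atoms marks the resonance.
    best = max(detunings, key=flipped_fraction)
    return CESIUM_HZ + best


if __name__ == "__main__":
    print(f"Estimated resonance: {find_resonance():.1f} Hz")
```

A real clock locks onto the peak continuously with a feedback loop rather than scanning once, but the idea is the same: the atom count tells you when the oscillator is on frequency.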

Optical clocks such as the aluminum ion, ytterbium, and strontium clocks have much higher resonance frequencies, high enough to be in the spectrum of light. This allows a much more precise frequency in the same way that a ruler with 32 marks will be more precise than the same ruler with 4 marks. While a cesium clock may be off one second in 100 million years, the optical clocks will take 15 billion years to have the same magnitude of inaccuracy.
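To put those two figures on the same footing, the "one second in N years" numbers can be converted to a fractional error. The short sketch below does only that arithmetic; the Julian year of 365.25 days and the helper name `fractional_error` are assumptions made for the example.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, 31,557,600 s (assumed convention)


def fractional_error(years_per_second_of_drift):
    """Fractional error of a clock that drifts 1 s over the given number of years."""
    return 1.0 / (years_per_second_of_drift * SECONDS_PER_YEAR)


cesium = fractional_error(100e6)   # 1 s in 100 million years
optical = fractional_error(15e9)   # 1 s in 15 billion years

print(f"cesium  ~ {cesium:.1e}")            # about 3.2e-16
print(f"optical ~ {optical:.1e}")           # about 2.1e-18
print(f"ratio   ~ {cesium / optical:.0f}x")  # 150x
```

With these round numbers the optical clocks come out roughly 150 times better, the same order of magnitude as the "100 times" figure quoted earlier.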

If you want to build your own clock, you might start with rubidium at a less accurate 6.8 GHz. If you want to know more about how atomic clocks work, we have just the video for you.

Well great, now my cesium watch is inaccurate garbage.

Glad I stuck with my sundial. A light clock is a light clock; everything old is new again!

Unless the sundial is made of stone, iron, or other heavy substance.

B^)

Keep an eye on Craigslist and eBay for surplus cesium clocks.

The only used cesium clocks you will see are decades old, broken, or cost more than a car.

Some auction site sellers are located in the user URI,

That and any really cheap ones are probably near the end of their useful life. The typical 100 micrograms of caesium-133 (or rubidium-87) inside the resonance lamp of an atomic clock is slowly transferred from one side (exciter lamp) of the physics package to the far end (absorption cell) over the lifetime of the device, and eventually they will just run out of atoms.

Joel said: “The only used cesium clocks you will see are decades old, broken, or cost more than a car.”

Truth said: “That and any really cheap ones are probably near the end of their useful life.”

Nonsense. Cesium beam clocks and frequency references are still made today and are widely used. Currently there are no clocks manufactured for the commercial markets better than cesium beam clocks. One long legacy that lives on today goes: Hewlett Packard > Symmetricom > Microsemi > Microchip.[1]

A mini-industry exists in repairing, refurbishing, and/or replacing the cesium beam tubes. Many refurbished cesium standards and clocks exist in the hands of timekeeping hobbyist-fanatics.[2]

Much of the commercially available supply of cesium clocks is taken up by the financial services industry for use in timing/synchronizing high speed electronic trading. If these new optical clocks are as good as claimed, even if they cost more than cesium clocks the greedy Wall Street goons will snap them up and drop the cesium clocks like hot potatoes – hopefully into my waiting arms.

* References:

1. Cesium Clock and Frequency References

https://www.microsemi.com/product-directory/clocks-frequency-references/3832-cesium-frequency-references

2. Time-Nuts — Precise Time and Frequency for Amateurs

http://www.leapsecond.com/time-nuts.htm

Accurate enough where gravity can be an influence.

Cesium is already that accurate. See https://hackaday.com/2013/09/03/a-weekend-trip-to-verify-general-relativity/

Not a “direct” measurement of relativistic forces (what is?), but pendulums are also already that accurate. Local gravity varies enough on Earth that the pendulum length on a regular tall clock has to be adjusted to where you are. With a reversible pendulum, like a Repsold-Bessel, you can do some very accurate gravimetry (accurate enough to notice the tidal force). Free pendulum clocks like the Shortt-Synchronome also pick up on small changes in gravity.
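For a rough sense of scale on the pendulum point, here is a small sketch using the ideal small-angle formula T = 2π√(L/g). The two gravity values are approximate sea-level figures for the equator and the poles, and the function names are made up for the example; a real clock pendulum is not an ideal point mass, so treat the results as ballpark only.

```python
import math

G_EQUATOR = 9.780  # m/s^2, approximate sea-level gravity at the equator
G_POLE = 9.832     # m/s^2, approximate sea-level gravity at the poles


def pendulum_period(length_m, g):
    """Period of an ideal small-angle pendulum, in seconds."""
    return 2 * math.pi * math.sqrt(length_m / g)


def length_for_period(period_s, g):
    """Length an ideal pendulum needs for the given period, in meters."""
    return g * (period_s / (2 * math.pi)) ** 2


# A "seconds pendulum" has a 2 s period (1 s per swing).
length_eq = length_for_period(2.0, G_EQUATOR)
length_pole = length_for_period(2.0, G_POLE)

# Move the equator-tuned pendulum to the pole without adjusting it.
period_at_pole = pendulum_period(length_eq, G_POLE)
drift_per_day = 86400 * (2.0 / period_at_pole - 1.0)

print(f"length change needed: {(length_pole - length_eq) * 1000:.2f} mm")
print(f"unadjusted clock gains about {drift_per_day:.0f} s per day")
```

Under these assumptions the pendulum needs about 5 mm of adjustment between the two extremes, and left alone it would gain several minutes a day.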

So again it is confirmed that adding bacon is always an improvement.

Does anyone else find it bothersome that our definition of the speed of light is now fully obviously circular?

I can get why normals didn’t see it when we changed to a cesium timebase, and I get a rotatoinal timebase was inaccurate, but it was at least not self refernecial.

yeah, I can’t spell, haven’t slept in days. If you want to pick on that, don’t bother, it only proves you extratentorical.

You probably should enclose your comments with the lesser known html tag

The meter is defined using the speed of light and the frequency of an atomic oscillation. This is redefining the second from one element’s oscillation to another’s optical oscillation, which occurs at a higher frequency.

I think you may be interpreting the laser link as a component of the measurement. That link is used to compare the measurements of the 3 atomic clocks at the 2 facilities, not as part of the oscillator itself. That becomes more crucial because we know the offset due to General Relativity for a fixed location. That can be calculated independently, but moving the clocks will add an error. Say you have a 1 meter / hour measurement error on the velocity for 1 second: that’s about a 0.3 mm difference in position. Thanks to time dilation, this would add about a 1E-11 second error when comparing the results. Pretty tiny. Except the accuracy estimates are 2E-18. This would be the dominant source of error over a timeline of less than 1 year.

>our definition of the speed of light is now fully obviously circular?

No it’s not. Only, the meter and the second are defined as relative to the speed of light by an arbitrary constant.

The speed of light itself exists regardless of the definition of the second and the meter. Logically, if electromagnetic radiation is passing through a vacuum, when there is nothing to affect it except space itself, then whatever speed it has is the speed of light. It needs no other definition. When we say “the speed of light”, we are actually giving you the length of the second and the meter relative to this universal constant.

The common objection is, “Well, if we just assume that the speed of light is constant, and we define our yardsticks and stopwatches by it, then how do we know that it isn’t changing all the time? All the tools we use to measure the speed of light are defined by the speed of light in the first place.”

Easy: take the previous yardstick you made, make a new one, and compare whether they came out the same. Compare whether the clocks you’ve made run at the same rate. If the speed of light changed between measurements, then the new and old standards would differ.

People often get confused by the fact that we keep “changing” the constants used to define the meter and the second as we improve the measurement, but what’s happening is, we’re simply adding more decimal points. It doesn’t change the previously established value which had an uncertainty of plus-minus something in the first place.

If at some point we find that the results of measuring the speed of light no longer converge towards a single value within the uncertainties of all the previous measurements, we would find the level of precision to which either our measuring apparatus or the speed of light is variable. So far we’ve always found a way to make a better measuring device, which shows that the speed of light is constant to at least that precision. This also limits the rate at which the speed of light could vary if it were variable by itself.

You can see a sampling of the measurements and their uncertainties in past experiments in this chart:

https://static4.olympus-lifescience.com/data/olympusmicro/primer/lightandcolor/images/lightspeedfigure6.jpg?rev=8DEC

The measurements started with astronomical observations. The value got more precise from 1920 onward with radio propagation experiments and cavity resonators, which might have been precise, but the conditions were not well controlled. Then the 1960s saw the introduction of the laser, and the value for the speed of light was pinned down in vacuum chamber experiments to a very high precision and accuracy.

Hope these are cheaper in small packages and quantities.

I’m still saving up for the microsemi chip scale atomic clock!

I wonder just how revolutionary cheap but highly accurate clocks would be?

probably not all that much…

Optical and quantum clocks rely on atoms being cooled to temperatures of a few microkelvin above absolute zero. I do not think that they will fit in your pocket any time soon. I’d like to be wrong.

Reading the words „magnitude of inaccuracy“ in a text about clocks that are off one second in 0.1 / 15 billion years feels odd

The Big Bang was just God’s way of resetting his atomic clock.

Now, if only we could get more fiber optic communications implemented and fewer wireless systems, since obviously wireless systems will have more interruptions. :-|)

So an optical clock is just an atomic clock using different atoms?

I checked on LinkedIn to see how many degrees of separation I am from someone in BACON, and it was a disappointingly low 2.

You can get a pre-owned rubidium frequency standard from eBay for $300 to $800. Cell sites use them and they are replaced periodically.