Our trip through the world of audio technology has taken us step by step from your ears into a typical home Hi-Fi system. We’ve seen the speakers and the amplifier; now it’s time to take a look at what feeds that amplifier.

Here, we encounter the first digital component in our journey outwards from the ear, the Digital to Analogue Converter, or DAC. This circuit, which you’ll find as an integrated circuit, takes the digital information and turns it into the analogue voltage required by the amplifier.

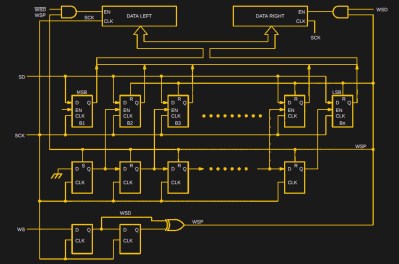

There are many standards for digital audio, but in this context the one used by the CD is most common. CDs sample audio at 44.1 kHz with 16-bit resolution, which is to say they express the level as a 16-bit number 44100 times per second for each of the stereo channels. There’s an electrical standard called i2s for communicating this data, consisting of a serial data line, a clock line, and an LRclock line that indicates whether the current data is for the left or the right channel. We covered i2s in detail back in 2019, and should you peer into almost any consumer digital audio product you’ll find it somewhere.
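To put numbers on that, here is a small sketch of the arithmetic. The function names are made up for illustration, and the 32-bit slot width in the second example is an assumption about a common configuration rather than anything specific to the CD.

```python
# CD audio parameters as described in the text.
SAMPLE_RATE_HZ = 44_100
BITS_PER_SAMPLE = 16
CHANNELS = 2


def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels):
    """Raw PCM payload in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def i2s_clocks(sample_rate_hz, bits_per_slot):
    """Return (LRclock, bit clock) in Hz for a two-channel stream.

    The LRclock toggles once per sample period, and the bit clock
    ticks once for every bit of both the left and right slots.
    """
    lrclk_hz = sample_rate_hz
    bclk_hz = sample_rate_hz * bits_per_slot * 2
    return lrclk_hz, bclk_hz


print(pcm_bit_rate(SAMPLE_RATE_HZ, BITS_PER_SAMPLE, CHANNELS))  # 1411200
print(i2s_clocks(SAMPLE_RATE_HZ, 16))  # (44100, 1411200)
print(i2s_clocks(SAMPLE_RATE_HZ, 32))  # (44100, 2822400), assumed 32-bit slots
```

So a CD-quality stream needs a bit clock of only about 1.4 MHz, which goes some way to explaining why the interface has aged so gracefully.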

Making A DAC Is Easy. Making A Good DAC, Not So Much.

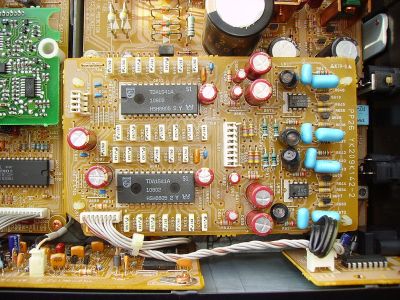

Remembering that i2s is a technology from the end of the 1970s, it’s a surprisingly simple one to create a DAC for. The original Philips specification document contains a circuit using shift registers and latches to capture the samples, which can be fed to a simple resistor ladder and filter to perform the conversion. This is an effective way to turn digital to analogue, but as with every audio component it carries with it a level of distortion.
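As a rough illustration of what the ladder does, the following sketch models an ideal binary-weighted conversion in software. It assumes signed two's complement samples that are shifted to an unsigned code before conversion, and a hypothetical 1.0 V reference; the function names are invented here and do not come from the Philips document.

```python
def signed_to_ladder_code(sample, bits=16):
    """Shift a signed two's complement sample to an unsigned ladder code."""
    half_scale = 1 << (bits - 1)
    if not -half_scale <= sample < half_scale:
        raise ValueError("sample out of range for the given bit depth")
    return sample + half_scale


def ladder_output(code, v_ref=1.0, bits=16):
    """Ideal ladder: each set bit adds a binary-weighted share of v_ref."""
    voltage = 0.0
    for k in range(bits):
        if (code >> k) & 1:
            # Bit k carries a weight of 2^k out of 2^bits.
            voltage += v_ref * 2.0 ** (k - bits)
    return voltage


# Digital silence sits at mid-scale, full positive swing just below v_ref.
print(ladder_output(signed_to_ladder_code(0)))       # 0.5
print(ladder_output(signed_to_ladder_code(-32768)))  # 0.0
```

A real ladder departs from this ideal wherever its resistors are not perfectly matched, which is one source of the distortion mentioned above.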

If you look at the output of any DAC in the frequency domain rather than the time domain, you’ll find noise along with the spectrum of whatever signal it is processing. For instance, the sampling frequency will be there, as will a multitude of spurious mixer products derived from it and the signal. In an audio DAC all this out-of-band noise can manifest itself as distortion.
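Standard sampling theory places those products at multiples of the sampling frequency plus and minus the signal frequency. The sketch below simply lists the first few for a given tone; the function name is hypothetical, and it ignores the amplitude of each product, which depends on the DAC's output stage.

```python
def image_frequencies(signal_hz, sample_rate_hz, max_multiple=3):
    """List the image frequencies k*fs - f and k*fs + f for k = 1..max_multiple."""
    images = []
    for k in range(1, max_multiple + 1):
        images.append(k * sample_rate_hz - signal_hz)
        images.append(k * sample_rate_hz + signal_hz)
    return images


# A 1 kHz tone leaves a comfortable gap before the first image...
print(image_frequencies(1_000, 44_100, 1))   # [43100, 45100]
# ...but a 20 kHz tone has an image only 4.1 kHz above it.
print(image_frequencies(20_000, 44_100, 1))  # [24100, 64100]
```

The second case shows how little room there is for a filter to work in between the top of the audio band and the first unwanted product.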

The problem faced by audio DAC designers is that the sampling frequency is relatively close to the signal frequency, so while the low-pass filter does its best to remove the offending spectrum, it has a difficult job. As an example, back in 2020 we took a look at the CampZone 2020 badge, an audio playground that used a very cheap DAC to keep costs down. The Shenzhen Titan TM8211 i2s DAC is a single-chip implementation of something close to that Philips DAC circuit, and while it boasts an impressively low price, the noise is clearly audible on its output in a way that it wouldn’t be on a more expensive chip.

Shifting The Problem Upwards For A Better Sound

The solution found by the DAC designers of the 1980s and 1990s was to move that out-of-band noise up in frequency such that it could be more efficiently rejected by the filter. If you remember CD players years ago boasting “oversampling”, “Bitstream”, or “1-bit DAC”, these referred to the development of more advanced DAC designs that performed the shift upwards in out-of-band noise frequency by various techniques. At the time this was a hotly contested marketing war between manufacturers, as a CD player was seen as a premium device. That is hard to picture three decades later, when a CD is something that has to be explained to children who have never seen one.

All of these are essentially sigma-delta DACs, and they approach the problem of moving the out-of-band noise upwards by producing pulse chains at a high multiple of the sample clock, where the number of pulses corresponds to the value of the sample being converted. By sampling with lower resolution, but much faster, the associated out-of-band noise is shifted much higher up the frequency range, which makes the job of separating it out from the signal much easier. The pulse stream can then be decoded into an analogue signal by means of a fairly straightforward low-pass filter. These are the “Bitstream” and “1-bit” DACs advertised on those 1990s CD players, and what was once the bleeding edge of audio technology is now commonplace.
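The idea can be sketched in a few lines of software. What follows is a minimal first-order modulator, written under the assumption that each input sample is simply held for a fixed number of output pulses; real chips use interpolation filters and higher-order noise shaping, and the function name and oversampling ratio here are illustrative only.

```python
def delta_sigma_1bit(samples, oversample=64):
    """Turn samples in the range -1.0..1.0 into a stream of +1/-1 pulses.

    An integrator accumulates the difference between the input and the
    previous output pulse, and a comparator decides the next pulse.
    """
    integrator = 0.0
    previous_pulse = 0.0
    pulses = []
    for x in samples:
        for _ in range(oversample):
            integrator += x - previous_pulse
            previous_pulse = 1.0 if integrator >= 0.0 else -1.0
            pulses.append(int(previous_pulse))
    return pulses


# A constant input of 0.25 becomes a pulse train whose average is close to 0.25.
stream = delta_sigma_1bit([0.25], oversample=1024)
average = sum(stream) / len(stream)
print(stream[:8], round(average, 3))
```

Averaging the pulses, which is all the low-pass filter really does, recovers the original level even though every individual pulse is either fully on or fully off.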

A Good DAC Can’t Compensate For A Poor Source

Although a good DAC makes a huge contribution to audio quality, we’ve assumed that the digital data comes from a source without much compression, such as a CD. CDs are now no longer mainstream, and the data is much more likely to have come from a compressed source such as an MP3 file or an audio streaming service. Compression is a topic in itself, but it’s worth making the point that the quality of the audio expressed on the data stream reflects the characteristics of the compression algorithm used, and no matter how good the DAC may be it cannot make up for the quality of its source.

This series will return for its next installment, where we’ll address the concerns of the vinyl and tape aficionados who were screaming at the assumption that there would be a DAC in the signal chain. While once-dominant analogue audio formats such as the LP record and the cassette tape may now command a fraction of the market they once had, their rediscovery in recent years has led to a minor resurgence in their popularity. It’s still by no means unusual for a high-end audio system to have analogue source components, so they are very much worth a look.

A DAC with low clock jitter is a rare treasure

For all the effect clock jitter has on the DAC, it is that much more critical for an ADC.

I’m working on a design for professional audio, and just having a free-running 24.576MHz oscillator operational near the digital logic carrying the master clock (same frequency, but not locked to the crystal) introduced enough jitter to generate measurable frequency spurs in the idle channel output of the ADC. The oscillator was used for testing, and it was a simple matter to disable it when not needed.

Most jitter you will encounter is going to come from a PLL-recovered clock on a digital interface (e.g. S/PDIF). There are designs out there that have low enough jitter so it is not a limiting factor in the product.

The more “real” bits your DAC/ADC has, the less it will suffer from clock jitter.

I’m not sure what you mean by “the more real bits.. the less it will suffer from clock jitter”. I’m guessing by “real” bits you mean the ADC/DAC linearity. That is all well and good, but even with “perfect” quantization, clock jitter is still clock jitter, as the change in the sample point cannot be distinguished from an error in linearity and hence it produces “spurs”, and these spurs can mix and produce more spurs due to IM.

So no, better linearity does not reduce the “suffering” from clock jitter, it just reduces one of the sources of generation/reproduction noise. You can simulate all these error sources easily enough to verify it for yourself.

Clock jitter for an ADC would only matter on the down stream transport to the digital file master.

For DACs there already exist femto clocks from RME, Schiit and other manufacturers to clean up and sync USB data in.

The master clock should not be linked to, or be a harmonic of, the data clock. PCM and Optical aren’t an issue of USB data loss or sampling errors/DC level noise/60 Hz line noise. If the master clock is suffering from drift it’s not a good clock. If the master clock is susceptible to line voltage fluctuations, you have a bad power supply (should have a linear integrated supply, non switching).

Insofar as “real bits” goes, I’m sure she is referring to the dynamic range, where a rising noise floor can rob advertised bit depth and cause poor implementations advertised at 24-bit depth to have only 20 or so “real bits”. This would have no impact on quantization, only on analog signal degradation. The information is plotted correctly, but the overall quality of the signal doesn’t come out 24/192… it’s more like 20/192.
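The usual way to turn a measured dynamic range into “real bits” is the textbook effective-number-of-bits relation, ENOB = (SINAD − 1.76 dB) / 6.02 dB. The sketch below applies it; the function names and the 122 dB figure are illustrative assumptions, not measurements of any particular DAC.

```python
def ideal_sinad_db(bits):
    """SINAD of an ideal converter with the given number of bits."""
    return 6.02 * bits + 1.76


def effective_bits(sinad_db):
    """Effective number of bits (ENOB) implied by a measured SINAD."""
    return (sinad_db - 1.76) / 6.02


print(round(ideal_sinad_db(24), 2))   # 146.24 dB needed for a true 24 bits
print(round(effective_bits(122), 1))  # a hypothetical 122 dB SINAD is about 20.0 bits
```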

By “real” bits I mean the actual number of quantization levels after delta-sigma modulation. I have seen as many as 66 levels (approx. 6 bits of resolution) in oversampling DACs.

Oversampling allows the exchange of sampling rate for quantization levels. This is common practice because it is generally easier (read: cost effective) to implement a very stable clock reference than it is to precisely match binary weighted elements. In order for this to be useful, most of the quantization noise generated by this process needs to be as far away from the band of interest as possible. This is where the delta-sigma modulator does its thing.

Uncorrelated jitter in a reference clock of any kind is equivalent to phase noise. On an oversampling DAC, this phase noise is converted to amplitude noise in the output, as each discrete time interval in the output varies slightly. A linearity error in a binary-weighted DAC output shows up as distortion, as the errors are strongly correlated with the input data.
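A common rule of thumb for how much amplitude noise random jitter produces is SNR = −20·log10(2π·f·σ), for a full-scale sine of frequency f and an RMS jitter of σ seconds. It is a generic sampling approximation rather than a model of any specific oversampling DAC, so treat this sketch (and its function name) as a rough guide only.

```python
import math


def jitter_limited_snr_db(signal_hz, rms_jitter_s):
    """Approximate SNR ceiling set by random clock jitter on a full-scale sine."""
    return -20.0 * math.log10(2.0 * math.pi * signal_hz * rms_jitter_s)


# Worst case in the audio band is the highest frequency, here 20 kHz.
for jitter_s in (1e-9, 100e-12, 1e-12):
    snr_db = jitter_limited_snr_db(20_000, jitter_s)
    print(f"{jitter_s:.0e} s RMS jitter -> about {snr_db:.0f} dB")
```

By this estimate 1 ns of RMS jitter caps a 20 kHz tone at roughly 78 dB, and every tenfold reduction in jitter buys another 20 dB.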

Consider the following: a binary-weighted DAC and a 1-bit oversampling DAC are both set to output a constant mid-scale value. The theoretical noise floor of the binary-weighted DAC is the power sum of all forms of electronic noise in the circuit (thermal noise, shot noise, flicker noise, etc.) in the frequency band of interest. You could adjust or even completely halt the sampling clock and this will not change, as the output level is always the same.

On the other hand, the 1-bit oversampling DAC must be constantly switching between its quantization levels (1 and 0) so that over time its output equals the desired mid-scale value. As long as the modulator is doing a good job of keeping the quantization noise out of the band of interest the output remains relatively quiet.

If you halt the sampling clock then the output will go all the way to 0% or 100%. Adding jitter to the clock causes the output to be affected beyond the control of the modulator. If the clock has phase noise present in the band of interest, it will directly contribute to amplitude noise in the output.

A hybrid approach uses some number of real, discrete quantization levels in the output, and the delta-sigma modulator only toggles between a few of them (the remainder being directly controlled by the most-significant bits of the codeword input). Thus the phase noise in the clock used for oversampling affects only a subset of the available quantization levels.

I have followed audio since the late 90s and knew only multibit and sigma-delta DACs until recently, when I discovered the SANYO LC7801 (embodied in the Musical Fidelity CDT, the ‘frog’ CD) and found its peculiar approach similar to what you call the ‘hybrid approach’. Do you know of other chips using it? Since you seem very knowledgeable, could you comment on the current flagship ESS Sabre DAC family and/or the SACD principle?

Somehow I knew we would get one of those at the top of the comments :)

Remember, any time you see someone even mention _jitter_ when it comes to audio you know they are an audiophool talking nonsense. Blind test studies proved even “golden ears” idiots can’t tell the difference up to hundreds of nanoseconds of jitter. For example https://www.jstage.jst.go.jp/article/ast/26/1/26_1_50/_pdf The cheapest crystal oscillators have RMS jitter measured in picoseconds, and obviously no one ever used RC oscillators in CD players :-) yet you see those morons replace factory crystals with “audio quality” snake oil ones to “fix jitter”.

One thing that often helps; turn the time units into distance units. The speed of sound in air is 330 metres per second, give or take.

Being able to hear 500 nanoseconds of jitter is equivalent to being able to hear a 0.2 millimetre movement of the sound source. That’s a tiny shift that you should be able to reliably detect in the real world if you really can hear 500 ns of jitter.
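The conversion is a single multiplication, sketched here with the 330 m/s figure from above (the function name is just for illustration):

```python
SPEED_OF_SOUND_M_PER_S = 330.0  # approximate value quoted above


def jitter_as_distance_mm(jitter_s, speed_m_per_s=SPEED_OF_SOUND_M_PER_S):
    """Distance sound travels in air during the given time, in millimetres."""
    return jitter_s * speed_m_per_s * 1000.0


print(round(jitter_as_distance_mm(500e-9), 3))  # 0.165, i.e. roughly 0.2 mm
```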

Given that a tweeter at 20 kHz can still have excursions of 0.5 mm quite happily, and a subwoofer can have an excursion of over 100mm, that’s implausible – it implies that you can reliably (at a distance) identify which speaker cone is responsible for the sound, and whether it started when the cone was at one extreme of movement or the other.

So the real problem was the 16 resistors needed to balance the bits of the DAC had to be ridiculously accurate, driving up cost or just delivering poor conversion.

A single-bit DAC essentially PWMs the signal, and there is no need to balance the resistors.

It’s kind of like how we don’t have to tune multiple carburetors anymore, we just use matched fuel injectors and let the computer set the fuel mixture.

It’s not that multiple carbs resulted in poor performance, just that it’s much simpler to achieve with a single automatic adjustment, and it’s more likely to stay in “tune”.

https://forums.stevehoffman.tv/threads/have-1-bit-dacs-killed-hi-end-cd-players.991673/

https://www.stereophile.com/content/pdm-pwm-delta-sigma-1-bit-dacs

R2R’s precision film resistors are a work of art though

Even though the course in IC design I took was back in 1975, a lot of what I learned then is still applicable. One thing I recall was that analog circuits worked so well, in spite of the lousy tolerance of about 20% (or worse) for IC resistors at the time, because their ratios were much tighter and only got better over time (resistance is based on ionization, whereas the ratios are based on areas controlled by lithography). This was mostly before laser or other methods (e.g. fuses) of trimming became popular.

It’s the ratios of resistances that affect accuracy, along with things like the balance between op amp inputs, which rely on balancing the current ratios of differential amplifiers. The same is true for R2R networks: absolute accuracy is less important than ratiometric balancing of resistances, but that applies for the entire chain, so for 8-bit accuracy you need all resistors to be balanced to within 1/2 * 1/256 for 1/2 LSB linearity. This scales up to higher-bit quantizations.
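Applying that 1/2 LSB rule to other bit depths shows how quickly the requirement tightens. This is only the arithmetic from the comment above, with a hypothetical function name:

```python
def matching_tolerance(bits):
    """Fractional resistor ratio matching needed for 1/2 LSB linearity."""
    return 0.5 / (2 ** bits)


for bits in (8, 12, 16):
    percent = matching_tolerance(bits) * 100.0
    print(f"{bits:2d} bits: matched to within {percent:.5f} %")
```

At 8 bits that is about 0.195 %, while at 16 bits it is below 0.001 %, which is why a 16-bit ladder is so demanding to build.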

Something not mentioned here is monotonicity. Even if the linearity is only 8 bits, it is possible to achieve “better SNR” if the ADC/DAC is monotonic to more bits. Why is monotonicity important? Well, because it’s required to create a linear output with fewer spurs (not all noise is equally “bad”). It’s the difference between a function and a relation. A function is monotonic (i.e. each input value has a unique output value), so it is reversible, but a relation (e.g. something that’s not monotonic) is not reversible (since there can exist more than one input value for any output value). In set theory, this property is called homomorphism.

Also not mentioned is that any gain from oversampling requires “clean” noise (i.e. Gaussian). “Dirty” noise does not average well, and all low-pass filtering is essentially an averaging process.

“A function is monotonic (i.e. each input value has a unique output value), so it is reversible, but a relation (e.g. something that’s not monotonic) is not reversible (since there can exist more than one input value for any output value).”

Err… I have a bit of a problem with this definition. In order to keep the confusion to a minimum, it’s safer to say that a function does not have more than one output for a given input (by virtue of the vertical/Y test). Also, given the nature of periodic functions, not to mention many others, it should be noted that not all functions are reversible due to multiple domain values matching a specific range value.

When you said: “..not all functions are reversible due to multiple domain values matching a specific range value”, you defined a relation, not a function. Some relations can be made functions by rotating axes, but not all.

All real-world processes are functions (and I don’t include those that can be created with an FPGA or micro). You can only apply ordinary calculus to continuous functions, although it’s possible to integrate piece-wise continuous functions, literally ‘in pieces’.

Nice to see a post about digital audio that understands the importance of time… One gets tired of all those posts online from newbie know-it-alls who argue it is all fine as long as the bits are all there…

A crate of car parts, a badly assembled car, and a well built car… They can all have exactly the same car parts, but result in very different performance when you attempt to drive them.

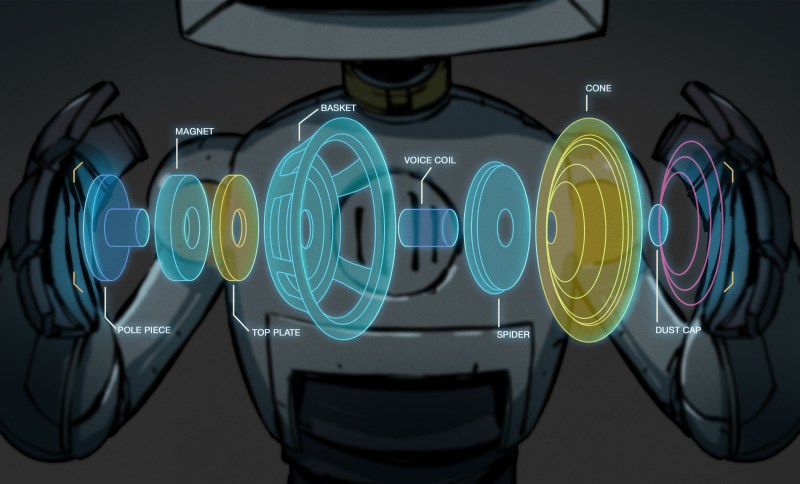

The Title Illustration, (Thanks, Joe Kim!) reminded me of a web search I did earlier this year.

I was hoping to find a company that would recone the mid/tweets in my brother’s old KLM bookshelf speakers.

I did not find one willing to take on the job, but a company in California is doing “land office business” reconing the huge bass speakers for auto hi-fi(?).

I ended up buying some speakers that were close enough to the hole size. Neither my brother nor I are “golden eared” enough to know the difference. (Heck, I don’t recall if we “knew” the mid/tweets were blown, until we put our ears right up to them! B^)

> huge bass speakers for auto hi-fi

I had a pair of 18 inch ex-movie theater woofers jammed into a 1985 Cavalier Wagon, once. Blew the back window out (without breaking it!) running them up once. They don’t make ’em like that anymore.

But we can get big batches of cheap high power bare voice coils now and make our own sounding boards and resonators.

It’s very easy to buy new cone material and redo it yourself. YouTube videos and everything. Did it for a pair of nice blown-out Boston Acoustics.

In your signal chain, any modern DAC from Topping or the like is going to be the least of your problems, and it can be had for $200 and below. They measure to the extremes now, and I will bet a handsome sum that a pure A/B test will make you see that there is no difference in this category of SINAD 120 dB, plus and minus 10 dB. Granted, a lot of so-called hi-fi DACs are not even at that level, but they would for most people still be transparent. Your problem is going to be in the speakers and their frequency curve combined with your room, and to a lesser degree your amps and their distortion level. We should admire the engineering level that is going into these new generations of DACs, and their approach to the limits of even the best measuring equipment that we have, and push towards it for the “peace” in our souls and purchase decisions. But let’s not kid ourselves that we could reliably hear the difference; any old amp or speakers combined with the room you are listening in will give you a much higher chance of a change in frequency and distortion that you might find more pleasurable, or less so, than a modern DAC.

Very true. Readers of this whole series of Know Audio posts will understand that it applies the magnifying glass to all parts of the chain.

Maybe I’m completely wrong here. But do you really need DAC when most common amplifier today is D class.

Very true, a class D amplifier is one type of one-bit DAC. And indeed, there are all-digital chains from I2S stream right up to the low-pass filter behind the speaker.

Yes; because the typical class D amplifier is an analog circuit (often) oscillating the pulse width on the “digital” output. This circuit is also looking at a feedback signal from the output which affects how it modulates it. This is not a system where you can just feed a precise digital bit-stream into this final set of “digital” output drivers.

(I use quotes on the word “digital” in some context here because of the propensity to conflate something having two discrete states with a notion that it necessarily has a discrete value, like the case when considering the circuits in digital logic.)

My previous comment was intended to be attached as a reply to Boris’s comment of “Maybe I’m completely wrong here. But do you really need DAC when most common amplifier today is D class.” I’m probably blocking so much fsck’ing javascript that I threw a wrench in that process.

“… their rediscovery in recent years has led to a minor resurgence in their popularity.”

A lot of people seem to believe this. I have never met anyone able to explain why they believe it.

I think it’s the same reason some enjoy steampunk and such. It’s not nostalgia, which is really based on prior, personal experience, but more of historical value, like a Cuckoo or grandfather clock.

A long time ago, closer to the KaZaA heyday, I heard someone predict a paradoxical increase of emphasis on authenticity. When everything you’re used to listening to is ephemeral/intangible, readily duplicated, etc., folks seek out and return to valuing things that are verifiably “authentic” and tangible. CDs are tangible but easily duplicated. Vinyl is even more tangible and impossible to duplicate for most (sure you can rip to digital but you don’t get an LP out of that process). Even better (in this view), a single copy acquires unique defects and noise, meaning that copy is no longer equivalent to any other: it becomes non-fungible (which CDs do not). Yes, this old Theory of Everything manages to explain the NFT craze as well. Wish I could remember where I read it so I could credit the author.

“I heard someone predict a paradoxical increase of emphasis on authenticity. ”

I guess that is why Non-fungible Assets became so popular!

B^)

“MAKING A DAC IS EASY. MAKING A GOOD DAC, NOT SO MUCH.”

Best audiophile headline ever!!!!!

All hail the linear power supply earable difference fright is maybe not correct word but on well known track a heavy bass part was French me my Q Acoustics 2020 did not sound like that and it wasn’t short on the wattage on switching supply.

Usb isolator more comfort of mind cause I could not hand heart say would notice if asked someone remove said device but leave looking like signal chain was there, lack of power green led would get me prodding, so that’s moot. Plus who would tease their trusted DAC like that and don’t care enough to do testing to find out.