C and C++ are powerful tools, but not everyone has the patience (or enough semicolons) to use them all the time. For a lot of us, the preference is for something a little higher level than C. While Python is arguably more straightforward, sometimes the best choice is to work within a full-fledged operating system, even if it’s on a microcontroller. For that [Chloe Lunn] decided to port Unix to several popular microcontrollers.

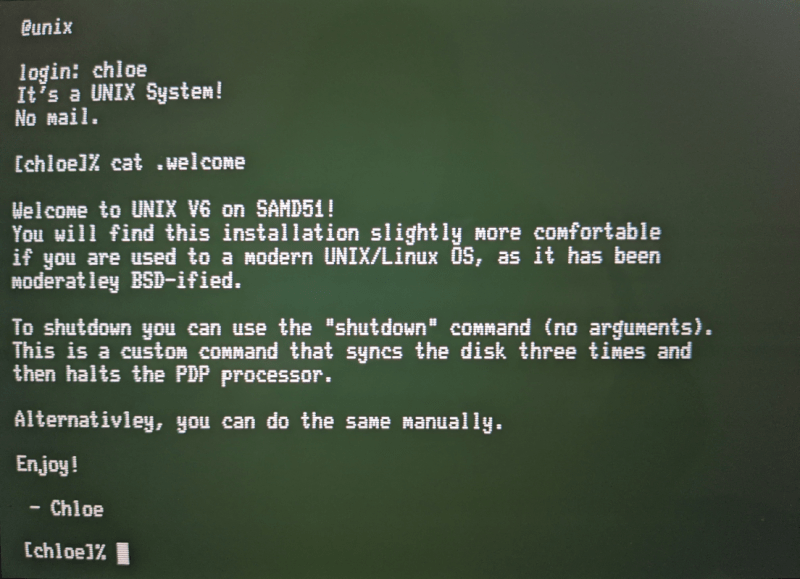

This is an implementation of the PDP-11 minicomputer as an emulator, running a Unix-based operating system. The PDP-11 was a popular minicomputer platform from the ’70s until the early ’90s and influenced many computer and operating system designs of its time. [Chloe]’s emulator runs on the SAMD51, SAMD21, Teensy 4.1, and any Arduino Mega, and is easily portable to other microcontrollers. It can already boot and run Unix, but it is still missing support for some interfaces and other hardware.

[Chloe] reports that performance on some of the less capable microcontrollers is not great, but that it runs perfectly on the Teensy and the SAMD51. This isn’t the first time someone has felt the need to port Unix to something small; we’ve previously featured a build that uses the same PDP-11 implementation on a 32-bit STM32 microcontroller.

Comparing an operating system to a programming language is top notch trolling imho. Nice job.

you mean they’re not the same thing? But isn’t Unix just C?

No, it isn’t just C, it is U, N, I, C and S.

Makes sense to me.

Writing software for a micro in C limits you to C code that you or someone else wrote for that micro.

Utilizing an entire UNIX BSD OS on a micro now lets you use code you and others have written in hundreds of different programming languages, all at the same time if you want, and decades worth of tools and libraries to accomplish your task.

That we have “lowly microcontrollers” with enough computation power for just a few dollars where such options are even possible should be plenty awe-inspiring in itself.

The lack of vision, creativity, and the hacker mindset lately is quite sad and depressing.

“Writing software for a micro in C limits you to C code that you or someone else wrote for that micro.”

No, it doesn’t. Writing software in C means you can compile it for any machine for which there is a C compiler. That was the whole point of it.

But that’s not the issue here. Here, the issue is that most operating systems require more memory than most microcontrollers can directly address. But by using an emulator that CAN fit within a microcontroller, with external memory attached, not only the CPU but also the memory can be virtualized, expanding it to pretty much arbitrary limits, at the expense of speed.
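To make the “virtualize the memory at the expense of speed” idea concrete, here is a minimal C sketch, not taken from the project: a small direct-mapped cache of 64-byte pages in fast internal RAM, sitting in front of a slower backing store. The `backing` array stands in for external SPI RAM or an SD-card swap file; all names and sizes are hypothetical.

```c
#include <stdint.h>
#include <string.h>

#define PAGE_BYTES    64u
#define CACHE_PAGES   8u                /* fast internal RAM: 512 bytes of cache */
#define BACKING_BYTES (256u * 1024u)    /* slow store: 256 KiB of emulated memory */

/* Stand-in for external SPI RAM or a swap file (hypothetical). */
static uint8_t backing[BACKING_BYTES];
static unsigned backing_reads, backing_writes;   /* count trips to the slow store */

struct line { uint32_t tag; int valid, dirty; uint8_t data[PAGE_BYTES]; };
static struct line cache[CACHE_PAGES];

/* Find the cache line for addr (which must be < BACKING_BYTES), loading it on a miss. */
static struct line *lookup(uint32_t addr) {
    uint32_t page = addr / PAGE_BYTES;
    struct line *l = &cache[page % CACHE_PAGES];
    if (!l->valid || l->tag != page) {
        if (l->valid && l->dirty) {              /* write back the evicted page */
            memcpy(&backing[l->tag * PAGE_BYTES], l->data, PAGE_BYTES);
            backing_writes++;
        }
        memcpy(l->data, &backing[page * PAGE_BYTES], PAGE_BYTES);
        backing_reads++;
        l->tag = page;
        l->valid = 1;
        l->dirty = 0;
    }
    return l;
}

static uint8_t mem_read8(uint32_t addr) {
    return lookup(addr)->data[addr % PAGE_BYTES];
}

static void mem_write8(uint32_t addr, uint8_t v) {
    struct line *l = lookup(addr);
    l->data[addr % PAGE_BYTES] = v;
    l->dirty = 1;                                /* flushed only on eviction */
}
```

Hits cost an array access; every miss costs a page transfer from the slow store, plus a write-back if the evicted page was dirty. That is where the speed penalty comes from.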

No external memory needed for the SAMD51 or Teensy, in fact.

The 11/40 only had a 4MB bus and only 248KiB memory, the emulator just uses the internal ram for this.

It’s only on the micros with less RAM that you have to add other methods, like the swap file or external RAM options.
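The 248 KiB figure follows from the UNIBUS’s 18-bit physical addressing: 2^18 bytes is 256 KiB, and the top 8 KiB is reserved as the I/O page for device registers. A 16-bit PDP-11 program still only sees 64 KiB at a time; the memory management unit maps each of its eight 8 KiB virtual pages through a page address register (PAR) counted in 64-byte blocks. The C sketch below shows both, simplified: it ignores the page descriptor registers (length and access checks) and the separate kernel/user register sets, and the function names are illustrative only.

```c
#include <stdint.h>

#define UNIBUS_ADDR_BITS 18u
#define IO_PAGE_BYTES    (8u * 1024u)   /* top 8 KiB of the bus is device registers */

/* Total bytes addressable on an 18-bit bus. */
static uint32_t unibus_space_bytes(void) { return 1u << UNIBUS_ADDR_BITS; }

/* What is left for RAM once the I/O page is carved out. */
static uint32_t usable_ram_bytes(void) { return unibus_space_bytes() - IO_PAGE_BYTES; }

/* Simplified translation: va bits 15-13 pick one of 8 PARs, the PAR holds a
   base address in 64-byte blocks, and the low 13 bits are the offset into
   the page. Page-length and access checks are omitted. */
static uint32_t mmu_translate(const uint16_t par[8], uint16_t va) {
    unsigned page = va >> 13;                       /* 0..7 */
    uint32_t offset = va & 017777u;                 /* 0..8191 */
    uint32_t pa = ((uint32_t)par[page] << 6) + offset;
    return pa & ((1u << UNIBUS_ADDR_BITS) - 1u);    /* wrap to 18 bits */
}
```

With a PAR value of 07600, virtual page 7 lands at physical 0760000, the start of the I/O page, which is how a kernel can reach the device registers from its 64 KiB window.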

He is comparing them as means of operating the microcontroller, so it makes perfect sense: many people program microcontrollers directly, without going through an operating system. A few decades ago, not using an operating system (“hitting the metal”) was the usual way, or you had an OS but wrote software that switched it off, so the “comparison” makes sense.

That brings back bad memories of the IBM PC 5150..😰

Originally, in the days of CP/M, programmers followed the golden rule that programs used the official APIs/ABIs. Programs back then even supported different terminal types. A little later, programmers started using low-level optimizations to make programs run better on the poorly performing IBM PC, and “MS-DOS compatible” PCs with a better architecture died off, because programmers no longer used, say, the PC BIOS or DOS to output text; instead, they accessed the text generator directly. This improved performance on the PC and PC compatibles, but it broke compatibility with machines like the Sirius 1 and other fantastic DOS machines. If only programmers/hackers had spent a bit more time on a generic binary or a second code path! That could have saved us all from the mediocre PC architecture we have today! *sigh*

If it had been open source it could have been recompiled. That’s what was missing.

Ironically, the hardware-dependent parts of CP/M (the BIOS) and MS-DOS (up to v3.x) were available in source code form and could be modified by manufacturers to adapt the OS to a variety of different machine types. In one way or another, CP/M-80 was a portable, platform-independent system. In practice, its only dependency was the CPU; programs needed no or only minor changes to run. And even that was overcome with CP/M-68K, CP/M-86, etc.

In addition, source code back then was written not only in C. It also was Assembly, Fortran, Basic, Pascal etc. Each in a different dialect, sometimes.

Open Source wasn’t the answer back then, I think. Just think of the waste of resources (disk space, libraries, memory) needed to have some sort of GCC. But ABI compatibility was real. :)

PS: Sorry for my poor English, it’s not my first language.

Never mind, I’m afraid I misunderstood your expression. 😅

I agree that the PDP-11 emulation is neat and features better application support than C alone.

Some, I think most, Unix distributions in the 1980s included source code. Not because they were open source, but because you had to be able to recompile the kernel to add drivers or switch a number of kernel features on or off. I worked at a company that made BSD 4.2-based electronic design automation tools, which ran on their own 68k hardware, Corvus, VAXstation, and Sun 3 computers. For the proprietary hardware there were only a few configurations, so we just copied a disk image, but on both the VAXstation and the Sun, system configuration could take hours and included compiling a kernel. IIRC, we eventually made disk images for all of the common configurations, but the point is, compiling kernels was just part of Unix life, and that required source code.

This makes no sense. Mention of C, then “Python is better”, and an operating system is best.

Is this about a PDP-11 emulation, or running an early iteration of Unix?

And then you’re back to C again.

Right. So you get to run C code in an emulator. Oh, joy.

Cool. The PDP-11 was a 16-bit architecture running at less than 1 MHz. It should go like crazy on a Teensy, by which I mean faster than the 300 baud DECwriter and 1200 baud VT100 terminals. 600 times faster or more, I would think.

It’s the emulation layer that kills performance, I suspect. How many Teensy opcodes are needed to do what the PDP-11 did in one operation, plus all the housekeeping emulation entails?
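Part of that housekeeping is the condition codes: most PDP-11 instructions update the N, Z, V and C bits of the processor status word, so an emulator pays for that on top of the operation itself. A minimal C sketch, assuming the standard PSW bit positions (C = bit 0, V = bit 1, Z = bit 2, N = bit 3); the helper names are hypothetical and not from the project.

```c
#include <stdint.h>

/* PDP-11 PSW condition-code bits. */
#define PSW_C 0x1u  /* carry */
#define PSW_V 0x2u  /* signed overflow */
#define PSW_Z 0x4u  /* zero */
#define PSW_N 0x8u  /* negative */

/* MOV: N and Z from the value, V cleared, C left unchanged. */
static void mov_flags(uint16_t *psw, uint16_t value) {
    uint16_t f = (uint16_t)(*psw & ~(PSW_N | PSW_Z | PSW_V));
    if (value & 0x8000u) f |= PSW_N;
    if (value == 0) f |= PSW_Z;
    *psw = f;
}

/* ADD: returns the 16-bit sum and recomputes all four condition codes. */
static uint16_t add_flags(uint16_t *psw, uint16_t src, uint16_t dst) {
    uint32_t wide = (uint32_t)src + dst;
    uint16_t res = (uint16_t)wide;
    uint16_t f = (uint16_t)(*psw & ~(PSW_N | PSW_Z | PSW_V | PSW_C));
    if (res & 0x8000u) f |= PSW_N;
    if (res == 0) f |= PSW_Z;
    /* Signed overflow: both operands share a sign that the result does not. */
    if (~(src ^ dst) & (src ^ res) & 0x8000u) f |= PSW_V;
    if (wide > 0xFFFFu) f |= PSW_C;              /* carry out of bit 15 */
    *psw = f;
    return res;
}
```

The add itself is one host instruction; the flag bookkeeping around it is several more, which is the kind of overhead the question is getting at.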

I like running PDP-8 emulation on a Pi Zero in a case the size of a pack of chewing gum with storage that would have been nearly impossible ‘back in the day’…

Teensy 4 is a 600 MHz ARM Cortex-M7. PDP-11/70 instructions take 1 to 5 cycles (I misremembered above; it’s about 1.2 MHz). The ARM can do most of them in 1 cycle and the two-address ones like MOV in a couple of cycles. It could also easily combine instructions if one wanted to, e.g. ARM instructions can be conditional, so you get a branch at the same time. Also, the barrel shifter can be added to any instruction. Plus, the pipeline and such give it more like 1.7 instructions per cycle. Simple interpreters like instruction emulators are also very efficient with the ARM instruction set. There is more than enough RAM on the Teensy to have a lookup table with code for every PDP-11 instruction. Just use the instruction as the lookup address.
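The lookup-table approach described here can be sketched in a few lines of C. This is a toy, not the project’s code (the author’s reply explains she favored portability instead): only HALT (000000) and register-to-register MOV (01SSDD with both mode fields 0) are decoded, condition codes are skipped, and anything else stops the machine. The table holds one function pointer per 16-bit instruction word, which is 256 KiB on a 32-bit target.

```c
#include <stdint.h>

/* Toy CPU state (hypothetical): R0-R7 with R7 as the PC, 64 KiB as 32K words. */
typedef struct {
    uint16_t r[8];
    uint16_t mem[32768];
    int halted;              /* 1 = HALT executed, 2 = unimplemented opcode */
} Cpu;

typedef void (*Handler)(Cpu *, uint16_t);

/* One handler per possible 16-bit instruction word. */
static Handler dispatch[65536];

static void op_halt(Cpu *c, uint16_t insn) { (void)insn; c->halted = 1; }

/* Anything this toy does not decode simply stops the machine. */
static void op_unimplemented(Cpu *c, uint16_t insn) { (void)insn; c->halted = 2; }

/* MOV Rs,Rd: register mode only; condition codes not updated in this sketch. */
static void op_mov_reg(Cpu *c, uint16_t insn) {
    c->r[insn & 07] = c->r[(insn >> 6) & 07];
}

/* Decode every word once, up front, so the run loop never decodes at all. */
static void build_table(void) {
    for (uint32_t w = 0; w < 65536u; w++) {
        uint16_t insn = (uint16_t)w;
        if (insn == 0)
            dispatch[w] = op_halt;                      /* HALT = 000000 */
        else if ((insn & 0170000) == 0010000 && (insn & 07070) == 0)
            dispatch[w] = op_mov_reg;                   /* MOV, both modes 0 */
        else
            dispatch[w] = op_unimplemented;
    }
}

/* Fetch the word at PC, advance PC, and jump through the table. */
static void run(Cpu *c) {
    while (!c->halted) {
        uint16_t insn = c->mem[c->r[7] >> 1];
        c->r[7] = (uint16_t)(c->r[7] + 2);
        dispatch[insn](c, insn);    /* the instruction word is the index */
    }
}
```

A program of `010001` (MOV R0,R1) followed by `000000` (HALT) copies R0 into R1 and stops with the PC at 4.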

But this wouldn’t be portable, which was part of my goal.

You could port this to PICs just by adding an entry in platform.h to say where LEDs are. Or run it on an AVR.

There are many things you could do to optimise it for a specific architecture, and I’ve seen PDP-11 emulators with a mechanism you described, but it wasn’t the way I wanted to go.

You’re also missing that instructions were only part of the process in an 11/40 (not an 11/70). The UNIBUS (which included the RAM and core memory) had a timing spec of *milliseconds*, these micros handle the emulated IO side so fast that any instruction speed loss is easily made up again by that. You don’t have to wait for disk access, for example.

It’s not just emulating instructions, it’s also emulating everything else.

The Teensy, sure, only gets 6x the (emulated) MIPS of the original 11/40, and you could get more out if you wanted, but it’s not exactly slow.

It’s indeed not many opcodes (though the code is explicit and does rely on the optimiser), but it’s also about keeping it portable.

In theory you could write an entry in the platform.h to get it to run on PICs, or use the one for AVRs, or whatever.

The Teensy at full whammy can run the emulated PDP-11 at about 6x the original speed, but the real improvement is I/O speed, because the real machines were *slow* when it came to disks and whatnot.

The UNIBUS has a timing spec in milliseconds!

I wrote a data logging application for a PDP-11/03 in 1980. It was programmed in Fortran-IV and hosted under RT-11.

This machine had 32K words of RAM and dual 8 inch floppy disk drives. The OS and application used one drive and data was logged to the other.

16 factory machines were logged each having up to 16 sensor outputs at a sampling interval of 1 second. RT-11 permitted a foreground and a background task. The data collection ran in the background while the OS was available to run a reporting program to provide data summaries, in printed tabular format, on demand.

That wasn’t a bad implementation for such limited hardware, by today’s standards. What I could have done with a modern ESP32, were it available to me at that time.

I am all about C, unix, and operating systems, so I am reading this with eager interest, but like some others I don’t see how Python found its way into this discussion. It does have its place of course, but this probably ain’t it.

I am always the odd man out, but for me programming in C is the most natural, easy, and instinctive thing there is, but I won’t tell you how many years of adventure reside behind that. But the older I get, the less interest I have in these “my favorite thing is better than your favorite thing” discussions. I just say this to point out that some people find C to be rainbows and sunsets.

Now when I read the title, I thought, “cool, I have some Edition 7 Unix source lying around someplace that is supposed to run on a PDP-11”. I could waste (or should we say invest) several weeks fooling around with that via this emulator. It is worth pointing out that Unix performance on a real PDP-11 was not something that would knock you over if you are spoiled by today’s crop of hardware.

I haven’t actually got V7 to boot properly yet 😅

But there’s a modified version of V6 in my repo that has a library from 2BSD that should allow you to compile V7 source on V6.

Reminds me of squeals of “who’s compiling??” when 20 students (including me!) were trying to edit their code back in the day…

Some of the arguments for Python revolve around there being so many libraries available for it. Most of them are written in C, but that’s not the point. The point is, people often choose a programming language for its ecosystem, and because people who are afraid of C seem to embrace Python, Python has a rich ecosystem.

Yeah, I know: if those Python libraries are written in C, then shouldn’t they also link with C programs?

Some of us are not afraid of C, we just hate it. Mostly for the simple things it makes complicated, especially hardware interfacing, due I think to the programmer’s model and pointers, pointers versus arrays, strings terminated by a NUL character, etc.
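For readers who haven’t run into these: C strings are plain `char` arrays ending in a NUL (`'\0'`) byte, and an array quietly turns into a pointer in most expressions and in function parameters, so `sizeof` stops meaning what you might expect. A small illustration; the function names exist only for this example.

```c
#include <stddef.h>
#include <string.h>

/* An array initialised from a literal includes the NUL terminator. */
static size_t array_size(void) {
    char name[] = "PDP-11";
    return sizeof name;          /* 7: six characters plus '\0' */
}

/* A pointer to the same text: sizeof reports the pointer, not the text. */
static size_t pointer_size(void) {
    const char *name = "PDP-11";
    return sizeof name;          /* sizeof(const char *), e.g. 8 on 64-bit hosts */
}

/* Array parameters decay to pointers, so this is also sizeof(const char *). */
static size_t param_size(const char s[]) {
    return sizeof s;
}

/* strlen stops at the first NUL, even if the array holds more bytes. */
static size_t embedded_nul_len(void) {
    char buf[] = "ab\0cd";
    return strlen(buf);          /* 2, while sizeof buf is 6 */
}
```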

Anyway, for me portability sounds great but almost never turns out to be important, so Python can be optimized in a number of ways. For example MicroPython can be sped up right down to the assembly level.

Confusing write-up aside, this is really cool.

I was looking at the list of working and in-progress modules though, and couldn’t help but wonder why there aren’t any parallel interface cards listed. Seems like with all those I/O pins, that would be a likely next step, no?

Hi! This is my project

The plan is indeed to break out the bus to a parallel interface, after all that’s how the unibus worked.

I’ve actually been designing a PCB to go with this, but haven’t finished deciding on a spec yet.

If you check out the to-do list it’s somewhere on there.

Neat, thanks for clarification, and for sharing your work!

There was a fella who wrote an ARMv5 CPU emulator for an old 8-bit ATmega and hooked up a stick of ancient RAM and an old SD card.

It booted a very old version of Ubuntu to the console in a couple of hours though.

http://dmitry.gr/?r=05.Projects&proj=07.%20Linux%20on%208bit

Neat! I am sure there is a lot of work to make it all happen. Amazing the ‘power’ we have at our finger tips in such small packages and low price. Nobody should be complaining! Just about anything is possible.

To the left of where I am typing, I have my Obsolescence PDP-11/70 chugging away. There’s something about seeing a computer with ‘lights’ :). What I find interesting is that I can run the simulator plus a lot of other tasks in the background and it doesn’t even break a sweat. This one is powered by an RPi 4 8GB booted off a 500 GB USB 3.0 SSD: a Pi 4 with a front panel. Anyway, I think it is so ‘cool’ and fun to play with every now and then. For example, I’ll key in a little boot program from the front panel (back then there was no BIOS), then run it to boot an OS like RT-11 or SysV. :)

https://obsolescence.wixsite.com/obsolescence/pidp-11

Love the blinkenlights. LOVE the toggle switches. TBH, I’d do terrible things for an ARM computer that could be booted from switches. My first computer build was a Z-80 machine with a lights-and-switches panel.

rclark, that is very, very cool.

This reminds me of the NuttX operating system, which was intended to bring Unix to microcontrollers.

NuttX is used in PX4 to stand in for Unix on its microcontroller (usually STM32) hardware.

The problem is the overhead of the IOCTL abstraction, which adds up to a lot on an I/O bound microcontroller.
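To illustrate where that overhead comes from, here is a hypothetical C sketch (not NuttX’s actual driver API): a direct register write compared with the same operation routed the Unix way, through a descriptor table, a driver operations struct, and a generic `ioctl`-style command switch with an untyped argument. Each layer is cheap, but in an I/O-heavy control loop the indirections and checks are paid on every call.

```c
#include <stdint.h>

/* Stand-in for a memory-mapped GPIO output register (hypothetical). */
static volatile uint32_t gpio_out;

/* Bare-metal style: a single read-modify-write of the register. */
static inline void gpio_set_direct(uint32_t mask) { gpio_out |= mask; }

/* Unix style: generic command codes and an untyped argument. */
enum { GPIO_IOC_SET = 1, GPIO_IOC_CLEAR = 2 };

struct dev_ops { int (*ioctl)(int cmd, unsigned long arg); };

static int gpio_ioctl(int cmd, unsigned long arg) {
    switch (cmd) {                       /* decode the command at run time */
    case GPIO_IOC_SET:   gpio_out |= (uint32_t)arg;  return 0;
    case GPIO_IOC_CLEAR: gpio_out &= ~(uint32_t)arg; return 0;
    default:             return -1;      /* unknown command */
    }
}

static const struct dev_ops gpio_ops = { gpio_ioctl };

/* Descriptor table: fd 3 is the GPIO device in this example. */
static const struct dev_ops *fd_table[4] = { 0, 0, 0, &gpio_ops };

/* Bounds check, table lookup, indirect call, then the switch above. */
static int dev_ioctl(int fd, int cmd, unsigned long arg) {
    if (fd < 0 || fd >= 4 || fd_table[fd] == 0 || fd_table[fd]->ioctl == 0)
        return -1;
    return fd_table[fd]->ioctl(cmd, arg);
}
```

Both paths end in the same register update; the difference is everything that happens before it.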

A dedicated RTOS such as FreeRTOS or ChibiOS is much more efficient on a microcontroller.

ArduPilot also used NuttX once upon a time, with the goal of Unix compatibility, but later realised that Unix is not really designed for real-time use and switched to ChibiOS for microcontrollers.

In-depth video of the ArduPilot experience here: https://www.youtube.com/watch?v=y2KCB0a3xMg

Fun project! My retro-fuse project (https://github.com/jaylogue/retro-fuse) may be of interest to those looking to access or populate v6 disk images for emulation (… or v7, or soon 2BSD). It even includes mkfs functionality.

I’d be interested to hear whether the author considered running v6 natively rather than via emulation. My retro-fuse code incorporates the original Unix filesystem code, modernized to compile with current gcc/clang. The modernization effort was not all that difficult, and as I was doing it I began to see how one could go about modernizing the rest of the code. Without an MMU of some sort, it might make sense to start with MiniUnix (a v6 variant that runs without an MMU). Or perhaps an MMU-less solution could be fashioned using -fPIC. Of course, there are plenty of things in v6 that are written in PDP-11 assembly (mkdir, for example). But C versions of many of these could be back-ported from later Unix versions relatively easily.

Taking this approach, I could imagine a system where a minimal Unix OS is used as a shell in a modern embedded project. I’ve worked on many embedded projects where it was helpful to have a shell interface for inspecting device state, or for orchestrating hardware testing. I could even see Unix running as a single task within an RTOS (this would be straightforward with FreeRTOS). This would allow the embedded application proper to be written in a modern embedded style, while providing a Unix-like shell for developer interaction.
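A full Unix is the interesting version of this idea, but the core of a developer shell for inspecting device state can be as small as a command table. A minimal C sketch; the commands (`uptime`, `led`) and the state they touch are hypothetical, and in an RTOS `shell_exec` would be called from its own task with lines read from a UART.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical device state the shell can inspect or change. */
static unsigned uptime_s = 42;
static int led_on = 0;

typedef int (*cmd_fn)(const char *arg, char *out, size_t outlen);

static int cmd_uptime(const char *arg, char *out, size_t outlen) {
    (void)arg;
    snprintf(out, outlen, "up %u s", uptime_s);
    return 0;
}

static int cmd_led(const char *arg, char *out, size_t outlen) {
    if (strcmp(arg, "on") == 0) led_on = 1;
    else if (strcmp(arg, "off") == 0) led_on = 0;
    else { snprintf(out, outlen, "usage: led on|off"); return 1; }
    snprintf(out, outlen, "led %s", led_on ? "on" : "off");
    return 0;
}

static const struct { const char *name; cmd_fn fn; } commands[] = {
    { "uptime", cmd_uptime },
    { "led",    cmd_led },
};

/* Split "name arg" at the first space and dispatch by name. */
static int shell_exec(const char *line, char *out, size_t outlen) {
    char name[16];
    const char *space = strchr(line, ' ');
    size_t n = space ? (size_t)(space - line) : strlen(line);
    const char *arg = space ? space + 1 : "";
    if (n == 0 || n >= sizeof name) { snprintf(out, outlen, "bad command"); return -1; }
    memcpy(name, line, n);
    name[n] = '\0';
    for (size_t i = 0; i < sizeof commands / sizeof commands[0]; i++)
        if (strcmp(commands[i].name, name) == 0)
            return commands[i].fn(arg, out, outlen);
    snprintf(out, outlen, "%s: not found", name);
    return -1;
}
```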

Hey, I’m the author of this project ;)

I actually submitted an issue on retro-fuse about date stamps because I’ve been using it to tweak my V6 installation that I run on my emulator!

I did, yes, consider just porting and running it directly, but there are *a lot* of source files to port, and tbh porting interests me less than writing.

I have ported other vintage Unix things to modern *nix, and the shell on my laptop right now is essentially a port of the V6 Thompson shell with some tweaks to make it more comfortable for a modern user, like the cwd in the prompt string.

I had created a *nix-like OS for micros (my GitLab has the early start of my generic-izing of that), and yeah, -fPIC was one of the things I used in the original to load programs.

I’m actually an EE rather than a software engineer, but my work includes managing the OS for a micro, and on that I’ve got a pretty complete shell experience running in parallel with the embedded tasks.

Oh! And if people are interested, there is a project out there (not mine) called RetroBSD, which is a true port of 2.11BSD to PIC32s!

So how do I connect my ASR-33 teletype for output?

Change the default baud rate in KL11.h to match the ASR-33, add a TTL-to-RS-232 level shifter, and plug it into the standard serial output on the micro board. 🤷🏻‍♀️

(Actually, you might have to do a find and replace Serial to Serial1 on some boards)

Or just use a 100R resistor to generate the current loop straight off the TTL output, instead of going to RS-232 and then to current loop. Either way works.
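For sizing expectations: the ASR-33 runs at 110 baud with an 11-bit character frame (1 start bit, 8 data bits, 2 stop bits), so it prints 10 characters per second. The arithmetic as a trivial C helper; the names are illustrative and do not come from KL11.h.

```c
/* ASR-33 framing: 1 start bit + 8 data bits (7-bit ASCII plus parity) + 2 stop bits. */
#define ASR33_BAUD       110u
#define ASR33_FRAME_BITS (1u + 8u + 2u)

/* Characters per second for a given line rate and frame length. */
static unsigned chars_per_second(unsigned baud, unsigned frame_bits) {
    return baud / frame_bits;
}
```

Compared with the 960 characters per second of a typical 9600 baud 8N1 link (10 bits per frame), that is 96 times slower.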

“While Python is arguably more straightforward, sometimes the best choice is to work within a full-fledged operating system”

– that’s why I run Unix V6 on top of Python in my PyPDP11 project.

We’ve been running 2.11 BSD, natively, on the PIC32 for years now. Maybe your Internet is unplugged from the rest of the Internet? :)