Technology moves quickly these days as consumers continue to demand more data and more pixels. We see regular updates to standards for USB and RAM continually coming down the pipeline as the quest for greater performance goes on.

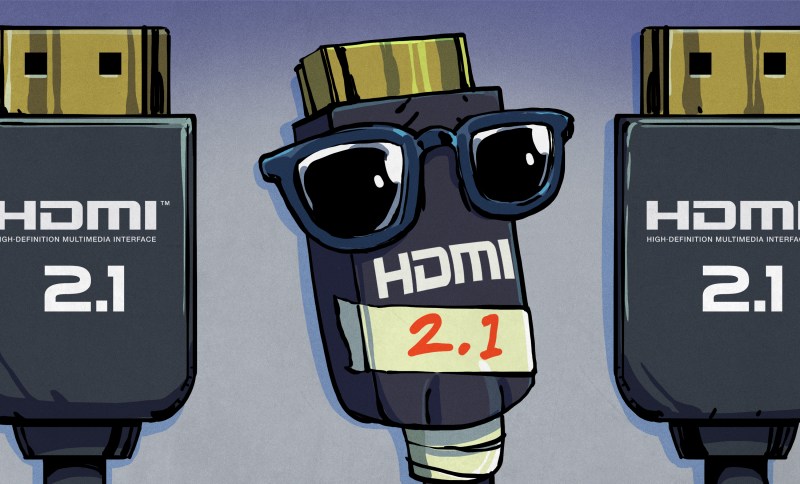

HDMI 2.1 is the latest version of the popular audio-visual interface, and promises a raft of new features and greater performance than preceding versions of the standard. As it turns out, though, buying a new monitor or TV with an HDMI 2.1 logo on the box doesn’t mean you’ll get any of those new features, as discovered by TFT Central.

The New Hotness

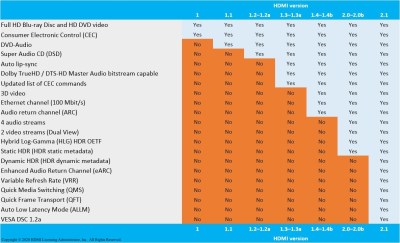

HDMI 2.1 aimed to deliver multiple upgrades to the standard. The new Fixed Rate Link (FRL) signalling mode is the headline piece, providing up to 48 Gbps bandwidth, a major upgrade over the 18 Gbps possible in HDMI 2.0 using Transition Minimised Differential Signalling, or TMDS. TMDS remains a part of HDMI 2.1 for backwards compatibility, but FRL is key to enabling the higher resolutions, frame rates, and color depths possible with HDMI 2.1.

Thanks to FRL, the new standard allows for the display of 4K, 8K, and even 10K content at up to 120 Hz refresh rates. Display Stream Compression is used to enable the absolute highest resolutions and frame rates, but HDMI 2.1 supports uncompressed transport of video at up to 120 Hz for 4K or 60 Hz for 8K. The added bandwidth is also useful for running high-resolution video at greater color depths, such as displaying 4K video at 60 Hz with 10 bit per channel color.
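To get a feel for why the extra bandwidth matters, here is a rough back-of-the-envelope sketch in Python. It assumes 8b/10b line coding for TMDS and 16b/18b line coding for FRL, which are not stated above, and it counts only the visible pixels, ignoring blanking intervals, so real links need somewhat more headroom than these figures suggest. The function and mode names are made up for illustration.

```python
# Link rates quoted in the article, in Gbps.
TMDS_LINK_GBPS = 18.0  # HDMI 2.0 using TMDS
FRL_LINK_GBPS = 48.0   # HDMI 2.1 using FRL

# Assumption: usable payload after line coding (8b/10b for TMDS, 16b/18b for FRL).
TMDS_PAYLOAD_GBPS = TMDS_LINK_GBPS * 8 / 10
FRL_PAYLOAD_GBPS = FRL_LINK_GBPS * 16 / 18

# Average number of samples carried per pixel for common chroma formats.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def active_video_gbps(width, height, refresh_hz, bits_per_channel, chroma="4:4:4"):
    """Uncompressed data rate of the visible pixels only (blanking ignored)."""
    bits_per_pixel = bits_per_channel * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    # Hypothetical example modes: (width, height, Hz, bits per channel, chroma).
    modes = {
        "4K 60 Hz 8-bit": (3840, 2160, 60, 8, "4:4:4"),
        "4K 60 Hz 10-bit": (3840, 2160, 60, 10, "4:4:4"),
        "4K 120 Hz 10-bit": (3840, 2160, 120, 10, "4:4:4"),
        "8K 60 Hz 10-bit": (7680, 4320, 60, 10, "4:4:4"),
        "8K 60 Hz 10-bit 4:2:0": (7680, 4320, 60, 10, "4:2:0"),
    }
    for name, args in modes.items():
        rate = active_video_gbps(*args)
        print(
            f"{name}: {rate:.2f} Gbps, "
            f"fits TMDS: {rate <= TMDS_PAYLOAD_GBPS}, "
            f"fits FRL: {rate <= FRL_PAYLOAD_GBPS}"
        )
```

Under these assumptions, 4K at 60 Hz with 10-bit color comes to roughly 14.9 Gbps, just over what an 18 Gbps TMDS link can carry as payload, while 8K at 60 Hz with full 4:4:4 10-bit color lands well above the FRL payload and only fits with chroma subsampling or compression.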

Also new is the Variable Refresh Rate (VRR) technology, which helps reduce tearing when gaming or watching video from other sources where frame rates vary. Auto Low Latency Mode (ALLM) also allows displays to detect if a video input is from something like a game console. In this situation, the display can then automatically switch to a low-latency display mode with minimal image processing to cut down on visual lag.

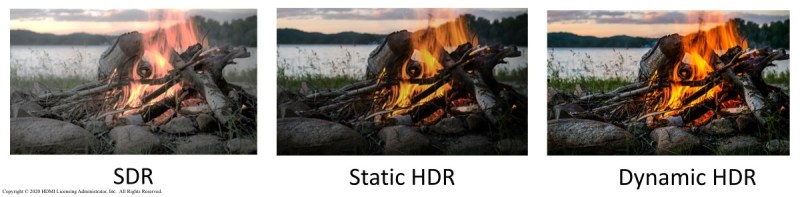

A handful of other features were included too, like Quick Media Switching to reduce the time blank screens are displayed when swapping from one piece of content to another. There’s also special Dynamic HDR technology which can send data for color control on a frame-by-frame basis.

Overall, HDMI 2.1 offers significant improvements in terms of resolutions and frame rates that are now possible, and this is paired with a raft of updates that promise better image quality and cut down on lag.

Not Necessarily Included

The problem with the new standard became clear with the release of a Xiaomi monitor which touted itself as HDMI 2.1 compatible. Despite what it says on the box, however, the monitor included absolutely nothing from the new HDMI standard. No higher resolutions or frame rates were available, and neither were the useful VRR or ALLM features. Instead, everything the monitor could do basically fell under the HDMI 2.0 standard.

Amazingly, this is the appropriate thing to do according to the HDMI Licensing Administrator. In response to queries from TFT Central, the organization indicated that HDMI 2.0 no longer exists and that devices could no longer be certified as such. Instead, HDMI 2.0 functionality is considered a subset of HDMI 2.1, and new devices must be labelled with the HDMI 2.1 moniker. All the new features of HDMI 2.1 are considered “optional,” and it is up to consumers to check with manufacturers and resellers as to the actual specific features included in the device.

Other embarrassments have occurred too. Yamaha has had to supply replacement boards for “HDMI 2.1” receivers that were unable to handle the full 48 Gbps bandwidth of the standard, leading to black screens when customers attempted to use them with high-resolution, high-framerate sources. Even with the upgrade, the hardware still only supports 24 Gbps bandwidth, leaving it unable to provide the full benefits of HDMI 2.1 functionality. Other manufacturers have faced similar woes, surprisingly not testing their hardware in all display modes prior to launch.

The result? Forum posts full of alphabet soup like “4K+120Hz+10-bit+HDR+RGB+VRR” and “8K+60Hz+10-bit+HDR+4:2:0+VRR” as people desperately try to communicate the precise feature sets available in given hardware. If only there were some overarching standard that unambiguously indicated what a given piece of hardware could actually support!
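For anyone trying to compare such strings across a pile of forum posts, a small parser makes the soup a little easier to digest. The following is only a sketch: the `Mode` class, its field names, and the token patterns are assumptions based on the two example strings above, not any official notation, and the chroma token is accepted with either `:` or `.` as the separator.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Mode:
    """Hypothetical container for one advertised display mode."""
    resolution: str = ""
    refresh_hz: int = 0
    bit_depth: int = 0
    pixel_format: str = ""
    extras: set = field(default_factory=set)


def parse_mode(text):
    """Split a '+'-separated capability string into structured fields."""
    mode = Mode()
    for token in text.split("+"):
        token = token.strip()
        if re.fullmatch(r"\d+K", token):
            mode.resolution = token
        elif m := re.fullmatch(r"(\d+)Hz", token):
            mode.refresh_hz = int(m.group(1))
        elif m := re.fullmatch(r"(\d+)-bit", token):
            mode.bit_depth = int(m.group(1))
        elif token == "RGB" or re.fullmatch(r"4[:.]\d[:.]\d", token):
            # Normalise chroma subsampling to the usual colon notation.
            mode.pixel_format = token.replace(".", ":")
        else:
            # Anything unrecognised (HDR, VRR, ...) is kept as a feature flag.
            mode.extras.add(token)
    return mode


if __name__ == "__main__":
    print(parse_mode("4K+120Hz+10-bit+HDR+RGB+VRR"))
```

Once the strings are structured like this, two devices can be compared field by field instead of by eyeballing the labels.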

A Poor State of Affairs

Confusion around the functionality included in new standards is not a new thing; USB has had its own fair share of troubles in recent years. However, the USB-IF at least managed to make the names of its standards mean something; there are just too many of them, and it gets really confusing.

As for HDMI though, the decisions made muddy the waters by not deciding. It’s difficult to understand why the HDMI Licensing Administrator could not have kept handing out HDMI 2.0 certifications for devices that didn’t implement anything from HDMI 2.1.

In any case, the organization has made it clear that this is the way forward. Speaking to The Verge, spokesperson for HDMI.org Douglas Wright confirmed that devices cannot be certified for the HDMI 2.0 standard any longer. As for the confusion sown into the marketplace, Wright simply responded that “We are all dependent on manufacturers and resellers correctly stating which features their devices support.”

So, what is one to do when shopping for a new monitor, television, or games console? Simply checking if the device has “HDMI 2.1” will not be enough to guarantee you any particular level of functionality. Instead, you’ll have to make yourself familiar with the specific features of the standard that are important to you, as well as the resolutions, frame rates, and color depths that you intend to run your hardware at. Then, you’ll have to hope that manufacturers and resellers actually publish detailed specifications so you can check you’re getting what you really want.
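In practice, that check boils down to comparing the features you need against what every device in the signal chain actually supports, since one weak link is enough to spoil the result. A minimal sketch of that comparison, with entirely hypothetical device names and feature labels, might look like this:

```python
def missing_features(required, chain):
    """Return a dict mapping each device to the required features it lacks."""
    gaps = {}
    for name, supported in chain.items():
        lacking = required - supported
        if lacking:
            gaps[name] = lacking
    return gaps


if __name__ == "__main__":
    # Hypothetical wish list and spec-sheet data, for illustration only.
    required = {"4K120", "10-bit", "VRR"}
    chain = {
        "console": {"4K120", "10-bit", "VRR", "ALLM"},
        "receiver": {"4K60", "10-bit"},
        "tv": {"4K120", "10-bit", "VRR", "ALLM"},
    }
    for device, lacking in missing_features(required, chain).items():
        print(f"{device} is missing: {', '.join(sorted(lacking))}")
```

Here every device could carry an “HDMI 2.1” label, yet the receiver in the middle would still block 4K at 120 Hz and VRR. The hard part, of course, is getting trustworthy feature lists to feed in.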

It seems like an unnecessarily painful state of affairs, but sadly that’s just the way it is. One can speculate that it was commercial pressure that drove the decision, with neither TV manufacturers nor retailers wanting to be stuck with “old” HDMI 2.0 stock on the shelf in the face of newer models that “support” a new standard. Alternatively, there may be some other arcane reasoning that we’re yet to understand. As it is, though, make sure you’re checking carefully when you’re next purchasing hardware, lest you get home and hook everything up, only to be disappointed.

Caveat Emptor! Or as the manufacturers and standards group are implying, “Chuck you, Farley!”

Yep. What a disgrace. The entire display industry should’ve moved to DisplayPort years ago.

“HDMI 2.0 no longer exists… the new capabilities and features associated with HDMI 2.1 are optional”

Up yours, HDMI industry group.

First world early adopters problem. I’ll sit back and wait for the technology to hit main stream at lower price brackets.

There are more important things than to spend all your money to “upgrade” to keep up with the latest bling. e.g. getting out of the rat race early, perhaps?

It’s a problem for the first world and early adopters now, but when it “hits the main stream” at lower price brackets it’ll be a problem for everyone else, and it’ll be a problem for you.

If your only available standard is always bleeding edge, your prices will never drop and it will never be mainstream. 1.4 is perfectly fine for most consumers. 48 billion bits of data per second will not be any better on a 40″ LED UHD screen.

Not an early adopter problem. This is the same problem that plagues USB, all it’s done is migrate to HDMI. MFGs know consumers won’t know the difference, they’ll buy thinking their product is “new hotness” when it’s still the old standard. If you think this is just going to exist for early adopters you’re either ignorant of history, or willfully ignoring it. MFGs will just keep turning out the same hardware, slapping the new logo on it with no explanations at all and this will continue indefinitely, just like it does with USB devices and cables. Only engineers will be able to tell the difference because they’re the only ones with the test beds to find out who’s doing what – and they may or may not publish any results.

The screw up is HDMI’s steering group, and it was an intentional middle finger to all consumers.

So basically “USB 2.0 Full Speed” and “USB 3.1 Gen 1” all over again?

Also, wouldn’t it be possible to work around the AV receiver issue by having the display forward the audio using ARC or using a second HDMI cable to use another HDMI output on the GPU as audio output?

Worse news: an HDMI 1.0 transmitter, correctly received by other HDMI 1.0 receivers, is refused by some HDMI 2.1 receivers.

In detail: I have this wonderful 75″ TV/monitor (Xiaomi with full Google software) that cannot display data that ordinary ancient monitors correctly display.

So sad.

What a mess, again. Standards design by commercial interests each having their own agenda to push and competitors to quash.

This is already a problem if you are looking for HDR or 4k compatibility. Xmas morning the kids’ games complained they weren’t in full HDR mode – turns out the receiver can’t handle passthrough, and it’s not clear any available new ones support it either. F you if you have a use case beyond 2 devices. Add eARC and other complexities and it’s a huge mess.

Products such as the AVPro Edge AC-MX-42X are designed specifically to solve this problem. You’ll need to update receivers to one that supports HDMI 2.1 or use a product like this to output a scaled down signal older receivers can handle.

Wouldn’t it be good if they made a specific variety of HDMI a requirement for the new certification so it’s not all up to going over the spec sheet?

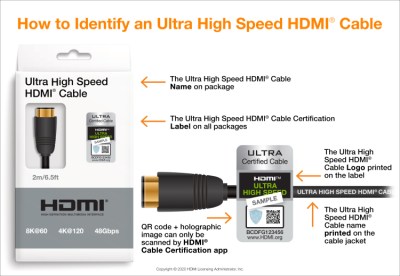

I remember the early days of HDMI, when I carefully checked all the packaging to make sure the cables could do what was required. Of course all of that turned out to be pretty much meaningless, at the time.

Turn the clock forward, and I have to go through that again, only this time it actually could matter.

Clearly. But the HDMI standards-setting body is clearly a marketing-scam enabler and not a standards-setting body at all.

Let’s not pretend HDMI hasn’t been an unreliable mess from the start anyway.

There’s a reason I had to transition my grandma (with bad eyesight)’s TV back to component video.

What would all these tech publications that are complaining about this suggest instead? I haven’t seen any recommendations by them, just complaints. Should it be 10 different specs that are all dependent on each other specification to work? It makes more sense for them to all be in one specification to me since many of the features depend on the specification of the others in order to work.

The HDMI 2.1 specification clearly lays out all the features, and the display devices tell the source devices what features they support.

Older versions of specs weren’t torn apart like this either, HDMI ethernet was optional, eARC is optional in HDMI 2.0 etc.

What is the reason everyone is gung-ho about this particular release?

Because HDMI 2.1 doesn’t mean HDMI 2.0 with extra features. It means the same as HDMI 2.0 but it COULD POSSIBLY MAYBE have one or more extra features. So it’s equivalent to 2.0. Therefore it misleads Joe Customer.

The alternative is retain HDMI 2.0 as a certification and make some sort of additional features a requirement of HDMI 2.1 certification.

Counter point that if you’re using VRR (variable refresh rate), QFT (quick frame transport), high FPS/resolution (4K 120Hz / 8K+), QMS (quick media switching) you are a power user and should be checking that each of your installation/setup equipment supports all of those features anyway.

The average consumer won’t use those features let alone know what they are, it’s irrelevant.

My needs are more modest. Until this fiasco, I have always simply looked for HDMI 2.0 and an alphabet when I needed to make sure I could get 4k resolution at 60hz. It appears I was merely lucky that that got me the features I expected. You can bet I would have been returning both monitor and GPU that claimed to be HDMI 2.0 if I couldn’t get them to talk at 60hz in 4k.

I’m pretty sure that Joe Customer doesn’t even understand any of those new features, but is fond of the idea that a bigger number means better at something.

so you’re spot on, but somehow this is – sadly – how the world works nowadays.

Perhaps the appropriate labeling would be “HDMI 2.0+” followed by a list of supported features until the device achieves complete support for “HDMI 2.1”. Oh wait, that’s what “4K+120Hz+10-bit+HDR+RGB+VRR” is supposed to do… but is labeled as 2.1. Hmmm…

Let’s all assume that there are no 2.1 devices until 2.2 comes out. Only then might we be able to depend on the 2.1 labeling (and maybe not even then).

There are no good simple solutions.

Okay, so why didn’t people complain when dealing with ARC and eARC (which is a HDMI 2.1 feature supported on most late 2.0 devices)?

Most features of 2.1 can be supported by 2.0 devices without an issue, just restrictions on implementation of the manufacturer via hardware/software support. The primary change with 2.1 is the addition of new available features and FRL (fixed rate link). FRL is only required for certain bandwidth video content anyway so again, pick the right products for the features you want and don’t tie the support to specific versions of HDMI 2.0 vs 2.1 because they are capable of doing both. If you see DP v2.0 does that mean that product must support 8k 10 bit in order to claim DP v2.0? No, because it doesn’t make sense.

The big change between HDMI 2.0 and (full) 2.1 is the increased data rate from 18Gb/s to 48Gb/s. The extra bandwidth is needed to support 10K/120Hz mode. While there’s nothing on the market that can handle that yet, there are many devices that will manage 4K/120Hz and anyone who sees HDMI 2.1 on the label has a reasonable right to expect that.

So it is reasonable to expect devices labeled with DisplayPort 2.0 to support full 77.4 Gbps that the spec calls out?

Since DP 1.4 can handle 1 4K screen at 120Hz then it’s not unreasonable to expect anything claiming to be DP 2.0 to handle 2 at the same resolution. Whether or not it manages the full bandwidth is moot, the important bit is that the limits have improved and you can use the improvements on current hardware, otherwise you might as well have bought DP 1.4 hardware for less money.

With DP however you ARE getting improved bandwidth if you upgrade to 2.0, not just 1.4 hardware with the badge crossed out in crayon and a couple of (optional) software tweaks.

The very fact that you do not see a problem with this is enough for me to hope that you never end up in a position to create standards.

There are already too many authists in that position in the world, so we end up with a more complex world instead of a clear and simpler one.

Do people ever learn from the past? Apparently not.

You haven’t provided any good information why this standard alone is being called out for the very same thing every other standard does: layered support.

The minimums are well defined and many 2.0 products support the 2.1 specifications so just call them 2.1 like they are…

Normal users shouldn’t be using any of those extra features anyway so if you want to use them you should be checking your hardware for it for optimal performance.

Let’s not even discuss HDR spec vs real performance on displays called “HDR” by marketing teams who know it doesn’t meet the spec (especially brightness/contrast) just because it can process an HDR signal.

Let’s not talk about the many DP 2.0 devices that don’t support 77.4 Gbps despite that being “the spec”. Yet those are still certified and people are expected to look to their GPU and monitor to determine support.

So again: why is this just a thing NOW and with this specification?

With that many options that need to be supported by both devices in order to work, the only thing that is guaranteed to the user is the physical plug, maybe. That is, the user could look at 1 million combinations of devices and never find any that work together, in spite of what looks like an interface standard that effectively says “You go and figure it out.” Even the plug might not work; both devices might refuse to use the cable unless the cable supported that particular transport layer.

The usual suggestion is to mark the output with all the features, and if the variability of features is too much to conveniently mark them, then it’s not a standard, it’s the Tower of Babel, which turned out to have a bad outcome.

In the old days one could look at a VCR and see if it had composite outputs and the TV to see if it had composite inputs. But thank marketing they can hide all of that so the consumer cannot immediately reject a possible purchase just by looking at the device. Now they can go over the 100 page manual to see if it supports Audio X.14.g.25/b with the 75 kHz/channel in Derpy format 80.a.2020 so that some sound might come out. Sure – the equipment will automatically do that handshake and tell the user MEDIA NOT SUPPORTED in foot high letters on their $1000 monitor. And that’s the message consumers love to see.

The whole point of standards certification is so that the consumer doesn’t have to dig deep to know if their device is compatible. If an end product says “requires HDMI 2.1” and all my relevant hardware is certified HDMI 2.1, that should be the end of it. I shouldn’t have to go looking to see if it’s ALL of 2.1 or investigate WHICH PARTS of 2.1. Why even have standards certification at that point?

If I’m going to have to do a feature by feature comparison of every link in my hardware chain anyway, then the certification has provided little value.

Just off the top of my head, but maybe a system like HDR? VESA has tried to clean that up a tad by adding subgroups to the standard, so HDR 400, HDR 600, etc .. because the HDR label had become watered down and unable to fully indicate *what* it meant to the consumer as manufacturers quickly slapped HDR on all their boxes.

So instead of everyone having to track each individual feature set that is part of HDMI 2.1 for a particular model of device / cable .. maybe have subgroup labels of [related and likely to be included together] feature sets? Not many, but binned groups. If you don’t include all features of that group, you fall back into the tier that you do support. E.g. HDMI 2.1 Legacy (essentially the 2.0 stack), HDMI Full (the entire 2.1 stack), and possibly something in-between.

So so sad. That’s like saying you have Sars. OK Whitch One? (I know I always use the wrong Whitch)

I would like to use other words but then this would not get posted.

HDMI-Δ or HDMI-Omicron?

Oh not again. I hate optional features on standards, though last time I got burned by it was with the Intel HD 4000 that claimed to support HDMI 1.4. In theory that would support 4K 30Hz or the more useful 2560×1440 60Hz, but no, it couldn’t hit the data rate required for either of those.

If they want optional bits of the standard can they at least make the data rate mandatory and then give everything else its own logo or trademark? We support HDMI 2.1 (data rate) and HDMI 2.1 VRR, HDMI 2.1 Flossing Extension or whatever.

So, is anyone keeping track of how many TVs and monitors were returned because they didn’t support any of the new 2.1 features even though they were labeled as 2.1 compliant? That’s going to suck for stores dealing with returns and manufacturers stuck with support bogged down with “It says 2.1 compliant but it won’t do this or that feature” or getting stuck with multiple returns that are otherwise perfectly fine with original 2.0??

Who does still use HDMI and why? It seems to me that the whole previous decade DP was always at least one year ahead with real technological capabilities while being royalty free.

DP doesn’t support ARC and eARC type support so no going from a sync device back to a source device (i.e. TV with built in streaming service sending audio back to the audio video receiver over HDMI).

Also in the pro av industry there aren’t any DP extenders to go 100m, no display port switches, etc.

Would require entirety of a multi billion $$ industry to shift and change in sync which won’t happen.

FYI: Sink device as in something that accept a signal from a source. e.g. current sink/source

not Sync (Synchronous). It gets confusing when you are talking about video signals.

FYI: Just remember the kitchen Sink vs the tap as the source. That’s exactly how the word is used.

No one says kitchen sync.

DP implementations seem more prone to bugginess (disconnected/powered off monitors on running devices never going live again on reconnection being a common one I’ve seen), as well as just being less widely available – if your projector/phone/laptop etc has a built in connector it’s almost always HDMI in one size or other. DP might exist as well but it’s not as common, which leaves people frequently having to use HDMI some of the time, so you might as well just use HDMI all of the time. That means when you need that long 8m cable, a spare one to connect up the new device, or whatever, odds are you will have one in your pile-o-junk/spares. It may not be up to spec, but it will still at least work, which is really all that matters much of the time (you can get a cable that is up to spec later if required).

It’s just a money grab by HDMI cert. But when it gets to the point that –

“We are all dependent on manufacturers and resellers correctly stating which features their devices support.”

Then there is absolutely no point in paying for an HDMI cert. The death spiral is on its way.

I had to laugh at “Transition Minimised Differential Signalling”

It’s sounding more and more analogue with each transition.

It’s an embarrassing law of physics that an analogue signal will always require less bandwidth for that same data rate. Or written conversely, data can be sent down a carrier more efficiently in analogue.

High speed digital comms is always very analog at the transport layer. TMDS is just a differential pair with 8b/10b encoding to guarantee clock recovery. Even so, there is lots of analog processing needed to handle it at 18Gb/s. The digital signal so encoded is however much more robust than a pure analog one, where issues like reflection and signal noise will render the pure analog signal unusable far before the digitally encoded one.

“Instead, HDMI 2.0 functionality is considered a subset of HDMI 2.1, and new devices must be labelled with the HDMI 2.1 moniker. All the new features of HDMI 2.1 are considered “optional,””

Same bolshoi that’s happened to USB. They didn’t insist that for storage devices to be eligible to be called USB 3.x they would be required to be able to maintain a minimum sustained write speed from 0% to 100% full. So we get a lot of utter crap half-USB 3.x flash drives with sustained write performance that can be worse than a good USB 2.0 flash drive. There are some painfully slow USB 2.0 flash drives from big name companies (PNY) that can’t even manage 1 megabyte per second writing.

When will these outfits specify “Devices must meet these minimum performance and feature specifications or they will not be allowed to use the logo and other trademarks.”? By allowing their logos and trademarks to be put on garbage hardware, it’s not helping them.

“It’s difficult to understand why the HDMI Licensing Authority could not have kept handing out HDMI 2.0 certifications for devices that didn’t implement anything from HDMI 2.1.”

So, ask them:

HDMI® Licensing Administrator, Inc.

admin@hdmi.org

Another mess, brought to you by the same short-sighted thinking that brought up too many HDTV screen formats, and a bizarre USB roadmap with crazy connectors.

I think that HDMI is messy and I don’t like it (and I also don’t like USB). I have been working on my own specification for a better one; we will see how good it is once it is written. (One thing it does is separate cables for video, audio, and control; captions (if any) can be transmitted using the control cable (HDMI does not have captions at all, and I also don’t know if HDMI has a “suppress OSD” command; these are some of the things I dislike about HDMI). I also dislike that they put USB, internet, etc in the same cable too, making it difficult to be separated; the licensing fees; HDCP; and many other problems with HDMI.)

HDMI vs DisplayPort : Round 2

I thought PCs turned to DisplayPort because of cost issues (paying for your goods to be certified, etc).

Maybe the Manufacturers Going Their Own Way movement had the right idea?