The team at the Sensing, Interaction & Perception Lab at ETH Zürich, Switzerland has come up with TapType, an interesting text input method that relies purely on a pair of wrist-worn devices that sense acceleration values when the wearer types on any old surface. The acceleration values from a pair of sensors on each wrist are fed into a Bayesian-inference classification neural network, which in turn feeds a traditional probabilistic language model (predictive text, to you and me). The resulting text can be input at up to 19 WPM with 0.6% average error. Expert TapTypers report speeds of up to 25 WPM, which could be quite usable.
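
To get a feel for how a per-tap classifier and a language model can be combined, here is a minimal sketch in Python. It is not the TapType implementation: the finger-to-key table, the toy word list with its made-up prior probabilities, and the `decode` function are all assumptions for illustration. Each tap is represented as a probability distribution over fingers, and the decoder picks the word that best explains the whole tap sequence.

```python
from math import log

# Hypothetical touch-typing finger assignment for the QWERTY letter keys.
FINGER_KEYS = {
    "L_pinky": "qaz", "L_ring": "wsx", "L_middle": "edc", "L_index": "rfvtgb",
    "R_index": "yhnujm", "R_middle": "ik", "R_ring": "ol", "R_pinky": "p",
}
KEY_TO_FINGER = {k: f for f, keys in FINGER_KEYS.items() for k in keys}

# Toy unigram "language model": word -> prior probability (made-up numbers).
WORD_PRIOR = {"pills": 0.3, "polls": 0.2, "kills": 0.3, "mills": 0.2}


def decode(tap_probs, word_prior=WORD_PRIOR, floor=1e-6):
    """Return the word maximising log P(word) + sum of log P(finger | tap).

    tap_probs is a list with one dict per tap, mapping finger name to the
    classifier's probability for that finger. Fingers missing from a dict
    get the small `floor` probability instead of zero.
    """
    best_word, best_score = None, float("-inf")
    for word, prior in word_prior.items():
        if len(word) != len(tap_probs):
            continue  # only consider words with one letter per tap
        score = log(prior)
        for letter, probs in zip(word, tap_probs):
            score += log(probs.get(KEY_TO_FINGER[letter], floor))
        if score > best_score:
            best_word, best_score = word, score
    return best_word


# Five taps; the second one is ambiguous between right ring and right middle.
taps = [
    {"R_pinky": 0.9, "R_ring": 0.1},
    {"R_ring": 0.7, "R_middle": 0.3},
    {"R_ring": 0.9, "R_middle": 0.1},
    {"R_ring": 0.9, "R_middle": 0.1},
    {"L_ring": 0.9, "L_middle": 0.1},
]
print(decode(taps))
```

In this toy setup the word prior alone would favour "pills", but the classifier's evidence on the second tap tips the result the other way. A real system would use a far larger vocabulary and a proper n-gram or neural language model rather than a four-word dictionary.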

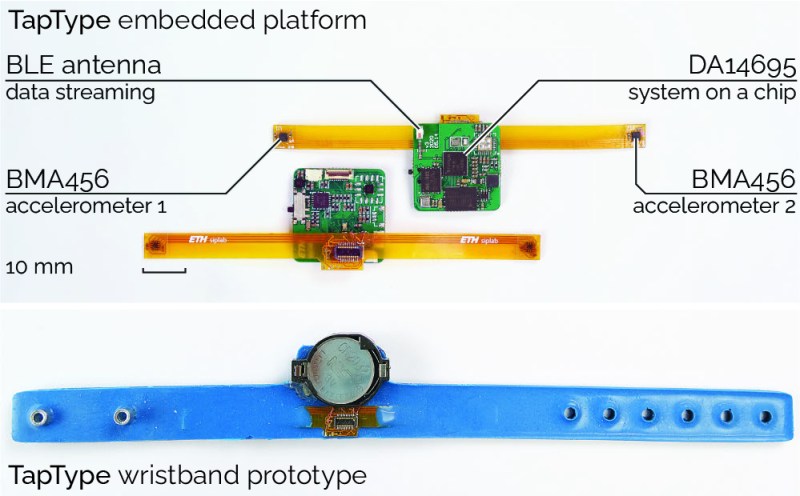

Details are a little scarce (it is a research project, after all) but the actual hardware seems simple enough, based around the Dialog DA14695, which is a nice Cortex-M33 based Bluetooth Low Energy SoC. This is an interesting device in its own right, containing a “sensor node controller” block that is capable of handling sensor devices connected to its interfaces, independent of the main CPU. The sensor device used is the Bosch BMA456 3-axis accelerometer, which is notable for its low power consumption of a mere 150 μA.

The wristband units themselves appear to be a combination of a main PCB hosting the BLE chip and supporting circuitry, connected to a flex PCB with the pair of accelerometers mounted one at each end. The assembly was then slipped into a flexible wristband, likely constructed from 3D printed TPU, but we’re just guessing really, as the progression from the first embedded platform to the wearable prototype is unclear.

What is clear is that the wristband itself is just a dumb data-streaming device, and all the clever processing is performed on the connected device. Training of the system (and subsequent selection of the most accurate classifier architecture) was performed by recording volunteers “typing” on an A3-sized keyboard image, with finger movements tracked by a motion tracking camera, whilst recording the acceleration data streams from both wrists. There are a few more details in the published paper for those interested in digging into this research a little deeper.

The eagle-eyed may remember something similar from last year, from the same team, which correlated bone-conduction sensing with VR type hand tracking to generate input events inside a VR environment.

There is already a commercial product like this. See it on Amazon: https://www.amazon.com/gp/product/B09C2K7L73

I have one. It works pretty well.

The new device uses just one device per hand, worn around the wrist, not one sensor for each finger.

Requires that you be a fluent touch typist, or bring that keyboard printout with you everywhere? :-P

This sort of idea is good for some, but it still fails the most important element of any serious typing experience: haptic feedback, so you know that what you typed is actually correct even while the text is buffering on a slow remote connection or whatever.

If you are a serious touch typist, which these techs kind of rely on, why would you take the step backwards to typing on nothing, with no feeling? What you need with something like this is a key layout roll or something, so you can still feel the keys at least a little…

There’s no reason you couldn’t add vibration motors and play slightly different patterns for each key.

Back in the day, when the initial Wii controllers came out, I was wondering how to get rotation data from an accelerometer. You can’t do it with one, unless you know something about the installation and use case. However, with two, you could get rotation information, except for rotations that are collinear with the line between the two devices. Having three would overcome even this limitation. But eventually, MEMS gyro sensors came out and made it mostly moot.
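
For the curious, the two-accelerometer point can be checked with the standard rigid-body relation a_B − a_A = α × r + ω × (ω × r), where r is the vector from sensor A to sensor B. The Python sketch below is only an illustration under stated assumptions: both sensors are rigidly mounted with the same axis orientation (so gravity cancels in the difference), the 5 cm baseline is a made-up figure, and the function names are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def accel_difference(omega, alpha, r):
    """Return a_B - a_A for two points on a rigid body separated by r.

    omega: angular velocity (rad/s), alpha: angular acceleration (rad/s^2).
    """
    tangential = cross(alpha, r)                  # alpha x r
    centripetal = cross(omega, cross(omega, r))   # omega x (omega x r)
    return tuple(t + c for t, c in zip(tangential, centripetal))


r = (0.05, 0.0, 0.0)  # assumed 5 cm baseline along the x axis

# Rotation about z, perpendicular to the baseline: clearly visible.
spin_z = accel_difference((0.0, 0.0, 2.0), (0.0, 0.0, 10.0), r)

# Rotation about x, collinear with the baseline: nothing to see.
spin_x = accel_difference((2.0, 0.0, 0.0), (10.0, 0.0, 0.0), r)

print(spin_z)
print(spin_x)
```

With the rotation axis perpendicular to the baseline, the difference carries both a centripetal term (−ω²·r along x) and a tangential term (α·r along y). With the axis along the baseline, both cross products vanish, which is exactly the blind spot described above.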

A tour de force of mathematical expertise; it’s truly impressive to infer the movements of each finger with just two sensors. Though I wonder whether it would allow one to type slowly and accurately, considering its reliance on accelerometers and statistical models.

Still a mystery to me why folks don’t just learn Morse and use a key …

You would have to extend the Morse code a bit to handle special function keys. How would you do a ctrl+alt+del?

One very long beep, as you only need that combination when something has gone very wrong on your Windoze box, so it can beep out the expletives?

Seriously though, there are a great many voids in the Morse keying patterns you can fill, and you can always add modifier/shift layer instructions to reuse every letter pattern many times if you prefer.

The real downside to Morse, at least keyed traditionally for human decoding, is that it is damn slow.

One on/off signal is not anywhere close to exploiting the full data rate you could be getting.
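
To put rough numbers on that, Morse speed is conventionally measured against the word "PARIS", which takes 50 dot-lengths including the trailing word gap. The small Python sketch below checks that figure and works out the dot length at a given speed; the function names are made up for illustration and only the five letters needed are included in the table.

```python
# Morse patterns for just the letters of the reference word.
MORSE = {"P": ".--.", "A": ".-", "R": ".-.", "I": "..", "S": "..."}


def word_units(word):
    """Length of a word in dot units, including the 7-unit word gap."""
    codes = [MORSE[c] for c in word.upper()]
    marks = sum(1 if s == "." else 3 for code in codes for s in code)
    intra = sum(len(code) - 1 for code in codes)  # 1-unit gaps inside letters
    inter = 3 * (len(codes) - 1)                  # 3-unit gaps between letters
    return marks + intra + inter + 7


def dot_seconds(wpm, units_per_word=50):
    """Duration of one dot, in seconds, at the given words per minute."""
    return 60.0 / (units_per_word * wpm)


print(word_units("PARIS"))
print(dot_seconds(25))
```

So matching the 25 WPM quoted in the article means holding a steady 48 ms dot on a single on/off contact.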

The idea isn’t bad (typing anywhere would be sorta cool & useful) but at the same time it really is…

There is no way to ‘home’ your hands to the virtual keyboard, and without that there is pretty much no way to know what keys the user is expecting to be pressing. If my brain doesn’t know what key is below my fingers, how am I ever going to be typing? And there doesn’t seem to be any sort of distance measuring between hands, and there’s nothing like ‘hand position relative to chest’ either. This means that when you start typing, as far as the code is concerned, you could be intending to be ANYWHERE on the keyboard?

So taking that observation a step further, how (if ever) is it going to decide what you actually wanted to type? The way I see it, it’s 95% reliant on its ‘predictive text’ logic, but that means there’s very little use for it in most cases. I might be typing in a language it doesn’t know, I might be typing in a character set it doesn’t know, I might even be combining languages/character sets in a single sentence. How is the ‘predictive text’ bit ever going to make sense of what I want to do?

Or if the point of multiple languages and character sets is ‘too harsh’, what if I’m trying to write a basic program? What if I want to mention some place near me? My variable names are not in the English dictionary, and neither are all locations in the world. And what about the most common problem with text prediction? How would it know if I want to type polls or pills? How does it see a difference between pressing Tab & Capslock? What about the small differences between people? For example, I can reach ZXCVB with several fingers, so how is it gonna decide what finger I use for what characters? I’m sorry, but this system wouldn’t work at all in so many use cases…

This comes across as a cool little project for school or something, but not as a replacement for typing on touch screens.

Good risk analysis, Oliver. The project needs time and work, but the idea is good. It will basically learn every user when writing on their keyboard. So you have a dataset for machine learning in every language. After some time your own brain will learn writing without looking at or touching a keyboard. Because there is no keyboard. AI is more capable than the brain, just believe and watch.

Another keyboard replacement completely reliant on “natural language processing” to make up for inherent flaws in the design. When will these people realize that 90% of keyboard use isn’t prose? And coming from a Swiss group, I can expect it to only support some weird lobotomized version of English and no other language.

That seems a little nationalist… just don’t like the Swiss? I guess these comments really aren’t moderated.

Is this actually useful? 25 WPM is worse than what I get out of a phone’s onscreen keyboard.