Over the decades, a lot of attempts have been made to try and make pens and pencils “smart”. Whether it’s to enable a pen to also digitally record what we’re writing down on paper, to create fully digital drawings with the haptics of inks and paints, or to jot down some notes on a touch screen, past and present uses are legion.

This is where SIP Lab’s DeltaPen comes in: an attempt at a smart pen that acts more like the pen of a drawing tablet, just minus the tablet.

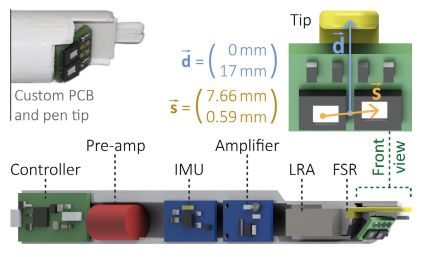

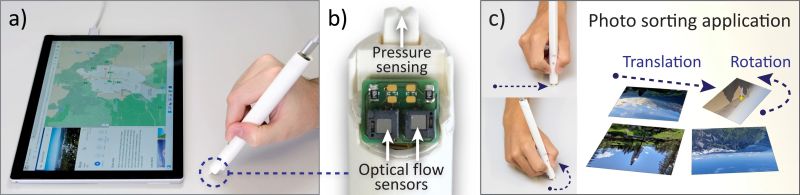

This project is related to the decidedly clumsier Flashpen, which we featured previously. Thanks to new optical flow sensors, the pen can track the underlying surface (e.g. a desk) without having to be held level with it, which allowed for the addition of a pressure-sensitive tip.

In addition, the pen measures its own relative motion and provides haptic feedback, which allows it to be used even for delicate applications such as drawing. The results of trials with volunteers across a range of tasks are described in the team's paper (PDF).

Looks like the lab version of the first mouse; not exactly a finished-product look, is it?

And I LOLed at that squiggly drawing which suddenly had that perfect shading.

Anyway, what I guess I'm saying is that you successfully made me look at this... ad?

It’s quite clearly a research paper, not trying to sell anything and not trying to be a finished product.

And the paper PDF does have quite a lot of interesting details.

Re: Shod, my first thoughts too. Exactly! Or rather, I said ‘cool’, but then ‘wait a minute’: that thing looks like a damn stick! Who did this ‘product design’?!

All the design people will go to steampunk dwarf hell for not being able to see the value under the shell of things… That’s a tech prototype.

Shod being shoddy with his comment and thinking process. This isn’t an ad, it’s an article about a prototype. Stop being superficial with the things you skim on the internet.

Also, stop reading a blog about hacks and DIY electronics if you expect to read about finished products for normies. This is not an Apple fan blog.

Pity this article doesn’t go into more detail on how optical flow sensors work and what their limitations are. AFAIK basically all of PixArt’s sensors are 2D (translation only). Where’s the fancy “AIoT” image processing that massages at least two more degrees of freedom out of the image, adding height change and rotation to the mix? After all, isn’t that what an array of those sensors is trying to deliver?
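For the rotation part of that question, a rough sketch: if two translation-only flow sensors sit at known, different positions on one rigid body, each reading is the shared translation plus a rotation term proportional to that sensor's lever arm, so the difference between the two readings gives the rotation. This assumes a flat surface, sensors parallel to it, small rotation per frame and readings in the body frame; the function names are hypothetical, and this is not a description of how DeltaPen or PixArt actually do it. Height change cannot be recovered this way.

```python
from typing import Tuple

Vec = Tuple[float, float]


def rotation_velocity(omega: float, r: Vec) -> Vec:
    """Displacement caused by a small rotation omega (radians) about z at lever arm r."""
    return (-omega * r[1], omega * r[0])


def estimate_planar_motion(r1: Vec, v1: Vec, r2: Vec, v2: Vec) -> Tuple[Vec, float]:
    """Recover translation t and small rotation omega from two 2D flow readings.

    Hypothetical helper. Assumes a rigid body with sensors at positions r1, r2,
    each reporting displacement v_i = t + omega x r_i in the body frame.
    """
    dx, dy = r2[0] - r1[0], r2[1] - r1[1]      # baseline between the sensors
    dvx, dvy = v2[0] - v1[0], v2[1] - v1[1]    # difference in their readings
    baseline_sq = dx * dx + dy * dy
    if baseline_sq == 0:
        raise ValueError("sensors must be mounted at different positions")
    # v2 - v1 = omega x (r2 - r1); the z-cross product with the baseline isolates omega.
    omega = (dx * dvy - dy * dvx) / baseline_sq
    # Remove the rotation component from sensor 1 to get the shared translation.
    rx, ry = rotation_velocity(omega, r1)
    return (v1[0] - rx, v1[1] - ry), omega
```

For example, sensors at `(0, 0)` and `(1, 0)` reading `(0.5, 0.2)` and `(0.5, 0.3)` give a translation of about `(0.5, 0.2)` and a rotation of about 0.1 rad. With more than two sensors you would solve the same equations by least squares to average out noise.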

One thing where a tablet has a big edge: its absolute positioning.

I once saw (or maybe it was just photos of) a “stylus”-like mouse; it used a large ball at the end which rolled against the table, and you had two buttons for your clicking operations. I never saw it in use much, so I guess the idea never caught on.

I’ve tried various tablets in relative mode and hated it. The aforementioned “pen mouse” would probably be the same: relative, and also very chunky to use, which is probably why it never caught on.

I’d be interested to know if this thing can sense “absolute” positioning… but my guess is probably not, which would put it in the “nice out-of-the-box idea but no thanks” basket.

> used a large ball at the end which rolled against the table

Isn’t that just how mouses used to work?

…mouses? mice? Be that not how mousipod had worked?

Meeses

B^)

“…to pieces.” Although we can “draw” things back together.

Correct, this was basically like a mouse in “stylus” form factor. Except the ball was about half the size.

@jpa It does have that ad feeling though.

And when researchers cheat, like with that shading nonsense, I start to wonder what is going on, since that is not the behavior I expect from serious researchers.

But OK, if the (PDF) info is interesting for HaD readers, all is well I would say.

BTW not all ads are directed at consumers, some are directed at investors specifically.

What “shading nonsense” are you referring to? You can clearly see them drawing the drawings from start to finish without discontinuities.

The major issue I have with this concept is that the drawing is not under the pen but on a distant screen. That makes it decidedly harder to coordinate.

An amateur digital artist in my family insists that one of the benefits of drawing with a stylus on a Wacom tablet and seeing it on a separate screen is that the stylus doesn’t hide any of the drawing. Looking at how intuitive the Wacom is to them, it’s clear that the brain really nicely adapts to the separation between input and output.

Like…a mouse.

Most people, especially artists, use the drawing tablet in “absolute mode”. This means that it works exactly like a touch screen; it just doesn’t show an image (usually). It can also be put in relative mode, which makes it work like a mouse or trackpad.
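A minimal sketch of the difference between the two modes, assuming a simple linear mapping; the function and class names are hypothetical, and real tablet drivers add calibration, aspect-ratio handling and acceleration curves on top:

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Cursor:
    x: float
    y: float


def absolute_map(pen_x: float, pen_y: float, tablet_w: float, tablet_h: float,
                 screen_w: float, screen_h: float) -> Tuple[float, float]:
    """Absolute mode (hypothetical helper): a given spot on the tablet always
    maps to the same spot on the screen, regardless of past movement."""
    return (pen_x / tablet_w * screen_w, pen_y / tablet_h * screen_h)


def relative_update(cursor: Cursor, dx: float, dy: float, gain: float,
                    screen_w: float, screen_h: float) -> Cursor:
    """Relative mode (hypothetical helper): like a mouse, only the displacement
    matters; the cursor moves by gain * delta and is clamped to the screen."""
    cursor.x = min(max(cursor.x + gain * dx, 0.0), screen_w)
    cursor.y = min(max(cursor.y + gain * dy, 0.0), screen_h)
    return cursor
```

With a 100 × 50 tablet and a 1920 × 1080 screen, the tablet's centre `(50, 25)` always lands at `(960.0, 540.0)` in absolute mode. In relative mode, the result depends on wherever the cursor happened to be, which is why a pen that only senses relative motion cannot tie a spot on the desk to a spot on the screen.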

Except with absolute positioning.

Thanks Johnny Lee for inventing the Haptic Pen.

https://www.youtube.com/watch?v=Sk-ExWeA03Y

Interesting. I used a Livescribe pen to get through dental school in 2007-11. It has a camera and dedicated notebooks with an almost microscopic dot pattern that tells the pen where it is on the page. It works extremely well for taking notes and drawing things, but doesn’t have any artistic brushes. The pen also records audio as you take notes, and the notes are synced to the audio, so when you’re playing back the audio, you can tap the pen on one of your notes and the audio will jump to what was being said as you wrote that note. You can pick up used ones on eBay for $50 or so, and the notebooks are readily available and pretty cheap.

I’ll believe it when I can test a beta version 🤔😍👌👍😊

I used to have a similar, less powerful device in the early 2000s. The device was called C-Pen and operated a bit like an optical mouse. It had a bit of internal memory, could read barcodes and take notes using a Palm-style alphabet. There was also an RS232 cable for interfacing to a PC, and it had an IrDA interface. Funky little gadget…

I picked up one of these devices used, going on what it said on the box, and it soon dawned on me that it was a mistake: it relied on special fiducial paper, printed with a dot pattern, to know where it was. The company was defunct, the paper was unobtainium in brick-and-mortar stores, and it cost a fortune to order online.

@sebastian No, you cannot ‘clearly see the drawing from start to finish’, since they stop showing the pen and instead show a sped-up screen where the viewer is asked to infer things, such as that it was done with that pen, which to this observer is not consistent with its handling. For one thing, the pen is too coarse to do that precise shading at the observed zoom level.

You should really be careful with that kind of forced inference, since the news uses the same trick; it is especially popular with, and well executed by, the BBC (.co.uk), which has come close to perfecting it and uses it constantly.

It works great for the news because they can say ‘we never said that’ while getting droves of people to accidentally infer all kinds of nonsense, and meanwhile argue that they are in the clear as far as liability goes.