The up-and-coming Wonder of the World in software and information circles , and particularly in those circles who talk about them, is AI. Give a magic machine a lot of stuff, ask it a question, and it will give you a meaningful and useful answer. It will create art, write books, compose music, and generally Change The World As We Know It. All this is genuinely impressive stuff, as anyone who has played with DALL-E will tell you. But it’s important to think about what the technology can and can’t do that’s new so as to not become caught up in the hype, and in doing that I’m immediately drawn to a previous career of mine.

I Knew I Should Have Taken That 8051 Firmware Job Instead

I’m an electronic engineer by training, but on graduation back in the 1990s I was seduced by the Commodore CDTV into the world of electronic publishing. CD-ROMs were the thing, then suddenly they weren’t, so I tumbled through games and web companies, and unexpectedly ending up working for Google. Was I at Larry and Sergei’s side? Hardly, the company I had worked for folded so I found some agency temp work as a search engine quality rater.

This is a fascinating job that teaches you a lot about how search engines work, but as one of the trained monkeys against whom the algorithm is tested you are at the bottom of the Google heap. This led me into the weird world of search engine marketing companies at the white hat end, where my job morphed into discovering for myself the field of computational linguistics without realising it was already a thing, and using it to lead the customers into creating better content for their websites.

At this point, it’s probably time to talk about how the search engine marketing business works. If you own a website, you’ll almost certainly at some time have been bombarded with search engine optimisation, or SEO, companies offering you the chance to be Number One on Google. As we used to say: if anyone says that to you, ask their name. If it’s Larry Page or Sergei Brin, hire them. Otherwise don’t.

What the majority of these companies did was find chinks in the search giant’s armour, ways to exploit the algorithm to deliver a good result on some carefully chosen search term. The result is a constant battle between the SEOs and the algorithm developers, something we saw first-hand as quality raters. If you’re unwise enough to hire a black-hat SEO company, any success you gain will inevitably be taken away by an algorithm update, and you’ll probably be thrown into search engine hell as a result.

On the white hat end of the scale the job is a different one. You have a customer with a website they believe is good, but with little interesting content beyond whatever it is they sell, the search engine doesn’t agree with them. Your job is to help them turn it into an amazing website full of interesting, authoritative, and constantly updated content, and in that there were no shortcuts. The computational linguistic analysis of pages of competitor search results and websites would deliver a healthy pile of things to talk about, but making it happen was impossible without somebody putting in a lot of hard graft and creating the content. If you think about Hackaday for a moment, my colleagues have an amazing breadth of experience and are really good writers so this site has very good content, but behind all that is a lot of work as we bash away at our keyboards creating it.

Does A Thing Have To Be Clever To Tell You Things You Didn’t Know?

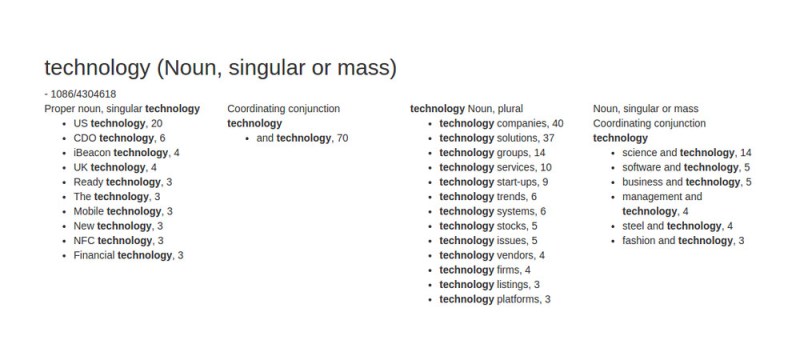

If there’s one awesome thing corpus text analysis can do for you it’s tell you something you didn’t know about something you thought you knew, and there were many times we had customers who gained a completely new insight into their industry by looking at a corpus of the rest of the industry’s information. They might know everything there was to know about the widgets they manufactured, but it turned out they often knew very little about how the world talked about those widgets.

But at this point it’s super-important to understand, that a corpus analysis system isn’t clever and it’s not trying to be. Comparing it to AI, it’s a big cauldron full of sentences in which the idea is to make the stuff you want float to the top when you stir it, while the AI is an attempt to make a magic clever box that knows all that information and says the good stuff from its mind when you prompt it. For simplicity’s sake, I’ll refer to the two as simply dim, and bright.

I’m very happy to be writing for Hackaday and not tweaking the web for a living any more, but I still follow the world of content analysis because it interests me. I’ve noticed a tendency in that world to discover AI and have a mind blown moment. This technology is amazing, they say, it can do all these things! And it can, but here I have a moment of puzzlement. I’m watching people who presumably have access to and experience of those “dim” tools that do the job by statistical analysis of a pile of data, reacting in amazement when a “bright” tool does the same job using an AI model trained on the same data. And I guess here is my point. The AI is a very cool tech, but it’s cool because it can do new things, not because it can do things other tools already do. I’ve even read search engine marketeers gushing about how an AI could tell you how to be a search engine marketeer, when all I’m seeing is an AI that presumably has a few search engine marketing guides in its training simply repeating something it knows from them.

Please Don’t Place AI On A Pedestal Just Because It’s New To You

A friend of mine is somewhere close to the bleeding edge of text-based AI, and I have taken the opportunity to enhance my knowledge by asking him to show me what’s under the hood. It’s a technology that can sometimes amaze you by seeming clever and human — one of the things he demonstrated was a model that does a very passable D&D DM for example, and being a DM is something that requires a bit of ability to do well — but I despair at its being placed on a hype pedestal. It’s clear that AI tools will find their place and become an indispensable part of our tech future, but let’s have a little common sense as we enthuse about them, please!

My cauldron of sentences eventually evolved into a full-blown corpus analysis system that got me a job with a well-known academic publisher. When fed with news data it could sometimes predict election results, but even with that party trick I never found a freelance customer for it. Perhaps its time has passed and an AI could do a better job.

Meanwhile I worry about how the black hats in my former industry will use the new tools, and that an avalanche of AI-generated content that seems higher quality than it is will pollute search results with garbage that can’t be filtered out. Who knows, maybe an AI will be employed to spot it. One thing you can rely on though, Hackaday content will remain written by real people with demonstrable knowledge of the subject!

Thank the ai overloards for that!

AI winter and “the computer is always right”.

“the computer is always right” is what really REALLY terrifies me about AI…

“This is a fascinating job that teaches you a lot about how search engines work, but as one of the trained monkeys against whom the algorithm is tested you are at the bottom of the Google heap.”

ERROR 2,19. COMMA NOT DETECTED. DELETE ARTICLE. REMOVE 2 MERIT POINTS FROM AUTHOR. +1 COMMENTER POINT. UPDATE AI.

I think one of the bigger things to consider about most “AI” (actually machine learning) systems is how the training dataset were gathered, and what the system is meant to do.

A typical solution for gathering a sizable dataset for machine learning is to just data mine the web as a whole. Often with a complete disregard to if the owners/authors of that data were okay with it or not.

A lot of people shrug their shoulders considering this all to land within fair use. After all, it is derivative work with only a loose connection to the original.

Or is it?

Now, the visual arts is likely the simplest example of how machine learning likely doesn’t fall within fair use.

Since any image used will directly help to make the machine learning system “better” at augmenting/mimicing that source. Directly becoming better at competing with the original author/owner.

And negatively effecting the owner is usually a sign that things likely aren’t fair use. (Now, fair use is more about economical impact, both current impact and (“easily proven”) potential impact in the future.)

For image recognition, it most likely is fair use.

For image generation, it most likely is copyright infringement. (with the exception of source material in the public domain, or where permission for this use has been granted.)

To a degree.

The fairly excessive datamining happening on the internet at large is questionable at the least.

But of course this doesn’t just apply to visual arts.

It applies to any work that can be copyrighted. (more or less everything.)

I don’t suspect that anyone who does something professionally prefers their work getting taken to improve a system that is in direct competition with oneself.

In the end.

I am not saying machine learning is bad, nor that it should be outlawed.

What I am saying is that copyright violations still apply if one uses source material without permission outside the terms of fair use, even if one uses it for something new and novel that there so far haven’t been court cases about.

Just because something is made available to the public, doesn’t mean that anyone is free to use it however they desire.

The question of how copyright law applies for these applications is of interest to us all.

Unless one believes that the world becomes better if everything is public domain by default, since that is how most organizations doing machine learning systems seems to treat the internet at large.

Maybe the Microsoft case will answer that.

https://www.theverge.com/2022/11/8/23446821/microsoft-openai-github-copilot-class-action-lawsuit-ai-copyright-violation-training-data

Given how that is just a month old. And how class action lawsuits are really slow. Then this is an interesting development that has taken far too long to pop up.

To a large degree, I think using a dataset to make a machine learning system that in itself can generate similar data likely should fall under copyright infringement.

It is a form of copying. But a debatable one.

And it isn’t like both authors and artists have been successfully sued for copyright infringement for mistakenly making a “new” work of art that turned out to be something they have seen/read/heard before.

(US) Copyright law however doesn’t regard non human authors/creators as viable for copyright ownership regardless. So anything that a ML system makes is effectively public domain. But a lot of people have completely forgotten about this legal requirement. (so Copilot technically sprinkles your code with public domain content, what effect this has on your license-ability is likewise debatable.)

Though, where the line between “a human did a minimal amount of input” and “a human hand wrote every single bit of the output.” as far as “human authorship” is regarded when it comes to copyright law is rather debatable. How much work can the computer do and still not overtake the human authorship? A guess a certain English photographer asks the same about monkeys pushing a button.

But regardless.

Just because it is a complex, legally diffuse and complex topic.

Doesn’t mean that “use whatever data you find however you desire.” is valid.

Personally hope that the class action lawsuit against copilot succeeds. Since for all of us making programs, we all likely want our licenses to actually mean something. Same for all other copyrightable works.

At least some places “slowly” makes progress towards the right direction.

Deviantart for an example opted to make a “noai” flag just like there is an anti-webcrawler one. However, they at first made it opt in, only for their whole user base to complain about it, so now it is the default. But I guess stable diffusion among others don’t care regardless, not like they will remove all those images from their dataset and retrain their whole system. And the same is likely true for all other ML systems meant to generate content.

The long and short of it is that these “AI-as-a-service” sites are in the wrong. Both morally and legally, but from my perspective, the users of the sites are more or less in the clear.

Think of it like this: If you, as an artist, assemble an artist endeavor from snippets of other media – photographs, TV shows, movies – then there’s an argument that can be made that it’s both transformative enough, and the amount of each work sampled is low enough, that your work falls under Fair Use or Fair Dealing (depending on where you live).

If you, as the aforementioned artist, take the time to pull together all of this other media from which those small snippets were hewn, then great! It’s no different than making a collage, broadly speaking.

Now imagine if, tomorrow, Adobe released an update to Premiere and After Effects that included terabytes of TV shows, movies, and photographs that they didn’t have a license to distribute. Within those terabytes of TV shows, there’s all of the content that the previously-mentioned artist had sampled is included. Would *that* be okay?

No. Of course not. People would rightly lose their minds. Adobe would effectively be violating copyright and standing in as the curators for what the artists are using to create their collage or film.

And that’s exactly what these “AI image generation” sites are doing.

Realistically, if an artist wants to go collate millions of images and feed it into an AI, then guide the AI until the output matches something that is pleasing to them, hey, fine. The artist is the one creating the art every step of the way. But that’s not what’s happening here – the people running these sites are constructing their data sets from millions of images, frequently without licensing, and the people running the sites *aren’t even the ones making the art*.

There’s nothing wrong with AI-generated images. There’s nothing wrong with someone using an algorithm to generate an image.

But there’s everything wrong with people who aren’t the artist going and violating copyright law to do it. I guarantee you it’s only a matter of time before someone provably shows that images from Getty or other major commercial stock-photo providers are in that data set, and these sites get their asses sued into the ground for it.

Yes.

I too am not against machine learning systems generating content. It is an interesting field.

However. I still think it is a copyright violation for an “independent” artist to take artwork from the web at large to create an ML system for their own art generating endeavors. For the same reason that I consider it wrong for OpenAI to do such.

But I don’t consider it a copyright violation if a creator of an ML system is granted permission by the owner of the original to use each source for their accumulated dataset. (Since this wouldn’t be a copyright infringement by current laws. An asset owner can license said asset however they desire.)

The debate around if it is fair use or not is partly not all that gray.

For something to be fair use (The EU’s copyright law isn’t majorly different) then the use of the work has to:

A. Criticism of the work.

Can’t actually contain the original work in such a way that it fullfils/replaces the reason to view/otherwise-behold the original. Machine learning systems generating art doesn’t criticize the source material. But a machine learning based art critic is fair use.

B. Used in an educational fashion.

Still can’t fulfill/replace the need to behold the original work. But the used material has to be provided in an educational fashion. So an ML system only using it to generate something completely different isn’t really educational. Some might argue that the ML system got educated by the used material, but I’ll reiterate that copyright law don’t care about non humans, so that the computer is technically “learning” is frankly irrelevant.

C. Derivative work. Or the main thing people think that ML systems can use to their defense.

No they can’t. Since any derivative work (that isn’t of a sufficiently criticizing or educational fashion) can’t negatively effect the owner of the original. And sourcing the material without permission to train one’s ML system to better create content in the style of said original is in direct competition with the original’s owner.

There is however some more exceptions to copyright law.

But these other ones are even more restrictive in their applicability. And don’t really allow for derivative works at all. So content generating ML systems can’t defend themselves with these. Image/content recognition ML systems however can, same for search engines, and a lot of other analytical systems. (since these additional exceptions were made specifically for search engines and scientific research.)

I should likely also include:

D. Parody.

It is an extension of the criticism exception. Effectively it is the legal loophole specifically made for comedians. So if the ML system is providing social commentary and/OR criticism of the original, then technically, it is fine…

In the end.

Some content created by these ML systems could perhaps be legal.

But a huge amount isn’t.

And this is when we look at art. (Images, video, music/sound, 3d, text/literature, etc.)

Source code, way less room for this loophole. It is not practical to write parody code, but one likely can.

And so far haven’t even begun to talk about doing things in the “likeness” of others. Character rights, trademarks, impersonation/fraud, etc. Suddenly this rabbit hole might need another planet to continue drilling its way down through.

Jeff Koons, Richard Prince, two names to look up to learn how the art world has already blown it’s foot off with a shotgun in that regard.

Artists have this love for shooting themselves in the foot. It isn’t surprising. Nor is it new.

Same goes for a lot of other industries.

Or Glenn Brown, whose painting got as far as winning a prestigious art prize before the original artist recognised it as being an almost exact copy of his own work:

https://www.theargus.co.uk/news/5150621.artist-sues-over-copying-claim/

This is a gross misstatement of the law. I hope you’re not a lawyer or at the very least don’t advise clients on intellectual property.

Quite some time age I switched from “googling” to ducking (duckduckgoing) and in recent years(?) I noticed many results to specific search terms kinda read like real texts from/in a blog but where most likely AI generated crap. The AIs used are/were probably not the best and I didn’t ask the AIs directly but with quasi-static intermediary blog posts in between – so the results couldn’t be good enough, but still…

After the internet turning more and more into walled gardens (pinterest, tiktok, and what not) and websites turning into piles of shitshow where you need to scroll to infinity and still cant find actually useful information on the product (after enabling a dozen 3rd party domain scripts) this – lets call it – “search engine stuffing” is not a good development at all.

Anyone else notice something like that? Only a DDG phenomenon?

I’ve been also using DDG, and no, this has been happening even before DDG. It isn’t even likely AI, as these websites just hire freelance writers that get paid per-article, with no basis on quality.

End result: Seach engines don’t show what you are looking for, and unverified statements become “fact”.

DDG only brings this problem to light because they haven’t downgraded the content farming websites enough, so they appear more often. (From my information, DDG runs off of bing)

Indeed, it’s been a few month I’ve noticed “void pages”, in which a subject is introduced, talked about, but there is no real information, despite the promising header. I’ve found several, on several topics, always the same kind of layout, and the same writing style. Each time some strange turns of phrases.

I only use Duckduckgo, so I don’t know if other browsers suffer the same.

And I use adblockers, so I don’t know if there are more ads than elsewhere on these pages.

AI hasn’t seemed to me to transcend “good actor” level. In that a good actor can convince you they are a doctor on TV or in a movie, but god help you if you’re in a remote plane crash with them and they’re your only chance of immediate medical attention.

Star Trek’s “Doctor” is a good ways away.

I think the legalities of an AI doctor are more of a hindrance than technical problems. lawyers are warming up their brief cases and rarring to go.

It’s cool they’ll just infiltrate the regulatory agencies and make it illegal to sue them

I like the “good actor” analogy – the one I prefer for self-driving cars is trained monkey; you can train a monkey to drive a car pretty well most of the time, but you can’t ever really be sure it isn’t going to freak out about something and crash the car, and you can never really be sure it understands the implications to its own and everyone else’s safety.

If your taxi arrives driven by a monkey, even one that was backed up by a company that professionally trains monkeys and that the insurance company says is trained well enough to be a taxi driver… are you getting in the taxi?

This is a really cool article Jenny, and honestly something I’ve honestly something lately I’ve been thinking a lot about ‘a lot’ lately, and that is in this ‘new found world’, how anymore are we going to declare or declaim ‘authenticity ?’ I mean yes, one could perhaps claim ‘NFT’s’, but, almost overnight that kind of turned into a situation where a whole lot of people with a lot less technical experience were ‘just trying to make money’– And at the same time you can’t quite put ‘all of society’ under ‘lock and key’– I mean after all, this *is* HaD; Even *because* the lock even exists, is even there, well… Someone will want to try and ‘break it’. Yet, personally, I think if we lose our sense of ‘authenticity’, in art, language, society I feel at the same time we also ‘lose our humanity’. Or yes, I accept ‘I am a nerd’, though not one as talented as you. Yet not as one as talented as you. Yet, even given that, I cut my teeth, and my very soul, growing up on literature, not even electronics, though I love it. And yes, of course, so much of tech today is based upon things like unfactorable primes or the ledger– But for those of us with these talents, and I am not even for a second suggesting I’m ‘up to snuff’ or have them, yet for those there, for our ‘future’, we have to ‘envision the key’– With simply no lock ‘there’. To me, that is the truth and the tragedy.

> Prompt: “A female journalist, skeptical about the promise of AI, oil painting”.

Looks like the Queensland Premier with longer hair…

https://en.wikipedia.org/wiki/Annastacia_Palaszczuk

>Write article about AI skepticism

>Get AI to draw you

>It depicts you as a soyjak

Uh oh..

The best way to think about “current” AI (well, corpus analysis) is Robin Williams’s quote from Good Will Hunting.

“Thought about what you said to me the other day, about my painting. Stayed up half the night thinking about it. Something occurred to me… fell into a deep, peaceful sleep, and haven’t thought about you since. Do you know what occurred to me? [..] You’re just a kid, and you don’t have the faintest idea what you’re talking about. [..] So if I asked you about art, you’d probably give me the skinny on every art book ever written. Michelangelo, you know a lot about him. Life’s work, political aspirations, him and the pope, sexual orientations, the whole works, right? But I’ll bet you can’t tell me what it smells like in the Sistine Chapel.”

I’ve seen people fascinated with what ChatGPT’s spit out on a bunch of topics. Then I tried it, and on several topics (physics, specifically areas that I’m reaaally well read in) it spit out answers that I knew *exactly* where it pulled them from.

It was just a kid. There wasn’t anything I could learn from it that wasn’t in some […]ing book.

Don’t get me wrong, I’m not bad-mouthing corpus analysis stuff. It’s definitely got potential. But they need to add the ability for it to spit out a metric describing where the information comes from. Until you’ve got that, it’s just a kid.

Machine learning systems are indeed good at mimicing.

However, a lot of stuff out on the internet is just copy pasted from elsewhere. Especially when it comes to more factual stuff. Most run with the same explanations since the explanation is more or less a “definition”. So it isn’t illogical for a sense of familiarity to arise.

But having the ML system spit out its sources would be a bit dangerous.

Dangerous for the operator of the ML system. Since suddenly there is better evidence for the original author to claim copyright infringement. Since their work most likely weren’t used with consent, and likely isn’t used in a fair use fashion either.

Well 60% is duplicate.

https://www.seroundtable.com/google-60-percent-of-the-internet-is-duplicate-34469.html

And why is that?

Because people use AI to steal and duplicate content for SEO.

“However, a lot of stuff out on the internet is just copy pasted from elsewhere.”

That’s my point. It’s a bit like Google’s original PageRank: the stuff it repeats is what’s parroted, not what’s correct. It seriously disturbs me that people think it “sounds” smart/impressive, because to me, it absolutely doesn’t: it sounds *exactly* like what modern reporting sounds like.

So, you want a Bibliography option. Fair enough.

Never mind advanced learning, a basic homework assignment (is the earth flat or round?) needs more than just the Web address where you asked the question.

intelligence is a byproduct of consciousness

An immense data base (call it the web) and a

monsterously fast search engine,run by a triksy

algorythim is well an immense data base,a monsterously fast search engine and a triksy

algorythim and no one is ever going to invite it

to a family dinner

Data analysis is replacing common sense. As I view topics such as ‘picking nose causes alzheimer’s’. Surely more people would have those by now…Seems to me, and I could be wrong, that some sort of data was scrubbed for connections from one to another. Many other examples. But I digress..

Oh great, now that’s in the training set for the next one…

Inre: The Title

Ai is the Mandarin word from “love”,

So I read the Title as “Love [love], but…”

Love your AI… but don’t “love” your AI.

There are a number of different approaches to AI and some can (seem to) produce the “same” results. But they all have one deep and intrinsic flaw: what they “do” is NOT verifiable in any known sense of the word “verifiable”. They can repeat something, given the same input, but that’s not a verification. If I write a program to always respond with “4” when fed “3 + 3”, that’s not verification. But beyond that, I have seen no convincing way of verifying that any AI’s solution is “correct”. Sure, we can “live” with that shortcoming, but how do we ignore the liability of that?

There was an attempt to create “perfect” software based on verification in project Viper, but if the input is flawed (e.g. the requirements), so is its output. Gödel proved the futility of any absolute verification based on any set of rules as they cannot be made unambiguous.

As as for derivatives being copyrightable art, what about Andy Warhol’s Campbell’s soup and other “derivative” works that were copyrighted by him using images that were probably also copyrighted?

You can still prove most any relevant property, it’s just that you cannot create a formal general system that can deduce this property automatically. But it is possible to create adapted/limited formal systems where it is possible, or a system where you add additional information (apart from the limited rules/axioms of the general system) and can prove what you want. Or by slightly adapting your requirements by reconsidering the actual problem to solve.

Also the very big limitiation of Gödel is that it requires your system to work with infinity (theoretical infinity as in natural numbers). But the real world is never such a system, only models of the real world, that *claim* to represent reality (but can only be validated with experiments) are limited by this.

When assuming an algorithm is allowed to use finite resources (and finite sets of inputs/outputs), you can *always* prove/disprove any property with certainty. Add on top of that clever optimizations and reductions of cases, and you can find solutions for almost everything.

As long as what you want to do is not create a sufficiently expressive formal system that can deduce everything, you are not as limited as it might seem.

Also what you criticize about AI obviously also applied to traditionally written programs. They cannot be proven to be correct, given formal methods, only with adaptations of what you consider correct, and limiting the complexity you have a faint chance to do that.

That’s why there are limited formal languages where you can prove important properties, for example to ensure certain properties of network protocols.

The real problem with statistics or machine learning is not Gödel.

It is that it has no clear notion of how to accurately sample a problem as to not ignore important edge or corner cases (or sometimes even whole important classes of subproblems).

But so do we, when we try to understand the real world, as exemplified by science.

So the real challenge is how to test a system for all possible inputs, without using all possible inputs, i.e., generate a “compressed” set of input and output pairs, so that a system can be tested in reasonable time.

Formal deductive methods will not help much regarding this issue. They are just one form of data compression, but not necessarily the relevant one, as it’s not about deduction, but classification (grouping of sampled data into classes).

Similarly, formal methods do not at all describe how you create concepts, they *use* concepts to deduce properites, and they ground concepts on existing concepts (proofs by reduction). That’s a completely different problem than proving properties. It’s a creative activity, which is usually outside of the domain of math or formal methods.

The real issue is finding these concepts, but all the work of making math “beautiful” and more grounded in similar concepts, has always been manual. The real work has always been in the formalization, not in the automatable deduction.

You greatly exceeded my generalities and even discounted Gödel’s incompleteness theorem. Then you state: “They are just one form of data compression, but not necessarily the relevant one, as it’s not about deduction, but classification (grouping of sampled data into classes).” How do you collapse what was said into “data compression”, which is a science in itself. Maybe you didn’t mean that in the technical sense. Then you say: “..it’s not about deduction..” but what is your meaning there? (Logical) deduction is a set of rules applied to theorems and postulates that can be used in a formal proof. Granted, Gödel did not destroy all hope in establishing clear, logical conclusions, but that it’s not possible to use rules and theorems to define those rules and theorems without accepting that they are “true” only by belief. We can’t say, as has been publicized, that global warning is not “real” because it still gets cold (besides, it’s no longer call “warming” but climate change). I worked in avionic landing systems and there are lots and lots of rules and regulations for creating “safe software”, but in the end, it relies on testing, testing, testing, especially those that can result in the greatest loss of life. How do we ever “verify” an open system like AI? What is an acceptable loss of life for using AI? My waring is that since we do not have visibility of the internals of any AI, regardless of the rules used in their implementation, the state space for a definitive solution is infinite. In avionics SW safety, changing on “bit” requires full regression analysis and testing for critical SW. What safeguards are there for AI? It’s like the screwup in facial recognition that failed to identify races of people it wasn’t trained with (sure, that’s a “training bug” but that’s part of the point here). As long as we don’t “know” how AI “logically deduces” its output, we cannot rely on that output without limits. Then if we just “throw a human in the loop” to mitigate the liability, then that too can fail, especially if the human is under qualified and/or overworked, things and go bad quickly. Laws will have to be enacted and evolve to address this issues if AI becomes a part of our everyday lives. But we’re not there yet.

” As long as we don’t “know” how AI “logically deduces” its output, we cannot rely on that output without limits.”

Ah, but unlike say the brain we stand at least a chance at tapping into an internal state.

Isn’t this the turing test for AI?

If art generated by an AI is indistinguishable from art generated by a person then the AI is an artist.

Lot of modern art is very derivative, lots of technology used to turn photos into paintings, set up an LCD projector then colour by the numbers, not impressed. Very difficult to tell geniuine artists from the artisans.

Many artists use an amalgam of styles from other artists nothing new there, just automated.

What happened to the AI that was going to revolutionise science, solve all the big math problems (by brute force if necessary), take us where no man has gone before. Instead new AI is spending most of its time generating porn, yes porn, I said it, you know its true. All those poor graphics cards sweating to produce filthy disgusting new porn by recycling old porn, got to love it.

(didn’t mimicing used to have a “k”?)

>If art generated by an AI is indistinguishable from art generated by a person then the AI is an artist.

Not really. Art is that which can’t be explained by a method or a rule – the rest is illustration or copying. A lot of people aren’t really artists either in a strict sense.

The behaviorist argument to AI is faulty because the same outcomes can be had in different ways, and the inability to notice or measure which way it went doesn’t mean they are the same way.

Or, to put it in more illustrative terms: the “Turing Test” argument of, “if you don’t see any difference” is basically like dropping a square peg through a round hole. If the square peg is narrow enough, it goes through – so do you then call it a round peg?

If a million monkeys typing could eventually (re)create Shakespeare, what happens when the planet hosts 8 BILLION monkeys? Looks like you get 60% of the internet is duplicated.

I think that when the dust settles and the “amazing” ceases to amaze, humanity will be left with a simple conclusion:

It is not that hard to build a machine that amazes the masses

Janelle Shane did an excellent analysis of Galactica, a “knowledgeable” AI that was supposed to be able to answer scientific questions. She asked it how many giraffes have been to the Moon. It said 15 had been to the Moon and 13 to “the Mars”, then in the next sentence that 17 had been to the Moon and 11 to “the Mars”. The correct answer is, of course, “none”.

https://www.aiweirdness.com/galactica/

There was also the time Facebook, er, Meta (whatever, Bob, call me when you’ve bought an aircraft carrier) introduced a scientific large language model. They took it down after a few days after they realized it was a prime target for pranksters asking for things like a wiki article on the history of bears in space.

The algorithm had no concept of truth or what the words it wrote actually meant. It just knew how to arrange them in a way that statistically would resemble the articles fed into it. And they were surprised to find all they had done was to teach a computer the art of bullshitting.

Oops, just realized that Galactica was the exact same artificial stupidity algorithm from Facebook / Meta / Reverend White’s Pearly Gates.

> one of the things he demonstrated was a model that does a very passable D&D DM

Wait, I need more information about this…how do I gain access to this model?!

Are you saying we can make single-player D&D a reality?!

These are the important technological breakthroughs we should be focusing on!

In the EU they are in the process of hammering out laws to curtail AI.

Because it is too likely to be used against people.

Of course the politicians then attempt to make it so it CAN be used against you for ‘national security’ and crap like that.

note: I initially misspelled ‘of course’ as ‘of curse’ in the line above, and perhaps that was more inspiration than misspelling.

Anyway, any time the EU makes a positive law these days they put in a really nasty side-stipulation under the green green grass. Or maybe they make a nasty law and dress it up with some positive bits the journalists can report?

Similar in the US though, with the bits they add to bills that are OK, so you end up with super-nasty stuff sneaked in to be passed into law.

That’s not really about AI, just about tracking people using image recognition and statistical predictors used to determine cost and access to services. The predictor could be some fancy modern ANN or some ancient SVM, as long as it’s fit using aggregate data it’s AI for that law.

As for using it against me specifically, I’m not being searched for breaking any law in 2002/584/JHA so they are still not supposed to. That said, with that laundry list of crimes what’s being left out feels a bit arbitrary to me. They can track someone selling fake Levis but they can’t track someone who beat up someone on the street unless it’s they had a racist motive.

[Jenny List]

I don’t think that puce colored lipstick looks good on you.