In case you thought that cameras, LiDAR, infrared sensors, and the like weren’t enough for Big Brother to track you, researchers from Carnegie Mellon University have found a way to track human movements via WiFi. [PDF via VPNoverview]

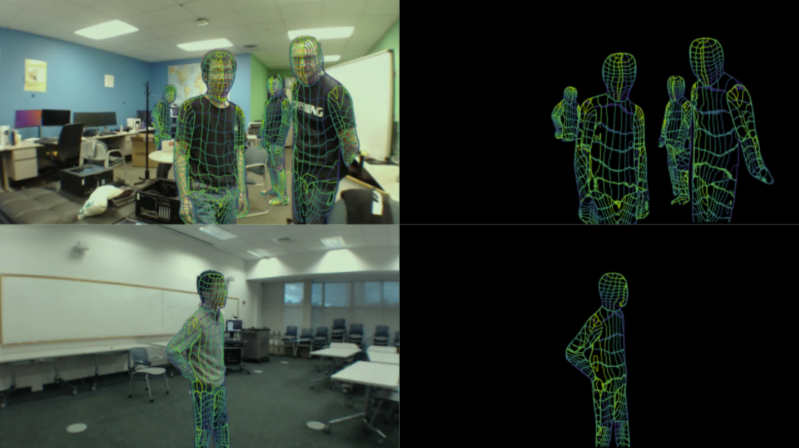

The process uses signals from WiFi routers as an inexpensive way to determine human poses, one that isn't hampered by poor lighting or occluding objects. The system analyzes signal strength and phase data to generate a 2D feature map, then feeds that map through a modified DensePose-RCNN architecture that outputs UV coordinates of human bodies, which correspond to 3D human poses. The system does have trouble with unusual poses that are not in the training set, or when there are more than three subjects in the detection area.
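To make the first stage less abstract, here is a minimal sketch of turning raw channel state information (CSI) into amplitude and phase maps. It assumes the CSI arrives as complex values, one row per transmit/receive antenna pair and one column per subcarrier, and it applies a common phase-sanitization trick (unwrap across subcarriers, then remove the linear trend). This is not the authors' code; the function names and the synthetic data are hypothetical.

```python
import cmath
import math

def unwrap(phases):
    """Remove the 2*pi jumps that appear when phase wraps around +/-pi."""
    if not phases:
        return []
    unwrapped = [phases[0]]
    offset = 0.0
    for prev, cur in zip(phases, phases[1:]):
        step = cur - prev
        if step > math.pi:
            offset -= 2 * math.pi
        elif step < -math.pi:
            offset += 2 * math.pi
        unwrapped.append(cur + offset)
    return unwrapped

def remove_linear_trend(values):
    """Subtract the least-squares line fitted against subcarrier index (needs >= 2 values)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values)) / sxx
    return [y - (mean_y + slope * (x - mean_x)) for x, y in enumerate(values)]

def csi_to_feature_maps(csi):
    """csi: rows (antenna pairs) of complex values (subcarriers).
    Returns (amplitude_map, phase_map), two 2D lists shaped like csi."""
    amplitude = [[abs(h) for h in row] for row in csi]
    phase = [remove_linear_trend(unwrap([cmath.phase(h) for h in row])) for row in csi]
    return amplitude, phase

# Synthetic CSI: 3 antenna pairs x 30 subcarriers with a wrapping linear phase ramp.
csi = [[2.0 * cmath.exp(1j * (0.9 * k + pair)) for k in range(30)] for pair in range(3)]
amplitude, phase = csi_to_feature_maps(csi)
print(round(amplitude[0][0], 6), max(abs(p) for row in phase for p in row) < 1e-9)  # 2.0 True
```

A pure linear phase ramp is exactly what the sanitization step is meant to strip out, so the residual phase map comes out flat; with real captures, what remains is the structure a network would learn from.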

While there are probably applications in Kinect-esque VR Halo games, this will likely go straight into the toolbox of three-letter agencies and advertising-fueled tech companies. The authors claim this uses "privacy-preserving algorithms for human sensing," but only time will tell if they're correct.

If you're interested in other creepy surveillance tools, check out the Heat-Sensing Crotch Monitor or this Dystopian Peep Show.

“The authors claim this to use privacy-preserving algorithms for human sensing”

HA I despise these people

“Look how smart I am, tiger mom! I’m engineering Hell on Earth! YAY CCP!”

I hate them too. I hate the people who say "oh, this is nothing" even more (enablers). All technology is weaponized against us at some point, and pretending it isn't happening makes them enemies of humanity.

Please, someone ridicule this person for his conspiracy theories and tinfoil hattery! He incentivizes terrorism!

Research is always important. If you disagree, you might also protest against the concept of humans working with fire: inherently dangerous and inherently useful at the same time. If you can't bring yourself to conceive of at least dozens of useful, privacy-respecting use cases, then I will ask you to think again. There are plenty.

Who is directing the research, and who is paying for it? Who will benefit from this technology? Who will be hurt by this technology? Have you ever asked yourself these questions and tried to come up with an honest answer? No, just wave your hands and chalk it up to luddites so you can cope with the fact that we’re all in the same techno prison with soulless cult members running the show. I’d like to see your face when you look up and realize the hole you’re in is too deep to get out of, but I’m not that lucky.

Hey, at least you got all A’s on your report card, Timmy! [head pat]

“Insert anecdote about the queen being shown electromagnetic fields here”

Not really a first. Look at this article from over 10 years ago:

https://hackaday.com/2012/08/11/seeing-through-walls-using-wifi/

Or this one (OK, this one is active, but it's still quite similar):

https://hackaday.com/2018/07/02/using-an-ai-and-wifi-to-see-through-walls/

First off, the privacy issue is more a matter of "who has access to your router" than an inherent problem.

We've got WiFi-connected cameras where people can choose whether or not to enable access via "the cloud". It's on the end user to do their due diligence in keeping them secure; a basic issue I've heard of is people just not changing the default login information.

Unlike a camera, this seems like it will just show shape, position, and posture. In public spaces, that's a lot of data to sort through to get anything relevant out of it (LOTS of variables). In your private home, keep your freakin' router locked down.

Government's gonna spy regardless; it's up to the people to enforce their will on those they're paying, regulating access via backdoors and whether they're "allowed" to spy at all.

The tech isn’t the issue, it’s our personal behavior and public policies.

This would allow human interaction via gestures without disclosing the vast majority of identifying information. How is this a bad thing?

GPT, is that you?

Using An AI And WiFi To See Through Walls (July 2, 2018)

This is the work of a group at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL).

https://hackaday.com/2018/07/02/using-an-ai-and-wifi-to-see-through-walls/

How did you know?

“The system produces UV coordinates of human bodies”

Ultraviolet?

Nah, UV as in maths, similar to the UVs used in image textures.

It’s the process of taking a 3D object and unwrapping the surface as a 2D area, so that textures can be painted on the 2D area to decorate the 3D object.
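A common textbook instance of UV mapping is the spherical (equirectangular) unwrap, which maps a point on a unit sphere to (u, v) in [0, 1] and then looks up a texel there. DensePose works with per-body-part UV charts rather than a single sphere, so treat this only as an illustration of the general idea; the function names and the tiny texture are hypothetical.

```python
import math

def sphere_uv(x, y, z):
    """Map a point on the unit sphere to (u, v) texture coordinates in [0, 1]."""
    u = 0.5 + math.atan2(z, x) / (2 * math.pi)  # longitude -> horizontal axis
    v = 0.5 + math.asin(y) / math.pi            # latitude  -> vertical axis
    return u, v

def sample_texture(texture, u, v):
    """Nearest-neighbour lookup; row 0 of `texture` is the top edge (v = 1)."""
    height, width = len(texture), len(texture[0])
    col = min(int(u * width), width - 1)
    row = min(int((1 - v) * height), height - 1)
    return texture[row][col]

# A 2x2 "texture": top half sky, bottom half ground (hypothetical data).
texture = [["sky", "sky"],
           ["ground", "ground"]]

print(sphere_uv(1.0, 0.0, 0.0))                            # (0.5, 0.5), on the equator
print(sample_texture(texture, *sphere_uv(0.0, 1.0, 0.0)))  # sky, at the north pole
```

The 3D surface point never touches the texture directly; it is first flattened to (u, v), which is exactly the role the UV coordinates play in the WiFi system's output.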

Check the “UV mapping” Wikipedia page.

Not sure if this could feasibly be used for malicious purposes really, or at least I hope not. I can't see how it could be granular enough to identify individuals: it doesn't look like it can pick up a nose, let alone recognise a face; the poor thing needs an AI even to turn leg-sized reflections into pose estimates; and it's not like it could track fingers on a keyboard. This is bulk detection of limb locations. It looks much more like a technology to replace cameras in gesture interfaces, because that is what it is suited to pick up: expansive gestures, waving, pointing, semaphore… I think we need to eradicate facial recognition, drive government assaults on end-to-end public key cryptography into oblivion, and make Facebook, the three-letter agencies, and all communist parties extinct before we start worrying about whether expansive gesture detection is a threat to privacy.

Look into gait recognition. There are more ways to identify individual people than just faces. Faces just seem obvious to us because we come with built-in face-decoding hardware.
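One simple gait feature is cadence. The sketch below estimates the dominant period of an evenly sampled time series, such as the distance between the two ankle keypoints over time, by skipping the initial autocorrelation lobe and picking the lag with the largest autocorrelation after it. The signal is synthetic and the function names are hypothetical; real gait recognition combines many more features than this.

```python
import math

def autocorr(signal, lag):
    """Unnormalized autocorrelation of the mean-centred signal at the given lag."""
    mean = sum(signal) / len(signal)
    centred = [s - mean for s in signal]
    return sum(centred[i] * centred[i + lag] for i in range(len(signal) - lag))

def dominant_period(signal, max_lag=None):
    """Return the period (in samples) of the strongest repetition, or None."""
    if max_lag is None:
        max_lag = len(signal) // 2
    acs = {lag: autocorr(signal, lag) for lag in range(1, max_lag + 1)}
    # Short lags are always highly correlated; start searching once the
    # autocorrelation first goes negative.
    first_negative = next((lag for lag in acs if acs[lag] < 0), None)
    if first_negative is None:
        return None  # no oscillation found
    return max(range(first_negative, max_lag + 1), key=acs.get)

# Synthetic ankle-distance signal (metres) repeating every 20 samples.
ankle_gap = [0.3 + 0.1 * math.sin(2 * math.pi * t / 20) for t in range(200)]
print(dominant_period(ankle_gap))  # 20
```

Dividing the period by the pose sample rate gives the stride time in seconds, one of several numbers that could then be compared between people.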

The poses are inferred from vague reflections and such, and generated by an AI… you have more chance of "identifying" the gait of some statistical model than the actual user's gait…

I think you would find it's more than enough information to identify people in most cases. Take your own house or office, for instance: are any of the people in it even remotely similar in size and shape to you? If none are, then it's going to be correct nearly every time, if not every time, just from that.
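The size-and-shape argument amounts to nearest-neighbour matching against a handful of known people. A minimal sketch, assuming each person has already been reduced to a couple of body measurements (the profiles below are made-up numbers) and using a hypothetical distance cutoff so that an unfamiliar shape is reported as unknown rather than forced onto the closest profile.

```python
import math

def identify(measurement, profiles, max_distance=0.1):
    """Return the name of the closest enrolled profile, or None if nothing is close enough.
    measurement and profile values are (height_m, shoulder_width_m) tuples."""
    name, features = min(profiles.items(),
                         key=lambda item: math.dist(measurement, item[1]))
    if math.dist(measurement, features) > max_distance:
        return None
    return name

# Hypothetical household of three people.
profiles = {
    "alice": (1.62, 0.38),
    "bob": (1.85, 0.47),
    "carol": (1.74, 0.41),
}

print(identify((1.83, 0.46), profiles))  # bob
print(identify((1.30, 0.30), profiles))  # None (nobody that small is enrolled)
```

With only a few people who differ clearly in build, even two coarse measurements separate them, which is the commenter's point; in a crowd of similar-sized people the cutoff would reject most matches.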

In any more public space there are also going to be cameras, and maybe ID cards or other biometrics as well, so even very average-shaped people "have" to be in that area, and tracking or identifying the "right" one isn't too bad. Between knowing that only a handful of people have entered this spot and shape, size, and gait recognition, separating them all should be possible.

It may not be ready for wide use yet, and I expect it's going to need a great deal of location-specific training to correctly filter out all the other things that add noise to the data. But now that it is proven to work without needing its own personal power station to handle the computations, I expect it won't be all that long before it is used (if it isn't already in use somewhere).

Most people carry phones and various ID and credit cards… if you add facial recognition to the list, the ability to fingerprint someone is already so large that this is most likely a vague and imprecise addition at best… it won't make matters any worse than they already are.

As this can in theory work through walls and ceilings, and potentially at great range with the right antenna, it's got its own benefits. You deliberately don't have a snooping, insecure IP camera, phone, etc. in this location, and yet the RF soup tells the observer enough to know it's you and what you are up to.

Gait recognition is very definitely a thing. I happen to be largely face-blind, but I'm quite adept at distinguishing people by their individual gait.

This is really very naive:

“Not sure if this could feasibly be used for malicious purposes really, ”

You might have picked up in the last 20 years that the main Big Brother threat lies in the "Big Data" thing: combining data from multiple sources. You take a few stills from a camera to identify people and then follow them through the whole building for the rest of the day with techniques like this. People are also creatures of habit. If a person sits at a particular desk for hours, it's pretty obvious which employee it is, and you can start logging their time at the coffee machine, talks with colleagues in the hallway, and toilet breaks.
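The "identify once, then follow" pattern is essentially track association: an identity attached from a camera still is carried forward by matching each new frame's detections to the previous positions. A minimal greedy nearest-neighbour sketch, assuming positions in metres and a hypothetical `max_jump` limit on how far someone can move between frames; the labels are made up, and real trackers typically add motion models and optimal assignment.

```python
import math

def associate(tracks, detections, max_jump=1.0):
    """Greedy nearest-neighbour association of new detections to existing tracks.

    tracks: dict mapping a label to its last known (x, y) position
    detections: list of (x, y) positions seen in the new frame
    Returns a new dict of label -> position. Tracks with no detection within
    max_jump are dropped; unmatched detections get fresh anonymous labels.
    """
    # Every (track, detection) pair, closest first.
    pairs = sorted(
        (math.dist(pos, det), label, j)
        for label, pos in tracks.items()
        for j, det in enumerate(detections)
    )
    updated, used_labels, used_dets = {}, set(), set()
    for distance, label, j in pairs:
        if distance > max_jump or label in used_labels or j in used_dets:
            continue
        updated[label] = detections[j]
        used_labels.add(label)
        used_dets.add(j)
    next_id = 0
    for j, det in enumerate(detections):
        if j in used_dets:
            continue
        while f"anon-{next_id}" in tracks or f"anon-{next_id}" in updated:
            next_id += 1
        updated[f"anon-{next_id}"] = det
        next_id += 1
    return updated

# "employee-17" was identified once from a camera still (hypothetical label).
tracks = {"employee-17": (0.0, 0.0)}
for frame in ([(0.3, 0.4), (5.0, 5.0)], [(0.6, 0.9), (5.1, 4.8)]):
    tracks = associate(tracks, frame)
print(tracks["employee-17"])  # (0.6, 0.9)
```

The WiFi pose data never needs to identify anyone by itself here; it only has to keep the label attached from frame to frame.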

The amusing thing is that said institutions are, on the one hand, incompetent, while on the other hand competent enough to wield such technology effectively. People want to have their cake and eat it too.

Our institutions were built to serve us. They were overtaken by political extremists (proxies of hostile foreign nations) and turned against us, using our own tax dollars. What’s your take?

I think that your political extremists are very well capable of overtaking your institutions for their own hidden agendas. Occam's razor tells us that your '{proxies of hostile foreign nations}' addition is not necessary to explain the problem, and is therefore only distracting you from the solution.

I agree with you. Our institutions were built to serve us. But institutions generally live past their usefulness. And that is when the benefit and the cost invert.

Solution? Never let institutions become monolithic giants. Dismantle them soon after their cost and usefulness invert. Don't keep them around 'just in case…'.

Do you see how institutions are quite similar to all that stuff that's building up in your attic or lab? You get something that's past its usefulness, but you keep it because 'maybe I can use it later'. Sometimes that does happen. But most of the time you end up building more and more garages to store all your stuff.

Hackers tend to hoard electronics, countries tend to hoard institutions.

And both will probably end up in an intervention at some point. Which in the case of countries generally means: revolution and the killing of the people that were holding on to those institutions and had started using them for their own good.

Read some Noam Chomsky, if you want to read about this from a more reputable source than me. :)

RetepV:

Chomsky is a reliable source for linguistics, though the thesis he built his career on (that language is built into the brain's architecture) has been disproven.

For politics, he had his Shockley moment decades ago.

Shockley moment: I’m good at one thing, so listen to me talk out my ass about something else.

He’s just another credulous red.

Friendly advice: before freaking out over this or going on about Big Brother kinds of concerns, actually read the research paper and the experimental setup they used to generate these results. Don't read the headline and jump to the conclusion that they did something that was either A) generalizable or B) actually scary. What scares me more is the technological illiteracy of the journalists who latch on to this stuff to make clickbait articles out of it.

Actually READ papers? But how will I get outraged over my imaginary version of research projects then?!

Yeah! What he said!

B^)

Can't track you as well if you ain't broadcasting with that snitch in your pocket.

There are good uses for this tech, but there is also the side of it that gives remote viewers or hackers too much power to know what the end user is up to in their personal space.

Better VR/AR headset tracking.

That's 100% the best use case, along with robotic vision.

Red Faction railgun suddenly became a lot more realer.

Passive WiDar?

It’s actually a pretty old research topic. Sure, it is more advanced than the previous works, but I don’t quite understand why it is suddenly a big deal.

There's a whole IEEE standard for Wi-Fi-based sensing: 802.11bf. Pose estimation is a relatively new application (vs. fall detection, etc.), though.

Passive WiDar?

Yeah, why not? Seems like you could easily do it with some code and a Kraken.
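The core of a passive radar is correlating a reference channel (the transmitter's direct signal) against a surveillance channel (the reflections) to find the echo delay. A minimal sketch, assuming real-valued samples, a surveillance channel from which the direct-path signal has already been removed, and synthetic data. A real receiver, presumably the KrakenSDR being alluded to, works with complex baseband samples and also searches over Doppler shift.

```python
import random

def estimate_delay(reference, surveillance, max_lag):
    """Return the lag (in samples) maximising sum(reference[i] * surveillance[i + lag])."""
    def xcorr(lag):
        return sum(reference[i] * surveillance[i + lag]
                   for i in range(len(surveillance) - lag))
    return max(range(max_lag + 1), key=xcorr)

# Synthetic data: a random +/-1 reference and an attenuated, noisy echo 37 samples later.
random.seed(1)
n, true_delay = 500, 37
reference = [random.choice((-1.0, 1.0)) for _ in range(n)]
surveillance = [0.0] * true_delay + [0.3 * r for r in reference[:n - true_delay]]
surveillance = [s + random.gauss(0.0, 0.1) for s in surveillance]

print(estimate_delay(reference, surveillance, max_lag=80))  # 37
```

At a sample rate f_s, a lag of L samples corresponds to an extra (bistatic) path length of roughly L·c/f_s; at 20 MHz that is about 15 m per sample, which is why pose-level detail needs much more than this basic correlation.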

The papers outlining this use of the technology have been around for years. Quite a few implementations exist and have been in use for some time. For those thinking this might not be that great, you're really limiting yourself. This is very similar to how 'stealth' planes can be tracked. Combine this information with a few other pieces and an individual can be located and tracked even in a crowd. Fun times.

OK, so it isn't just me. I read the original article and was thinking that I'd heard about this exact same idea being proven possible maybe 5+ years ago (tbh, everything pre-COVID feels like 5 years ago, so who knows how accurate that guess is).

I’ve heard of the method as a concept before, but I don’t recall anything like real-time or with this level of detail in the human models.

Sensing people with WiFi or similar radio waves is an old technique. What's new is using AI to map signal blobs to human poses.

It really isn't new; MIT CSAIL was using neural networks for pose estimation using WiFi at least as early as 2018 (see http://rfpose.csail.mit.edu/ ), and that's just the first "wifi pose" result I found. They had been doing related work for years, at one point saying they intended to use it to detect falls among seniors who live alone.
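As a rough illustration of the fall-detection use case, here is a heuristic over a pose-derived head-height series: flag a large drop within about a second that is followed by staying near the floor. The thresholds, the synthetic series, and the assumption that a head height is available for every frame are all hypothetical; this is not the CSAIL method.

```python
def detect_fall(head_heights, fps, drop_m=0.8, window_s=1.0, still_s=2.0, floor_m=0.5):
    """Return the frame index where a fall is first confirmed, or None.
    head_heights: estimated head height above the floor in metres, one value per frame."""
    window = int(window_s * fps)  # how quickly the drop must happen
    still = int(still_s * fps)    # how long the person must then stay low
    for t in range(window, len(head_heights) - still + 1):
        dropped_fast = head_heights[t - window] - head_heights[t] >= drop_m
        stays_low = all(h < floor_m for h in head_heights[t:t + still])
        if dropped_fast and stays_low:
            return t
    return None

fps = 10
# Standing for 3 s, falling in 0.5 s, then lying still for 3 s.
fall = [1.6] * 30 + [1.6 - 0.26 * (k + 1) for k in range(5)] + [0.3] * 30
# Lowering oneself to the floor over 5 s, then lying still.
lie_down_slowly = [1.6 - 0.024 * k for k in range(51)] + [0.4] * 30

print(detect_fall(fall, fps))             # 34
print(detect_fall(lie_down_slowly, fps))  # None
```

Requiring both a fast drop and a period of staying low is what separates a fall from someone deliberately lying down.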