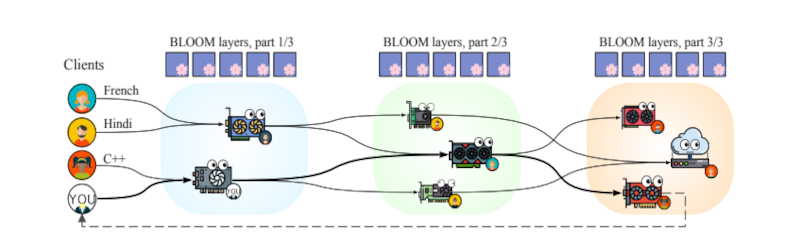

In the old days of the Internet, FTP was sufficient for downloading the occasional file. But with the widespread use of computer audio and video, it was easy to swamp an FTP server, so eventually BitTorrent was born. The idea was that you would download bits and pieces of a file from different places and, in theory, other people would download the bits and pieces you have if they need them.

Now Petals wants to use this same method with language models. These AI language models are all the rage, but they take significant computer resources. The idea behind Petals is like BitTorrent: you handle a small part of the model (about 8 gigabytes, which is small compared to the 352 gigabytes required for the full model), and other people handle the other parts.
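To make the "everyone holds a slice" idea concrete, here is a toy sketch in plain Python. It is not Petals code and uses none of its API: the layer count, the `partition` helper, and the arithmetic "layers" are all made up for illustration. Only the 8 and 352 gigabyte figures come from the text above. It shows that chaining the slices held by different participants gives the same answer as running every layer on one machine.

```python
import math

MODEL_GB = 352  # full model size quoted above
SHARE_GB = 8    # rough share handled by one participant, also quoted above

# With no overlap between shares, at least this many participants are needed.
MIN_PEERS = math.ceil(MODEL_GB / SHARE_GB)


def partition(n_layers, n_peers):
    """Split layer indices 0..n_layers-1 into contiguous, near-equal ranges."""
    base, extra = divmod(n_layers, n_peers)
    ranges, start = [], 0
    for peer in range(n_peers):
        size = base + (1 if peer < extra else 0)
        ranges.append(range(start, start + size))
        start += size
    return ranges


def make_layer(i):
    """Toy stand-in for one model layer (hypothetical arithmetic, not a real block)."""
    return lambda x: 2 * x + i


def run_local(x, layers):
    """Apply every layer in order on a single machine."""
    for layer in layers:
        x = layer(x)
    return x


def run_distributed(x, layers, assignment):
    """Pass x from peer to peer; each peer applies only the layers it holds."""
    hops = 0
    for peer_layers in assignment:
        for i in peer_layers:
            x = layers[i](x)
        hops += 1  # one hand-off per participating peer
    return x, hops


if __name__ == "__main__":
    layers = [make_layer(i) for i in range(10)]  # 10 layers is an arbitrary toy size
    assignment = partition(len(layers), 4)       # 4 hypothetical peers
    result, hops = run_distributed(1, layers, assignment)
    print("minimum peers for the full model:", MIN_PEERS)
    print("layers per peer:", [list(r) for r in assignment])
    print("matches single-machine result:", result == run_local(1, layers))
    print("hops per pass:", hops)
```

In a scheme like this, each generated token needs its own pass through the chain, so the hand-offs between participants add up with every word produced.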

Of course, if you are privacy-minded, that means that some amount of your data is going out to the public, but for your latest chatbot experiments, that might not be a big problem. You can install Petals in an Anaconda environment or run a Docker image if you don’t want to set up anything. If you just want to access the distributed network’s chatbot based on BLOOMZ-176B, you can do that online.

Since the network processes one token at a time, a chat with the chatbot is a little jerky, with a noticeable pause between words. It did say you can increase speed by connecting your GPU. Here’s a transcript:

Hackaday: How many nodes host you?

AI: Two thousand six hundred and ninety-three

Hackaday: Do you know about hackaday.com?

AI: Yes, of course.

Hackaday: What is it?

AI: It's a website for hackers and geeks.

Hackaday: How would you write an Arduino program to blink an LED?

AI: You have to use the Arduino language and create a sketch that can load onto the Arduino board.

Hackaday: Can you write that program?

AI: No

So we suppose our jobs are safe for now. Still, if you want to experiment with your own custom models based on BLOOM or BLOOMZ, this is a quick way to start without needing a server farm. The tutorial notebook will get you started. If you want to dig deep, there is a whitepaper.

We’ve been worried about model security, and we imagine that distributing a model will make some attacks easier and some harder. We don’t know if we trust any of these models to run a circular saw blade around our yard yet, though.

I think there’s a market for annoying chatbots. E.g.:

Hackaday: Do you know about hackaday.com?

AI: Yes, of course.

Hackaday: What is it?

AI: A genderless pronoun.

Hackaday: No, Hackaday.

AI: What about it?

Hackaday: What is Hackaday?

AI: Don’t you know? It’s your name.

…

Duh.

That just sounds like Cleverbot.

The best description seems to be a decentralized pipeline.

Next step is to run this in the EVM.

This is unnecessary. Cloud architecture is insanely cheap to lease.