Believe it or not, building a tiny compiler from scratch can be as fun as it is accessible. [James Smith] demonstrates by making a tiny compiler for an extremely simple programming language, and showing off a hello world.

Here’s what happens with a compiler: human-written code gets compiled into low-level machine code, creating a natively-executable result for a particular processor. [James]’ compiler — created from scratch — makes native x64 Linux ELF binary executables with no dependencies, an experience [James] found both educational and enjoyable. The GitHub repository linked below has everything one needs, but [James] also wrote a book, From Source Code to Machine Code, which he offers for sale to anyone who wants to step through the nitty-gritty.

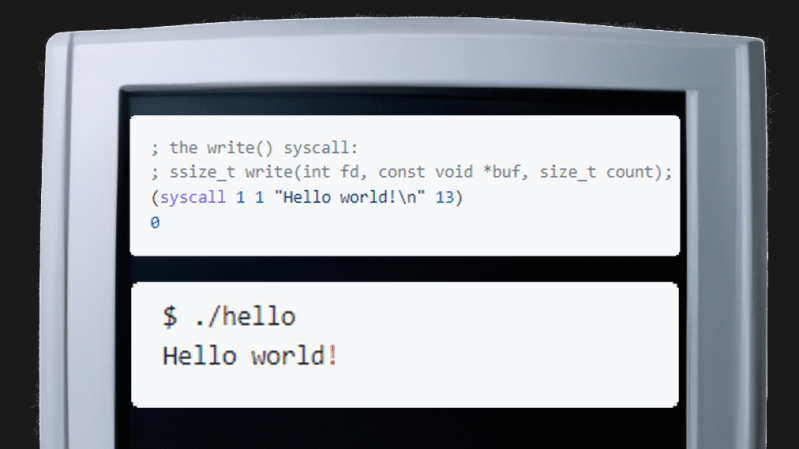

The (very tiny) compiler is on GitHub as The Pretty Laughable Programming Language. It’s tiny, the only data types are integers and pointers, and all it can do is make Linux syscalls — but it’s sufficient to make a program with. Here’s what the code for “Hello world!” looks like before being fed into the compiler:

; the write() syscall:

; ssize_t write(int fd, const void *buf, size_t count);

(syscall 1 1 "Hello world!\n" 13)

0

Working at such a low level can be rewarding, but back in the day the first computers actually relied on humans to be compilers. Operators would work with pencil and paper to convert programs into machine code, and you can get a taste of that with a project that re-creates what it was like to program a computer using just a few buttons as inputs.

My first system was a Motorola D2 kit. It came as a couple of PCBs with lots of components and had to be assembled. It was programmed directly in machine code via a hex keypad and display. I actually wrote my programs in assembly language and hand-assembled them.

I have a D2 kit in the attic. It has seen much use. You could add a n RS-232 interface and install the MIKBUG PROM and program the kit from a serial terminal

I only get the same error with all examples I tried…

$ ./print

Segmentation fault

bridgescript.com is better :)

May I offer “the dragon book”, Aho and Ullman’s “Principles of Compiler Design”. Once you’ve looked at that, Linux has (inherited from Unix) all the tools you need to build your own compiler: lex, yacc, etc.

this project clearly comes from the lisp and forth school of compiler design. lex and yacc and a lot of the ‘classic’ stuff in those books is completely irrelevant and unnecessary for this sort of compiler. there’s a lot of neat stuff in those books but almost the entire content of both of those books is almost completely irrelevant to ‘simple’ compilers.

there’s some classic stuff you can’t hardly get away from if you want to make a ‘real’ compiler (like register allocation), but i’m gonna say even if you’re going in that direction, i wouldn’t recommend starting with a book. write the simple compiler and tackle the classic questions as they come to you. that’s just my opinion.

Unnecessary, maybe, but writing a compiler for a context free grammar with (f)lex and yacc/bison finite state machine is almost trivial. Of course there are more modern tools available for the job as well, e.g. antlr. Or just ask ChatGPT to write you a simple compiler.

Ugh. A masochist! Wouldn’t you rather play along with a maestro, than try to rediscover all the rules of symphonic composition on your own? I disagree with your approach, but to each their own. I think my suggestion (and that of the other poster) get you more value, faster.

In high school I could code in assembly for both the c64 and Apple iie as well as read Hex. At one stage I remember looking at a project to compile BASIC, but it was easier to just use Machine code.

And this was a great way to go at the time, especially since the C64 and Apple iie use the same microprocessor. But today, if you want to do a microcontroller project, it’s nice to not have to care about what the instruction set is, especially if you’re having to upgrade a project from 8-bit to 32-bit, or just adapt to a new architecture for supply chain reasons.

I’ve implemented a few language parsers with lex and yacc, and I’ve implemented a few with no tools and recursive descent. I found it way easier to develop and maintain the recursive descent parsers.

I was wondering about that. Seems like having to learn the “language” needed to write the specification for a code generator might be more challenging than writing the parser, and just raise the overall complexity of the design process.

What I’d suggest is write a translator to convert the language to C, then compile that. It’s a much quicker path to production. Later, if there’s a need, you can write a direct compiler to assembler for the language.

This is what happened when Pascal went out of favor – someone came up with a Pascal-to-C translator, and that was the last we heard of Pascal.

But I think a better way to go is the other direction.

C was a failed concept that just won’t go away, and yet I use it almost exclusively because it’s the best existing solution that is available for every architecture. But this has been a roadblock many times, like in the early days of microcontrollers, when C compilers for them were expensive, and because of the complexity of C, difficult to write.

I’d far rather have a simpler syntax that does little more than remove the need to know the instruction set of a given computer, rather than trying to read like English, and then make a translator that converts C to that lower-level language.

So a project like this has a great deal of appeal for me.

Pascal and C were so similar you could almost use the others compiler with a set of macros.

C++ and Object Pascal, the same. Just enough screwy differences to make things complicated.

Kind of like Java and C#. Right down to one being clearly better.

C a failed concept? No. Just bonkers wrong. Is sharp tool, don’t cut self. Do not attempt to perform bris with C.

The “C was a failed concept” was supposed to be taken as tongue-in-cheek, since the very next thing I said was that I use it almost exclusively.

I remember when IBM was trying to make something of APL, probably because theirs were the only printing terminals that could type the APL character set with just a switch of Selectric typeballs. But that’s beside the point. Point is, they were running magazine ads comparing APL code with contemporary compiled languages such as Fortran, by comparing the overall time it took to compile and run a Fortran (or COBOL, or whatever) program to the time it took to run the interpreted APL program. I guess they never imagined that pretty much everybody said, “well, yeah, if I wanted to run a program just one time”.

I sometimes like diving into C in Four Functions when I am in the mood for seeing how a simple compiler fits together. https://github.com/rswier/c4

How about N.Wirth’s Compiler book: https://github.com/tpn/pdfs/blob/master/Compiler%20Construction%20-%20Niklaus%20Wirth%20-%201996%20(CBEAll).pdf

Accessing this link gives a 404 error.

You just have to include the PDF part at the end that didn’t get linked: https://github.com/tpn/pdfs/blob/master/Compiler%20Construction%20-%20Niklaus%20Wirth%20-%201996%20(CBEAll).pdf

It looks like the () characters bork WordPress comments so: https://tinyurl.com/wirthbook

works if you include “.pdf” in the link. https://github.com/tpn/pdfs/blob/master/Compiler%20Construction%20-%20Niklaus%20Wirth%20-%201996%20(CBEAll).pdf

And of course, it didn’t do it there, either. Thanks again, WordPress.

Has anyone actually read the book that is sorta-being-advertised here? Coming from the Lisp/Forth world myself, I’m curious, but I want to make sure this is a solidly-written book before I plunk down $29+. One reviewer on Amazon quipped that the author’s DB book was more “a bunch of notes” than a “book.” (But as we know, Amazon reviews are never biased :-). I do want to believe. But this book is so new and self-published, that a second opinion would be very much welcome.

Example given in the linked article:

(def foo (n)

(if (le n 0)

(then 0)

(else (+ n (call foo (- n 1))))))

Instead, PLEASE:

(def foo (n)

(if (le n 0)

(then 0)

(else (+ n (call foo (- n 1))))

)

)

I shouldn’t have to rely on the parenthesis-matching features of a coding-focused text editor to read your code.

#facepalm# Stupid WordPress took out all of my indentation.

Those reading this far may be interested in this website/course/book. Excellent education in this general area, in my non-professional opinion. https://www.nand2tetris.org/

An excellent course/book, with just a couple of flaws: 1) it starts you off by teaching you about gates and having you write a simulator for logic to test your exercises, but that only works up to the point where you need to use flip-flops, at which point they have you switch to their simulator rather than losing students at the stage of trying to get flip-flop timing to work in simulation, and 2) the high-level language they use to teach how to build a compiler is not a language anybody would actually try to use in real life. Together, these are just too “hand-wavy” for my taste. If I’m going to spend a bunch of time writing a hardware simulator, where’s the fun if it won’t work for any practical circuit, and similar argument about the limited high-level language. I realize that trying to teach how to write, for example, a C compiler would bog things down because of all of the real-life complexity needed to handle things like different data type lengths, but this was where I gave up because I could see that I was being led down a “this is easy” path that wasn’t going to actually serve me.

I actually thought for a few seconds, about implementing the CPU they describe (and which I had designed the hardware for in the earlier chapters) using a CPLD, before realizing that I would end up with a slow, inefficient microprocessor, which could be seen by looking at some of the source code examples they provided in later chapters.

All in all, a great way of teaching the basic concepts at all levels from logic gates through application software, but not nearly enough to be able to do much work at any of these levels.