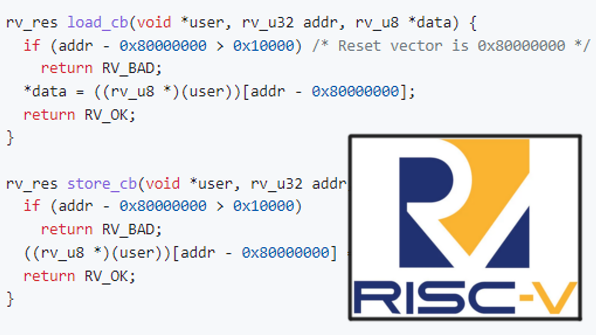

If you have ever wanted to implement a RISC-V CPU core in about 600 lines of C, you’re in luck! [mnurzia]’s rv project does exactly that, providing a simple two-function API.

Technically, it’s a user-level RV32IMC implementation in ANSI C. RISC-V comes in many possible flavors; this one is the 32-bit base integer instruction set (RV32I) with the multiplication and division extension (M) and the compressed instruction set extension (C).
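To make the RV32IMC label a bit more concrete, here is a minimal sketch of pulling the standard fields out of an instruction word. The bit positions follow the RISC-V base encoding; the helper names (`insn_opcode` and friends) are made up for illustration and are not taken from the rv project.

```c
#include <stdint.h>

/* Hypothetical helper names; only the bit positions come from the RISC-V spec. */

/* A 16-bit compressed (C extension) instruction has low two bits != 0b11. */
static inline int insn_is_compressed(uint32_t insn) { return (insn & 0x3u) != 0x3u; }

/* Fields shared by the 32-bit instruction formats. */
static inline uint32_t insn_opcode(uint32_t insn) { return insn & 0x7Fu; }
static inline uint32_t insn_rd(uint32_t insn)     { return (insn >> 7) & 0x1Fu; }
static inline uint32_t insn_funct3(uint32_t insn) { return (insn >> 12) & 0x7u; }
static inline uint32_t insn_rs1(uint32_t insn)    { return (insn >> 15) & 0x1Fu; }
static inline uint32_t insn_rs2(uint32_t insn)    { return (insn >> 20) & 0x1Fu; }
static inline uint32_t insn_funct7(uint32_t insn) { return insn >> 25; }

/* I-type immediate: bits 31:20, sign-extended from 12 bits. */
static inline int32_t insn_imm_i(uint32_t insn)
{
    uint32_t imm = insn >> 20;              /* 0..4095 */
    return (int32_t)(imm ^ 0x800u) - 0x800; /* flip the sign bit, then re-bias */
}
```

For example, `0xFFF10093` is `addi x1, x2, -1` (base I), and `0x027302B3` is `mul x5, x6, x7`, which the M extension marks with `funct7 == 1`.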

There’s a full instruction list and examples of use on the GitHub repository. As for readers wondering what something like RISC-V emulation might be good for, it happens to be the not-so-secret sauce to running Linux on an RP2040.

I think that’s really cool, albeit not very practical. 32-Bit is dead.

https://hackaday.com/2021/06/06/is-32-bits-really-dead/

RV64I would make more sense.

That being said, the existing emulation code likely could be completed.

For MCUs, 8/16/32 bit are all alive and very well.

More bits means more power and more RAM which also means more power!

For MCUs, you typically want LESS power. Or, more precisely, just enough power and not a bit more.

There are differences between µCs (microcontrollers) and SBCs (single board computers), though.

A µC has a single application or program running (ex. Arduino Uno, 8052-AH BASIC, PIC16F84), while an SBC usually is an all-round computer (ex. Raspberry Pi running Linux).

I made that statement because ARM and RISC-V are typically used for the second type (SBCs).

Of course, it’s also possible to misuse a RISC-V core as a µC.

The i8088 has been used as the heart of a quick, discretely built microcontroller.

IBM’s Professional Graphics Controller had used one as a graphic controller, for example.

There is the RV32E with half the number of registers because it is targeting the MCU space.

RISC-V wants it all!

RISC-V for a microcontroller is no more misuse than MIPS which is in the PIC32s, or the higher end ARM Mx cores…

The RP2040 in question is an ARM microcontroller. It’s very hard to come by non-ARM microcontrollers anymore.

ESP8266 and ESP32 run FreeRTOS.

Cool, and MP/M can technically run on an 8085, too.

@Joshua It’s actually a common use, and for completely reasonable purposes.

My soldering iron (Pinecil) uses a RISC-V, and it’s quite a bit more appropriate as a µC than the solution it replaces.

Instruction-set architectures aren’t “appropriate” or “inappropriate” for microcontrollers or SOCs, or discrete processors. A microcontroller, a SOC, and a discrete processor will simply use a different selection of peripherals, each suitable for its own role. The ISA itself is not much of a factor, unless some detail of the ISA creates particularly inappropriate requirements.

We’re a long ways away from the era of simple, bare address/data-bus processors coupled with as-necessary support chips… and your assertion of instruction-set appropriateness would have been challenging to defend even back then.

And how exactly is it a “misuse”, if the vast majority of actually deployed RISC-V silicon is MCUs?

Even a 4-bit BCD (decimal) computer is Turing complete and capable of emulating a 64-bit CPU. These architectures are technically all equivalent to each other. The main difference is power and performance. And generally it is tough for a 64-bit CPU to beat an 8-bit or 32-bit CPU when it comes down to very small transistor counts and dynamic power consumption.
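As a rough illustration of the equivalence point, a 64-bit addition can be built from nothing wider than 4-bit digits plus a carry. This sketch uses binary nibbles rather than BCD, and the function name is made up for the example.

```c
#include <stdint.h>

/* Add two 64-bit values one 4-bit digit at a time, the way a 4-bit ALU
 * with a carry flag would. Hypothetical name, for illustration only. */
uint64_t add64_by_nibbles(uint64_t a, uint64_t b)
{
    uint64_t result = 0;
    unsigned carry = 0;
    int i;

    for (i = 0; i < 16; i++) {                       /* 16 nibbles = 64 bits */
        unsigned da = (unsigned)(a >> (4 * i)) & 0xFu;
        unsigned db = (unsigned)(b >> (4 * i)) & 0xFu;
        unsigned sum = da + db + carry;              /* at most 15 + 15 + 1 = 31 */

        result |= (uint64_t)(sum & 0xFu) << (4 * i); /* keep the low 4 bits */
        carry = sum >> 4;                            /* carry into the next digit */
    }
    return result; /* the final carry is dropped, so this wraps modulo 2^64 */
}
```

It takes sixteen steps where a 64-bit ALU takes one, which is the power and performance trade-off in miniature.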

The problem is rather C/C++ and the use of 32-Bit pointers and source code compatibility.

Or let’s put it this way, a lot of existing source code requires modification to be 64-Bit “clean”.
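A small sketch of what “not 64-Bit clean” typically means in C: code that squeezes a pointer into a 32-bit integer. The function names are hypothetical; `uintptr_t` is the standard `<stdint.h>` type for this job.

```c
#include <stdint.h>

/* Not 64-bit clean: where pointers are 64 bits wide, this cast silently
 * drops the upper 32 bits of the address. */
uint32_t ptr_to_u32_truncating(const void *p)
{
    return (uint32_t)(uintptr_t)p;
}

/* 64-bit clean: uintptr_t is defined to be wide enough to hold a pointer. */
uintptr_t ptr_to_int_clean(const void *p)
{
    return (uintptr_t)p;
}

/* Returns nonzero if the pointer survives a round trip through the clean
 * conversion, which holds on both 32-bit and 64-bit targets. */
int roundtrips_clean(const void *p)
{
    return (const void *)ptr_to_int_clean(p) == p;
}
```

The truncating version happens to work on a 32-bit build, which is exactly why such code goes unnoticed until it is recompiled for a 64-bit target.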

There are a lot of potential problems with staying in a dead 32-Bit world.

It’s like the stubborn unwillingness of IT to get rid of IPv4, once and for all.

At this point, it’s backwards (or not future-proof) to specifically focus on developing new 32-Bit code.

Hence my separation/differentiation between microcontrollers and single board computers.

A simple µC doesn’t even need to have a clock, but a single board computer usually runs a full-fledged OS.

And that shouldn’t be 32-Bit anymore, because of 32-Bit limitations (year 2038 problem, to name an example).

https://en.wikipedia.org/wiki/Year_2038_problem

That’s my main concern. It doesn’t matter whether or not a coffee machine or any insignificant hackaday.com project uses a 4 or 64-Bit system.

It’s about keeping in mind that 32-Bit and everything that goes with it (32-Bit/4GB IPv4 address space) is a thing of the past. Ignoring this will cause trouble for the people that come after us.

That makes no sense. You can use a 64 bit type for time_t, as is already the default on my 32 bit arm gcc. You can also use IPv6 without problems on 32 bit systems. Or just keep using IPv4.
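For what it’s worth, the 2038 issue is about the width of the seconds counter, not the width of the CPU. A minimal sketch, with hypothetical function names, of checking the local `time_t` and of what a signed 32-bit counter does at its limit:

```c
#include <stdint.h>
#include <time.h>

/* Nonzero if this toolchain's time_t is at least 64 bits wide.
 * (A simplification: it only looks at the size of the type.) */
int time_t_is_64_bit(void)
{
    return sizeof(time_t) >= 8;
}

/* One tick of a signed 32-bit seconds counter. The addition is done in
 * unsigned arithmetic to avoid signed-overflow undefined behavior; the
 * conversion back wraps on the usual two's complement compilers. */
int32_t next_second_32(int32_t t)
{
    return (int32_t)((uint32_t)t + 1u);
}

/* The same tick with a 64-bit counter simply keeps counting. */
int64_t next_second_64(int64_t t)
{
    return t + 1;
}
```

`INT32_MAX` seconds after the Unix epoch is 2038-01-19 03:14:07 UTC. One tick later the 32-bit counter lands on `INT32_MIN`, which reads as a date in December 1901, while the 64-bit counter carries on regardless of the register width underneath.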

“That makes no sense. You can use a 64 bit type for time_t, as is already the default on my 32 bit arm gcc.”

Only for about 3 years. There was a recent update in 2020, I admit.

Anyway, what I was referring to was: “There is no universal solution for the Year 2038 problem. For example, in the C language, any change to the definition of the time_t data type would result in code-compatibility problems in any application in which date and time representations are dependent on the nature of the signed 32-bit time_t integer.”

The X32 article also says: “The x32 ABI was merged into the Linux kernel for the 3.4 release with support being added to the GNU C Library in version 2.16.[14]

In December 2018 there was discussion as to whether to deprecate the x32 ABI, which has not happened as of April 2023.[15]”

So it’s not gone, but developers already considered removal of 32-Bit pointer support etc. on x64. The days are numbered, so to say.

“You can also use IPv6 without problems on 32 bit systems. Or just keep using IPv4.”

Sure, you can do that on an 8-Bit microcontroller, too. Some have an IP core, even. That’s not what I meant, though, and I think you know that.

I was thinking about forward thinking here. IT holds on to IPv4, NAT and dual-protocol use. The latter is dangerous because of shadow networks, which appear when both IPv4 and IPv6 are enabled but only one is being maintained.

https://www.networkcomputing.com/networking/shadow-networks-unintended-ipv6-side-effect

You would have even worse code compatibility changes when going from a native 32 bit to 64 bit system.

Err. Don’t try to plan for fixes to problems like y2k when a real solution isn’t offered.

By the year 2038, I’ll ask my computer on my ring to patch and fix every clock in the world that needs fixing. Geeze.

64 bit is nice. 32 bit was nice. 16 bit was it. 8 bit was groovy. 4 bit was math here we go. It’s all just fun. There is no such thing as misuse of architecture since this stuff really exists so we can play with really cool finite state machines.

There are many, many reasons this is nonsense, but the biggest one is that 32 bit systems are not broken by design as you imply. IPv4 is not *only* around for legacy support, but because it and its many hacks have proven useful in modern contexts. Yes, NAT is good actually. Also, backwards compatibility without emulation enables whole slews of interesting things with real world use, like efficient x86 emulation on ARM in the current Apple ecosystem.

600 rows of unreadable spaghetti else-if code. Great success!

Funny, that’s the part I’ve found to be positive. The typical C/C++ coding style with the differently-spaced code blocks looks totally ugly to me.

This one here in the headlines fits nicely on a 80×25 standard terminal.

It’s not “unreadable spaghetti” because each else-if is only 1 or 2 lines, and it’s very easy to see the structure. Obviously, to understand the details you have to be familiar with the RISC-V instruction encoding, but that would apply to any other implementation.
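To show the general shape being described, here is a toy else-if dispatcher for a handful of instructions. It is a sketch written for this thread, not code from the rv project; `toy_cpu` and `toy_exec` are made-up names and only five instructions are handled.

```c
#include <stdint.h>

/* Hypothetical minimal CPU state: 32 integer registers and a program counter. */
typedef struct {
    uint32_t x[32];
    uint32_t pc;
} toy_cpu;

/* Execute one 32-bit instruction word.
 * Returns 0 on success, -1 if the instruction is not handled by this sketch. */
int toy_exec(toy_cpu *c, uint32_t i)
{
    uint32_t op = i & 0x7Fu, rd = (i >> 7) & 31u, f3 = (i >> 12) & 7u;
    uint32_t rs1 = (i >> 15) & 31u, rs2 = (i >> 20) & 31u, f7 = i >> 25;
    uint32_t a = c->x[rs1], b = c->x[rs2], r = 0;
    uint32_t imm = ((i >> 20) ^ 0x800u) - 0x800u; /* sign-extended I-type immediate */

    if (op == 0x13u && f3 == 0u)                     r = a + imm; /* addi */
    else if (op == 0x13u && f3 == 4u)                r = a ^ imm; /* xori */
    else if (op == 0x33u && f3 == 0u && f7 == 0x00u) r = a + b;   /* add */
    else if (op == 0x33u && f3 == 0u && f7 == 0x20u) r = a - b;   /* sub */
    else if (op == 0x33u && f3 == 0u && f7 == 0x01u) r = a * b;   /* mul (M extension) */
    else return -1;

    if (rd != 0u) c->x[rd] = r; /* x0 is hard-wired to zero */
    c->pc += 4;
    return 0;
}
```

Each branch is one line that pairs an encoding test with its effect, so the chain reads more like a table than like spaghetti.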

Have you seen emulator code before? MAME source is far less readable than this. I wrote a 6502 core which is much more readable and OOP-style but, consequently, is also far longer than 600 lines of code. Even so, you eventually have to have some kind of switch or if/then block to handle all the different instructions, regardless of implementation.

HaD been there, done that (in fewer lines and implemented kernel mode): https://hackaday.com/2022/12/07/a-tiny-risc-v-emulator-runs-linux-with-no-mmu-and-yes-it-runs-doom/

It might be useful to see a compare and contrast between these two implementations. The more the merrier after all.

Code looks clean and unbloated. At first glimpse it looked like it was doing direct memory access but then I saw that the functions “load_cb” and “store_cb” are there to do the job. These things (emulators) are hard to get right, especially when it comes to how the flags are affected. Congratulations to the author!
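For readers unfamiliar with the callback approach, the idea is that the core never dereferences guest addresses itself and instead asks the host through function pointers. The sketch below is an assumption about how such callbacks could look; the signatures, `toy_ram`, and `load_word` are hypothetical and not copied from the project.

```c
#include <stdint.h>

/* Hypothetical callback types: return 0 on success, nonzero on a bus fault. */
typedef int (*load_cb_t)(void *user, uint32_t addr, uint8_t *out);
typedef int (*store_cb_t)(void *user, uint32_t addr, uint8_t value);

/* Host-side RAM window mapped at guest address `base`. */
typedef struct {
    uint8_t *bytes;
    uint32_t base;
    uint32_t size;
} toy_ram;

int ram_load(void *user, uint32_t addr, uint8_t *out)
{
    toy_ram *m = (toy_ram *)user;
    if (addr < m->base || addr - m->base >= m->size) return 1; /* out of range */
    *out = m->bytes[addr - m->base];
    return 0;
}

int ram_store(void *user, uint32_t addr, uint8_t value)
{
    toy_ram *m = (toy_ram *)user;
    if (addr < m->base || addr - m->base >= m->size) return 1; /* out of range */
    m->bytes[addr - m->base] = value;
    return 0;
}

/* What a core might do to fetch a little-endian 32-bit word via the callback. */
int load_word(load_cb_t load, void *user, uint32_t addr, uint32_t *out)
{
    uint32_t v = 0;
    int k;
    for (k = 0; k < 4; k++) {
        uint8_t b;
        if (load(user, addr + (uint32_t)k, &b)) return 1; /* propagate the fault */
        v |= (uint32_t)b << (8 * k);
    }
    *out = v;
    return 0;
}
```

The host decides what lives at each address (RAM, a UART, nothing at all), which keeps the core itself free of any memory map.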

Author here. Thanks!

What % of the RISC-V processor are the instructions using?

Be ready for the obligatory rewrites in rust/zig/etc