Although ChatGPT generated a huge amount of hype around replacing white-collar workers completely when it was first released to the public, the general consensus now is that it won’t outright replace anyone yet, but rather people who know how to use it as a tool will replace those who don’t. Getting started with it isn’t hard, either, but you’ll need a project to work on to familiarize yourself with the tool. [Volos Projects] gave himself the challenge of writing a poker game using ChatGPT not as the opposing player but as a co-designer, in order to learn more about it as an assistant.

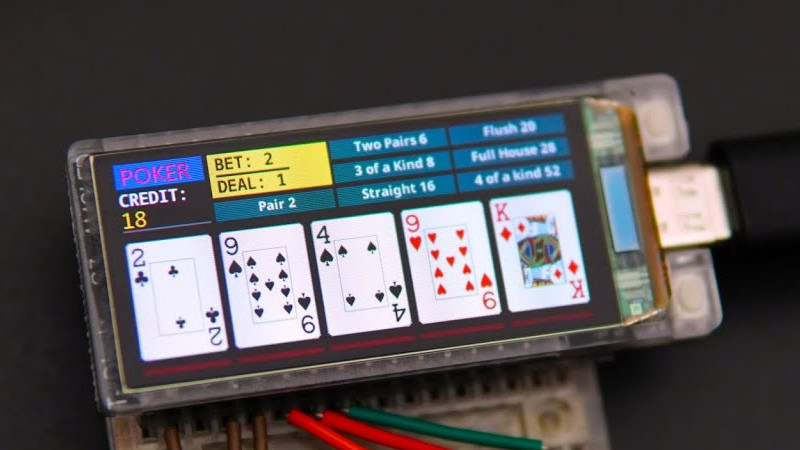

The poker game is built on an ESP32 board with a built-in AMOLED screen. Five buttons are wired to the microcontroller so the player can select which cards to discard and which to keep. The bet for each hand can be raised or lowered, much like on the tabletop poker machines often seen in bars and restaurants. ChatGPT was used to help design the code at each step of the way, first by describing the overall goal and then by building each function one by one: shuffling the deck, dealing the hand, and then replacing discarded cards with newly dealt ones.
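To give a feel for the kind of small, single-purpose functions this step-by-step approach produces, here is a minimal sketch of the shuffle, deal, and replace steps. It is not [Volos Projects]’ code: the function names, the two-character card strings, and the choice of Python are all assumptions made for illustration, and the five booleans simply stand in for the five discard buttons.

```python
import random

RANKS = "23456789TJQKA"
SUITS = "CDHS"


def new_deck():
    """Return an ordered 52-card deck as two-character strings such as 'AS'."""
    return [rank + suit for suit in SUITS for rank in RANKS]


def shuffle_deck(deck, rng=random):
    """Shuffle the deck in place with a Fisher-Yates pass and return it."""
    for i in range(len(deck) - 1, 0, -1):
        j = rng.randint(0, i)  # randint is inclusive on both ends
        deck[i], deck[j] = deck[j], deck[i]
    return deck


def deal_hand(deck, size=5):
    """Remove `size` cards from the end of the deck and return them as a hand."""
    return [deck.pop() for _ in range(size)]


def replace_cards(deck, hand, discard):
    """Return a new hand in which every position flagged in `discard` is redrawn."""
    return [deck.pop() if drop else card for card, drop in zip(hand, discard)]


if __name__ == "__main__":
    deck = shuffle_deck(new_deck())
    hand = deal_hand(deck)
    print("Dealt:", hand)
    # Hypothetical button state: discard the first and fourth cards.
    hand = replace_cards(deck, hand, [True, False, False, True, False])
    print("After draw:", hand)
    print("Cards left in deck:", len(deck))
```

Keeping each step in its own function mirrors the way the project was prompted: each piece can be requested, read, and checked on its own before moving on to the next.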

For anyone who hasn’t yet explored using ChatGPT to help design their programming projects, this effort goes a long way toward showing just how useful a tool it can be. More complex tasks do take a little knowledge on the part of the user, because ChatGPT can often turn out nonsense or factually inaccurate information, but at least in a programming environment you’ll generally find out quickly when that happens. It’s not just a useful tool for writing programs, either. It can accomplish a lot of ancillary tasks related to programming as well, even if it’s not writing the code directly.

Thanks to [Peter] for the tip!

Beautiful build. Slap a 3D-printed case on it and you have an advanced handheld video poker game on the cheap.

Before asking Chat GPT to write some C code, I asked it to optimize some existing working C code with no known errors. It eliminated about 30% of the code. A quick visual inspection revealed that the code would no longer work, and it failed to compile.

Yeah I also don’t recall it being particularly useful the handful of times I’ve tested on some c and c++ code. And before someone comes out saying “have you tried our savior gpt4? praise openai”, yes I did and some of the pitfalls were still there (incorrectly allocated/freed memory, filter coefficients completely wrong, returning arrays allocated on the stack, wrong regex past any babby level). The only time it has been useful for code was to make up some boilerplate code for a circular buffer in c++, which had to eventually be adapted for actual use.

Also @Brian

>general consensus now is that it won’t outright replace anyone yet, but rather people who know how to use it as a tool will replace those who don’t

Source on that “general consensus” or is it just good old genAI FUD to fill up a paragraph? Because if so, shame on you.

Well… They say: you learn from your mistakes. But if you’re so experienced that you hardly make any mistakes anymore, how are you going to learn then?

We now have the answer: learn from ChatGPT’s mistakes!

;)

My very own senile ramblings on the subject, all cleaned-up by ChatGPT (she did say I make some valid points, so there):

I’m intrigued by the capabilities of AI, particularly in assisting with coding questions. It’s impressive how AI can provide precise answers for known API queries, saving us from the hassle of navigating different platforms for solutions.

However, I have two concerns:

Attribution: When using code generated by AI, how do we handle attribution to the original authors? Is this code free for anyone to use?

Accuracy: While AI can offer solutions, it’s crucial to be cautious. Sometimes, it’s hard to distinguish between valid solutions and “hallucinations” that may not work in practice. It won’t replace a skilled programmer but could replace one who doesn’t use these tools wisely.

AI’s potential is clear, but we need to navigate these concerns as we integrate it into our development processes.

>Attribution […]

Well, GPTs learn the same way humans do, just more efficiently, though the remixing is still lacking.

From what I gather, we are, to put it bluntly, meme-machines, perpetual copycats. We see something, connect it with its context, and use it. For example, if you are a writer you most certainly own more books than anything else and have most certainly read them all. When you write your own story you are influenced by what you have read; your style is a mix of the styles you read with some random new stuff sprinkled on top. If I wrote a story, it would be heavily influenced by folks like Stephen King, as I read a bunch of his books in my youth, especially The Stand, which I devoured.

The same goes for programming: most programmers have read tons of source code by other folks and are just remixing what they saw, whether that means building something from the fundamental blocks you learned at the start or remixing that fancy integer-to-ASCII function you read some time ago.

Yes, some time ago someone came up with how compression works, but I bet if you dig deep enough you can trace where all the ideas came from; they just merged them together.

It all started when some primate before us found out that you can use that fancy thing called Fire for practical uses and to warm oneself and everything evolved from there. Monkey see, monkey do, monkey combines.

Chat GPT, at least at this time, does not seem to be aware of when it’s hallucinating or fabricating code that won’t work, and it will always be subject to the Halting problem. The best it will ever do is assemble a program from a library of validated functional blocks with well-defined interfaces, limits, and domains, or review code for errors (Chat GPT Super Lint). That may be good enough in a lot of cases. If Chat GPT could correctly write a non-trivial program from scratch, it would have solved the equivalent of the Halting problem, which is undecidable. And the definition or specification used to tell Chat GPT what the program should do would be as complex as the eventual program, and similarly not provably correct. So the problem of telling Chat GPT how to write a correct program effectively becomes a new programming language or grammar, Chat GPT++.🤣🤣

I’m not a Python programmer. But I needed a few hundred XML files to be processed, so I asked Chat-GPT4 to write me some Python code to parse XML and export stuff.

It took more than fifty iterations to arrive at code that worked as expected. 50+ back-and-forth exchanges with Chat-GPT4. It made a lot of mistakes on the way, including code that absolutely did not work. I had to persist in refining its “reasoning” and pushing it to do better.

So as a coding assistant, it has its limitations, which you need to discover as you work with it. Just as with a human being, you need to tell Chat-GPT when you think its reasoning is poor, when the code doesn’t compile/interpret, when the code does not deliver results as requested, etc. Unlike a human being, it won’t get upset when you show it how wrong it is, when you tell it you are disappointed in its work, when you tell it how frustrated you are that it has forgotten what you told it earlier…

But to help me solve a problem using a programming language I don’t know – great tool!

You can get good results with chatGPT for some tasks.

You can get bad results from chatGPT for some tasks.

You can get both for some tasks.

But you’re supposed to be the smart human pushing chatGPT to give you what you need.

I got chatGPT to write Python code to calculate values for a magnetic loop antenna; its output wasn’t identical to that of a commonly used web calculator for the same job, but it was close enough that I couldn’t show a difference compared to the real world. Was it right the first time? Nope. Clumsy mistakes made it not run; careful prodding got chatGPT to fix those issues and move the code forward.

I’ve had to prompt external contractors developing code for work more than that, even after handing them better specifications than what I gave chatGPT.

Focused application of chatGPT can be impressive. Many of the naysayers were looking to say “no, this won’t work”, expecting perfection on the first attempt.

AI has been in use so far by: the cops, the Pentagon & contractors, the ‘intelligence community’, Google, Microsoft, Adobe...

Oh and that OpenAI CEO went all creepy with his big iris scanning globe project too.

Makes you raise an eyebrow perhaps.

There’s Topaz though, they seem more friendly in purpose.. so far.