If you’ve made a robot or played around with electronics before, you might have used a time-of-flight laser distance sensor. More modern ones detect not just the first reflection, but analyze subsequent reflections, or reflections that come in from different angles, to infer even more about what they’re looking at. These transient sensors usually aren’t the most accurate thing in the world, but four people from the University of Wisconsin managed to get far more out of one using some clever math. (Video, embedded below.)

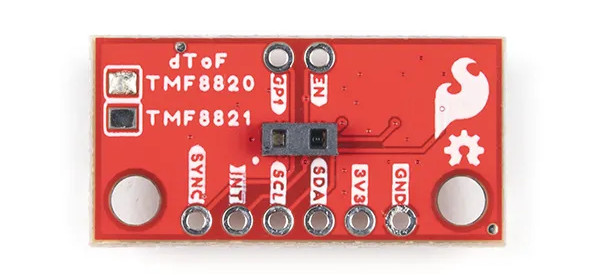

The transient sensor under investigation here sends out a pulse of light and records what it receives from nine angles in individual histograms. It then analyzes these histograms to make a rough estimate of the distance in each direction. But the sensor won’t tell us how it does this, and it isn’t very accurate either. The team shows us how you can easily get a more accurate distance measurement, and goes on to show how the nine distance estimates can even be used to distinguish the geometry the sensor is looking at, albeit to a limited extent. But they didn’t stop there: it can even detect the albedo of the material it’s looking at, which can be used to tell materials apart!
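As a rough illustration of the per-direction estimate, here is a minimal Python sketch that takes the fullest bin of each of the nine histograms and converts it to a distance with d = c·t/2 (the light goes out and back). It assumes the nine zones form a 3×3 grid in row-major order; the bin width `BIN_WIDTH_S` and all function names are hypothetical, not values or APIs from the sensor or the paper, whose on-chip processing is undocumented.

```python
from typing import List, Sequence

C = 299_792_458.0        # speed of light in vacuum, m/s
BIN_WIDTH_S = 250e-12    # hypothetical histogram bin width (250 ps), not a datasheet value


def peak_bin(histogram: Sequence[int]) -> int:
    """Index of the bin with the most photon counts (the first one on ties)."""
    return max(range(len(histogram)), key=histogram.__getitem__)


def bin_to_distance_m(index: int, bin_width_s: float = BIN_WIDTH_S) -> float:
    """Convert a bin index to a one-way distance, using the bin centre.

    The light travels out and back, so the distance is half the round-trip path.
    """
    round_trip_s = (index + 0.5) * bin_width_s
    return C * round_trip_s / 2.0


def zone_distances(histograms: Sequence[Sequence[int]]) -> List[List[float]]:
    """Map nine histograms (3x3 zones, row-major order) to a 3x3 grid of distances."""
    if len(histograms) != 9:
        raise ValueError("expected nine histograms, one per zone")
    flat = [bin_to_distance_m(peak_bin(h)) for h in histograms]
    return [flat[row * 3:(row + 1) * 3] for row in range(3)]


if __name__ == "__main__":
    # Made-up data: a surface tilted so the echo arrives one bin later per row
    histograms = []
    for row in range(3):
        for _ in range(3):
            h = [1] * 16            # flat background counts
            h[3 + row] += 20        # echo bin for this row
            histograms.append(h)
    for row in zone_distances(histograms):
        print(["%.1f mm" % (d * 1e3) for d in row])
```

Picking the single fullest bin limits the estimate to one bin width; the distances in each row of the example differ by one bin, which is how a tilted surface would show up across the zones.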

Overall, a great hack and we think this technology has potential – despite requiring more processing power.

I wonder if precision and accuracy of such sensors could be raised to the level that would allow them to be used for 3D printer bed calibration.

Speed of light is about 300.000 m/s. So for 1/10 mm resolution we would need to measure at least 0,1 ns = 100 ps, and better another factor of 10 on top of that to be safe.

https://www.testandmeasurementtips.com/measuring-picoseconds-without-breaking-the-bank-faq/

That’s 300 000 KILOmeters/sec. Light travels 1/10 mm in about a third of a picosecond. Whatever you’re trying to do, measuring time of flight to that resolution is probably harder and more expensive.
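To put numbers on this, the short Python sketch below computes the flight time for a given distance. One caveat: a time-of-flight sensor times the round trip, so a 0.1 mm change in target distance changes the measured time by about 0.67 ps, twice the one-way figure. The function names are illustrative only.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def one_way_time_s(distance_m: float) -> float:
    """Time for light to cover distance_m once."""
    return distance_m / C


def round_trip_time_s(distance_m: float) -> float:
    """Time for light to reach a target distance_m away and come back."""
    return 2.0 * distance_m / C


if __name__ == "__main__":
    step_m = 0.1e-3  # 1/10 mm
    print(f"one way:    {one_way_time_s(step_m) * 1e12:.3f} ps")    # one way:    0.334 ps
    print(f"round trip: {round_trip_time_s(step_m) * 1e12:.3f} ps")  # round trip: 0.667 ps
```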

Wow. /me stupid. Sorry.

Just a note that I still suffer from that stupid error. So embarrassing.

Sometimes making a mistake is how we learn. So just take this as a learning opportunity.

So the trick to measuring distance to high accuracy with light involves not just timing the reflection, but measuring the phase shift of the reflection. Typically this involves recombining the reflected laser light with a portion that was split off and sent along a short path of known length. The amount of combining/cancelling of the two beams tells you how much phase difference there is, and hence what fraction of a wavelength the distance difference is. Visible light has a wavelength of roughly 400 to 750 nanometers, so these are very small distances.
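The relationship between phase and distance can be sketched in a few lines of Python. This assumes an idealized two-beam interferometer with a known phase reading; the 633 nm wavelength is only an example (a common helium-neon laser line) and the function names are made up for illustration. For light reflected off the target, any extra distance is covered twice, so the target position is pinned down only modulo half a wavelength.

```python
import math

WAVELENGTH_M = 633e-9  # example wavelength (helium-neon laser red line)


def combined_intensity(phase_rad: float, i_ref: float = 1.0, i_meas: float = 1.0) -> float:
    """Two-beam interference: brightest in phase, darkest half a cycle out of phase."""
    return i_ref + i_meas + 2.0 * math.sqrt(i_ref * i_meas) * math.cos(phase_rad)


def path_difference_fraction_m(phase_rad: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Optical path difference modulo one wavelength, from the phase shift."""
    wrapped = phase_rad % (2.0 * math.pi)
    return wrapped / (2.0 * math.pi) * wavelength_m


def target_offset_fraction_m(phase_rad: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Target position modulo half a wavelength: a reflected beam covers
    any extra distance twice, so the path difference is twice the offset."""
    return path_difference_fraction_m(phase_rad, wavelength_m) / 2.0


if __name__ == "__main__":
    phase = math.pi / 2  # a quarter-cycle phase shift
    print(f"intensity:       {combined_intensity(phase):.2f}")
    print(f"path difference: {path_difference_fraction_m(phase) * 1e9:.2f} nm")
    print(f"target offset:   {target_offset_fraction_m(phase) * 1e9:.2f} nm")
```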

Unfortunately it can’t tell you how many whole wavelengths of distance there are in addition to that fraction. However, as you vary the distance the interference will “pulse”, and the number of pulses tells you how many wavelengths the distance has changed by. So it can’t give you absolute distance, but it can tell you the change in distance to very high accuracy.
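Fringe counting can be simulated the same way. The sketch below works under idealized assumptions (noise-free signal, target moving in one direction only, 633 nm example wavelength, hypothetical helper names): it counts upward crossings of the mid-level intensity and converts the count to target motion. Because the beam is reflected, one fringe corresponds to half a wavelength of target movement. Practical fringe counters typically use two signals in quadrature so they can also tell which way the target moved.

```python
import math
from typing import List, Sequence


def simulate_intensity(displacement_m: float, wavelength_m: float,
                       steps: int = 10_000) -> List[float]:
    """Interference intensity sampled while the target moves from 0 to displacement_m."""
    samples = []
    for k in range(steps + 1):
        x = displacement_m * k / steps
        phase = 2.0 * math.pi * (2.0 * x) / wavelength_m  # round trip doubles the path
        samples.append(1.0 + math.cos(phase))
    return samples


def count_fringes(samples: Sequence[float]) -> int:
    """Count upward crossings of the mid-level intensity, one per bright/dark cycle."""
    mid = (max(samples) + min(samples)) / 2.0
    return sum(1 for prev, cur in zip(samples, samples[1:]) if prev < mid <= cur)


def displacement_from_fringes(fringes: int, wavelength_m: float) -> float:
    """One fringe = one wavelength of path = half a wavelength of target motion."""
    return fringes * wavelength_m / 2.0


if __name__ == "__main__":
    wavelength = 633e-9                      # example wavelength
    moved = 10 * wavelength / 2              # target moves ten half-wavelengths
    n = count_fringes(simulate_intensity(moved, wavelength))
    print(n, displacement_from_fringes(n, wavelength))
```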

There are several ways light can be used to measure distance. Putting triangulation aside, there are Time of Flight and Phase Shift. (Several years ago) I found it frustratingly difficult to dig out which one is actually used in these sensors. If it is Phase Shift, then arguments centered on fractions of a nanosecond may not be as relevant.
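For the phase-shift approach, a common scheme is continuous-wave (indirect) time of flight: the light is intensity-modulated and the sensor measures the phase of the returning modulation rather than timestamping a pulse. The sketch below uses the standard relation d = c·φ/(4π·f_mod); the 20 MHz modulation frequency is a hypothetical example, and nothing here says which scheme any particular sensor uses. Note that 1° of phase at 20 MHz still corresponds to about 21 mm, so the need for fine resolution moves from picosecond timing to very fine phase measurement rather than disappearing.

```python
import math

C = 299_792_458.0   # speed of light, m/s
F_MOD_HZ = 20e6     # hypothetical modulation frequency (20 MHz)


def distance_from_phase_m(phase_rad: float, f_mod_hz: float = F_MOD_HZ) -> float:
    """Continuous-wave ToF: the round trip delays the modulation envelope by
    2d/c, i.e. a phase of 2*pi*f_mod*2d/c, so d = c*phase / (4*pi*f_mod).
    The phase is wrapped to [0, 2*pi), so the result is modulo the ambiguity range."""
    wrapped = phase_rad % (2 * math.pi)
    return C * wrapped / (4 * math.pi * f_mod_hz)


def unambiguous_range_m(f_mod_hz: float = F_MOD_HZ) -> float:
    """Distance at which the measured phase wraps around to zero."""
    return C / (2 * f_mod_hz)


if __name__ == "__main__":
    print(f"ambiguity range: {unambiguous_range_m():.3f} m")                            # 7.495 m
    print(f"1 degree of phase: {distance_from_phase_m(math.radians(1)) * 1e3:.1f} mm")  # 20.8 mm
```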

The TMF8801 specification lists a 22mm to 2500mm range w/a +/- 5% accuracy. So, at 22mm x 5% that’s about 1mm. Not enough for bed calibration. But who knows. This hack is about messing w/the OEM’s software. A 3D printer may be a much different application than the original purposes of the sensor. For instance, in a 3D printer the sensor can be held steady for long periods over the same spot.
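Taking the quoted ±5% figure at face value, a quick Python check shows how the error band scales with distance, and the largest distance at which a purely proportional error would stay under the 0.1 mm a bed probe needs. The constants are the numbers quoted in this comment, not values checked against the datasheet, and the function names are illustrative.

```python
RANGE_MIN_MM = 22.0    # range figures as quoted in the comment above
RANGE_MAX_MM = 2500.0
ACCURACY = 0.05        # +/- 5 % of the measured distance


def error_band_mm(distance_mm: float, accuracy: float = ACCURACY) -> float:
    """Half-width of the +/- error band at a given distance."""
    if not RANGE_MIN_MM <= distance_mm <= RANGE_MAX_MM:
        raise ValueError("distance outside the quoted operating range")
    return distance_mm * accuracy


def max_distance_for_tolerance_mm(tolerance_mm: float, accuracy: float = ACCURACY) -> float:
    """Largest distance at which a purely proportional error stays within tolerance."""
    return tolerance_mm / accuracy


if __name__ == "__main__":
    for d in (22.0, 200.0, 2500.0):
        print(f"{d:7.1f} mm -> +/- {error_band_mm(d):.1f} mm")
    # 0.1 mm / 5 % = 2 mm, which is below the 22 mm minimum range
    print(max_distance_for_tolerance_mm(0.1))
```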

And it could be mounted on the gantry, which by design moves in XYZ. I hope somebody cracks that at some point.

That’s what I was thinking. The resolution of 0.1mm would be enough for the purpose. Although the sensor itself is far off, we have a lot of information in our hands to calibrate/make up for the error. And with some techniques like oversampling suggested by st2000 or some other mathematical magic maybe it would be possible.
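Here is a quick simulation of what oversampling can and cannot do, assuming each reading is the true distance plus a fixed bias plus independent Gaussian noise. The 0.8 mm bias and 2 mm noise are made-up illustration values, and the function names are hypothetical. Averaging N readings shrinks the random scatter by roughly √N but leaves the bias untouched; removing that needs calibration against a known reference.

```python
import math
import random
import statistics


def simulated_reading_mm(true_mm: float, bias_mm: float, noise_sd_mm: float,
                         rng: random.Random) -> float:
    """One hypothetical sensor reading: truth + systematic bias + random noise."""
    return true_mm + bias_mm + rng.gauss(0.0, noise_sd_mm)


def averaged_reading_mm(true_mm: float, bias_mm: float, noise_sd_mm: float,
                        n: int, rng: random.Random) -> float:
    """Mean of n readings taken while the sensor is held over the same spot."""
    return statistics.fmean(
        simulated_reading_mm(true_mm, bias_mm, noise_sd_mm, rng) for _ in range(n)
    )


def expected_sd_of_mean(noise_sd_mm: float, n: int) -> float:
    """Standard deviation of the average of n independent readings."""
    return noise_sd_mm / math.sqrt(n)


if __name__ == "__main__":
    rng = random.Random(42)
    means = [averaged_reading_mm(50.0, 0.8, 2.0, 400, rng) for _ in range(200)]
    print(f"mean of averages: {statistics.fmean(means):.2f} mm (true value 50.00 mm)")
    print(f"scatter: {statistics.stdev(means):.3f} mm, "
          f"expected {expected_sd_of_mean(2.0, 400):.3f} mm")
```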

If the print head is a known height, why not just mount the ToF sensor on top of the print head looking down at the bed? This increases the distance the light needs to travel and should decrease the needed measurement resolution… right?

UngaBunga; Light go further so computer dont have to be as fast.

(UngaBunga = simple man’s TLDR)

it would require the known height of the print head to be rather accurate… right?

ToF, Math, Speeds that fast make my head hurt. Just looking at it from a detached perspective.

High-speed printers are starting to put inertial navigation (accelerometers and gyros) on both the print head and frame, giving accurate relative positioning, including for things like vibration and table bumps. Adding more sensors does mean you can combine them using something like a Kalman filter for even more accurate measurement, but you get diminishing returns, and at some point the increase in accuracy is not worth the extra sensor’s cost.
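For a quantity that isn’t changing, the measurement-update step of a Kalman filter reduces to an inverse-variance weighted average, which makes the diminishing returns easy to see. The sketch below (plain Python, hypothetical function names, all sensors assumed unbiased and independent) shows that both forms agree, and that N equally good sensors only cut the error by √N: going from one sensor to four halves the error, but halving it again takes sixteen.

```python
import math
from typing import List, Tuple


def fuse(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of (value, std_dev) pairs.

    Returns (fused_value, fused_std_dev). Assumes independent, unbiased sensors.
    """
    weights = [1.0 / (sd * sd) for _, sd in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, math.sqrt(1.0 / total)


def kalman_update(estimate: float, variance: float,
                  measurement: float, meas_variance: float) -> Tuple[float, float]:
    """One scalar Kalman measurement update for a constant state (no process noise)."""
    gain = variance / (variance + meas_variance)
    return estimate + gain * (measurement - estimate), (1.0 - gain) * variance


if __name__ == "__main__":
    readings = [(10.0, 1.0), (13.0, 2.0)]
    print(fuse(readings))
    est, var = readings[0][0], readings[0][1] ** 2
    for value, sd in readings[1:]:
        est, var = kalman_update(est, var, value, sd * sd)
    print(est, math.sqrt(var))
    for n in (1, 2, 4, 16):
        print(n, fuse([(0.0, 1.0)] * n)[1])   # fused error shrinks as 1/sqrt(n)
```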

Oh, and to answer @Pr0toc01 below, increasing distance doesn’t increase resolution. Increasing distance does increase the reflection time, but resolution depends on how finely you can measure that time. As explained above by @-jeffB, even measuring time to 1 picosecond accuracy only gives you about 0.3mm distance accuracy. 1ps intervals correspond to 1 THz, or 1000GHz. So far no transistor switches that fast (but they are getting close), so you can’t use a normal digital clock to measure time to that accuracy.
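A small Python sketch makes the point concrete: if the round-trip time is read with a timer of fixed resolution, the distance step is c·Δt/2 whatever the target distance, so moving the sensor further away does not help. Because the light goes out and back, 1 ps of timing resolution corresponds to roughly 0.15 mm of target distance (0.3 mm is the corresponding length of light path). The ideal truncating timer here is an assumption, not a model of any real time-to-digital converter, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def distance_step_m(tick_s: float) -> float:
    """Smallest distance change a round-trip timer with resolution tick_s can resolve."""
    return C * tick_s / 2.0


def quantized_distance_m(true_m: float, tick_s: float) -> float:
    """Distance reported by an ideal timer that truncates the round trip to whole ticks."""
    ticks = math.floor(2.0 * true_m / C / tick_s)
    return ticks * tick_s * C / 2.0


if __name__ == "__main__":
    tick = 1e-12  # 1 ps timer resolution
    print(f"step: {distance_step_m(tick) * 1e3:.3f} mm")
    for d in (0.05, 0.5):  # 50 mm and 500 mm targets
        err_mm = (d - quantized_distance_m(d, tick)) * 1e3
        print(f"{d * 1e3:.0f} mm target: quantization error {err_mm:.3f} mm")
```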

Wrong calculation about accuracy. Basically, you should calculate 5% of the object distance (it could be off by 10~15mm within a 200mm range). With this method, the accuracy has improved from dozens of millimeters to a few millimeters.

I would guess the proprietary processing of the “transient histogram” is a simple correlation with the transmitted histogram. Maybe the emitted signal is meant to have the characteristics of noise, which is one of the optimal signals for correlation, the other being a chirp. (I don’t know what ‘histogram’ means in the sense used in the video.)
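For readers unfamiliar with the idea, a correlation (matched-filter) delay estimate looks roughly like this in plain Python. It only illustrates the guess above, not the sensor’s documented processing; the emitted pulse shape `[1, 3, 1]`, the flat background, and the function names are made-up test choices.

```python
from typing import List, Sequence


def cross_correlate(received: Sequence[float], template: Sequence[float]) -> List[float]:
    """Score every lag at which the template fits inside the received signal."""
    n, m = len(received), len(template)
    return [
        sum(received[lag + k] * template[k] for k in range(m))
        for lag in range(n - m + 1)
    ]


def best_lag(received: Sequence[float], template: Sequence[float]) -> int:
    """Lag (in bins) where the received histogram best matches the emitted shape."""
    scores = cross_correlate(received, template)
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    template = [1.0, 3.0, 1.0]        # hypothetical emitted pulse shape
    received = [1.0] * 32             # flat ambient background
    for k, amplitude in enumerate(template):
        received[10 + k] += 5.0 * amplitude   # echo starting at bin 10
    print(best_lag(received, template))       # 10
```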

The resolution for a system like this is related to the length of the signals that will be correlated and is arbitrarily precise, is it not?
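Precision finer than one bin is indeed possible, though in practice noise (photon counting statistics, timing jitter) sets the limit, so it is not arbitrarily precise. A common trick, shown here as a hedged sketch rather than what this sensor or paper does, is to fit a parabola through the highest bin and its two neighbours. The pulse in the example is made-up, noise-free data.

```python
import math
from typing import Sequence


def parabolic_peak(values: Sequence[float]) -> float:
    """Sub-bin peak position from a parabola through the top bin and its neighbours."""
    i = max(range(len(values)), key=values.__getitem__)
    if i == 0 or i == len(values) - 1:
        return float(i)  # no neighbour on one side; fall back to the bin index
    left, mid, right = values[i - 1], values[i], values[i + 1]
    denom = left - 2.0 * mid + right
    if denom == 0:
        return float(i)  # flat top: no curvature to fit
    return i + 0.5 * (left - right) / denom


if __name__ == "__main__":
    # Hypothetical noise-free pulse centred at 7.3 bins, width 1.5 bins
    pulse = [math.exp(-((x - 7.3) ** 2) / (2 * 1.5 ** 2)) for x in range(16)]
    print(f"{parabolic_peak(pulse):.2f}")
```

On a Gaussian-shaped pulse the parabola fit carries a small bias (a few hundredths of a bin in this example), which is one reason real estimators often fit the known pulse shape instead.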

No guess required: Answer is in the first 25 seconds of the video.

(and you guessed wrong)

Read the linked paper for more info.

I saw it before but did not bore down through the jargon. Arrival-Time Histogram might be a better description. There is a reference for the laser or “emission histogram” that might be used to eliminate data outside some range. But yes, if there is truly single photon detection then correlation makes no sense.
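In the arrival-time sense, a histogram is just a count of detected photons per time bin after each laser pulse, accumulated over many pulses. A minimal Python sketch, with hypothetical names, bin settings, and made-up detection times:

```python
from itertools import chain
from typing import Iterable, List


def arrival_time_histogram(timestamps_s: Iterable[float], bin_width_s: float,
                           n_bins: int) -> List[int]:
    """Count photon detections per time bin, measured from the laser pulse.

    Detections outside [0, n_bins * bin_width_s) are ignored.
    """
    counts = [0] * n_bins
    for t in timestamps_s:
        index = int(t // bin_width_s)
        if 0 <= index < n_bins:
            counts[index] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical detections from three pulses (seconds after each pulse)
    pulses = [[0.1e-9, 1.2e-9], [1.3e-9], [1.25e-9, 0.3e-9, 5e-9]]
    print(arrival_time_histogram(chain.from_iterable(pulses), 0.5e-9, 4))  # [2, 0, 3, 0]
```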

Does anyone have a link to something that’s not a video? (Like, y’know, searchable text, equations, source code?) Video seems a poor medium for anything other than an accompanying demo, and for those of us who are time- or bandwidth-constrained, it asks a lot on faith that it’ll be worth both when there’s no more suitable media available alongside the video. (I might watch a video *after* reading the documentation so I know what I’m looking for and that it’s worthwhile, but non-trivial projects with just video are not appealing to many of us old fogeys.)

Follow the link to the video: A link to the project website and thence to a thorough paper is in the youtube video description.

I agree with the sentiment (if not the tone) of your comment, but this video is actually a useful digest of the paper. Worth watching.