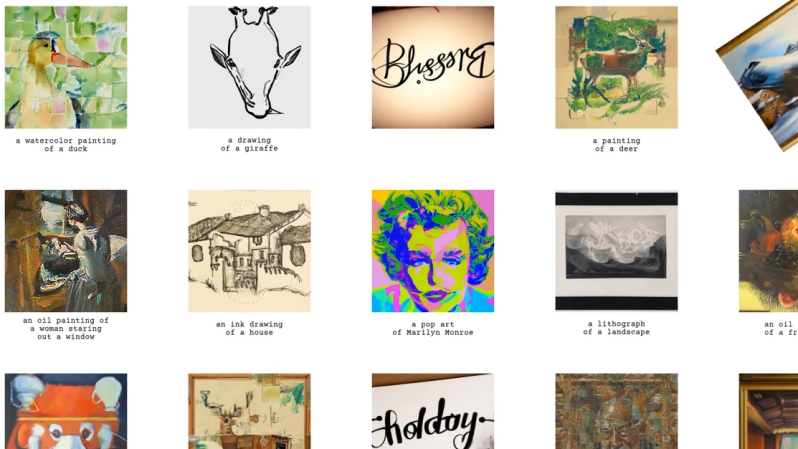

[Daniel Geng] and others have an interesting system of generating multi-view optical illusions, or visual anagrams. Such images have more than one “correct” view and visual interpretation.

What’s more, there are quite a few different methods on display: 90 degree flips and other (orthogonal) image rotations, color inversions, jigsaw permutations, and more. The project page has a generous number of examples, so go check them out!

What’s more, there are quite a few different methods on display: 90 degree flips and other (orthogonal) image rotations, color inversions, jigsaw permutations, and more. The project page has a generous number of examples, so go check them out!

The team’s method uses pre-trained diffusion models — more commonly known as the secret sauce inside image-generating AIs — to evaluate and work to combine the differences between different images, and try to combine and apply it in a way that results in the model generating a good visual result. While conceptually straightforward, this process wasn’t really something that could work without diffusion models driven by modern machine learning techniques.

The visual_anagrams GitHub repository has code and the research paper goes into details on implementation, limitations, and gives guidance on obtaining good results. Image generation is just one of the rapidly-evolving aspects of recent innovations, and it’s always interesting to see unusual applications like this one.

Following an example in an early 1980s magazine, (Omni?), I made drawing of my name, and when it was rotated 180 degrees it was my girlfriend’s name.

Too bad we broke 💔 up…