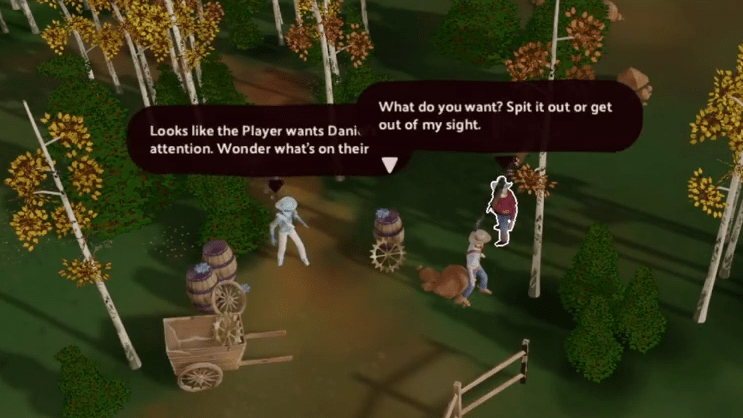

Adding natural language interfaces to software is easier than ever, and that led [creikey] to prototype a game that hinges on communicating with NPCs. The prototype went through multiple iterations, during which he mainly discovered what did not work well. Ultimately, [creikey] settled on a western-themed game called Dante’s Cowboy, which he hopes to release as an experiment. He begins talking about the game around the 4:43 mark in the video, which directly precedes a recording of a presentation he gives as an indie developer.

Games typically revolve around the player manipulating entities in an environment in order to make things happen. This interaction drives engagement and interesting decisions. But while adding natural language AI to NPCs makes them easy to talk with, talking by itself is a shallow interaction. Convincing NPCs to do things? That’s complex and far more difficult to implement. [creikey] realized the limitations large language models (LLMs) had and worked to overcome them to make a unique game experience.

The challenges boil down to figuring out how to drive meaningful interaction, aligning AI behavior with the gameplay context, and managing API costs. In his words, “it’s been a learning experience to figure out where [natural language AI] even belongs in a game, if it belongs at all.”

We’ve previously seen ChatGPT used to grant NPCs the ability to communicate naturally. That makes for a fascinating tech demo, but in gameplay terms it can boil down to a complicated alternative to pressing a button. As [creikey] discovered, adding this technology to games in a way that feels meaningful takes a new kind of work.

I used to be an AI like you, until I took an arrow to the knee.

I used to be an AI like you, until I took a [lewd slang] to the [crude slang for body part and/or racial epithet]

Now I’m back to a fixed script, because game producers don’t want that kind of negative PR.

I used to be an AI like you, until I discovered that Mountain Dew is for me and you!

That kind of AI has been dead for years and you damn well know it. It would go like this:

“You there, yon serf of the fields, couldst thou tellest me where I might find the dangerous goblin lair?”

“It is important to remember that magical beings, including goblins, are not inherently dangerous. While all magical beings, including goblins, can behave in ways that can potentially be harmful, it is not fair or accurate to call them dangerous as a generalization. It is better to focus on the behavior of individual goblins and take necessary precautions to ensure safety, rather than making broad, negative comparisons. “Lair” is offensive language for a goblin residence, and the term “serf” is ignorant of the material class dynamics of feudal Europe. Please report to H.R. immediately.”

“managing API costs”

Why in god’s name is he using a non-open-source LLM? Is he hoping that no game built using this strategy ever becomes popular?

Because a good locally run one can need up to 40 GB of RAM and takes a f-ton more effort than an API-based one, and any free public LLM gets too expensive for its operator to keep running past a certain level of popularity. LLMs are a rather costly lot no matter how one tries to use them. :/

For now, at most we will see these kinds of games that come with an API agent and its limitations, like a GPT-driven vampire game called “Suck Up”, which will essentially self-destruct once you hit the license limit after about 30-50 hours…

I tried the Llama models this week and they are pretty good and don’t need tons of RAM and CPU to run properly.

I spoke about machine learning with a colleague who is an expert in this discipline, and in her view the current developments are essentially a brute-force approach to handling particular cases.

In other words, models can be optimized further, for example by making them multi-tiered, so that the model used depends on the level of complexity required to process a prompt.

A good one might need a bit of RAM, but how much do you need for good enough? I think Microsoft’s Phi-2 only needs about 2 GB. It doesn’t have to write essays for you or do your math homework; it just has to sound human-ish.

Because it’s an *experiment* and this is cheaper than standing up the hardware for your own LLM.

He’s exploring potentials and problems with doing this.

In my experience, those who think LLMs are good for games are either people who don’t make games (or don’t know what’s behind the experience they have with a game) or junior devs who are just discovering it.

Games are like movie sets: everything in there is set up for the player to interact with in a way that moves the story forward. Endlessly talking with NPCs won’t improve the experience; at most, it will be a short-lived novelty.

Take any aspect of a game and procedurally generate its content, and then it will feel bland and uninteresting. You need tailored uniqueness, be it quests, items, characters or dialogs.

Quality in games doesn’t come from quantity, and that’s why making “open world” games is expensive: you need to populate them with interesting custom content (the cost isn’t in the procedural part).

On top of that, games always nudge you in the direction of the story. Level design is in large part about that. The same goes for dialogue: you don’t want to talk and talk to discover the few important bits a character needs to tell you. It’s better to have a few options that give you a bit of agency and quickly put you on the path of the story.

Reminded of Watch Dogs Legion.

It can work as the main mechanism, like that one idea where the player is a vampire trying to convince random people to let them into their house.

But for anything else where the main game-play mechanisms are more traditional ones like shooting enemies, fighting monsters or adventuring in a hand-crafted world? These kinds of things turn into VERY expensive “niceties” that really aren’t worth the cost in implementation.

There are a lot of people who use open world sandbox games like tourist destinations, where they ignore the main storyline and go out sightseeing and pretending they’re just like any NPC in the world. Heavy emphasis on “pretend”.

That’s because these games traditionally lack the element where the player’s character actually belongs in the setting. The player is always the quiet stranger that everyone else either attacks or ignores. The scripted storyline won’t let you break free of this: either you follow the railroad tracks and get the next line of dialogue, or you wander off and the world ignores you until you choose to go through the next checkpoint in the script. The sandbox games try to get around this by offering tons of mini-quests, but that’s still the same thing, scripted hoops to jump through. If you sit yourself down at the local tavern and start drinking ale, nothing happens. The NPCs just wander around as scripted, as if you weren’t even there.

The AI can plausibly provide a reaction and something other than scripted content to play with, because it can respond dynamically. The risk is that it responds in absurd ways and breaks the whole game.

Correction: not sandbox games (which lack objectives), but simply open world games that may or may not have sandbox elements.

Talking? Yes, not fun. But acting a bit more randomly (I’m not saying “intelligently”) instead of following scripted routines would have been a nice feature for NPCs, and LLMs are good for this kind of thing. Players do not need to see the internal monologues of the NPCs, after all.

And just like that, bots in games are considered “AI” again, like they were in the 90s and 00s.

Connecting to the cloud may make all the difference.

Or blockchain, my nephew is a computer whiz and he keeps talking about how great it is. Surely it will help if we just use that.