On modern desktop and laptop computers, there is rarely a need to think about memory. We all have many gigabytes of the stuff, and it’s just there. Our operating system does the heavy lifting of working out what goes where and what needs to be paged to disk, and we just get on with reading Hackaday, that noblest of computing pursuits. This was not always the case though, and for early PCs in particular the limitations of the 8086 processor demanded some significant gymnastics in search of an extra few kilobytes. [Julio Merion] has an interesting run-down of the DOS memory map, and of how memory expansion happened on computers physically unable to see much of it.

The 8086 has a 20-bit address bus, giving it access to a maximum of 1 megabyte. When IBM made the PC they needed space for the BIOS, the display, and the various accessory ROMs intended to come with expansion cards. Thus they allocated a maximum of 640k of the map for RAM, and many early machines shipped with much less than that. The quote from Bill Gates about 640k being enough for anyone is probably apocryphal, but it was pretty clear as the 1980s wore on that more would be needed. The post goes into how memory expansion worked, with a 64k page frame mapped to switchable RAM on a card, and touches on how DOS managed extended memory above 1 MB on the later processors that supported it. We dimly remember there also being a device driver that would map the unused graphics memory as EMS when the graphics card was running in text mode, but such horrors are best left behind.
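For anyone who never had to deal with it: the 1 MB ceiling falls straight out of real-mode addressing, where a 16-bit segment is shifted left four bits and added to a 16-bit offset, and the result only has 20 bits to live in. The sketch below reproduces that arithmetic (the function and constant names are illustrative) and shows that the 640k boundary is simply segment A000h.

```python
def physical_address(segment: int, offset: int) -> int:
    """Real-mode 8086 address: segment * 16 + offset, truncated to 20 bits."""
    # The 8086 has only 20 address lines, so anything past 0xFFFFF wraps to 0.
    return ((segment << 4) + offset) & 0xFFFFF

MAX_ADDRESSABLE = 1 << 20                        # 1,048,576 bytes = 1 MB
CONVENTIONAL_TOP = physical_address(0xA000, 0)   # 0xA0000, where RAM stops

print(hex(physical_address(0xB800, 0x0000)))  # 0xb8000, the CGA text buffer
print(CONVENTIONAL_TOP // 1024)               # 640
```

Note that two different segment:offset pairs can name the same byte (for example 0000:0400 and 0040:0000), which is part of why the scheme felt so slippery.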

Of course, some of the tricks to boost RAM were nothing but snake oil.

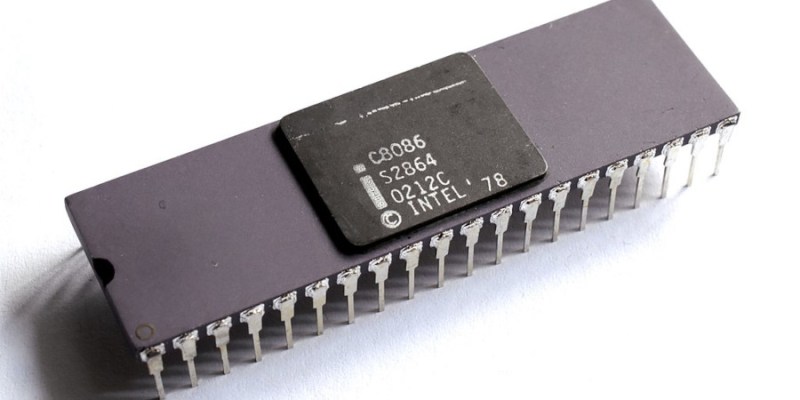

8086 header: Thomas Nguyen, CC BY-SA 4.0

Back in the day, yes … you spent a fair portion of your time optimizing your DOS computer (and especially RAM) in order to be able to do anything useful on it. But that was part of the fun. I remember reading lots of articles in BYTE magazine and talking with friends on the latest tricks to free up as much usable RAM as possible so we could perhaps get Lotus 1-2-3 actually running. :-)

Prefixing most commands in autoexec.bat (such as MOUSE and SMARTDRV) with LH (LOADHIGH) loaded them into upper memory, freeing more of the 640k space. This was useful for some DOS games that required a lot of memory; Ultima 7 and Serpent Isle were particularly memory-hungry.

Or just use Helix Multimedia Cloaking back then, like those guys in this story:

https://www.robohara.com/?p=8565

The bundle had special editions of SmartDrv, Mouse and MSCDEX that didn’t need conventional memory or UMBs. They simply ran past 1 MB.

Some software shipped with lite versions of cloaking software, too.

The Logitech mouse driver in particular was common, I believe.

Ohh, it’s so much worse. The Ultima 7 engine had some kind of memory manager of its own, so it required a completely different DOS startup config from every other game on the system, one that didn’t use the usual utilities. At one point I gave up on having a mouse driver while trying to get it to run and just raised the typematic rate so the arrow keys were usable.

Origin used its Voodoo memory manager, so it was incompatible with DOS memory managers like EMM386. I had two different autoexec.bat files back in the day and swapped them depending on whether I wanted to run Ultima or something else.

It was only ‘fun’ for me because I was still in university and had all the time to spend on such nonsense. In my first two jobs I worked with IBM RS/6000 AIX machines and Sun IPC workstations, and was able to avoid the PC crap. But in my third job it became unavoidable because of the price point of these PCs, and I spent quite a lot of my time cursing at these effing machines.

Still, luckily it was already the ‘386’ era by that time, and only access to expansion cards was worth cursing about. Mainly EGA and VGA cards with a 64KB window into 256KB of RAM, where you had to write to an I/O port to switch the window to another 64KB bank out of the 256KB available on the video card.
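The bank switching described above boils down to splitting a linear offset into a bank number and an offset inside the 64KB window. This is a toy sketch under simplifying assumptions: it treats the card's 256KB as one flat array, whereas real EGA/VGA memory is organized in planes and the banking registers differed from card to card, so `select_bank` is a hypothetical stand-in for the actual I/O port write.

```python
WINDOW_SIZE = 64 * 1024         # the 64 KB window the CPU can see
VIDEO_RAM_SIZE = 256 * 1024     # total RAM on the card (flat model, an assumption)

def locate(linear_offset: int) -> tuple[int, int]:
    """Split an offset into the card's RAM into (bank, offset within window)."""
    if not 0 <= linear_offset < VIDEO_RAM_SIZE:
        raise ValueError("offset outside video RAM")
    return divmod(linear_offset, WINDOW_SIZE)

class BankedCard:
    """Toy model: only one 64 KB bank is visible through the window at a time."""

    def __init__(self) -> None:
        self.ram = bytearray(VIDEO_RAM_SIZE)
        self.current_bank = 0
        self.bank_switches = 0

    def select_bank(self, bank: int) -> None:
        # Hypothetical stand-in for the I/O port write that moves the window.
        if bank != self.current_bank:
            self.current_bank = bank
            self.bank_switches += 1

    def write(self, linear_offset: int, value: int) -> None:
        bank, window_offset = locate(linear_offset)
        self.select_bank(bank)  # only touch the port when the bank changes
        self.ram[self.current_bank * WINDOW_SIZE + window_offset] = value

card = BankedCard()
card.write(70000, 7)   # lands in bank 1, offset 4464 within the window
```

Skipping the port write when the bank is already selected mirrors the usual trick of drawing bank by bank to keep switches to a minimum.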

I have no fond memories of that time, to be honest. Although I dealt with it and did it successfully.

Don’t forget the different configurations you could build yourself into “config.sys” (including an interactive menu) for different use cases:

e.g. with or without a special driver for seldom-used hardware,

or a bare-minimum configuration with as much free RAM as possible for some game or software.

The article covers much more than just EMS. It covers XMS, the High Memory Area, etc. too.

I remember the abbreviations for the two types of RAM were EXP and EXT, and nobody could tell me the difference, so I decided to call them EXPloded and EXTruded.

Even the Macintosh, prior to the 32-bit Mac II, was limited to 16MB because various areas such as ROM, I/O etc. were mapped to blocks of 4MB even though they used much less.

In fairness, the 68K only had a 24-bit address space, and no decent MMU. 16MB was all it could address, until the 68020 exposed the full address bus.

Apple, however, made an even bigger screwup because of this: the upper byte of the address still existed in the registers, it was simply ignored. So Apple used that byte for system information, believing that 16MB was so huge it would be a while before they’d ever have to solve that problem…
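To make the upper-byte problem concrete, here is a small sketch of what happens to a pointer with flags packed into its top byte, first on a CPU that drives only 24 address lines and then on one that uses all 32. The flag names and bit positions are hypothetical, chosen for illustration rather than taken from Apple's actual Memory Manager.

```python
ADDRESS_MASK_24 = 0x00FFFFFF

def bus_address_68000(pointer: int) -> int:
    """The 68000 keeps 32-bit registers but only drives 24 address lines."""
    return pointer & ADDRESS_MASK_24

def bus_address_32bit(pointer: int) -> int:
    """A CPU using the full 32-bit address bus sees every bit of the pointer."""
    return pointer & 0xFFFFFFFF

# Hypothetical flags stored in the otherwise unused top byte of a pointer.
LOCKED = 0x80
PURGEABLE = 0x40

def tag(pointer: int, flags: int) -> int:
    """Pack flags into bits 24-31, keeping the 24-bit address intact."""
    return (flags << 24) | (pointer & ADDRESS_MASK_24)

p = tag(0x012345, LOCKED | PURGEABLE)
print(hex(p))                     # 0xc0012345
print(hex(bus_address_68000(p)))  # 0x12345: flags ignored, the access works
print(hex(bus_address_32bit(p)))  # 0xc0012345: a completely different location
```

Code like this only keeps working in 32-bit mode if every pointer is masked before use, which is what "32-bit clean" software had to guarantee.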

I guess you now know they meant “EXPanded” and “EXTended” memory? EXPanded memory was the bank-switched mechanism in this article. EXTended memory was a limited way of using all of the 16MB of physical memory available to the 80286-based IBM AT.

Apple goofed on the original Mac mostly because ROM was decoded at 4MB. Just decoding it at 8MB would have doubled the memory available to the original 68000-based compact Mac format and a simple 3:8 decoder would have still provided an abundant address space for I/O. The Macintosh Portable and PowerBook 100, the last 68000 Macintoshes, had their ROMs at a higher bank of memory so that RAM could be expanded to 8MB.
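A 3:8 decoder on the top three lines of the 68000's 24-bit address bus (A23 to A21, an assumption about which lines would be used) splits the 16MB space into eight 2MB blocks. The sketch shows that putting ROM at 8MB would leave four blocks (8MB) for RAM and four for ROM and I/O; that memory map is hypothetical, not Apple's actual design.

```python
BLOCK_BITS = 21   # 2**21 bytes = 2 MB selected by each decoder output

def decoder_output(address: int) -> int:
    """Model a 3:8 decoder driven by A23..A21 of a 24-bit address."""
    return (address & 0xFFFFFF) >> BLOCK_BITS

# Hypothetical map: outputs 0-3 select RAM (8 MB), outputs 4-7 ROM and I/O.
for addr in (0x000000, 0x3FFFFF, 0x400000, 0x7FFFFF, 0x800000, 0xFFFFFF):
    region = "RAM" if decoder_output(addr) < 4 else "ROM/I/O"
    print(f"{addr:06X} -> output {decoder_output(addr)} ({region})")
```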

For, not Fire

Abbreviations for, not Attentions fire

The article is a good introduction for starters, I think.

It uses clear language and covers all the important aspects of DOS-era memory models, I think.

That being said, it’s worth mentioning that the bank-switching model existed before EMS.

In the late 70s, in the Z80 and CP/M days, MP/M supported it, too.

Likewise, the MSX platform used bank switching to overcome the 64KB limit (it had a Z80 at its heart).

Or the NES with its “mappers”.

EMS is also not necessarily slower than XMS, since EMS uses a mapping method (XMS uses a copy method, like a gripper that fetches small chunks of RAM from over a fence).

Once data is loaded onto the EMS board, it can be mapped in and out very quickly at any time.

That’s ideal for lots of small pieces of data that fit in those pages and can stay there, rather than having to be exchanged with conventional memory all the time.
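The difference between mapping and copying can be sketched with two toy classes. `EmsBoard` assumes the EMS 3.2 layout of four 16 KB physical pages in a 64 KB frame and only updates a mapping table, while `XmsStore` has to copy bytes into a conventional-memory buffer on every access. Both classes are illustrative, not the real EMS or XMS driver interfaces, and they count bytes moved rather than measuring speed.

```python
PAGE = 16 * 1024   # EMS 3.2 page size
FRAME_PAGES = 4    # four 16 KB physical pages make up the 64 KB page frame

class EmsBoard:
    """Toy EMS: mapping a page just updates a register, no data moves."""

    def __init__(self, logical_pages: int) -> None:
        self.storage = [bytearray(PAGE) for _ in range(logical_pages)]
        self.frame = [None] * FRAME_PAGES   # physical page -> logical page
        self.bytes_moved = 0                # stays 0: mapping never copies

    def map_page(self, physical: int, logical: int) -> bytearray:
        self.frame[physical] = logical      # cost independent of page size
        return self.storage[logical]        # the CPU now sees this page directly

class XmsStore:
    """Toy XMS: data must be copied into a conventional-memory buffer."""

    def __init__(self, size: int) -> None:
        self.extended = bytearray(size)
        self.bytes_moved = 0

    def fetch(self, src: int, length: int) -> bytearray:
        self.bytes_moved += length          # every access pays for a copy
        return self.extended[src:src + length]   # slicing a bytearray copies

ems = EmsBoard(logical_pages=64)            # 1 MB of expanded memory
window = ems.map_page(0, 10)
window[0] = 42                              # writes straight into logical page 10

xms = XmsStore(1 << 20)
buffer = xms.fetch(0, PAGE)                 # 16 KB copied just to look at it
```

Writing through `window` changes the board's storage in place, whereas changes to `buffer` would have to be copied back to extended memory, which is the "gripper over the fence" cost described above.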

However, using EMM386 to simulate EMS via the 386 MMU can noticeably slow down vintage PCs equipped with 386 CPUs and early 486 CPU revisions.

Some 286 chipsets (NEAT, etc.) supported EMS in hardware, too.

They had a special EMS “MMU”, combined with an EMS manager on the included utility diskette.

Interestingly, that 64KB figure was both the 8086’s segment size and the maximum address space of 8-bit-era CPUs like the Z80.

The 640KB “limit” was ten times this number, maybe for a specific reason.

EMS 3.2 had four 16 KB pages that fit into a 64 KB page frame, I vaguely remember. LIM 4 / EEMS allowed for bigger page frames and more pages (Windows 3 made use of it).

Making use of this feature required newer-generation EMS hardware, though.

That’s why several EMS boards shipped with an up-to-date LIM 4 driver but didn’t support these features.

Likewise, the “backfilling” feature was rarely used. It basically involved physically moving the RAM chips from the motherboard onto the EMS board,

which then took the role of motherboard RAM.

That way, parts of conventional memory could be swapped in and out, like a basic MMU.

DESQview supported this, I believe, to be able to run multiple large commercial DOS programs of the day.

VGA mode 13h (320×200, 256 colors) also fits in 64KB of RAM: its 64,000 bytes fit into a single x86 segment and are thus directly accessible.

The same goes for the little-used mode 11h (640×480 mono).
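The arithmetic behind both modes is easy to check: mode 13h stores one byte per pixel in a linear layout starting at A000:0000, and mode 11h stores one bit per pixel. A quick sketch (function and constant names are illustrative):

```python
SEGMENT_BYTES = 64 * 1024   # 65,536 bytes addressable through one segment

def mode13h_offset(x: int, y: int) -> int:
    """Byte offset of pixel (x, y) from A000:0000 in 320x200 mode 13h."""
    return y * 320 + x      # one byte per pixel, linear, no bank switching

MODE13H_BYTES = 320 * 200          # 64,000 bytes
MODE11H_BYTES = 640 * 480 // 8     # 1 bit per pixel: 38,400 bytes

print(MODE13H_BYTES, MODE11H_BYTES)   # 64000 38400
print(mode13h_offset(319, 199))       # 63999, the last pixel
```

Both totals stay under `SEGMENT_BYTES`, so a program can reach every pixel with a single segment register and a 16-bit offset.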

About protected mode: Himem.sys used protected mode plus an 80286 CPU reset in early versions,

but quickly switched to using LOADALL by the late 80s (that includes the Himem.sys versions that shipped with MS-DOS 5/6).

These later Himem.sys versions had two code paths, one for the 286 and one for the 386 and up, plus numerous methods to control the A20 gate (which can be forced manually via switches).

Thus, under control of Microsoft’s Himem.sys, the 286 never really leaves real mode.

Last but not least, there’s that Helix software I read about recently.

It uses so-called cloaking technology to install big DOS drivers in extended memory.

It does so by installing a manager program and leaving behind a small dummy driver for each driver in the first MB, which can be seen by DOS. The drivers must have been modified first for that, of course.

Cool, this was the reply I wanted to write, but this is better than mine would have been :-) .

“The 640KB “limit” was ten times of this number, maybe for a specific reason.”

Maybe because the original IBM PC had 5 expansion slots. So allocating a whole segment to each slot, plus 1 segment for the internal ROM, would make sense and would add up to 1024KB?
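The segment arithmetic behind that guess, as a quick sketch; the one-segment-per-slot assignment is the commenter's speculation, not a documented IBM rationale:

```python
SEGMENT = 64 * 1024
TOTAL = 1024 * 1024

segments = TOTAL // SEGMENT                # 16 segments: 0h .. Fh
ram_segments = 640 * 1024 // SEGMENT       # 10 segments: 0h .. 9h
reserved = segments - ram_segments         # 6 segments: Ah .. Fh
print(segments, ram_segments, reserved)    # 16 10 6

# The guess: 5 expansion slots + 1 system ROM = 6 reserved segments.
SLOTS, SYSTEM_ROM = 5, 1
print(reserved == SLOTS + SYSTEM_ROM)      # True
```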

“The quote from Bill Gates about 640k being enough”

No published source for it was ever found, and Bill Gates denied having said it every time he was asked, already 30 years ago.

There is a quote of him saying something like it. At the time, 32K was the max you could put in an IBM PC. The computer as a whole cost something like $3000, which is around $10K today. So yeah, 640K was enough for anyone in 1982.

Moore’s law was well understood at the time, so there’s no way he meant that it always would be enough.

Anyone remember Ralf Brown’s interrupt list? Those were the days.

It’s really simple in principle, it’s just the naming that makes it look complicated.

Base memory / DOS memory / conventional memory: memory within the first megabyte, mainly within the first 640KB.

Though in reality, some PCs in the 80s had shipped with as much as 704KB or 736KB of base memory.

It depended on which video adapter was installed, among other factors.

If a CGA card was installed, the A segment was free and 736KB of base memory was possible. With Hercules, 704KB was still possible.

By the 90s, with VGA being the norm, that was no longer the case, so that little detail was forgotten.
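Those figures follow from where each adapter's video buffer begins, assuming nothing else (such as an adapter ROM or another card) sits between the top of RAM and that buffer. A minimal sketch using the standard PC buffer addresses:

```python
KB = 1024

# Start of the first video buffer for each adapter (standard PC addresses).
FIRST_VIDEO_ADDRESS = {
    "EGA/VGA": 0xA0000,    # graphics modes begin at segment A000h
    "Hercules": 0xB0000,   # monochrome/Hercules buffer at B000h
    "CGA": 0xB8000,        # colour buffer at B800h
}

def max_base_memory_kb(adapter: str) -> int:
    """Contiguous RAM possible from address 0 up to the first video buffer."""
    return FIRST_VIDEO_ADDRESS[adapter] // KB

for name in FIRST_VIDEO_ADDRESS:
    print(f"{name}: {max_base_memory_kb(name)} KB")
```

With VGA the buffer starts at A000h, so base memory stops at the familiar 640KB; the extra 64KB and 96KB only existed on machines whose video adapter left that space alone.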

XMS: XMS is meant as a standardized way to access Extended-Memory (aka that memory above 1 MB).

It’s really a specification, and Himem.sys is its memory manager.

Without XMS, the memory above 1 MB must be accessed some other way (the IBM AT BIOS has routines, commonly known as “int 15h”, but they’re slow).

So strictly speaking, XMS is not same as Extended-Memory.

XMS is the software side, Extended-Memory the physical side.

An XMS memory manager could also use a swap file on a hard disk to provide XMS memory, or use EMS to simulate XMS memory.

However, this would be highly inefficient, because XMS copies small chunks of data around all the time.

It doesn’t feature mapping like EMS does.

So it’s better off limiting itself to just handling Extended-Memory.

EMS: Expanded-Memory historically is a kind of memory that sits on a memory board.

It’s foreign, separate memory that’s not part of the system RAM. It’s not even on the motherboard in a historical context.

To access it, EMS is required (IBM called it XMA, by the way).

Like XMS, it’s also a software specification (a concept).

An EMS manager (EMM386, QEMM, REMM.SYS etc.) usually takes care of making EMS available to DOS programs that ask for it.

Thus, EMS memory can be physically located *anywhere*; the DOS applications don’t really care.

The source can be Extended-Memory (EMM386 uses that) or an XMS memory manager (EMM286, a freeware utility, uses XMS/Himem.sys). Or a swap file: LIMulators were such programs that simulated EMS.

UMA/UMB/HMA/..: Things like UMA or HMA are just names for specific memory locations.

UMA is the memory between 640KB and 1MB. The range is also historically known as the “adapter segment”, because devices and ROM routines live up there.

Free, accessible memory spots in that range are known as UMBs.

Ideally, they’re arranged in a contiguous manner, like normal RAM. A UMB is a free spot of RAM where a DOS driver can be installed.

Then there’s the HMA. It’s a region just above 1MB, but still within reach of the real-mode address space (if the A20 gate is enabled, which is why Himem.sys is needed).
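The HMA exists because segment FFFFh plus a large offset overshoots 1 MB by almost 64KB. On an 8086 that overshoot wraps around to address 0; on a 286 or later, enabling the A20 line lets it reach real memory instead. A small sketch of that arithmetic (the function name is illustrative):

```python
def real_mode_address(segment: int, offset: int, a20_enabled: bool) -> int:
    """Real-mode address on a 286+, with the A20 gate either open or forced low."""
    linear = (segment << 4) + offset      # at most 0x10FFEF
    if not a20_enabled:
        linear &= ~(1 << 20)              # A20 held low: mimic 8086 wraparound
    return linear

HMA_START = real_mode_address(0xFFFF, 0x0010, a20_enabled=True)   # 0x100000
HMA_END = real_mode_address(0xFFFF, 0xFFFF, a20_enabled=True)     # 0x10FFEF
HMA_SIZE = HMA_END - HMA_START + 1

print(HMA_SIZE)                                                   # 65520
print(hex(real_mode_address(0xFFFF, 0x0010, a20_enabled=False)))  # 0x0
```

That is why the HMA is 16 bytes short of a full 64KB, and why it is useless unless something like Himem.sys opens the A20 gate first.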

DOS 5/6 can use it to move parts of itself into it. That way, more memory is available to DOS applications.

I hope that was useful as an overview. There might be small mistakes, I rushed things while typing on a smartphone.

Hm, why did my previous comment end up here? 🤷♂️

It was meant as a response to commenter Joel Finkle.

There wasn’t enough XMS available, so the HaD server software mapped it into EMS for you… :-)

Thanks, YMMD! 😁

wow!

If that is how much info you can quickly type out on a smartphone, Encyclopedia Britannica wants to meet you when you finally get to a proper keyboard!

I recall Lotus, Intel, and Microsoft issuing the L/I/M EMS spec to govern expanded memory. Applications like 1-2-3, AutoCad, and Flight Simulator benefited from getting beyond 640K.

https://en.wikipedia.org/wiki/Expanded_memory#Expanded_Memory_Specification_(EMS)

Back in the day (early 90’s) I co-wrote a DOS voice mail platform as a .com executable (it used Rhetorex ISA cards) that sat in 64k of RAM as a TSR (Terminate and Stay Resident) and used XMS to ‘map in’ the data for each phone line every (safe) timer tick. This worked pretty seamlessly on a 486DX33 for up to 16 phone lines and 3000 users! In fact, the last remaining system was only taken offline 2 years ago!

?apocryphal?

alt + 168 ¿

The glorious days of having to edit autoexec.bat and config.sys to fit each program you wanted to run… Or choosing whether to play a game with glorious VGA graphics or with sound, because you could not fit drivers for both in memory…

Back in the day, some users also made a boot diskette for playing games.

Nowadays, with the floppy drive being deprecated, the retro community seems to use DOS boot menus instead.

Personally, I was using a 16-Bit PC on my desk back then, so I didn’t tinker so much with UMBs and memory managers.

Instead, I was using replacements for the big drivers of the day (mouse, keyboard).

Omitting SmartDrive, Mode+keyboard.sys and ansi.sys helped, too.

So instead of using SmartDrive (HDD cache), a more modern hard disk with a bigger buffer was used (that worked as a sector cache). It was a hardware solution to a software issue, so to say.

Another trick of ancient times was to not load KEYB driver and use an English keyboard instead. Saved quite some memory.

Or, more realistically, use the old localized keyboard drivers of DOS 3.x.

Such as KEYBGR.COM, KEYBFR.COM, KEYBSP.COM, KEYBIT.COM.

They were much smaller than the sophisticated and universal KEYB that shipped with MS-DOS 5.0 and 6.x series.

This was before free replacement drivers like KEYB2 (German) were known to some of us.

We simply used what we had at hand. ^^

Ah, yes. I remember the days of boot menus and having customized disks. Fun Times.

The last time I had to do such shenanigans was as recently as 2006, when I was on the support team of [RedactedCo]’s IT department. I made a boot disk for imaging systems that prompted the tech for the site they were at and the system they had, then loaded all the appropriate network drivers and pulled the appropriate image from the site’s local server.

Thankfully, I’m no longer in the support team and [RedactedCo] uses PXE or a bootable USB to pull down the appropriate image from SCCM, which is managed by someone else.

Back in the early 1980s, my dad bought Kaypro’s experiment with MSDOS, the Kaypro PC/XT. Normally known for CP/M machines, both desktops and luggables, Kaypro distinguished its PC/XT with a novel amount of RAM: 768k, 128k more than the standard 640k. One could only run this extra 128k RAM as a RAMDisk, but that was extremely useful.

In this era of home computers mostly running off floppy disks, instead of having to frequently swap the OS floppy out in Drive A: with application disks (Drive B: being the save disk), one could put a batch file on the OS disk that copied all of MSDOS’ OS files into RAM, freeing up both floppy disk drives and running the OS significantly faster.

Eventually Dad got a simply massive 50MB hard drive and floppy swapping was less important. So us kids would run regular programs (games, of course) in the RAMDisk that we wanted to run faster than the floppy disk or hard drive speeds.

I still remember the Kaypro keyboard (on the CP/M variants). Nothing since has been as definite in terms of “now you pressed it” and “now you released it”. And the noise it made.

I can run 4 GB of RAM on my Atari 800XL using an AY8910 and some array logic and latches.

Finding 4 GB of SRAM is the hard part,

though modern NAND and NOR flash have fast enough access times to be used as RAM,

as long as read and write times are under 100ns.

One of the most interesting things about all this was how you managed your entry into and exit from protected mode. This was especially a problem for DOS-extended programs that needed not just high memory but access above 1MB.

There were 3 things (I was involved in this quite a bit back then).

1) There was an undocumented “feature” that if you set the base/length of a segment in protected mode and then dropped back to real mode, the base/length “stuck”. So you could switch briefly, do some setup and get access to 4G flat. This has been retroactively named Unreal mode (https://www.os2museum.com/wp/a-brief-history-of-unreal-mode/). I was one of two people who popularized this (there were three of us around 1989-1990 who either knew about this somehow or had figured it out from the warning in the manual not to do this). You still had to switch in and out (see below).

2) The lazy way to switch in and out was to set a bit in the CMOS RAM and force a reboot. The BIOS would switch to real or protected mode based on that bit and (importantly) turn A20 on or off (A20 was gated in the original PC). This was easy because real mode was totally real mode and protected mode was pure. But it was relatively slow.

3) As far as I know, PROT (my DOS extender in Dr. Dobb’s and later in two of my books) was the first DOS Extender to use V86 mode so that DOS and BIOS calls could execute as “proper” protected mode tasks. That meant you didn’t reboot the computer to go to real mode and freeze everything else. It worked well and spawned at least a few commercial projects (not mine). I actually got the Mix C compiler to work with PROT but Jim Cinnamon (MIX) and I never came to any deal on distributing it.

Fun times. https://en.wikipedia.org/wiki/DOS_extender

I used this capability to test all of the memory on PCs. That stuff was still being used a few years ago, in the age of i9’s and gigabytes of memory! (Only for those who didn’t want to upgrade to a modern environment.)

Thanks for your contribution to the state of the art. While I didn’t use it directly, the knowledge that it could be done was a great help in trusting what I was doing back in the day.

His comment about ignoring the 8088 means he’s ignoring the IBM PC, XT, and the myriad of clones that followed. I can’t think of a PC clone that actually used an 8086 with its 16-bit data bus (rather than the 8-bit bus of the 8088).

The origin of the 640K memory limit is pretty simple. The previous generation of PCs had only 64K (or with some tricks 128K). IBM decided to go 10x more. Thus 640K.

After LIM/EMS memory came XMS memory. Using a (80286 and later) protected mode trick an additional 64K could be made available just above the 1M line.

Then came the first 32-bit CPUs (80386 and later). Folks like Quarterdeck found you could use its VM features to run DOS in a pseudo protected mode and give it a LOT more memory. 386s could be equipped with 4MB of RAM. The excess above 640K could be used as super fast LIM/EMS memory, or protected mode memory. A new specification, VCPI, was developed for the management of 386 memory.

With a 386, lots of extra RAM, and VCPI one could run “DOS extender” applications. These were specially compiled programs that would run in true 32-bit mode, with no 640K limit. Borland’s Paradox 386 ran on DOS-extender technology.

At that time my employer had a lot of complex engineering design programs written in Fortran. I set up a pilot test to recompile a few of them using a DOS-extender compiler. It worked extremely well! We found a well equipped $5000 386 PC could run the engineering programs as fast as most mini-computers (DEC) and mainframes (IBM) of the era. Only DEC and IBM’s biggest systems were faster.

The test PC was as fast as big-iron systems costing 100x more!

Just a few years later, firms like Amazon and Google discovered the cost and scalability issues of big iron and realized that lots of low-cost Intel motherboards could do the same job. They developed technology to run hundreds, thousands, and more Intel systems together. This was a watershed change in the design of large-scale applications, big data analytics, cloud computing, etc.