The recently published book Understanding Deep Learning by [Simon J. D. Prince] is notable not only for focusing primarily on the concepts behind Deep Learning — which should make it highly accessible to most — but also in that it can be either purchased as a hardcover from MIT Press or downloaded for free from the Understanding Deep Learning website. If you intend to use it for coursework, a separate instructor answer booklet and other resources can be purchased, but student resources like Python notebooks are also freely available. In the book’s preface, the author invites readers to send feedback whenever they find an issue.

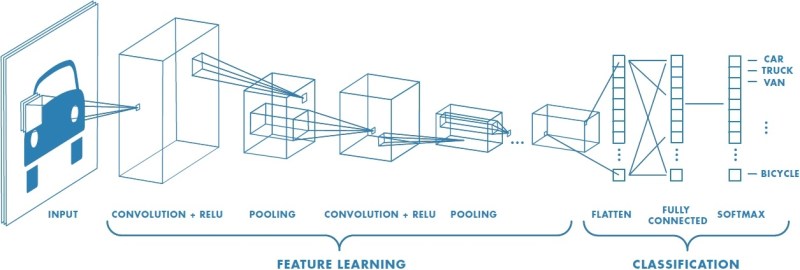

The preface also explains the joke in the title: nobody really ‘understands’ deep learning algorithms, even though they are beginning to underlie more and more of modern society, from convolutional neural networks (CNNs) in machine vision to the transformers used in large language models, which are often ascribed near-magical properties that they most definitely do not possess. We’ve likened it to a bad summer intern, although they are getting better almost daily.

The book and materials look like a solid introduction to learning about the ideas behind these deep learning algorithms for anyone even mildly curious about the topic, and you can’t complain about the price.

Deep look at deep learning. It’s “deep” all the way down.

I’m gonna pass.

I read “Thinking Fast, Thinking Slow” and thought the book was a con job.

“You can’t complain about the price”

$90 for the hardback version.

Still, free beer is better than no beer.

Deep thoughts by Jack Handy. Why can’t I feed the dolphins at SeaWorld fried chicken?

You want to feed the dolphins fried chicken? ….. err …. I don’t think that’s in their diet :(

fried Chicken of the Sea

So much of the material on deep learning is focused on visual or image-based models, and I’m interested in large language models and convolutional neural networks as they relate to RF signals, like Sig53. Every time I open up a book on deep machine learning, it’s seemingly focused on facial recognition or autonomous driving, and I’m not at all interested in using it for those purposes.

@John McBrayer said: “So much of the material on deep learning is focused on visual or image-based models, and I’m interested in large language models and convolutional neural networks as they relate to RF signals, like Sig53. Every time I open up a book on deep machine learning, it’s seemingly focused on facial recognition or autonomous driving, and I’m not at all interested in using it for those purposes.”

Deep learning models operate on datasets. It does not matter where the dataset comes from, whether a picture of a person’s face or samples of signals in quadrature (IQ). As long as the dataset is presented to the deep learning model in a form the model can operate on, you should be able to get results (guesses).
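To make that concrete, here is a rough sketch of what “presenting” IQ samples to a model could look like: each complex capture becomes a two-channel array (I and Q), much like an image becomes a three-channel RGB array. The function names `iq_to_channels` and `make_batch`, the unit-power normalization, and the toy noisy tone are all assumptions for illustration. None of this is taken from the book, from TorchSig, or from the Sig53 tooling.

```python
import numpy as np

def iq_to_channels(iq):
    """Turn a 1-D complex IQ capture into a (2, N) float32 array: row 0 is I, row 1 is Q."""
    iq = np.asarray(iq, dtype=np.complex64)
    # Scale to unit average power so captures taken at different gains look alike.
    # (This normalization is an illustrative choice, not a requirement.)
    power = np.mean(np.abs(iq) ** 2)
    if power > 0:
        iq = iq / np.sqrt(power)
    return np.stack([iq.real, iq.imag]).astype(np.float32)

def make_batch(captures):
    """Stack equal-length captures into a (batch, 2, N) array, the layout 1-D conv nets usually expect."""
    return np.stack([iq_to_channels(c) for c in captures])

# Toy data: a complex tone plus noise, and the same capture at three times the gain.
rng = np.random.default_rng(0)
n = 1024
t = np.arange(n)
tone = np.exp(2j * np.pi * 0.05 * t)
noise = 0.1 * (rng.normal(size=n) + 1j * rng.normal(size=n))
batch = make_batch([tone + noise, 3.0 * (tone + noise)])

print(batch.shape, batch.dtype)  # (2, 2, 1024) float32
```

From here on, the array is just numbers as far as the network is concerned. The same kind of 1-D convolutional or Transformer model could be trained on it as on any other two-channel sequence.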

Maybe provide a link to the Sig53 Dataset and ask the online ML Community (on Reddit maybe?) how to analyze the Sig53 Dataset and provide guesses as to what each example is? After all, you already know the correct answers, right?

More on the Sig53 Dataset:

Large Scale Radio Frequency Signal Classification

Luke Boegner, Manbir Gulati, Garrett Vanhoy, Phillip Vallance, Bradley Comar, Silvija Kokalj-Filipovic, Craig Lennon, Robert D. Miller

Existing datasets used to train deep learning models for narrowband radio frequency (RF) signal classification lack enough diversity in signal types and channel impairments to sufficiently assess model performance in the real world. We introduce the Sig53 dataset consisting of 5 million synthetically-generated (by TorchSig?) samples from 53 different signal classes and expertly chosen impairments. We also introduce TorchSig, a signals processing machine learning toolkit that can be used to generate this dataset. TorchSig incorporates data handling principles that are common to the vision domain, and it is meant to serve as an open-source foundation for future signals machine learning research. Initial experiments using the Sig53 dataset are conducted using state of the art (SoTA) convolutional neural networks (ConvNets) and Transformers. These experiments reveal Transformers outperform ConvNets without the need for additional regularization or a ConvNet teacher, which is contrary to results from the vision domain. Additional experiments demonstrate that TorchSig’s domain-specific data augmentations facilitate model training, which ultimately benefits model performance. Finally, TorchSig supports on-the-fly synthetic data creation at training time, thus enabling massive scale training sessions with virtually unlimited datasets.

https://pubs.gnuradio.org/index.php/grcon/article/view/132

https://paperswithcode.com/dataset/sig53

https://paperswithcode.com/paper/large-scale-radio-frequency-signal

https://arxiv.org/abs/2207.09918

https://deepai.org/publication/large-scale-radio-frequency-signal-classification

Thanks for the tip. Free books (especially science and engineering related) are always welcome news! :)