Back when I used to work in the pharma industry, I had the opportunity to hire summer interns. This was a long time ago, long enough that the fresh-faced college students who applied for the gig are probably now creeping up to retirement age. The idea, as I understood it, was to get someone to help me with my project, which at the time was standing up a distributed data capture system with a large number of nodes all running custom software that I wrote, reporting back to a central server running more of my code. It was more work than I could manage on my own, so management thought they’d take mercy on me and get me some help.

The experience didn’t turn out quite like I expected. The interns were both great kids, very smart, and I learned a lot from them. But two months is a very tight timeframe, and getting them up to speed took up most of that time. Add in the fact that they were expected to do a presentation on their specific project at the end of the summer, and the whole thing ended up being a lot more work for me than if I had just done the whole project myself.

My brief experience with interns came to mind recently, when I needed a little help with a project. It’s nothing that would justify hiring anyone, but still, having someone to outsource specific jobs to would be a blessing, especially now that it’s summer and there’s so much else to do. But this is the future, and the expertise and the combined wisdom of the Internet are but a few keystrokes away, right? Well, maybe, but as you’ll see, even the power of large language models has its limits, and trying to loop ChatGPT in as a low-effort summer intern leaves a lot to be desired.

Lock Up Those Chickens

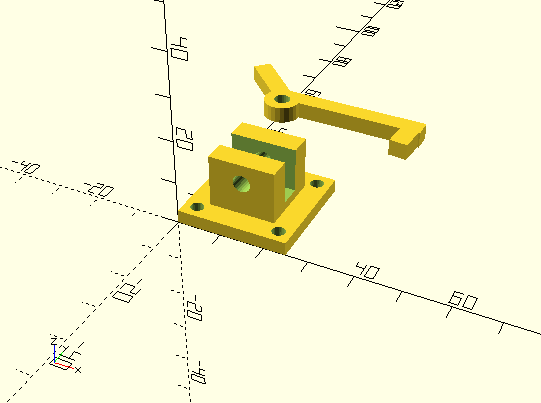

The specific task I needed help with was simple: a latch on the automatic door of my chicken coop. The details of why I need this are better left unsaid, but I will say that the latch is my attempt to deal with the cruel equation “11 Chickens + 1 Raccoon = 8 Chickens.” The first part of the latch that I had in mind was a simple bracket: something with two arms rising from a base that could be attached to the coop door (a sheet of 2-mm aluminum). This bracket will support a spring-loaded arm to engage a crossbar in the frame of the coop door, and hopefully prevent anything with clever little claws and a taste for take-out chicken from working under the lower edge of the sliding door and getting into the coop.

From my experience with Fusion 360, I knew it would be pretty easy to whip up a couple of sketches, extrude the arms up from the base, drill some holes, and tidy things up with the proper chamfers and fillets. The trouble with that is that I moved to Linux quite a few years ago, so Fusion 360 isn’t really a viable option. Yes, I know there are workarounds using Wine or VMs, but I’m also not interested in playing in Autodesk’s cloud. Of course, there’s FreeCAD, too; in fact, just prior to this project I had started fiddling with it, but I’m still in the early part of the learning curve and not terribly productive with it yet.

That final point gets to the crux of the matter, though. When I was learning Fusion 360, I quickly found that perfecting the 3D model mattered more to me than the thing I was designing. I would spend hours getting everything just right, only to find out that something didn’t quite line up, or I had some constraint wrong, and I’d have to start all over again. I fully expect my mind to treat FreeCAD the exact same way, not as a means to an end but as the end itself. That’s a slippery slope, especially when there’s so much else I have to do.

The Mysteries of OpenSCAD

This is when I had the idea to outsource my design to someone, or something, else. Credit where it’s due: I got the idea to enlist ChatGPT after seeing [ROFLhoff]’s Hackaday.io project about repairing a broken Toyota speedometer. The project needed a knob for spinning the speed sensor that drives the speedo during bench testing. With just a brief description of the part, [ROFLhoff] was able to prompt ChatGPT to generate OpenSCAD code for a model of the knob.

I thought that was pretty slick and decided to do the same. The bracket seemed like the best place to start, so I gave ChatGPT the following prompt:

I know I wasn’t terribly specific — I didn’t say how far in from the corners the mounting holes should be, for instance — but I figured that was something I could tweak later. And besides, when you hand a job over to a subordinate, you should be able to just vaguely describe what you want, and let them run with it. Right?

Maybe not, because here’s what I got back from ChatGPT:

    // Bracket dimensions
    base_size = 1; // One inch square base
    hole_radius = 1/16; // 1/8 inch hole radius
    arm_width = 1/4; // 1/4 inch arm width
    arm_height = 1/2; // 1/2 inch arm height
    arm_gap = 1/4; // 1/4 inch gap between arms

    // Base
    base = cube([base_size, base_size, 1]); // 1 unit height

    // Holes
    hole_offset = hole_radius + 1/16; // Offset from base edges
    hole = cylinder(h = 1, r = hole_radius);

    // Calculate arm positions
    arm_x = (base_size - arm_width) / 2;
    arm_y = (base_size - arm_height) / 2;

    // Arms
    arm1 = cube([arm_width, arm_height, 1]); // 1 unit height
    arm2 = translate([arm_width + arm_gap, 0, 0]) cube([arm_width, arm_height, 1]);

    // Combine all parts
    bracket = base - translate([hole_offset, hole_offset, 0]) hole +
        translate([hole_offset, base_size - hole_offset, 0]) hole +
        translate([base_size - hole_offset, hole_offset, 0]) hole +
        translate([base_size - hole_offset, base_size - hole_offset, 0]) hole +
        translate([arm_x, arm_y, 1]) arm1 +
        translate([arm_x, arm_y, 1]) arm2;

    // Render the bracket
    render(bracket);

Now, it’s important to note that at the time, I had even less experience with OpenSCAD than I did with FreeCAD. And by less, I mean zero — I’d never used OpenSCAD before. So the code ChatGPT created didn’t mean all that much to me. But it sure looked reasonable, especially to someone who used to code for a living — I could see all the parameters up at the top like constants, the calculations used to figure out where to put the arms, and the creation of the cylindrical holes. But I had no idea what translate was, and I couldn’t really see how the holes would be punched through the base.

Strictly speaking, though, that wasn’t my problem. In the ideal world, I would just copy the AI-generated code into OpenSCAD and get a 3D model ripe for tweaking, right? Perhaps, but in the reality-based world, I had no such luck. The code ChatGPT generated didn’t work in OpenSCAD — it just threw a syntax error on line 21. I told ChatGPT about the error and asked to have it fixed, but the code that came back was essentially the same and threw the same error. Disappointing.
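If translate and difference() are unfamiliar, one way to picture them is to treat every solid as a yes/no test: “is this point inside the shape?” The Python below is a toy model built on that assumption. It is not how OpenSCAD works internally (OpenSCAD builds meshes), and the function names only mirror OpenSCAD’s modules for readability; the plate size and 3 mm hole are borrowed from the bracket dimensions used later in this post.

```python
"""Toy model of OpenSCAD-style constructive solid geometry (CSG).

Each solid is a predicate: a function that says whether a point (x, y, z)
lies inside it. This only illustrates what translate(), union() and
difference() mean; it is not how OpenSCAD computes geometry.
"""
from typing import Callable, Tuple

Point = Tuple[float, float, float]
Solid = Callable[[Point], bool]


def cube(size: Tuple[float, float, float]) -> Solid:
    # Like OpenSCAD's cube(): one corner at the origin, extending along +x, +y, +z.
    sx, sy, sz = size
    return lambda p: 0 <= p[0] <= sx and 0 <= p[1] <= sy and 0 <= p[2] <= sz


def cylinder(h: float, d: float) -> Solid:
    # Like OpenSCAD's cylinder(): axis along +z, base circle centred on the origin.
    r = d / 2
    return lambda p: p[0] ** 2 + p[1] ** 2 <= r ** 2 and 0 <= p[2] <= h


def translate(offset: Tuple[float, float, float], solid: Solid) -> Solid:
    # Moving a solid by +offset is the same as testing the point shifted by -offset.
    dx, dy, dz = offset
    return lambda p: solid((p[0] - dx, p[1] - dy, p[2] - dz))


def union(*solids: Solid) -> Solid:
    # Inside the union if inside any child.
    return lambda p: any(s(p) for s in solids)


def difference(first: Solid, *cutters: Solid) -> Solid:
    # Inside the first child and outside every later child, as in difference().
    return lambda p: first(p) and not any(c(p) for c in cutters)


# A 25 x 25 x 3 plate with one 3 mm hole near a corner. The cutter runs from
# z = -1 to z = 4, overshooting both faces of the plate so it cuts cleanly.
plate = difference(
    cube((25, 25, 3)),
    translate((3, 3, -1), cylinder(h=5, d=3)),
)

print(plate((3, 3, 1.5)))    # a point in the hole: False
print(plate((12, 12, 1.5)))  # a point in the solid plate: True
```

The key idea is that a hole is never “drawn”; it is the region that difference() removes from the first child, and translate only decides where that removed region sits.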

If You Want Something Done Right…

At this point, I figured I had two choices: either keep fiddling with the ChatGPT prompt, or learn enough OpenSCAD to figure out what ChatGPT was doing wrong. All things considered, the latter seemed like the smarter route, so that’s the way I went. It quickly became apparent that ChatGPT didn’t have the slightest idea how to program OpenSCAD. For example, the AI-generated code contains no difference() commands, which are what OpenSCAD uses to cut the holes, and it tries to store shapes in variables and add or subtract them with + and -, which OpenSCAD doesn’t support. This led me down the very rabbit hole I was trying to avoid, and I spent a few hours learning enough OpenSCAD to do the job myself:

    // dimensions in mm [width, depth, height]
    base_size = 25;
    base_thickness = 3;
    upper_size = base_size - 10;
    upper_height = upper_size;
    base = [base_size, base_size, base_thickness];
    upper = [upper_size, upper_size, upper_height];
    slot_width = upper_size / 3;
    slot = [upper_size + 2, slot_width, upper_height];
    hole_dm = 3;
    hole_margin = 1.5;
    axle_dm = 4;

    module bracket() {
        // base plate with a mounting hole near each corner
        difference() {
            cube(base);
            abs_margin = hole_margin + hole_dm / 2;
            x_hole_dist = base.x - 2 * abs_margin;
            y_hole_dist = base.y - 2 * abs_margin;
            x_values = [abs_margin : x_hole_dist : base.x - abs_margin];
            y_values = [abs_margin : y_hole_dist : base.y - abs_margin];
            // holes
            $fn = 25;
            for (x = x_values, y = y_values)
                translate([x, y, -1])
                    cylinder(d = hole_dm, h = base.z + 2);
        }
        // upright block centred on the base; the slot splits it into two arms,
        // and the cross hole through both arms takes the latch axle
        translate([(base_size - upper_size) / 2, (base_size - upper_size) / 2, 0])
            difference() {
                cube(upper);
                translate([-1, upper_size / 3, upper_size / 3]) cube(slot);
                translate([(upper_size / 2), -1, (base_size / 2) - 2]) {
                    $fn = 25;
                    rotate([-90, 0, 0]) cylinder(d = axle_dm, h = upper_size + 2);
                }
            }
    }

    module latch() {
        $fn = 25;
        difference() {
            union() {
                cube([35.5, (axle_dm + 5) / 2, slot_width - 1]); // main arm
                translate([35.5, (axle_dm + 5) / 2 - 0.8, 0]) {
                    rotate([0, 0, -185]) cube([(axle_dm + 5) / 2, 10, slot_width - 1]);
                }
                translate([0, (axle_dm + 5) / 2, 0]) { // lever
                    rotate([0, 0, 135]) cube([15, (axle_dm + 5) / 2, slot_width - 1]);
                }
                cylinder(d = axle_dm + 5, h = slot_width - 1); // pivot body
            }
            cylinder(d = axle_dm, h = slot_width + 1); // pivot hole
        }
    }

    bracket();
    translate([0, 50, 0])
        latch();
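The mounting-hole placement in bracket() is the least obvious part of that listing. As a cross-check, the Python sketch below redoes the same arithmetic outside OpenSCAD, assuming the default values from the listing (25 mm base, 3 mm holes, 1.5 mm margin). scad_range and hole_centres are hypothetical helpers written for this illustration; scad_range only approximates OpenSCAD’s [start : step : end] range for a positive step.

```python
# Re-derives the mounting-hole centres computed in the bracket() module.
from typing import List, Tuple


def scad_range(start: float, step: float, end: float) -> List[float]:
    # Rough stand-in for OpenSCAD's [start : step : end] with a positive step:
    # start, start + step, ... for as long as the value does not pass end.
    if step <= 0:
        raise ValueError("this sketch only handles positive steps")
    values = []
    v = start
    while v <= end + 1e-9:  # small tolerance for floating-point error
        values.append(v)
        v += step
    return values


def hole_centres(base_size: float = 25, hole_dm: float = 3,
                 hole_margin: float = 1.5) -> List[Tuple[float, float]]:
    abs_margin = hole_margin + hole_dm / 2   # plate edge to hole centre
    hole_dist = base_size - 2 * abs_margin   # centre-to-centre spacing
    # step == end - start, so each range yields exactly two values
    xs = scad_range(abs_margin, hole_dist, base_size - abs_margin)
    ys = scad_range(abs_margin, hole_dist, base_size - abs_margin)
    # for (x = x_values, y = y_values) visits every (x, y) combination
    return [(x, y) for x in xs for y in ys]


print(hole_centres())  # [(3.0, 3.0), (3.0, 22.0), (22.0, 3.0), (22.0, 22.0)]
```

With those numbers each hole centre sits 3 mm in from two edges, so the rim of each 3 mm hole lands exactly hole_margin (1.5 mm) from the edge of the plate.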

Was It Worth It?

Granted, my OpenSCAD code has both the bracket and the latch, but even accounting for the added complexity, I feel like ChatGPT’s first pass at doing what I wanted was half-assed at best. This is about the point where I realized that ChatGPT was behaving like an earnest and eager-to-please but somewhat clueless summer intern. Given the same instructions, such an intern might go off and spend a few hours Googling how to do the job, maybe hitting StackExchange or r/openscad for some help, and coming up with some code that they figured would do the job. That they didn’t even bother to see if OpenSCAD would interpret the code would be grounds for a Stern Talking-To™ with a meatspace intern; but with a virtual intern, what recourse did I have?

Honestly, although I didn’t get the magic help from ChatGPT that I was hoping for, this experiment in AI-assisted design wasn’t a complete loss. The original code may have been crap, but it at least gave me the impression that OpenSCAD programming wasn’t entirely foreign territory for me; flawed though it was, I could see that it’s just a combination of coding and 3D modeling, and intuitive enough to take a stab at. So now I know just enough OpenSCAD to be dangerous, all thanks to ChatGPT — the worst summer intern ever.

And as for the coop door latch, that project went in an entirely different direction once I realized that I could make the bracket and the latch from bent sheet aluminum — which forced me to learn not only FreeCAD but the Sheet Metal workbench as well. So ChatGPT actually forced me down two rabbit holes I’d hoped to avoid.

Simple: ChatGPT has sided with the raccoons.

Ha ha! That, or ChatGPT has been educated on samples of interns’ code, which is probably actually true.

Are you surprised? With ChatGPT’s artificial intelligence and the raccoons’ dexterous little hands, they could be running the planet by the end of the year.

Just as long as OpenAI keeps the chicken coming.

Nasty little creatures, those raccoons. I actually caught him leaving the scene of the crime, about 4:00AM, skulking up a tree trying to look cute and harmless.

makes perfect sense. openai is a front for Big Raccoon.

It’s about as shocking as a sailor with syphilis.

I’ve already used OpenSCAD for a lot of projects, and this is actually the first thing I tried when ChatGPT came along. It probably did not get enough training data. I guess in comparison to C, C++, Python, etc., OpenSCAD is just barely used. (Also, there are no explanations of the code like there are on Stack Exchange.)

I also tried circuit design with Verilog, which works so-so. If it’s not too complicated, like a counter or a shift register, it is usable after some small modifications.

Don’t even bother with Verilog-A. ChatGPT doesn’t know the difference between Verilog and Verilog-A ^^.

I found it had decent luck with VHDL. I was able to get it to generate a UART interface in VHDL. Granted, I kept having to add more requirements, and I think it had ~3 logical errors, but I would give it a summer intern ranking. I figured VHDL would be easier than Verilog since the language is so absolute in what you are typing.

Timing is where it really screws up. I asked it for a PUF and it immediately tried to clock it defeating the entire purpose.

I’ve had success using ChatGPT to write both three.js and OpenSCAD code. In my experience, one-shot attempts were unsuccessful but few-shots that incrementally introduced components of the design worked well.

I’ve had the same experience, except with three.js and cadquery instead of OpenSCAD. Providing starter code, being overly specific, and incrementally introducing components as you said works like a dream. It probably helps that I’ve been using GPT-4 instead of GPT-3.5.

Can confirm, found success with three.js and cadquery instead of OpenSCAD. I’ve found it helps to start with a code sample, be overly specific, and be incremental with components like you mentioned. For cadquery, I made a random “topography” generator that was pretty fun.

I agree ChatGPT is much like a student… and maybe I’m being unfair… but I don’t think it’s the worst. It’s not as good as the ones who have already survived a “weed out” filter, but it seems like middle of the pack for people going into the first weed-out course? Maybe better, because it doesn’t suffer from fear / insecurity / paralysis?

This is a great description of all my experiences with AI content. Mediocre student, with average ability and no fear / insecurity / paralysis. Perhaps even unfiltered mediocre logorrhea.

Turning my thoughts into purely written form is so hard! ChatGPT for OpenSCAD will be better when I can upload a whiteboard sketch and tell it to make it 3-dimensional

You want every sketch line copied and displaced along a 45 degree axis, to create a 3D effect? I do that with shadows on MS Word text boxes.

No, I want a 3D object that can be exported :P Preferably in OpenSCAD or STEP format so it can be tweaked.

https://github.com/MarkMoHR/Awesome-Sketch-Based-Applications#5-sketch-based-3d-shape-modeling

Take your pick!

Sweet list, thanks

Excellent link, thank you.

This article was fun to read as I could see myself doing the exact same. Thank you for writing!

You’re welcome!

I am glad that I sparked the idea about using ChatGPT. Sorry to hear the initial results weren’t that great, but I’m happy that it made you learn more about OpenSCAD!

It does need more training with the less-used languages, but I can’t blame OpenAI for not focusing on a language that gets at least a couple of orders of magnitude less use than C/C++/Python.

I see it struggling with structure. For example, with Arduino-style code it puts no code in the setup() function and instead crams everything into the loop() function, with the actual code inside yet another for(;;) loop. The OpenSCAD code it made also had trouble ordering the shapes within the difference() blocks. Even when I tried walking back my inputs, it couldn’t correct it.

Did you search HaD? I recall seeing several poultry premises permissions featured right here. One did this very thing with a weighted lever-cam on the rope lifting point. Scrap wood, pencil, and a saw. Done.

Man, the tool maker-user is doomed if we all have to go down this rabbit hole.

As I said, the door needed to be put on the coop quickly — the remaining chickens were at risk if the raccoon decided to come back that night. So dropping $150 at the farm supply store was my best move, even if it galled me. The door just needed a latch, and I already had a pretty good idea of what I wanted that to look like. So I never had a need for design ideas, just help getting my vision for the part into a concrete form.

I had a very similar experience, having never used OpenSCAD and wanting to make something that I couldn’t quite visualize how to make out of primitives in Fusion360 (basically I wanted a chewed up bubblegum shape). Similar to your experience, the resulting code wouldn’t run without an error, so my first step was just deleting the offending line. Then it would run but it looked absolutely nothing like what I requested. It was literally a cylinder with a cone sticking through it at a right angle. I tried tuning the prompt but it didn’t help. I started messing around with the OpenSCAD code and made a little progress in figuring things out, but ultimately gave up.

If I am understanding correctly, a “chewed up bubblegum shape” is pretty much OpenSCAD’s biggest weakness. It is really hard to use it to make compound curves, or surface transforms along a plane.

I love it for mechanical things, boxes with holes in them and cones coming out of the side. I don’t even find it hard to do fillets and all the mechanical strengtheners or lighteners that people whine about. But for art it’s almost completely useless.

There are bezier and other “organic” curve libs out there, though.

I’ve used libs that create arbitrary airfoils, for instance.

It’s an open secret that ChatGPT, like most LLMs, deals badly with orientation and spatial projection. That’s most certainly a downside of not having a body! Or, at the minimum, of not having any training data from a physical environment.

I’m just wondering how long it will take for such tools to develop this “space representation” ability. When that day comes, we might love OpenSCAD more than any other tool that can generate volumes.

I wonder if this is a symptom of the fact that most of the posted code used to train ChatGPT would be incorrect, i.e. people asking “why doesn’t this code work?” , so it in turn returns code that doesn’t work?

Ha, that’s brilliant. You’re probably right.

It will get better, though, and people who don’t realize this are being foolish. We’ve been seeing increasingly nervous, sweaty articles proclaiming “AI is overhyped guys, don’t worry, certainly don’t do a tech general strike or anything…” for decades. In those decades, AI has gone from being able to do almost nothing except flail and squirm around a bit, to being able to do a lot of things so well that 99% of people couldn’t tell the difference between it and human work, yet there are still a couple of things it can’t do well.

That will change quicker than you’d like to think.

So, what you’re saying is that we _should_ call a “tech general strike”?

B^)

I bet you believe full auto driving is ‘just around the corner’.

Debugging a neural net is the hard part. Sure, you get ‘crap results, but looks interesting’ by training it on the whole steaming internet. Just as you get ‘mostly drives, occasionally thinks truck is billboard’ from full auto driving.

Where do you start? Training data that fixes X breaks Y. Whack-a-mole. Endless testing and iteration. When you finally think you’ve got it, you don’t even know why.

AI gets overhyped by every generation of AI engineers. This isn’t different than last time, or the time before, or the time before.

Sure. If you say so. I mean, just keep in mind that LLMs like ChatGPT were trained to do one thing, and only one thing: predict text. That’s all. Billions of times, a sentence was plugged in with the last word missing, and ChatGPT tried to predict what that word was. It did this so much that, without being told how to add, or multiply, or what a prime number is, or how to code C++, or what schema therapy is, or baroque art, or any of the other weird little things it can do… it figured them out. It figured stuff out. Think about what that means. Then tell yourself “this isn’t different than the last time.”

Computers don’t figure anything out.

They are inanimate objects like a rock.

For most of human history anthropomorphizing inanimate objects like a computer was enough to get you committed to an insane asylum for the rest of your life.

What you’re saying about a computer “learning / figuring stuff out” is outrageously offensive to a real engineer like me.

I have got up and returned to my computer thousands of times in my career and never has it completed my algebra sequencing for me.

Stop saying “learn” when referring to a calculator; you sound insane.

_This isn’t different than the last time!_

It understands nothing; it’s just regurgitating words from the training set. It also regurgitates wrong words more often than the right words.

If it was as smart as you suggest, it could filter the painfully obvious incorrect results. Heuristically, for code, just bang the results through a compile and don’t return the ones that fail. But that won’t really work as code fragments on the net aren’t generally directly compilable, right or wrong.

You think ChatGPT can code C++? LOL. No. Perhaps as well as a dumb high schooler just learning to code. Copy from stackoverflow until it compiles, turn in assignment. But dumb HS kids don’t learn C++, they learn JS. I digress.
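For OpenSCAD in particular, the “compile it and discard the failures” heuristic from the comment above is easy to sketch, because rendering a .scad file does nothing beyond writing an output file. The Python below is a sketch under stated assumptions: it assumes an `openscad` executable on the PATH and that OpenSCAD exits with a non-zero status when an export fails; openscad_compiles and keep_compilable are hypothetical helpers, not part of any existing tool.

```python
"""Sketch of the "compile it and discard failures" filter for generated code.

Assumptions (not verified against any particular OpenSCAD version): the
`openscad` executable is on PATH, and it returns a non-zero exit status
when a file fails to parse or export.
"""
import os
import subprocess
import tempfile
from typing import Callable, Iterable, List


def openscad_compiles(source: str, timeout: float = 60.0) -> bool:
    # Write the candidate to a temporary .scad file and ask OpenSCAD to export
    # it as STL; a successful export is treated as "it compiles".
    with tempfile.TemporaryDirectory() as tmp:
        scad_path = os.path.join(tmp, "candidate.scad")
        with open(scad_path, "w") as f:
            f.write(source)
        try:
            result = subprocess.run(
                ["openscad", "-o", os.path.join(tmp, "out.stl"), scad_path],
                capture_output=True,
                timeout=timeout,
            )
        except (FileNotFoundError, subprocess.TimeoutExpired):
            # Missing binary or a hung render both count as failure here.
            return False
        return result.returncode == 0


def keep_compilable(candidates: Iterable[str],
                    check: Callable[[str], bool] = openscad_compiles) -> List[str]:
    # Return only the candidates that pass the check, in their original order.
    return [c for c in candidates if check(c)]
```

As the comment points out, this only weeds out code that fails outright; a candidate that renders cleanly can still be the wrong shape, and code fragments scraped from the web rarely compile on their own in the first place.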

This might be a case of non-overlapping skills, but I can’t even IMAGINE taking the time to write a prompt to an AI to construct such a simple little shape. Let alone writing out over three score lines of code manually (ludicrous!). I would open Blender and whip that model up in ~45 seconds. Export STL, slicer, OctoPi, done. There are FOSS tools already for creating such shapes intuitively.

People are sometimes blinded by the tools they are good at. You don’t need 6-axis CNC with AI integration to cut a 2×4 to length, you just need a saw and a pencil.

To be clear this isn’t meant as disrespect; check out a couple videos on youtube for learning Blender basics, download the latest version and try it out. CAD doesn’t have to stay separate from general 3d modeling, especially for simple stuff.

I don’t disagree with your basic point.

But use a better tool than Blender. That one stinks to heaven.

Tinkercad

Saying “saw and pencil” in one post, and then “Blender” in another? You just said “learn to ride a bike” and then “learn to fly an alien spaceship”. :)

Which is to say — I found Blender to have a really serious learning curve to it, and that’s even before you try to do anything dimensional. OTOH, I haven’t touched it in >5 years.

@Miles. Tinkercad is surprisingly solid. It’s a compliment rather than an insult that it’s easy enough for kids to learn it.

I see you’re trying to softly backpedal on the low-comprehension choice of ChatGPT.

But that’s not going to work; you have to be brutally honest with these people or they will not discern subtlety.

ChatGPT did not help him resolve this in any way, shape, or form; it was garbage that slowed down the engineering process.

The author just tried to say it nice.

Yep. Problem is that it comes back with something that seems about right, but isn’t. It does exactly the same thing when you ask it other things too.

People are cutting the damn thing too much slack, and saying “oh, it’ll improve”.

The best computers on the planet – human brains – take years to learn anything. Why would anyone expect an AI to do the same in a couple of weeks! Tell the damn programmers to go back to the drawing board and have a good think about how they are solving the problem.

“Why would anyone expect an AI to do the same in a couple of weeks!”

Well, maybe because an AI can try years of rookie mistakes in a couple of hours. You may have missed the fundamental advantage of computers.

> hole_radius = 1/16; // 1/8 inch hole radius

It’s learned to confuse inches for millimeters and to leave comments that conflict with its code. I’m impressed.

But that is accurate, if misleading: the radius of a 1/8 inch hole IS 1/16.

How is it confusing units? Remember that OpenSCAD and STL use arbitrary units. Dan and his intern can call them inches just as validly as we call them millimeters. It’s slicers that assume the units in input files are millimeters. If they’re actually inches, you just have to scale up by 25.4; IIRC, some slicers even have a button for that scale factor.
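Following that reasoning, converting ChatGPT’s numbers is one multiplication per parameter. A small Python sketch, assuming the values in ChatGPT’s listing are meant as inches and that the slicer reads coordinates as millimeters; the dictionary keys simply copy the OpenSCAD variable names.

```python
"""Convert the inch-based parameters from ChatGPT's listing to millimetres.

OpenSCAD itself is unitless; the 25.4 factor only matters because slicers
typically read STL coordinates as millimetres.
"""
MM_PER_INCH = 25.4

params_in = {
    "base_size": 1,
    "hole_radius": 1 / 16,  # radius of a 1/8 inch hole
    "arm_width": 1 / 4,
    "arm_height": 1 / 2,
    "arm_gap": 1 / 4,
}

# Round to 4 decimal places to hide floating-point noise in the output.
params_mm = {name: round(value * MM_PER_INCH, 4) for name, value in params_in.items()}
print(params_mm)
```

A 1/8 inch hole works out to about 3.2 mm across, not far from the 3 mm hole_dm in the hand-written version.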

(OT: I wish the WordPress comment form would simply refuse to load with a “you must reload the page and try again” message if it’s been too long since the page was loaded for it to accept a comment submission, instead of letting me write a comment and then eating it with the cryptic-to-commenters-on-sites-that-aren’t-Hackaday message “Nonce verification failed.” I do have a form recovery browser extension, Typio, but it doesn’t seem to work here—it only shows two entries from 2021. As a workaround, I sometimes copy the entire comment before clicking the post button, but I usually forget to.)

I mean, that result is NOT what I had envisioned from your description. A base with arms?

But if it was real life we would have talked about it, and gotten a common understanding.

I wonder what will come first (in context of llms such as gpt), skipping from text to speaking, or the ‘create common understanding before action’?

It’s different from people in that it can deliver a bunch of code in response to an incomprehensible query, but a bunch of code is not very clear feedback.

A couple months ago I asked ChatGPT to use OpenSCAD to generate a dodecahedron. The results were even worse than yours. Syntax errors, followed by the inability to define a list of edges. After hours of trying to correct it for various errors, the closest it ever got was to create a thing that looked like an asymmetric spiked golf ball suitable only for a Klingon, but at least it contained 12 polygons. Many of the pieces of code it produced weren’t even suitable to copy/paste and manually replace the hard coded values with the equations for generating a dodecahedron, but eventually I did get something that was sort-of structured like you’d expect given the proper equations.

I figured there’s very little example training data available online (or at least it never found any of the libraries of OpenSCAD code). Maybe it found some generated OpenSCAD code that was highly repetitive but not well structured. But the big problem is the body of work of OpenSCAD available online is simply a tiny, tiny fraction of that of C, Java, Python, etc., so it just doesn’t have much to go on.

For this to work better, an AI is going to need to ingest a lot more specific training info.
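The equations the commenter mentions are short. As a sketch, assuming the standard coordinate construction of a regular dodecahedron (the eight points (±1, ±1, ±1) plus (0, ±1/φ, ±φ), (±1/φ, ±φ, 0) and (±φ, 0, ±1/φ), where φ is the golden ratio), the Python below generates the 20 vertices, checks that they form 30 equal edges, and prints an OpenSCAD one-liner that wraps them in hull(). to_openscad and the 0.01 “nub” size are arbitrary choices for illustration, not part of any library.

```python
"""Generate the vertices of a regular dodecahedron and emit OpenSCAD.

Uses the standard coordinates (±1, ±1, ±1), (0, ±1/φ, ±φ), (±1/φ, ±φ, 0)
and (±φ, 0, ±1/φ). With these, every edge has length 2/φ.
"""
import itertools
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def dodecahedron_vertices():
    verts = list(itertools.product((-1, 1), repeat=3))  # the 8 cube corners
    for a, b in itertools.product((-1, 1), repeat=2):
        verts.append((0, a / PHI, b * PHI))
        verts.append((a / PHI, b * PHI, 0))
        verts.append((a * PHI, 0, b / PHI))
    return verts


def edges(verts, tol=1e-9):
    # Two vertices share an edge exactly when they are 2/phi apart.
    edge_len = 2 / PHI
    return [(i, j) for i, j in itertools.combinations(range(len(verts)), 2)
            if abs(math.dist(verts[i], verts[j]) - edge_len) < tol]


def to_openscad(verts, nub=0.01):
    # The solid is the convex hull of its vertices; a tiny cube at each vertex
    # gives hull() something to wrap.
    pts = ", ".join("[%.6f, %.6f, %.6f]" % v for v in verts)
    return ("hull() for (p = [%s]) translate(p) cube(%g, center = true);"
            % (pts, nub))


v = dodecahedron_vertices()
print(len(v), len(edges(v)))  # 20 30
print(to_openscad(v))
```

Generating the points and letting hull() find the faces sidesteps the part the commenter found hardest: getting a list of faces with consistent winding, which OpenSCAD’s polyhedron() would otherwise require.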

Problem with your theory:

ChatGPT’s results for more common languages are just as bad.

It’s basically just an obfuscated search engine.

“the body of work of OpenSCAD available online is simply a tiny, tiny fraction..”

Probably you haven’t looked for parameterized models in Thingiverse.

You don’t mention if this was done using ChatGPT 3.5 or 4, which makes a gigantic difference in terms of reliability.

Not on this task IME

I had a similar issue having chatGPT write a python script to calculate the ingredients to use to bake a single chocolate chip cookie. The script it made was mostly pseudocode and couldn’t be run by python without tons of syntax errors. It was a very simple test of chatGPT, but it failed badly.

OpenAI’s Code Interpreter provides a feedback loop. It can iterate on what it’s writing, perform tests, reflect on error messages, etc.

Here, there’s no feedback. I bet you’d all write something broken on your first attempt too.

I suspect the following approach may yield better results: pair it with a model similar to MiniGPT-4 (i.e., BLIP-2 + Vicuna backend), and let it see a few renderings of what it made in OpenSCAD. I suspect it’ll be able to iterate on its design this way, converging on something far more fitting.

That’s really cool. It’s also a great workaround to enable it to do math better.

Funny that “write symbolic python code to add two numbers together and then execute it in a sandboxed environment” works better than just asking straight up what the sum is, but … whatever works.

(And contributes maximally to the eventual heat death of the universe. Hail Eris!)

The problem that Dan encountered is that it came up with a show-stopper error on the first try, so there was nothing for him to iterate on, and he didn’t know the language well enough to fix it. Guiding the AI to get the result you want does often seem to require you to know some of what you’re asking for already.

But hiring a summer intern also requires you to know how to do the work that you’re asking of them. So…

For errors in a language it does not understand well, a feedback loop also sort of works; it just needs to be more complicated, involving executing external tools like “google(‘how to do this thing in openscad?’)” and then a few iterations of summarizing the results.

As long as your context is big enough, you can fit a few iterations of feedback and the accumulated new knowledge (including knowledge of the language) into it.

In my experience, this approach works even with new languages the LLM has had no chance to know in advance. I’ve experimented with typed concatenative DSLs, feeding the entire language spec into a prompt and then iterating on typing error messages and test run results a few times (similar to the code interpreter) until it gets things right. I did not try it with GPT-3.5/4, though; I used small local models (without any fine-tuning).
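The feedback loop described in these comments boils down to a few lines. The Python below is a minimal sketch, assuming the code generator and the checker can each be wrapped as a plain function; refine, toy_generate and toy_check are hypothetical names, and the demo uses fakes instead of a real model, compiler, or OpenSCAD.

```python
"""Skeleton of a generate / check / feed-back-errors loop.

`generate` stands in for whatever produces code (an LLM call, say) and
`check` for whatever validates it (running OpenSCAD, a compiler, tests).
Both are supplied by the caller; nothing here talks to a real model.
"""
from typing import Callable, List, Optional, Tuple

# check(code) -> (ok, error_text)
CodeChecker = Callable[[str], Tuple[bool, str]]
# generate(task, errors_so_far) -> code
CodeGenerator = Callable[[str, List[str]], str]


def refine(task: str, generate: CodeGenerator, check: CodeChecker,
           max_rounds: int = 5) -> Tuple[Optional[str], List[str]]:
    errors: List[str] = []
    for _ in range(max_rounds):
        candidate = generate(task, errors)
        ok, message = check(candidate)
        if ok:
            return candidate, errors
        errors.append(message)  # accumulated feedback, like a growing context
    return None, errors          # gave up after max_rounds


# Toy demo: a "generator" that only adds the semicolon once it has been
# told a semicolon is missing.
def toy_generate(task: str, errors: List[str]) -> str:
    return "cube(10);" if any("semicolon" in e for e in errors) else "cube(10)"


def toy_check(code: str) -> Tuple[bool, str]:
    return (True, "") if code.endswith(";") else (False, "missing semicolon")


result, history = refine("a 10 mm cube", toy_generate, toy_check)
print(result, history)  # cube(10); ['missing semicolon']
```

The design choice that matters is that every error message is kept and handed back to the generator, so later attempts can react to all earlier failures rather than only the most recent one.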

s/intern/slave/

Fixed that for you.

Not true.

Some slaves were useful.

Interns are virtually all net negative work. They should pay the employer.

Granted, that’s also true for most slaves, which is why societies with slaves were and are poor. Just have the guy holding the whip do the work instead.

In the end we find out the whole story was written by GPT, and using images from Midjourney – for Hackaday Traction.

I realize it’s satire, but you are spreading a harmful lie by pretending that ChatGPT can produce things of use.

All AI output is a rearrangement of existing human created original content.

Plagiarism is the academic term, theft of royalties is how the artist sees it.

But worse than all that, it makes capable adults wait for imaginary tech solutions instead of devising their own solutions. That’s why, as technology has increased, individual productivity has decreased.

This whole process reminds me of swatting flies indoors with a 12-gauge shotgun. Yup, it works, for a while, until the flies return through the holes you just made in the wall. Without CAD, I could make a coon-resistant latch in about half an hour out of whatever junk I have living in the wood shop, including an option to bolt the door shut with a deadman.

OnShape is free for hobbyists, and runs in the browser.

‘runs in the browser’

Thanks for the warning. Will avoid.

Ditto. “Runs in the browser” is just 2023 for “cloud-based”.

Even worse.

Cloud-based storage, and coded in JS on top of the world’s worst steaming pile of JS libraries.

The first part of that prompt leaves so much room for error: “a one inch square base.” What’s the Z-axis dimension?

If you tell ChatGPT about the difference() issue, and paste an example, can/would it then fix it?

If you modify the bare minimum of ChatGPT’s response to draw something in OpenSCAD, what does it give you back?

The bulk of the things I make around here using the 3D printer are made with basic shapes, and so OpenSCAD is the tool of choice. I’m still watching the ChatGPT game from the sidelines, and frankly, I have no interest in it at all.

I’m glad you wrote about it, though. It’s fun and helpful to know.

Thanks!

I can see why it’s hard to allow ChatGPT to test-run code (people would try to use it to do great harm), but I think they should allow it to test OpenSCAD, since it’s not a language that does much else than render things.

Addendum: Perhaps the people behind the various implementations of popular languages like Python should contact the ChatGPT people and supply them with a safe testing (compiling) environment and have that spit out error codes that ChatGPT can easily interpret.

A little cooperation might be the thing here. They would both benefit I would think.

FWIW, this is not an OpenSCAD-specific problem. ChatGPT (even 4o) simply doesn’t understand how to make a dodecahedron, even with its built-in drawing/rendering mechanisms.