The motto of Sun Microsystems back in the day was “The Network Is The Computer”, which was arguably more relevant when CPUs were slower, single-core affairs. These days, to get a faster compile, you’d simply throw more cores and memory at the problem. The thing is, most of us don’t do huge compilations all that often; we can’t remember the last time we even attempted a Linux kernel build. However, if you do find yourself with a sudden need to do so, and have access to a pile of machines hooked to a network, then why not check out distcc: the fast distributed C/C++ compiler? We’ve seen a few mentions in comments and a HaD links article referencing it, but never explicitly covered the tool. So here we go.

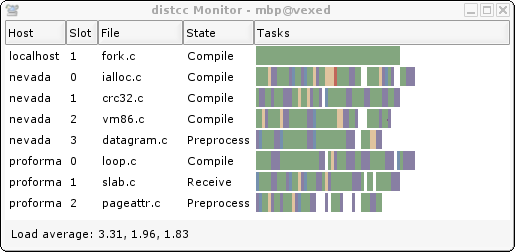

To call distcc a compiler is a bit misleading; it is really a compiler frontend. Each helper machine you have access to runs the distcc daemon process (distccd) and sits there awaiting instructions. To start a parallel compile, you invoke your normal build command with the ‘pump’ script, enable parallel build mode, set the compiler to ‘distcc’, and let the tool do the rest. A really nice feature is that the compiler hosts need not share a filesystem or have synchronised clocks. They can even be different operating systems and CPU architectures, with appropriate cross-compilers in place, so leveraging existing hardware without dedicating it to the task is much easier.
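As a rough illustration of the invocation described above, here is a minimal Python sketch that assembles the environment variable and command line for a pump-mode build. The host names `buildbox1` and `buildbox2` and their job limits are hypothetical, and sizing `-j` to the sum of the per-host limits is an assumption made for this sketch rather than a recommendation from the distcc project. The `DISTCC_HOSTS` variable, the `host/limit` syntax and the `,cpp,lzo` options are the ones documented in the distcc man page.

```python
import shlex


def distcc_hosts(servers, pump=False):
    """Build a DISTCC_HOSTS value from a {hostname: job_limit} mapping.

    In pump mode each host entry carries the ',cpp,lzo' options so the
    server does the preprocessing and transfers are compressed.
    """
    suffix = ",cpp,lzo" if pump else ""
    return " ".join(f"{host}/{limit}{suffix}" for host, limit in servers.items())


def build_command(servers, pump=False, compiler="gcc"):
    """Return the make command as an argument list.

    Assumption: one make job per remote slot. Tune this for your setup.
    """
    total_slots = sum(servers.values())
    cmd = ["make", f"-j{total_slots}", f"CC=distcc {compiler}"]
    if pump:
        cmd.insert(0, "pump")
    return cmd


# Hypothetical build machines and how many jobs each should accept.
servers = {"buildbox1": 8, "buildbox2": 4}

hosts_value = distcc_hosts(servers, pump=True)
command = build_command(servers, pump=True)

# Print a shell line you could paste into a terminal.
print(f"DISTCC_HOSTS={shlex.quote(hosts_value)} {shlex.join(command)}")
```

Dropping `pump=True` gives the plain mode, where preprocessing happens on the machine that starts the build and only the compile step is farmed out.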

Distcc compiler support is focussed upon GCC, but does include support for LLVM as well. OS support is primarily aimed at Linux, but it seems to run just fine on various BSD derivatives and even Cygwin on Windows. You can imagine a neat scenario where, whilst working on your laptop, you come home and kick off a new build, and your machine picks up the other machines in your vicinity and automatically taps into their CPU power. And you only need to set it up once!

Whilst this is distributed computing for your needs, on your network, we have seen many good uses lately of distributed computing across the whole internet. Like the one about a certain pesky coronavirus.

distcc!?! you have to configure the machines you’re going to use, last I checked: they don’t discover each other. https://github.com/icecc/icecream is like that but does discovery, so that machines can come and go from the network and the compile jobs just keep chugging along. I don’t understand why Gentoo doesn’t switch to that. At Qt, we use it extensively in the offices, and I’ve also used it at home when my collection of machines was getting a bit long in the tooth.

And both of these are rather primitive compared to the general-purpose clusters that we should have now (any process, not only a compiler, should be able to migrate to a less-busy machine); but somehow those are still not mainstream. I played with openmosix around 25 years ago, it seemed cool back then. I’ve been waiting for the other shoe to drop ever since. Let’s see, https://en.wikipedia.org/wiki/OpenSSI was supposed to have picked up where it left off, but the article says 2010 was the newest release so that doesn’t sound very hopeful. I figured since Plan 9 makes it easy to cross-compile for multiple architectures, and easy to mount all resources of remote machines locally, maybe a scheduler could be built to do roughly that sort of thing; but so far I haven’t tried. (It takes a while to get used to using Plan 9 at all, and then you have to write mostly C, perhaps some Go if you are willing to do without the full complement of platforms; so it’s a bit weird to do much general computing on it, for me so far anyway.) Haven’t tried Kerrighed either; I wonder if it’s worth trying.

Distcc can do mdns discovery for build agents.

icecream is looking rad, thanks for the recco. easy to set up on debian systems too.

Seem to remember NeXT machines could do this.

This sort-of happens inside Google, and with the OSS version of their build tool, Bazel (https://bazel.build/basics/distributed-builds). If your organization has the spare compute lying around, then it’s no big deal to spin up 100,000 processes to crunch C++ files in the cloud!

my issue is often the linking, not the compiling..

Compiling with a lot of cpu threads, and fast nvme drives, has got pretty fast even without leaving the machine. But all the linkers seem to be single thread…

There has been quite some progress on the linking front, see the mold linker for example.

I have lately worked on optimizing C/C++ builds. Isn’t just spawning the processes and reading the source files much of the work? I have looked up how distcc works, and it sends the preprocessed version of the source code over the network, so loading all the files and preprocessing them on the local machine cannot be avoided.

What if we did a fusefs on the server that holds all sources and headers? It can be updated using something like rsync that only sends a repeatedly used header once at the beginning of the process or on-demand. And it is also practical to only send the changes. This way we could further reduce the load on the client machine and at least in theory it could scale even better.

So your optimisation would be… file caching? There’s already a common cache system used with distcc, ccache. I understand it’s particularly useful with partial builds.
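For anyone wanting to try the ccache pairing mentioned above, here is a minimal Python sketch of the environment it needs. It relies on ccache’s documented `CCACHE_PREFIX` setting, which makes ccache hand cache misses to another command (here distcc). The helper name `ccache_distcc_env` is made up for this example, and it assumes the project’s Makefile takes its compiler from `CC`.

```python
import os


def ccache_distcc_env(base_env=None, compiler="gcc"):
    """Return a copy of the environment set up for ccache in front of distcc.

    ccache answers from its local cache when it can; on a miss it runs the
    real compiler through the CCACHE_PREFIX command, i.e. through distcc.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["CCACHE_PREFIX"] = "distcc"
    # Assumption: the build honours CC from the environment.
    env["CC"] = f"ccache {compiler}"
    return env


env = ccache_distcc_env({"PATH": "/usr/bin"})
print(env["CC"], "with prefix", env["CCACHE_PREFIX"])

# To actually build, pass the environment to your usual command, e.g.:
#   import subprocess
#   subprocess.run(["make", "-j12"], env=ccache_distcc_env(), check=True)
```

This way the cache lookup stays local, and only files that really need compiling go out over the network.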

hmm, why not just do make -j 32 locally? No overhead of file transfer, etc. Linux Kernel compiles in around 80-90 seconds or so.

With a decent SSD/NVME, it would beat even the largest clusters. By the time the files have finished transferring, I’m done compiling.

This isn’t for just 1 kernel, and one time. billy bob clippy gates

What if you do the full Linux From Scratch, with the Beyond LFS book, do some full system tests right after, and recompile stuff as well?

WTF? What kind of monster computer do you have that it can compile the kernel in 80 or 90 seconds? Or is it a REALLY cut down kernel?

Now I am curious… how long to compile Firefox and/or LibreOffice. Those are the really long builds on my machine.

i built quite some stuff for my dec3000 system (alpha axp) for dec unix, i.e. i replaced most proprietary stuff with gnu, and to have a 21164sx@533MHz on the network was a big helper. but this is talking day vs week :D . setting this up was a bit painful but once it works you’re quite fine – especially on custom systems.

pls note this was done years ago, distcc was fine for me and helped a lot.

it’s not perfect for big applications like firefox or libreoffice or “make world” but on vintage machines it helps a lot – especially if you’re not “cross” but “generation” compiling.

consider the man pages ;)

I’ve tried distcc to compile linux over multiple computers all around the house. It works, it’s easy. So why not? Just, some build environments won’t play ball, such as ninja. Ah well.

Aw nuts, I was hoping to set up a Zephyr build environment with this, but I think it uses ninja. For an embedded RTOS, it’s got a wild build process.

I avoid complex and large compilations. In 2006 when I began using Linux I had more luck compiling, but nowadays there are too many flavors of building toolsets I can’t feasibly debug anymore. Python and LTS distributions are a lifesaver for me.

Would distcc notice mismatching GCC versions?

I haven’t used it for ⅕ of an eternity…

While troubleshooting/tuning a large (200+ clients), long (~12 hours) distcc build on Sun hardware nearly three decades ago, the largest difference in build times between similar machines (same # MHz, same gigabit NICs) was traced back to the size of the L2 cache in the CPUs and the order of libraries in the LD_LIBRARY_PATH.