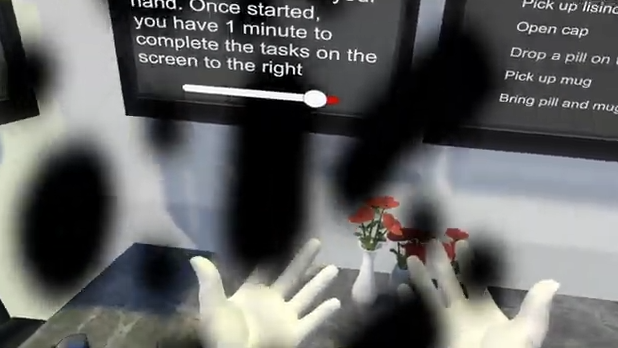

Researchers presented an interesting project at the 2024 IEEE Conference on Virtual Reality and 3D User Interfaces: it uses VR and eye tracking to simulate visual deficits such as macular degeneration, diabetic retinopathy, and other visual diseases and impairments.

VR offers a unique method of allowing people to experience the impact of living with such conditions, a point driven home particularly well by having the user see for themselves the effect on simple real-world tasks such as choosing a pill bottle, or picking up a mug. Conditions like macular degeneration (which causes loss of central vision) are more accurately simulated by using eye tracking, a technology much more mature nowadays than it was even just a few years ago.
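To make the eye-tracking point concrete, here is a minimal sketch of a gaze-contingent central scotoma: a soft-edged dark disc that follows the gaze point on each frame. It is an illustration only, not the researchers' implementation. The function name `apply_central_scotoma`, its parameters, and the assumption that frames are float NumPy arrays with gaze given in pixel coordinates are all hypothetical.

```python
import numpy as np

def apply_central_scotoma(frame, gaze_xy, radius, softness=0.25):
    """Darken a soft-edged disc centred on the gaze point.

    frame:    H x W x 3 float array with values in [0, 1] (assumed format)
    gaze_xy:  (x, y) gaze position in pixels, e.g. reported by an eye tracker
    radius:   outer radius of the disc in pixels
    softness: fraction of the radius over which the edge fades out
    """
    h, w = frame.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    # Distance of every pixel from the current gaze point.
    dist = np.hypot(xs - gaze_xy[0], ys - gaze_xy[1])
    inner = radius * (1.0 - softness)
    # Guard against a zero-width fade band when softness is 0.
    band = max(radius - inner, 1e-6)
    # 0 inside the inner disc, 1 beyond the outer radius, linear in between.
    visibility = np.clip((dist - inner) / band, 0.0, 1.0)
    return frame * visibility[..., None]
```

Calling this once per rendered frame with the latest gaze sample keeps the blocked region locked to the centre of vision, which a fixed on-screen mask cannot do.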

The abstract for the presentation is available here, and if you have some time, be sure to check out the main index for all of the VR research demos because there are some neat ones there, including a method of manipulating a user’s perception of the shape of the ground under their feet by electrically stimulating the tendons of the ankle.

Eye tracking is in a few consumer VR products nowadays, but it’s also perfectly feasible to roll your own in a surprisingly slick way. It’s even been used on jumping spiders to gain insights into the fascinating and surprisingly deep perceptual reality these creatures inhabit.

Pill bottles are a pain, but usually you take the same stuff every day. The thing that kills me is the cooking directions on many food products, and the ingredient listings. Little fonts and non-contrasting colors.

I think part of the problem is that anything with visually accessible text immediately registers as ‘medicinal’ which is at odds with trying to portray your product as either natural or exciting as well as aspirational.

Hmm, I’d have suggested the worst cause is that the amount of information that must be on the packaging forces the text to be small, especially on food. Apparently you can’t sell something without a full colour printed box/sleeve, with the pretty picture of what that thing is/could be made into and your company brand taking up something like 1/3 of the ‘front’, and the sides must stay pretty so it looks good stacked up – leaving only the back of the box to hold all the useful information…

Lots of people have morning meds and night meds. Ever accidentally taken a bunch of Benadryl right before you have to be somewhere? I don’t like having to go to work and meet the hat man and his army of spiders along the way.

“The Hat Man and his Army of Spiders”.

Now I really do want to start a band.

Funny thing, but more and more cooking instructions are done in graphical form, often on the front or side of the packaging, and that trips me up: I’m trying to read the small print while overlooking the simple graphical instructions in plain sight.

I wonder how well this simulation really works at selling the effects. The best VR headsets are getting pixel-dense enough to be really good for detail, it seems, but they are still not that close to the standard eyeball’s capabilities, and usually still have some unintentional visual aberration. I still really like the idea; I just suspect the VR simulation will fall a bit short.

Developing this and other similar things is a kind of morality play for a certain class of people right now

Interesting simulation, and I laud it for attempting to convey understanding, but as someone with permanent retinal damage I can attest that it does not at all look like black splotches in the field of vision. Perhaps in some way I’d welcome that if it did.

Instead it looks the same as the natural blind spots that all vertebrates have and that we played with as children in elementary school science class: it looks like nothing. Your brain fills it in.

I’d perhaps describe it as it looking like an ‘averaging’ of the colors surrounding the area, though that is just an approximation.

I can detect the damaged area in at least a few ways:

1) look at a textured surface, like a bath towel, under good lighting. Think. Notice that the texture is ‘wrong’ in spots. Those are the damaged areas.

2) look at a blank surface under good light and blink the eye rapidly. The healthy areas have the after-image effect. The damaged areas do not. You can get a full-field view of your damage this way. If you do it under white light and concentrate, you can perceive relative cone/rod damage at the boundaries.

3) fixate on a point and use a laser pointer to scan around in the field of vision. As the bright red dot gets close to the damage, it will become somewhat yellow, and then disappear altogether. I can trace out its boundary in detail on paper if a friend helps mark it where I indicate.

My ophthalmologist thinks I am an ‘interesting’ patient. But he heeds me now, having previously pooh-poohed my suggestion of macular edema, which ultimately necessitated steroid injections that in turn resulted in a pressure increase to ~70 mmHg (I am not kidding) and burned my retina. It was an interesting show to see while that occurred. LSD has nothing on a rapidly dying retina. I can attest to both experiences.

The eye chart and finger wiggling and even the OCT machine are blunt instruments in my opinion. OK, the OCT is excellent at surveying the topography, but that is not vision. And the eye chart is just silly. No one reads that way. Reading is real life. I suppose the eye chart is meaningful for refractive issues.

I’ll get off the soapbox, but I wanted to relate my peculiar experience. If these guys wanted to more accurately depict the experience, then a pixel averaging would be a fair first-order approximation, and some generative AI would be a good second. But I understand that producing this simulation is not their end goal but rather a tool in a bigger project.
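The pixel-averaging idea above can be sketched roughly as follows: instead of painting the damaged region black, fill it with the mean colour of a thin ring of pixels around it. This is only a first-order illustration of the comment's suggestion, not anything from the presented project. The function name `fill_with_surround_mean`, the circular region, the `ring_width` parameter, and the float image format are all assumptions made for the example.

```python
import numpy as np

def fill_with_surround_mean(frame, center_xy, radius, ring_width=8):
    """Replace a circular region with the average colour of its surround.

    frame:      H x W x 3 float array (assumed format)
    center_xy:  (x, y) centre of the simulated damaged area in pixels
    radius:     radius of the damaged area in pixels
    ring_width: width in pixels of the surrounding ring that is averaged
    """
    h, w = frame.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    dist = np.hypot(xs - center_xy[0], ys - center_xy[1])
    inside = dist <= radius
    ring = (dist > radius) & (dist <= radius + ring_width)
    out = frame.copy()
    if ring.any():
        # One mean colour per channel, taken from the ring only.
        out[inside] = frame[ring].mean(axis=0)
    return out
```

A single flat colour is cruder than what the brain appears to do, since texture is lost entirely, but it avoids the hard black blotch and could be combined with gaze tracking by passing the current gaze point as `center_xy`.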

If folks want a cure, then I would encourage stem cell injection. I am thoroughly convinced that will restore my vision and others’, if the politicians can get out of the way with their nonsense, desperately cleaving unto their fatuous notion of ‘power’.

The question there is whether, if a user stays in this VR simulation long enough, their brain starts filling in the gaps it can’t really see, producing a perceived effect similar to yours. Just how much of this is down to the brain’s plasticity and image processing, and how much is that your eyes are not so broken as to ‘see black’?

Interesting. I suspect the answer is ‘yes’. This would be an interesting experiment.

I would assert that ‘black’ is a perceptual color. ‘Nothing’ is not a perceptual color. ‘Nothing’ is the color that objects take on when they are behind you. Can the brain substitute ‘visual black’ for ‘blind black’? Definitely worth a test.

The retina is cool because it is a mini-brain. Its output goes first to the occipital lobe (where, quite frankly, most of our brain’s computational resources by volume are located), and then mostly on to the frontal cortex, where a lot of the thing we call awareness-of-our-situation happens.

All I can say in my circumstance is that reading is now an exhausting chore since I have to double check anything I ‘see’. But sometimes there is amusement in the malady. E.g. in Costco, I noticed someone carrying out a box of “Orgasmic[Organic] Raisin Bran”. Well, I think I should go back and get a box of that for myself. Or on the road a smorgasbord sign declaring “You can eat her[e] all day!” I didn’t know it was that kind of venue.

Yeah ‘nothing’ is a better description than ‘black’ for the state of your eyes – though in the case of the VR headset it can’t do anything to cease to exist in very specific spots, just not display anything.

Indeed. Hence my suggestion of color averaging or, better yet, a (specifically tuned!) AI simulation.

But ‘black’ is a lie. It is not at all black. ‘Black’ will just have you clawing your eyes out. ‘Nothing’ will have you clawing your mind out.

To some extent it is an experiment every VR user does anyway – the screen door effect, especially on the older, lower-resolution VR sets, leaves lots of unrendered gaps between the pixels. So while it’s not large blotches of nothing, it’s a similar sort of image processing that doesn’t take long to get past (at least with most styles of content).