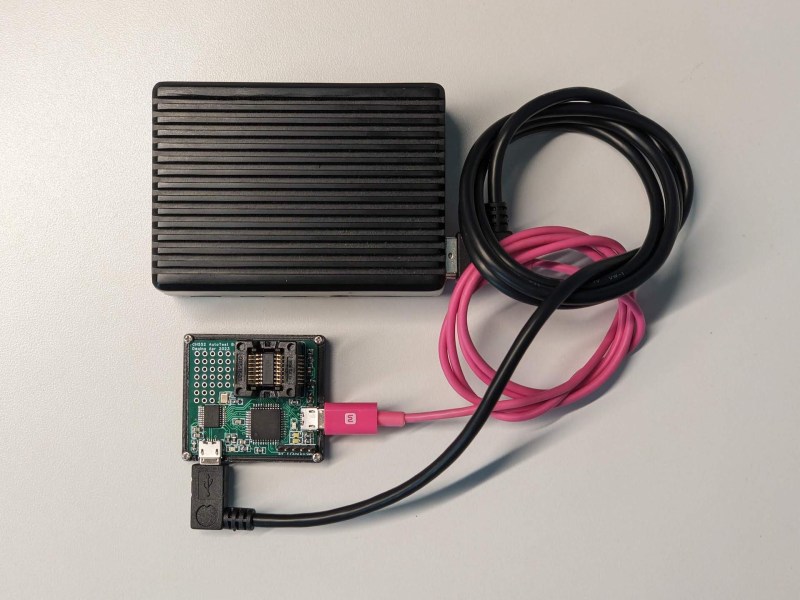

How can you tell if your software is doing what it’s supposed to? Write some tests and run them every time you change anything. But what if you’re making hardware? [deqing] has your back with the Automatic Hardware Testing rig. And just as you’d expect in the software-only world, you can fire off the system every time you update the firmware in your GitHub.

A Raspberry Pi compiles the firmware in question and flashes the device under test. The cool part is the custom rig that simulates button presses and reads the resulting values out. No actual LEDs are blinked, but the test rig looks for voltages on the appropriate pins, and a test passes when the timing is between 0.95 and 1.05 seconds for the highs and lows. Firing this entire procedure off at every git check-in ensures that all the example code is working.

So far, we can only see how the test rig would work with easily simulated peripherals. If your real application involved speaking to a DAC over I2C, for instance, you’d probably want to integrate that into the test rig, but the principle would be the same.

Are any of you doing this kind of mock-up hardware testing on your projects? Is sounds like it could catch bad mistakes before they got out of the house.

Been on a few projects that do this. For github you set the pi as a self hosted runner. Typically compilation isn’t done on the raspberry pi as it’s not got a great amount of compute. The raspberry pi CI stage grabs an artifact that’s been built in a previous stage and loads it, then executes a series of pytest scripts. As much as possible we try to use final hardware and build jigs to handle input / output. In some cases those jigs even allow for motion to test sensors.

When I worked at Microchip, there was a lab with a few benches filled with programmer/debuggers (PICkit, RealICE, etc.) connected to large PCBs containing samples from every supported microcontroller with their program/debug lines multiplexed with reed relays.

Each programmer was connected to a stack of PCs running Ubuntu and configured as Jenkins worker nodes. Every new push to XC8/16/32 or MPLAB IPE or a programmer’s firmware would trigger a suite of tests.

Fun thing was how lousy the coverage of this was, and how many years it took to get new MCUs added to the system.

Thats why we use jlinks at work :) I am responsible for same kind of testing at work (embedded system with multiple saml ucs) and jlink has way better python support.

J-run.exe <== please click me

Been doing this for the last 20+ years. It’s not without its problems, but it’s a million times better than nothing!

Former editor-in-chief of Hackaday (and my current coworker) Mike Szczys has been publishing a bunch about our HIL testing and all the surrounding tooling we do for The Golioth Firmware SDK. It’s an intense amount of work but as others have commented… It’s worth it.

https://blog.golioth.io/automated-hardware-testing-using-pytest/

A few years back, I did this for U-Boot using pytest and Jenkins. See https://github.com/swarren/uboot-test-hooks

This is nice, but I think it’s not the right approach for most cases. When I’m writing the firmware for a project, I write hardware abstraction layers over most peripherals. I then write the main logic in its own class, using the HALs. Then, I write unit tests against this. It’s pretty easy to write simple fakes for the HALs, so I run the unit tests on desktop.

Good test coverage is best practice for a reason – it makes it so much easier to do development work. With good test coverage, I have a lot more confidence when I make a change. I don’t need to extensively test the physical device with each iteration, which can be quite time-consuming and/or difficult.

You can see an example of how to set this up in this project: https://github.com/ademuri/open-motion-light

I use PlatformIO as my build system. It supports running tests written against the GTest framework.

This! There’s no excuse not to do it when dealing with 32-bit MCUs. But with AVR or MSP430 things get a bit complicated due to different word sizes and implicit type promotion. One could use a simulator, but I haven’t seen anyone using simavr and GTest together yet ;-)

How do you catch bugs in your HAL?

The same way that you’d catch bugs in your program if you didn’t have a HAL – with manual testing. This approach also doesn’t catch idiosyncrasies with hardware, but again, you’re still better off than if you didn’t have any tests at all.

I’ve found that my HAL implementations are quite simple. For Arduino functions like millis() and digitalWrite(), I implement fake versions for testing.

I also enable ASAN and UBSAN for my unit tests, to catch some C++ issues. I don’t run into too many of those, but it’s worth it since those issues can be quite difficult to catch.

Unit test takes more time to write as application itself.

Well, it does take some time to write good test coverage. But, as with any software, it’s an obvious cost versus the harder-to-see cost of manual testing and debugging. I would argue that for any project where you can’t stare at the code and easily verify that it’s correct, writing tests saves you time (and headache) in the long run.

Yeah, but hunting bugs that appear due to inadequate testing takes even more.

And it’s not a problem if you expect to work on the software in the long term. For a one-off sure, might not be worth it (though TDD has value even then). For a project that will be improved for some time the return on investment from good test coverage is very quick.

We have a significant team devoted to developing and maintaining our HIL smoke tests. We run them on all daily builds, so can narrow down to the day when a test breaks and isolate the commit that did it. We use git and Jenkins runners to drive the precess, and a variety of scripts, USB I

Ports, dedicated apis , audio capture and signal generators. The tests operate in different locations, for resilience against local outages, and all the results are put in a database for analysis. It’s an extremely powerful system for catching firmware errors and regressions.

People are fixated on CI/CD, just send the freakin binary to the server..

You need to reliably keep track of how and what built your binary. CI/CD is the most straight forward way

Please don’t go near any publicly available software. Get your “Im-a-rebel/genius-I-dont-need-tests” out on your local hardware with your own toy projects. Others already learned and are sick of having to explain this over and over.

Coming from software to hardware, and being something of a UnitTesting enthusiast, I always wondered how this skill/dogma would translate. Blinking leds is my goto visual test, being rather new, lazy and devoid of proper test kit.

This article makes me muse upon the possibility of using AI to monitor those leds flashes – making comparisons in their frequency and deducing changes.

I have been drinking.

The last sentence explains a lot, nobody needs “AI” involved in tests.

Done this on embedded systems. The trick was to define a HAL (Hardware Abstraction Layer).

Then the simulation side of things could mock out the HAL layer and test the vast majority of the code. The cool thing was that we also could one-off conditions for example a function returns a very rare

result from a low HAL function and would check how the main partof the code handled it.

The trick here is to use the simulation to outline and clarify all assumptions about how the HAL functions work. These become the requirements for the HAL. In other words, the HAL functions MUST comply to those requirements/assumptions otherwise the main code will fail.

Then to test the HAL functions we’d write a test harness. It would take commands from a test PC (for example) and run those on the device (usually a dev board version of it). We could then confirm that the HAL functions behaved according to the requirements/assumptions outlined above.

If the hardware changed and therefore the HAL changed, then these requirements could easily be re-tested or extended as needed. Then the main code could also be extended as needed to ensure it could handle any new requirements for the HAL functions.

The trick here is that all is fair in love, war and testing. So write the test harness to make whatever you need to test easy to do. Hack if necessary.

And the overall trick is subtle. If the HAL is defined at too low a level then it’s near useless. If it’s too high the test harness becomes difficult to drive for all cases and therefore more bugs are probable. The “just right” level is a difficult, subtle, architectural decision. It is a balance of low level hardware abstraction and yet high enough.

One way to think of it is to look at the behavior level. Instead of a low level function to get a pin value i.e. the HAL is abstracting pin 22 vs pin 24, define a function to get motor position i.e. the HAL may use several pins, have several low level I2C calls, etc. The main code simplifies the higher up it goes,

at the risk of complicating the code in the HAL.

Sounds like a lot of work, but in high reliability systems (e.g. medical devices) it pays in a big way.