We’re not very far into the AI revolution at this point, but we’re far enough to know not to trust AI implicitly. If you accept what ChatGPT or any of the other AI chatbots have to say at face value, you might just embarrass yourself. Or worse, you might make a mistake designing your next antenna.

We’ll explain. [Gregg Messenger (VE6WO)] asked a seemingly simple question about antenna theory: Does an impedance mismatch between the antenna and a coaxial feedline result in common-mode current on the coax shield? It’s an important practical matter, as any ham who has had the painful experience of “RF in the shack” can tell you. They also will likely tell you that common-mode current on the shield is caused by an unbalanced antenna system, not an impedance mismatch. But when [Gregg] asked Google Gemini and ChatGPT that question, the answer came back that impedance mismatch can cause current flow on the shield. So who’s right?

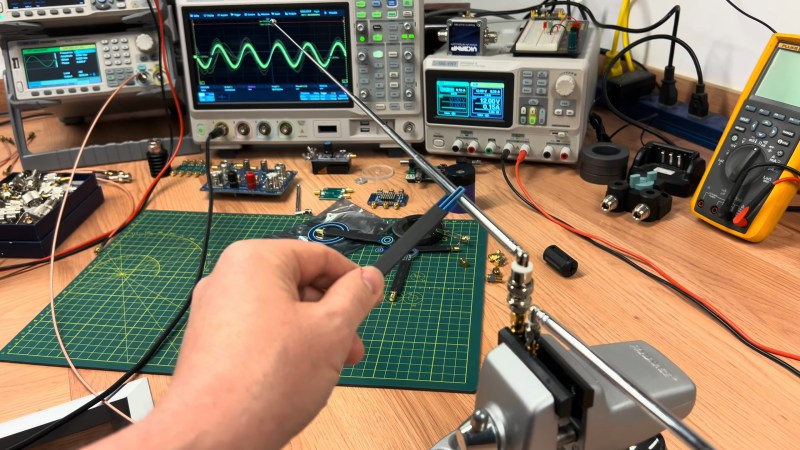

In the first video below, [Gregg] built a simulated ham shack using a 100-MHz signal generator and a length of coaxial feedline. Using a toroidal ferrite core with a couple of turns of magnet wire and a capacitor as a current probe for his oscilloscope, he was unable to find a trace of the signal on the shield even if the feedline was unterminated, which produces the impedance mismatch that the chatbots thought would spell doom. To bring the point home, [Gregg] created another test setup in the second video, this time using a pair of telescoping whip antennas to stand in for a dipole antenna. With the coax connected directly to the dipole, which creates an unbalanced system, he measured a current on the feedline, which got worse when he further unbalanced the system by removing one of the legs. Adding a balun between the feedline and the antenna, which shifts the phase on each leg of the antenna 180° apart, cured the problem.
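For readers who want numbers to go with the unterminated-feedline test, here is a minimal sketch of the standard reflection coefficient and SWR formulas. The function names and the 50 Ω default are our own choices for illustration, not anything taken from [Gregg]'s videos. Note that this math describes only the differential-mode mismatch inside the coax; it has no term for current on the outside of the shield, which is consistent with what the scope showed.

```python
import math

def reflection_coefficient(z_load, z0=50.0):
    """Voltage reflection coefficient at the load end of a feedline.

    z_load may be real or complex; pass math.inf for an open (unterminated) end.
    z0 is the line's characteristic impedance (50 ohms assumed here).
    """
    z_load = complex(z_load)
    if math.isinf(abs(z_load)):
        return complex(1.0, 0.0)  # open circuit: everything reflects, in phase
    return (z_load - z0) / (z_load + z0)

def swr(z_load, z0=50.0):
    """Standing wave ratio computed from the magnitude of the reflection coefficient."""
    gamma = abs(reflection_coefficient(z_load, z0))
    if gamma >= 1.0:
        return math.inf  # total reflection: open, short, or purely reactive load
    return (1 + gamma) / (1 - gamma)

if __name__ == "__main__":
    print(f"matched 50 ohm load: SWR = {swr(50.0):.2f}")
    print(f"100 ohm load:        SWR = {swr(100.0):.2f}")
    print(f"unterminated line:   SWR = {swr(math.inf)}")
```

An open-ended line is about as bad a mismatch as you can get, yet nothing in these formulas says anything about common-mode current.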

We found these demonstrations quite useful. It’s always good to see someone taking a chatbot to task over myths and common misperceptions. We look into baluns now and again. Or even ununs.

“We’re not very far into the AI revolution at this point”...

What AI revolution? Sometimes there are technological jumps. Meh. This is just good marketing.

AI has some obvious and severe limitations at the moment, but you are woefully uniformed if you think it is all “just good marketing”. Companies aren’t spending billions of dollars on it for “good marketing”.

Who said “all”?

Only you.

Details are important.

Otherwise it’s just blind fanboism.

Read my other posts here if you think I’m just an AI fanboi.

Companies absolutely spend billions on something they think they can sell. Doesn’t matter if it is good or not.

Indeed, they spend it on bad marketing. Facebook wasted $50B building a 3D VR version of Habbo Hotel. It was so crappy, even 4chan didn’t bother to come in and close the pool.

Maybe you need an AI spell checker : “uniformed”.

Exactly right. Some people just can’t handle the idea of barriers to entry being lowered. Ironically, many of the basic comforts and conveniences we enjoy today were decried by self interested groups at the time.

Fortunately, the world has a reliable habit of growing beyond them.

Lol, ok bud.

People like you are the reason I still have a programming job.

It’s always funny when people bring me the latest chatgpt crap, which I refuse to fix.

The amount of gpt generated code my company runs: 0

“This technology doesn’t do specifically what I want it to do and is therefore useless!”

is the new “should have been a 555 timer”.

And an ice cream maker can’t play a tune.

Yep, conversational google.

“What AI revolution? ” Agree. Just fancy search engine hype to make money. There are cases where an inference engine trained for a specific task can be very good at it. But better to just use Google or DuckDuckGo or whatever to get your ‘answers’ for general solutions. I can see the ‘draw’ though: with the word ‘AI’, people can get the idea it can make critical thinking unnecessary, i.e. let the ‘AI’ do it for you :rolleyes: .

I absolutely agree that AI is hot garbage for this sort of info. I think he misses one important point in the unterminated coax test though: unterminated coax has extremely high common-mode impedance, and the antenna coupling to the coax shield in the near field can, and almost always does, drastically lower CM impedance unless coax length and position are very carefully controlled, or a choke is used. Large mismatches in a real-world setting are indicative of conditions that make CM current way more likely.

Even if the common-mode impedance of the whole system is relatively good, >1kΩ, if the antenna impedance is also very high (a long end-fed wire can be 1kΩ or more) and there is no matching, at that point you’ve got a divider, and your common-mode current is going to be proportional to the ratio of the antenna impedance to the common-mode impedance. A properly designed common-mode choke will be >3kΩ, which is enough to swamp all but the most stubborn CM current. The mismatch doesn’t cause RF in the shack, the high antenna impedance does, but the SWR is a good indication that you’d better design the system with a particular eye toward CM current suppression.
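The "divider" in the comment above can be put into rough numbers. The sketch below assumes a deliberately crude model that is our own simplification, not the commenter's: the source current splits between two parallel paths, the antenna and the common-mode path along the shield, and both are treated as plain resistances. Real impedances are complex and frequency dependent, so read the results as trends only. The function name is hypothetical.

```python
def common_mode_fraction(z_antenna_ohms, z_common_mode_ohms):
    """Fraction of the total current that takes the common-mode path.

    Toy current divider: two parallel branches, magnitudes only.
    The branch with the lower impedance carries more of the current.
    """
    if z_antenna_ohms < 0 or z_common_mode_ohms <= 0:
        raise ValueError("impedance magnitudes must be positive")
    return z_antenna_ohms / (z_antenna_ohms + z_common_mode_ohms)

if __name__ == "__main__":
    # Low-impedance antenna, 1 kohm common-mode path: little shield current.
    print(f"{common_mode_fraction(50, 1000):.2f}")    # 0.05
    # 1 kohm end-fed wire, 1 kohm common-mode path: an even split.
    print(f"{common_mode_fraction(1000, 1000):.2f}")  # 0.50
    # Same wire with a 3 kohm choke in series with the common-mode path.
    print(f"{common_mode_fraction(1000, 1000 + 3000):.2f}")  # 0.20
```

In this toy model the shield current depends on the antenna and common-mode impedances, not on the SWR itself, which is the commenter's point.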

“The difference between ignorance and stupidity is that ignorance is curable.”

So does ‘schooling’ ChatGPT cure its ignorance? Or does it remain stupid?

Categorical error. ChatGPT doesn’t know anything, it is incapable of both stupidity and intelligence, and of ignorance or curiosity or learning. You can’t “teach” it in this sense. You can train it but this isn’t the same as teaching.

It’s just a reddit/stackexchange/quora/etc summarizer. It is their true Messiah, and their vengeance.

Large Language Models are like someone walking around a hospital listening to conversations. They make connections between, say, “infection” and “antibiotic”, and can correlate antibiotic families to infection types, etc. So effectively it’s sort of BSing without actually abstracting concepts let alone reasoning.

I think we’ll need a fundamental restructuring if we want to push AI into proper reasoning. We need a way for it to take an idea, assign truthiness, link it with related ideas, weigh it against those ideas and update its storage of knowledge.

I could see LLMs being a part of that process but we need to integrate them as smaller parts of a more elaborate model to cross into reasoning.

There are more advanced efforts in that direction, including models that can be inspected to explain and provide feedback.

ChatGPT does not “learn” from its interactions with users beyond the specific discussion at hand. I know this because I asked ChatGPT whether it did or not.

https://chatgpt.com/share/673a4d07-0894-8001-b7fe-ccedc53770f7

That is not true. It has thumbs up and down buttons, and that is reinforcement learning from human feedback (RLHF).

That’s hardly specific enough to be called “learning”. And did you even bother to read the link? I think I’m going to take ChatGPT’s word about how ChatGPT operates over yours.

Then you’re not very familiar with:

ChatGPT is a Large Language model.

It’s a big model of language, of English. It produces sentences, based on a big model of what words can often be found together and how they fit the grammar rules of the language. That is, it’s a big autocorrect – it’s a model of language, to generate language.

It’s not a Large Facts model. It doesn’t give you facts; it gives you sentences. They are grammatically correct and that’s the only kind of correct they are.
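The "what words can often be found together" idea can be illustrated with a toy bigram model. To be clear about the assumptions: this is not how ChatGPT is built (real LLMs are neural networks operating on tokens with far more context), and the corpus, function names, and seed below are made up for the demo. It only shows how text can be generated from co-occurrence statistics with no notion of whether the result is true.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, length=8, seed=0):
    """Build a word sequence by repeatedly sampling a likely next word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = counts.get(word)
        if not followers:
            break  # this word never had a successor in the corpus
        choices, weights = zip(*followers.items())
        word = rng.choices(choices, weights=weights)[0]
        output.append(word)
    return " ".join(output)

if __name__ == "__main__":
    corpus = "the coax feeds the antenna and the antenna feeds the air"
    model = train_bigrams(corpus)
    print(generate(model, "the"))
```

Every word pair the toy model emits appeared in its training text, but nothing checks that the sentence as a whole means anything.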

I did a ChatGPT session on a topic I know about asking some simple questions. At each point I pointed out the errors in the previous answer, and slowly the quality of the answers got better.

Then I went back later in the day, and asked the same initial question and the answers were back at the start – full of errors. So I asked “Don’t you remember our previous discussion on this topic?” Answer “No”.

That is true, but ChatGPT archives your previous discussions and you can revive them if you want to continue them later with the benefit of whatever corrections you had previously made. Click the “Open Sidebar” tab at the upper left if it isn’t already open.

Which isn’t the point at all.

I have done the same. Outside of that particular session, it does not learn.

I find ChatGPT to be really useful and I use it a lot, but it just makes stuff up when it doesn’t know the actual answer. I’ve had it on several occasions give me a lengthy and detailed response to a technical question that made no sense whatsoever.

A friend of mine just a few days ago asked ChatGPT what the SWR of a vertical antenna designed for 15m would be on 20m and 10m. ChatGPT provided a series of superficially plausible (but irrelevant) calculations that gave results that were off by more than an order of magnitude.

There are people who know nothing about a topic, yet will make up an answer to any question you ask.

Sometimes, they even get it right.

They are called: Bullshit Artists.

In the 1st Century CE they were called seedpickers. So these kinds of people have been around a long time!

I would like for every AI to have some kind of certainty score for each answer. It is not a useful tool as long as it acts like it has to answer every question with no clue to the user that it is likely wrong. No answer is much better than a false answer. False answers to engineering and science questions are potentially deadly.

It would have been interesting to see what the AI was using to figure out its wrong answer to the SWR question. In other words, is there incorrect data out there, or did the AI misunderstand it? Those answers are important for AI improvement.

It’s the same with Musk…

Think of a topic you’re knowledgeable about and then listen to him talk about it. He just mentions some related-sounding language and fills in the gaps with random rubbish.

Unfortunately it’s enough to fool a lot of people.

The biggest problem with the current “AI” systems is the “I” part. They are not Intelligent in any sense of the word. Until that part is resolved with new models they will never be actually Intelligent.

I would expect “artificial light” to be actual light. But I wouldn’t expect “artificial flowers” to be actual flowers – just things that appear to be flowers.

So the real problem is the “A” part. I usually read it with the second meaning.

the tendency to invent things when uncertain is undeniable, but there will be a few incremental changes in the near future that probably change how we perceive it.

but the thing that captures my imagination…chatgpt doesn’t converse with you, instead it predicts what a conversational partner might say. it doesn’t think, instead it predicts what combination of words thought might produce.

i can understand that many people don’t have enough humility or self-insight to admit that’s what goes on in their own heads…but most of us work in technology. surely we have met every kind of coping mechanism that someone who doesn’t really understand what they’re talking about uses to earn prestige / keep their job / attempt to be helpful. surely the idea that ‘humans don’t do anything but bs their way through subjects they don’t really understand while trying to project a vague image of competence’ is pretty easy for us to swallow :)

This is all true.

I’ve found Claude to be fine for what I do: nutrition and recipes. Claude was far more helpful in getting my EFHW sorted than hams at eham actually. None of the AIs are engineers or capable of much math or even logic. When they can do these things we will be off on another run like when the Internet became a “thing”. I’ve gotten help with C++ coding that generally was ok but specific code didn’t work.

I find that people who cannot intuit how to use chatgpt find it useless and a simple next random word generator. If you were smarter, your questions would elicit correct answers, and if one incorrect answer were to inevitably appear, you would recognize it as such and rephrase the question until you accurately and effectively converse with the vector database. Everyone else is pretending to be smart. Learning one thing to a high degree shows dedication, not genius.