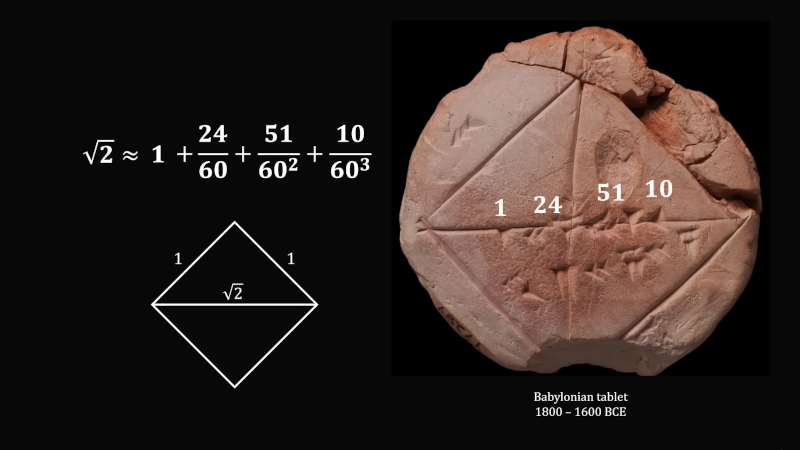

[MindYourDecisions] presents a Babylonian tablet dating back to around 1800 BC that shows that the diagonal of a unit square is the square root of two, or 1.41421. How did they know that? We don’t know for sure how they computed it, but experts think it is the same as the ancient Greek method written down by Hero. It is a specialized form of the Newton method. You can follow along and learn how it works in the video below.

The method is simple. You guess the answer first, then you compute the difference between the target number and your guess squared, and use that to adjust your estimate. You keep repeating the process until the error becomes small enough for your purposes.

For example, suppose you wanted to take the square root of 85. You can observe that 9 squared is 81, so the answer is sort of 9, right? But that’s off by 4 (85-81=4). So you take that number and divide it by the current answer (9) multiplied by two. In other words, the adjustment is 4/18 or 0.2222. Putting it together, our first answer is 9.2222.

If you square that, you get about 85.05, which is not too bad, but if you wanted to get closer you could repeat the process using 9.2222 in place of the 9. Repeat until the error is as low as you like. Our calculator tells us the real answer is 9.2195, so that first result is not bad. A second pass gives 9.2195, which already matches to four decimal places. You could keep going, but that’s close enough for almost any purpose.
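The arithmetic in this example can be sketched in a few lines of Python. The function name `refine` is just a label chosen for this illustration; it does not come from the video.

```python
def refine(x, guess):
    """One pass: adjust the guess by (x - guess^2) / (2 * guess)."""
    error = x - guess * guess
    return guess + error / (2 * guess)

first = refine(85, 9)         # 9 + 4/18
second = refine(85, first)    # same step, starting from the improved guess
print(round(first, 4), round(second, 4))
```

Calling `refine` again on its own output is all "repeat the process" means here.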

The video shows a geographical representation, and if you are a visual thinker, that might help you. We prefer to think of it algebraically. You are essentially creating each new estimate by taking the guess and the original number divided by the guess, and averaging them.
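To see why the "adjust by the error" description and the "average" description are the same thing, write g for the current guess and x for the number whose root you want (symbols picked here for illustration). Expanding the adjustment step gives the average directly:

```latex
g + \frac{x - g^2}{2g} = \frac{2g^2 + x - g^2}{2g} = \frac{g^2 + x}{2g} = \frac{1}{2}\left(g + \frac{x}{g}\right)
```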

The ancients loved to estimate numbers. And Hero was into a lot of different things.

I was actually using this technique on an Intel 80C186 in the mid 80s. We needed a square root function and the routine needed to complete in less than 10 msec. 10 msec was one revolution of the disk drive we were writing. I devised a guessing algorithm that used the last answer as the first guess. We got a new square root in 5-7 msec and the customer was happy. Old school.
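The "last answer as first guess" idea might look something like the sketch below. This is not the commenter's routine, which isn't shown; it is an integer-only Python illustration, and the name `isqrt_seeded` and the sample inputs are made up for the example.

```python
def isqrt_seeded(x, seed):
    """Floor of sqrt(x) for integer x >= 0, starting the iteration from seed."""
    if x < 0:
        raise ValueError("x must be non-negative")
    if x == 0:
        return 0
    r = max(1, seed)
    # One averaging step from any positive start lands at or above floor(sqrt(x)).
    r = (r + x // r) // 2
    # From there the estimates only decrease until they reach the floor root.
    while True:
        nxt = (r + x // r) // 2
        if nxt >= r:
            return r
        r = nxt

last = 1
for x in (10000, 10100, 10250, 10300):
    last = isqrt_seeded(x, last)   # the previous answer seeds the next call
    print(x, last)
```

When successive inputs are close together, the seed is already near the answer, so the loop exits after very few passes.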

I still use it with students. It covers a LOT of ground re floating point, and the limitations thereof.

How do we know they used this algorithm instead of simply drawing a large square on the ground and measuring it, then teaching the result as a known constant?

The answer given is correct to better than one part per million. It’s the best answer you can get with 3 sexagesimal places. You don’t get that from measuring a large square.
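That claim is easy to check numerically. The sketch below rounds the square root of two to three base-60 fractional digits and reports the relative error; the helper name `best_sexagesimal` is an assumption made for this example.

```python
import math

def best_sexagesimal(value, places=3):
    """Round value to the nearest fraction with `places` base-60 digits."""
    scale = 60 ** places
    n = round(value * scale)
    digits = []
    for _ in range(places):
        n, d = divmod(n, 60)
        digits.append(d)
    return n, digits[::-1]   # integer part, then the base-60 fractional digits

whole, frac = best_sexagesimal(math.sqrt(2))
approx = whole + sum(d / 60 ** (i + 1) for i, d in enumerate(frac))
rel_err = abs(approx - math.sqrt(2)) / math.sqrt(2)
print(whole, frac, rel_err)
```

The digits it produces, 1;24,51,10, are the ones usually read off the tablet, and the relative error comes out below one part per million.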

We don’t know what algorithm they used, but I suspect you’re underestimating the technology required to construct an accurate square and measure it to the precision shown.

Have a go, draw a square and see if the diagonal measures correctly to 5 decimal places. That’s 0.01mm in a metre, impossible to observe by eye. The bigger you go the harder to keep your edges straight. Unless you use lasers, which were unlikely to be readily available ;)

A modern day metrology lab could do it…

I’m tempted to say “hold my beer…”

If I can aim at a 1 meter target 400 meters away with a rifle and actually hit somewhere near the center, I can line up three sticks at equal distances 30 meters apart with pretty good accuracy. If the side length of the square is 60 meters, then the smallest difference in the diagonal I should measure is about a millimeter.

Of course it would be a massive undertaking to level a field and set up the “observatory”, do multiple measurements and average things out, make good guesses, etc. but you only need to do it once. Maybe they did it to check that the mathematical solution is valid.

This sounds like a very fun experiment. Please try! I’ll hold your beer when you do.

No need to level anything. A ditch filled with water will work. Somewhere I read they think that was how the pyramid foundations were laid so flat.

The slide rule implements this geometric relationship directly.

When I was going to school I would use that estimation method with short division. I found it to be fast because I could easily divide two-digit numbers into other numbers, e.g. 18 into 248: 18 into 24 goes once, remainder 6; 18 into 68 goes 3 times, remainder 14. I became mentally ill at the time and forgot long division, getting muddled, but that method got me through the last few exams.

Maybe I’m not understanding, but isn’t that just long division?

Just what were they doing to need this?

To know what they were doing to need this, I would advise you to read about the Sumerian and then the Babylonian civilizations. You will not believe what they accomplished! This calculation will be the least of it! Did you know that the clock system was invented by them? The concept of modular arithmetic is just another example.

Perhaps calculating the length of pieces to cut when building things with shapes that included triangular parts?

For one, building ziggurats and such. There’s a million times when building large things where having the square root is helpful – diagonal braces in scaffolding, checking if things are square, measuring height where you cannot reach a ruler using basic trig, etc.

You don’t need that much accuracy for architecture.

They say an inch is the bricklayer’s thou.

Spackle will cover it.

B^)

An architect once told his son, “When you think you’ve completed the design, add a foot to the elevation. The worst is that it would look bad, for if it is too low, you’ll have nothing but problems.”

The imaginary number i (square root of negative one) is used (whether those building things know it or not) in a lot of construction.

They had intricate architecture back then and would have run into moments when this would be useful. But as for the originator of the formula, I think people would be surprised how much pursuit of pure mathematics for its own sake was being done in very early periods of history. Aristocratic contempt for work often creates some interesting hobbies.

they were just mathturbating…

Similar is r = (x + r²)/(2r), repeated until you converge on an answer. I think I got this from Embedded Systems Programming magazine, Nov 1991.

The first method I was taught requires repeated divisions, but it’s easier to remember. You take your original number, divide it by an estimated square root, and you know the true square root is somewhere between your estimate and that quotient. Take the average of those two as your new estimate and repeat the process, until you can see the difference between the estimate and the quotient is converging adequately on the answer.
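As a rough Python sketch of this divide-and-average description: the name `sqrt_by_averaging` and the `tolerance` parameter are assumptions for the example, and it expects a positive input and a positive starting estimate.

```python
def sqrt_by_averaging(x, estimate, tolerance=1e-9):
    """Divide, average, and repeat until estimate and quotient nearly agree."""
    steps = 0
    while True:
        quotient = x / estimate
        # The true root always lies between the estimate and the quotient.
        if abs(estimate - quotient) <= tolerance:
            return estimate, steps
        estimate = (estimate + quotient) / 2
        steps += 1

root, steps = sqrt_by_averaging(85, 9)
print(root, steps)
```

The gap between the estimate and the quotient doubles as an error bound, which is why it makes a convenient stopping test.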

There’s another method that uses only addition and subtraction, but it’s extremely slow except for small numbers.

I should have put that simpler but very slow method above. Say X is your input, and R is the root. Here’s what you do:

R=0

X=X-R

R=R+1

X=X-R

Keep looping back to the second line until X becomes negative.

R=R-1
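Here is a small Python sketch of the loop above. It starts R at 0 so that the total subtracted after each pass is a perfect square (1, 4, 9, ...); with that starting value the result is the integer part of the root. The function name is made up for the example.

```python
def sqrt_by_subtraction(x):
    """Floor of sqrt(x) for a non-negative integer x, using only + and -."""
    r = 0
    while True:
        x = x - r
        r = r + 1
        x = x - r      # this pass removed (r - 1) + r = 2r - 1, the next odd number
        if x < 0:
            return r - 1

print([sqrt_by_subtraction(n) for n in (15, 16, 17, 85)])
```

The number of passes grows with the root itself, which is why the method is only practical for small inputs.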

Something good to implement in Logic World.

That’s not fair, I’m helpless against nerd-sniping :(

We had this at school, and I wanted to get rid of the division by 2. I remember having two fractions with the denominator of one being equal to the numerator of the other, the second easily derived from the first, and both differing by 1/(common denominator). Wrote it down, lost the paper snippet and forgot about it, thinking it was commonly known. Now I couldn’t find this algorithm anywhere, so by now I have spent several hours trying back and forth to recover it — and I got it again:

To approximate the root of x

1. Take a number c in the ballpark of root(x) (c = c/1) and write the second number as x/c

2. The pair is now c/d and n/c; the number after each letter is the iteration step

3. c1 = c0×c0 + n0×d0, d1 = 2×c0×d0, n1 = x×d1

4. c2 = c1×c1 + n1×d1, d2 = 2×c1×d1, n2 = x×d2

…

The root of x is between c/d and n/c, which are 1/(cd) apart.
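The steps above translate into integer-only Python roughly as follows. The function name `rational_sqrt_steps` is an assumption for this sketch, and the fractions are deliberately left unreduced, as in the description.

```python
def rational_sqrt_steps(x, c, steps):
    """Integer-only iteration; returns (c, d, n) describing the pair c/d and n/c."""
    d = 1
    n = x * d
    for _ in range(steps):
        # Both new values are computed from the old c and d.
        c, d = c * c + n * d, 2 * c * d
        n = x * d
    return c, d, n

c, d, n = rational_sqrt_steps(2, 1, 3)
print(f"{n}/{c} <= sqrt(2) <= {c}/{d}")
```

After at least one step, c/d sits at or above the root and n/c at or below it, so the two fractions bracket the answer.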

No proof, sorry, just what I observed when playing with some examples.

argh, got bitten by the formatting: my multiplication sign switches between italics and normal letters, but I think the equations stay readable, so…

ok, found some bits that may be useful in a proof:

– find a, b with a/b < sqrt(x) < xb/a

– a[n+1] = a[n]^2 + xb[n]^2

– b[n+1] = 2 a[n] b[n]

The error is less than a/b-xb/a = (a^2 – x b^2)/ab (with all the common prime factors stripped out), hence we want (a^2 – x b^2)=1. Under which conditions this is true needs further examining, I suspect it to be true for “close enough” values of a, b.

Once you get a[n]^2 – x b[n]^2 = 1 (#1), you also get (with brackets for clarity)

(a[n+1]^2) – (x b[n+1]^2)

= (a[n]^4 + 2 x a[n]^2 b[n]^2 + x^2 b[n]^4) – (4 x a[n]^2 b[n]^2)

= (a[n]^4 – 2 x a[n]^2 b[n]^2 + x^2 b[n]^4) = (a[n]^2 – xb[n]^2)^2 = 1 according to (#1)

So once your error is less than 1/cd (from last comment), it stays less than 1/(cd) for all following iterations.
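A quick numeric check of that identity, as a sketch: the helper name `pell_step` and the starting values are chosen for illustration. Starting from 3/2 with x = 2, where 3^2 – 2×2^2 = 1, the quantity a^2 – x b^2 should stay at 1 on every step.

```python
def pell_step(a, b, x):
    """One iteration: a/b -> (a^2 + x*b^2) / (2*a*b), without reducing."""
    return a * a + x * b * b, 2 * a * b

x, a, b = 2, 3, 2            # 3^2 - 2 * 2^2 = 1
residuals = []
for _ in range(4):
    residuals.append(a * a - x * b * b)
    print(a, b, residuals[-1])
    a, b = pell_step(a, b, x)
```

If the starting pair gives some other value, the same algebra says the quantity is squared on each step rather than held constant, at least before any common factors are stripped out.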

*underestimating

In the 1970s when cheap handheld calculators became available they did not have a square root function key. Lots of key presses to get an answer or whip out the slide rule. Calculators also brought home the importance of “significant” digits. You might have the right answer so to speak but not get credit because you included too many digits.

The oldest working calculator I ever played with had a scope screen and a 4 number stack displayed.

You could watch it iterate on square roots etc. Super cool.

Of course it was RPN but predated HP calculators.

I’m guessing it was a Friden.

Mine was a Wang

RPN?

What is more interesting is… where is the proof that the method converges for all square (root)s?

If x is not negative, the sequence is u[n+1] = 1/2 (u[n] + x/u[n]).

If the elements u[n] converge to a limit u, that limit satisfies u^2 = x:

u = 1/2 (u + x/u) ==> u^2 = x, so u is the square root of x.
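A fixed-point argument like this tells you what the limit must be, but not that the sequence actually converges. One standard way to fill that gap, sketched here in the same u[n] notation and assuming x > 0 and a positive starting value, is to look at the error after one step:

```latex
u_{n+1} - \sqrt{x} = \frac{1}{2}\left(u_n + \frac{x}{u_n}\right) - \sqrt{x} = \frac{u_n^2 - 2u_n\sqrt{x} + x}{2u_n} = \frac{(u_n - \sqrt{x})^2}{2u_n} \ge 0
```

So from u[1] onward every term is at or above sqrt(x). And because (u[n] – sqrt(x))/u[n] is at most 1 for such terms, the error at least halves on each step, which forces it to zero.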

(coming here from my other comment) sqrt(x) is always between a/b and x/(a/b), because when you multiply those two you get x, no matter if a/b is bigger or smaller than sqrt(x) (maybe I should have written |a[n]^2 – x b[n]^2| = 1, but ok).

The error is smaller than a/b – xb/a = (a^2 – xb^2)/ab, and with increasing n the numerator increases with the power of 2^n worst case, and the denominator with the power of 3^n, so the distance of a/b and xb/a approaches zero while sandwiching sqrt(x). It may be helpful to write it down on paper, fill in the formula for .[n+1] over two or three steps and watch for the greatest exponent.

(sorry for spamming, ideas usually appear just after sending…)

From the error figure we see, there is a “fast” convergence (|a^2-xb^2|=1) and a “slow” convergence (|a^2-xb^2|>1). As shown above you can never get from “fast” to “slow”, but you could get from “slow” to “fast”, if ab mod |a^2-xb^2| = 0. Does this ever happen? If yes, when? If not, why not? What is the difference between “fast” and “slow” fractions, are they really fast and slow, or are they just equally steep nice-looking or not-so-nice-looking steps towards sqrt(x)?

Obviously you enter the “fast” lane, when xb^2 is next to a square number. With this you can tune the iteration before starting by increasing b as necessary. Is there a suitable b for every x?

A National Technical Schools course I took in the mid-1980s taught this root finding method.

It seemed so weird doing all the calculations to find the next significant digit, and know the other digits in that calculation were wrong. So, if one wanted to find the next significant digit, they’d have to go through the calculations again.

I see another Veritasium video in the future. Too good for Derek to pass.

Makes you appreciate the square root key on that calculator you bought in the dollar store. For $1.25!

That reminds me, I need to replace the battery(s) in mine!

I suspect unless he’s used landmasses, “geographical representation” is actually geometric. That said, this is cool! And geometric methods are going to be very common in old math, so it’s worth having. We once cared about things being constructible, for instance, and especially before slide rules there were a number of calculations that were just regularly done with proportions and shapes.

I love that for all the contributions Newton made, the “guess, check, adjust guess and check again (and again, and again and again) until you are happy” … method, is what “Newton’s Method” is. Like, for a dude that invented a branch of math and showed how to get precise analytical solutions to previously unsolvable or intuition based estimated math problem solutions… it is just poetic. Or hilarious. Euler’s chain, LHospital rule (can’t remember how to spell that one)… all those guys’ rules are super helpful. But Newton? Naw. Just guess at that sh*t and get ‘er good enough.

A remnant of prediluvian civilization. History as we know it is agreed-on BS.