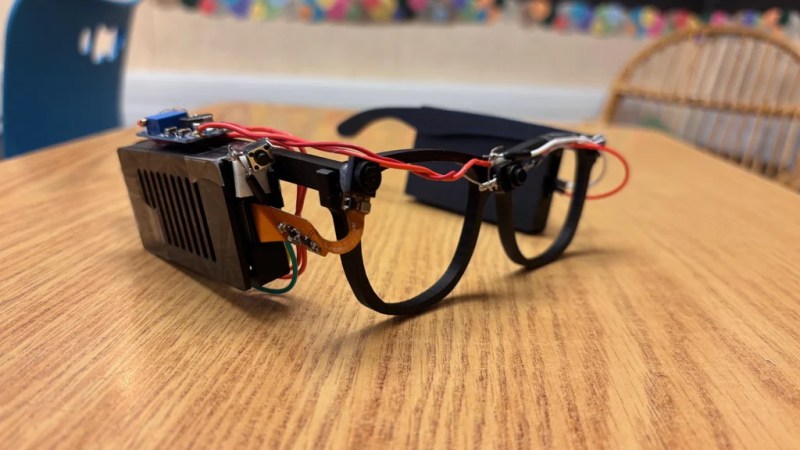

Glasses for the blind might sound like an odd idea, given the traditional purpose of glasses and the issue of vision impairment. However, eighth-grade student [Akhil Nagori] built these glasses with an alternate purpose in mind. They’re not really for seeing. Instead, they’re outfitted with hardware to capture text and read it aloud.

Yes, we’re talking about real-time text-to-audio transcription, built into a head-worn format. The hardware is pretty straightforward: a Raspberry Pi Zero 2 W runs off a battery and is outfitted with the usual first-party camera. The camera is mounted on a set of eyeglass frames so that it points at whatever the wearer might be “looking” at. At the push of a button, the camera captures an image and passes it to an API that performs the optical character recognition. The text can then be passed to a speech synthesizer so it can be read aloud to the wearer.
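For a rough idea of how that capture, recognize, and speak flow hangs together, here is a minimal Python sketch. It is not [Akhil]'s code: the write-up doesn't name the OCR API or the speech synthesizer, so the three stages are passed in as plain callables, and every name here (`read_aloud`, `capture_image`, `recognize_text`, `speak`) is hypothetical. On real hardware you would wire the button press to call `read_aloud` with functions backed by the camera, the OCR service, and a speech engine of your choice.

```python
from typing import Callable


def read_aloud(
    capture_image: Callable[[], bytes],
    recognize_text: Callable[[bytes], str],
    speak: Callable[[str], None],
) -> str:
    """Run one capture -> OCR -> speech pass and return what was spoken.

    All three callables are placeholders (assumptions, not the project's
    actual components): swap in a camera capture, an OCR call, and a
    text-to-speech call.
    """
    image = capture_image()
    # OCR output often contains stray newlines and runs of spaces;
    # collapse them so the synthesizer reads a clean sentence.
    text = " ".join(recognize_text(image).split())
    if not text:
        # Give the wearer audible feedback even when nothing was recognized.
        text = "No text found"
    speak(text)
    return text


if __name__ == "__main__":
    # Stand-in stages so the sketch runs on any machine, no Pi required.
    read_aloud(
        capture_image=lambda: b"fake image bytes",
        recognize_text=lambda image: "EXIT\n  ONLY",
        speak=print,
    )
```

Keeping the stages as injectable functions is just one design choice; it makes it easy to test the flow on a desktop before touching the hardware.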

It’s funny to think about how advanced this project really is. Jump back to the dawn of the microcomputer era, and such a device would have been a total flight of fancy—something a researcher might make a PhD and career out of. Indeed, OCR and speech synthesis alone were challenge enough. Today, you can stand on the shoulders of giants and include such mighty capability in a homebrewed device that cost less than $50 to assemble. It’s a neat project, too, and one that we’re sure taught [Akhil] many valuable skills along the way.

Very cool project, and by an eighth grader no less!

I am really out of touch with machine vision libraries etc. In my mind, they are still hard to use and out of reach, and OCR is unobtainable by an individual. Glad to be reminded I’m wrong though.

They’re not that scary or hard to get started with. You can even ask an AI to help you put together some crude examples (to test and correct) of how to use computer vision and OCR (Python OpenCV rulez).

Kudos to Akhil, certainly far more impressive than anything I was doing in the eighth grade!

Probably more useful for dyslexics than the blind, but seriously cool.

Getting into Lobot territory.. but you keep it up, kid!

Very cool! I could see this expanding from not only reading out text, to also using object recognition so the wearer can understand their surroundings. AI tools could certainly be useful in identifying objects, pathways, etc.

Examples:

– “Closed door 30 degrees to your left, doorknob on righthand side of door”

– “Small object on ground ahead”

– “Turn right 10 degrees to stay on sidewalk/path”

And many of the most commonly communicated instructions (such as navigation instructions) could be abbreviated, or even turned into distinct sounds the wearer would learn. You could also play these sounds/communications to the left ear, right ear, or both as a way of communicating if the object is in the left/right/center of the field of view.
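As a rough illustration of the left/right/center idea, here is a small Python sketch that turns an object's horizontal position in the camera frame into left and right volume levels. It uses constant-power panning, which is my own choice of technique and not something from the project, and the function name `stereo_gains` and the -1.0 to 1.0 position scale are assumptions made up for this example.

```python
import math
from typing import Tuple


def stereo_gains(position: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a cue at a horizontal position.

    position is a hypothetical scale: -1.0 is the far left of the field
    of view, 0.0 is dead center, 1.0 is the far right. Out-of-range
    values are clamped.
    """
    position = max(-1.0, min(1.0, position))
    # Map [-1, 1] onto [0, pi/2]; cos/sin keep the total power constant,
    # so a cue sounds equally loud wherever it is placed.
    angle = (position + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


if __name__ == "__main__":
    for label, pos in [("left", -1.0), ("center", 0.0), ("right", 1.0)]:
        left, right = stereo_gains(pos)
        print(f"{label}: left={left:.2f} right={right:.2f}")
```

Multiplying each audio sample of a cue by these two gains before sending it to the left and right channels would place the sound accordingly.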

Maybe interface these with Google Translate for on-the-fly translation of foreign texts.

Parlez-vous français ? Non, mais je lis le français.

This could be good for legally blind folks, and if it connected to Google Translate, one could read Chinese, Arabic, and so forth without having to type the text.

Blindness can be a big spectrum. There are plenty of people who have enough vision to be able to tell the shape of a book or a sign, but not enough to be able to clearly make out the letters.

It’s a common misconception that blindness means total darkness.

Very impressive, especially coming from 8th graders!! Kudos!