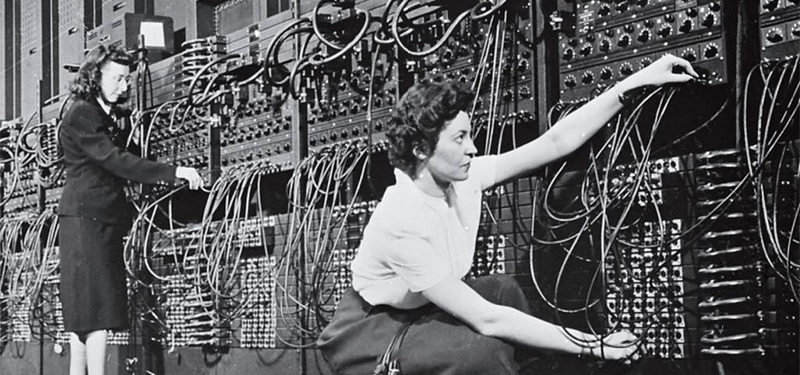

Over the decades there have been many denominations coined to classify computer systems, usually when they got used in different fields or technological improvements caused significant shifts. While the very first electronic computers were very limited and often not programmable, they would soon morph into something that we’d recognize today as a computer, starting with World War 2’s Colossus and ENIAC, which saw use with cryptanalysis and military weapons programs, respectively.

The first commercial digital electronic computer wouldn’t appear until 1951, however, in the form of the Ferranti Mark 1. These 4.5 ton systems mostly found their way to universities and kin, where they’d find welcome use in engineering, architecture and scientific calculations. This became the focus of new computer systems, effectively the equivalent of a scientific calculator. Until the invention of the transistor, the idea of a computer being anything but a hulking, room-sized monstrosity was preposterous.

A few decades later, more computer power could be crammed into less space than ever before, including ever higher density storage. Computers were even found in toys, and amidst a whirlwind of mini-, micro-, super-, home-, minisuper- and mainframe computer systems, one could be excused for asking the question: what even is a supercomputer?

Today’s Supercomputers

Perhaps a fair way to classify supercomputers is that the ‘supercomputer’ aspect is a highly time-limited property. During the 1940s, Colossus and ENIAC were without question the supercomputers of their era, while 1976’s Cray-1 wiped the floor with everything that came before, yet all of these are archaic curiosities next to today’s top two supercomputers. Both the El Capitan and Frontier supercomputers are exascale-level machines, meaning they sustain more than one exaFLOPS (10^18 floating-point operations per second) in double-precision IEEE 754 calculations, and both are based around commodity x86_64 CPUs in a massively parallel configuration.

Taking up 700 m² of floor space at the Lawrence Livermore National Laboratory (LLNL) and drawing 30 MW of power, El Capitan’s 43,808 AMD EPYC CPUs are paired with the same number of AMD Instinct MI300A accelerators, each containing 24 Zen 4 cores plus CDNA3 GPU and 128 GB of HBM3 RAM. Unlike the monolithic ENIAC, El Capitan’s 11,136 nodes, containing four MI300As each, rely on a number of high-speed interconnects to distribute computing work across all cores.

At LLNL, El Capitan is used for effectively the same top secret government things as ENIAC was, while Frontier at Oak Ridge National Laboratory (ORNL) held the title of fastest supercomputer until El Capitan came online about three years after it. Although LLNL and ORNL currently have the fastest supercomputers, there are many more of these systems in use around the world, even for innocent scientific research.

Looking at the current list of supercomputers, such as today’s Top 9, it’s clear that not only can supercomputers perform a lot more operations per second, they also are invariably massively parallel computing clusters. This wasn’t a change that was made easily, as parallel computing comes with a whole stack of complications and problems.

The Parallel Computing Shift

The first massively parallel computer was the ILLIAC IV, conceptualized by Daniel Slotnick in 1952 and first successfully put into operation in 1975 when it was connected to ARPANET. Although only one quadrant was fully constructed, it produced 50 MFLOPS compared to the Cray-1’s 160 MFLOPS a year later. Despite the immense construction costs and spotty operational history, it provided a most useful testbed for developing parallel computation methods and algorithms until the system was decommissioned in 1981.

There was a lot of pushback against the idea of massively parallel computation, however, with Seymour Cray famously comparing the idea of using many parallel vector processors instead of a single large one to ‘plowing a field with 1024 chickens instead of two oxen’.

Ultimately there is only so far you can scale a singular vector processor, of course, while parallel computing promised much better scaling, as well as the use of commodity hardware. A good example of this is a so-called Beowulf cluster, named after the original 1994 parallel computer built by Thomas Sterling and Donald Becker at NASA. This can use plain desktop computers, wired together using for example Ethernet and with open source libraries like Open MPI enabling massively parallel computing without a lot of effort.
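As a rough illustration of the bookkeeping that a library like Open MPI coordinates on such a cluster, the sketch below splits a range of work items into contiguous blocks, one per node, computes a partial result for each block, and combines them. It is plain Python running sequentially, with a loop standing in for the nodes; `block_range`, `partial_sum` and `cluster_sum` are made-up names for illustration and not part of any MPI API.

```python
def block_range(n_items, n_nodes, rank):
    """Return the half-open range [start, stop) of items owned by node `rank`.

    The first (n_items % n_nodes) nodes get one extra item, so block sizes
    differ by at most one.
    """
    base, extra = divmod(n_items, n_nodes)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def partial_sum(start, stop):
    """The work one node would do: a sum of squares over its own block."""
    return sum(i * i for i in range(start, stop))


def cluster_sum(n_items, n_nodes):
    """Simulate scatter, compute and reduce.

    On a real cluster each iteration would run on a different machine at the
    same time; here they simply run one after another (assumption for the
    sake of a self-contained example).
    """
    partials = [partial_sum(*block_range(n_items, n_nodes, rank))
                for rank in range(n_nodes)]
    return sum(partials)  # the 'reduce' step, performed by a single node
```

The point of the block layout is that every node can work out its own share from nothing more than its rank and the problem size, so no central administrator has to hand out individual items.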

Not only does this approach enable the assembly of a ‘supercomputer’ using cheap-ish, off-the-shelf components, it’s also effectively the approach used for LLNL’s El Capitan, just with not very cheap hardware, and not very cheap interconnect hardware, but still cheaper than if one were to try to build a monolithic vector processor with the same raw processing power after taking the messaging overhead of a cluster into account.
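To get a feel for why that messaging overhead matters, here is a back-of-the-envelope model in the spirit of Amdahl's law. The function name, the `serial_fraction` value and the linear per-node `overhead_per_node` term are illustrative assumptions, not measurements of El Capitan or any other real cluster.

```python
def estimated_speedup(nodes, serial_fraction, overhead_per_node=0.0):
    """Estimate speedup over one node for a job normalised to 1 unit of time.

    serial_fraction:   share of the job that cannot be parallelised (0..1).
    overhead_per_node: coordination time added for every node in the job,
                       a crude linear stand-in for messaging cost (assumption).
    """
    parallel_time = (1.0 - serial_fraction) / nodes
    total_time = serial_fraction + parallel_time + overhead_per_node * nodes
    return 1.0 / total_time


# Made-up parameters: 1% serial work, a tiny fixed cost per participating node.
for n in (1, 16, 256, 4096):
    print(n, round(estimated_speedup(n, 0.01, 1e-5), 1))
```

With these made-up parameters the estimate climbs at first and then falls again once coordination costs dominate, which is the chickens-versus-oxen trade-off in miniature.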

Mini And Maxi

One way to look at supercomputers is that it’s not about the scale, but what you do with it. Much like how government, large businesses and universities would end up with ‘Big Iron’ in the form of mainframes and supercomputers, there was a big market for minicomputers too. (At this time ‘mini’ meant something like a PDP-11 that’d comfortably fit in the corner of an average room at an office or university.)

The high-end versions of minicomputers were called ‘superminicomputers’, which are not to be confused with minisupercomputers, which are another class entirely. During the 1980s there was a brief surge in this latter class of supercomputers that were designed to bring solid vector computing and similar supercomputer feats down to a size and price tag that might entice departments and other customers who’d otherwise not even begin to consider such an investment.

The manufacturers of these ‘budget-sized supercomputers’ were generally not the typical big computer manufacturers, but instead smaller companies and start-ups like Floating Point Systems (later acquired by Cray) who sold array processors and similar parallel, vector computing hardware.

Recently David Lovett (AKA Mr. Usagi Electric) embarked on a quest to recover and reverse-engineer as much FPS hardware as possible, with one of the goals being to build a full minisupercomputer system as companies and universities might have used them in the 1980s. This would involve attaching such an array processor to a PDP-11/44 system.

Speed Versus Reliability

Amidst all of these definitions, the distinction between a mainframe and a supercomputer is much easier and more straightforward at least. A mainframe is a computer system that’s designed for bulk data processing with as much built-in reliability and redundancy as the price tag allows for. A modern example is IBM’s Z-series of mainframes, with the ‘Z’ standing for ‘zero downtime’. These kinds of systems are used by financial institutions and anywhere else where downtime is counted in millions of dollars going up in (literal) flames every second.

This means hot-swappable processor modules, hot-swappable and redundant power supplies, not to mention hot spares and a strong focus on fault tolerant computing. All of these features are less relevant for a supercomputer, where raw performance is the defining factor when running days-long simulations and when other ways to detect flaws exist without requiring hardware-level redundancy.

Considering the brief lifespan of supercomputers, currently in the order of a few years, compared to decades with mainframes and the many years that the microcomputers which we have on our desks can last, the life of a supercomputer seems like that of a bright and very brief flame, indeed.

Top image: Marlyn Wescoff and Betty Jean Jennings configuring plugboards on the ENIAC computer (Source: US National Archives)

I heard Lily Tomlin’s voice as Ernestine when I saw that first photo. “A gracious good morning…”

The phone in my pocket is more powerful than a 1970s room-sized computer. In 50 years’ time will a pocket-sized device be more powerful than today’s room-sized computer? Sadly I am unlikely to find out.

Probably… and just as likely it’ll need every bit of its power to run Windows.

The majority of phones, much like the majority of these parallel computer clusters, run an OS that’s Unix/Linux based.

“Ultimately there is only so far you can scale a singular vector processor, of course, while parallel computing promised much better scaling, as well as the use of commodity hardware.”

Well, yes and no. At some point, it causes a high amount of processing power just to manage so many parallel processors or processes.

Parallel processing puts a heavy burden on the scheduler that shouldn’t be underestimated, I mean.

So I think that the 1024 chicken vs 2 oxen comparison wasn’t as foolish as it may seem.

Having a few oxen under control is less troublesome than lots of chickens. ;)

For years, I have been wondering how the work can be administered and split up to the various processors in a way that’s not just more work than the administrator doing the work itself. I suppose it depends on the type of work. I have a chance to do massive parallel processing on a small scale to experiment, but I have not started yet.

A long time ago I used to work alongside Cray engineers on the last generations of those machines. They were not exactly monolithic even then, and had an enormous amount of redundancy, down to paired processors with logic to flag single bit errors on output. These systems’ management was quite work intensive as well, with most scheduling being essentially manual in order to squeeze as much usable workload as possible, there was a literal paper chart we kept updated on the for reference with everything blocked out (it really helped when frustrated researchers wanted larger time slots).

The last system was also somehow insanely hard on its external cache, an array of 512 drives. We lost quite a few every time the machine was shut down, and an actual power failure (hilariously because we overloaded the power station link) caused some 300 to die.

Those few oxen were certainly the mantra of CRI (Cray Research Inc.) before SGI. Sadly, for the FEA code I worked on, those few oxen weren’t very effective when they got to more than two handfuls.

I worked for an FEA provider from the late 80s through early aughts; I was also on CRI’s Fortran Advisory Board in the ’90s. Sadly for CRI, a 16 CPU run of our code wasn’t often that much faster than 8 CPUs on a C90. To be clear, we had some math kernels, notably an MxM, that got to the high 90s percent efficiency due to CRI’s vector features, including chaining, tailgating, hardware gather/scatter, etc. The poor CPU scaling was due to resource contention on a shared-memory parallel (SMP) system, even on an architecture that was legendary for maximizing resource availability. To be fair, this wasn’t only CRI’s problem; we were seeing these SMP issues across the gamut of systems used in CAE. Forewarned is forearmed: we restructured and rewrote our code for distributed memory parallel processing (e.g., clusters), which demands minimal interactions. Even running those distributed codes on the C90 wasn’t a substantial improvement. The ’90s saw RISC processors (and then x86) surpass the C90’s scalar performance. Worse still, systems like IBM’s Power 2 RS-580 were computationally competitive with the C90, even when the C90 brought its full vector capability to bear; performance-per-watt and performance-per-dollar were greatly in favor of the 580.

Back to the article’s main point. In the mid 90s, a US automotive manufacturer presented CRI and my employer with a challenge. They demanded we make a specific modal analysis (vibration modes) of a body-in-white (the body shell) run in 6 hours, start to finish. When we first got the data sets, the solution phase (i.e., only solving the system of equations) took 6 hours–reading the input, building the system of equations, and outputting the results were a few more hours. CRI and my employer eventually (a year later?) met the customer’s challenge. Perhaps 5 years later (in the early aughts), I ran that identical analysis on my /home/ Intel Linux system–it too met the challenge.

No mention of Linux?

This is another area where Linux really shines brightly.

Up to around 2003 most of the computers in the TOP500 ran some Unix variant, then a transitional period, and since 2017 100% of the TOP500 is running some variant of the Linux kernel.

https://en.wikipedia.org/wiki/TOP500

Even in 2001 Linux big iron was catching up. I don’t suppose you remember IBM’s pro Linux ad campaign at the time but they were pushing hard and most supercomputing facilities already had one or two “mid-tier” supercomputing clusters based on Linux. In fact, probably Sun’s last real gasp was parallel compute on things requiring massive amounts of memory, with the peak offerings being supercomputing clusters for bioinformatics. These were all running Linux. (It was a pain in the ass to adapt to older data centers, completely screwed up our environmental control and I even ended up correcting the department head in front of brass for not knowing the problem from requirements Sun’s engineer provided.)

Where I worked we would have listed in the top 10 with at least one of these systems were it permitted. (Including the Cray we would have listed for years prior, but they even did strategic purchasing to make it look like our machines weren’t top spec for the time.) These are just the quirks of a public institution sharing with [REDACTED].

Hey Seymour, El Capitan is plowing a field with 43,808 chickens instead of two oxen.

No mother, that’s just the northern lights.

Imagine the… No, never mind, don’t. Between the static at low humidity, thousands of chickens, this is not a fun picture, I didn’t even want to complete it.

What’s a supercomputer? A regular computer in 30 years?

I often think of it as a “big iron”, a mainframe, a host computer.

The big computers of the early days distinguished themselves from ordinary modern PCs by using terminal devices and a time-sharing/multi-user concept.

PCs, as we know them today, didn’t use this concept before MP/M, I think.

That’s when terminals and time-sharing came into play.

Concurrent DOS, PC-MOS/386 or Wendin DOS offered similar in the 80s, I think.

In the mid-20th century there also were so-called “process computers”,

which had the job of controlling something (machinery, other computers) or processing lots of data.

To some degree, computers in rocket stages could be called “supercomputers” maybe.

The amount of computing they performed “on the fly” was comparably enormous in the 1960s and 70s.

Not unlike the powerful computer imagined on board of the USS Enterprise (TOS). ;)

Define PC…

Was a workstation a personal computer?

I say ‘no’. Too damn expensive.

But formfactor says ‘yes’.

LSI-11?

You’ve forgotten some of the worst mess ‘microcomputers’ encompassed.

For example, DOS sucked, but compared to the ‘OS’ in an Apple 2?

In the early old days there were 3 grades of ‘OS’ (best to worst).

1. OS supported basic.

2. OS was part of/extension to basic.

3. OS was written in basic.

IIRC 3 was CompuColor.

Also recall the ‘printer’ that was an array of solenoids meant to sit on a Selectric typewriter.

Netmare 2 was once a godsend…Sucked balls, but didn’t crash.

It was a glorious mess.

IBM coined the ‘PC’ term.

Late in the game.

In any case Amigas were multitasking, just not protected memory. Blitter!

“Define PC…”

In a nutshell?

A Z80 microcomputer capable of running CP/M.

Or an x86 microcomputer capable of running DOS or a successor.

Also there’s personal computer and Personal Computer.

The latter is often associated with the term “IBM PC”,

which is a reference to the IBM PC 5150, its successors or compatibles.

There also were “MS-DOS compatibles” that could run DOS but weren’t strictly IBM PC compatibles.

The NEC PC-9801 line comes to mind, or the Sirius-1 (Victor 9000).

Perhaps there are more definitions, but that’s splitting hairs.

The C64 had “personal computer” written on some boxes, but that’s a marketing lie.

The C128, and especially C128D, was a real PC, though. It could run CP/M thanks to its Z80 CPU.

I hope I could help you! You’re welcome! 😃

learned something new today, computers are pretty damn hard to fit in a box. well… metaphorically.

6502 hate…

also 8080…

Before the IBM PC the common term was ‘micro computer’.

If your being OS centric…8080 Altair’s ran CPM before the Z-80 existed.

IIRC it also ran Berkeley Unix, if you had a day for bootup.

“6502 hate…”

Sorry, what? How? Where? I didn’t even mention it!

The 6502 simply wasn’t used for word processing, for machine control, for the early CP/M computers of the 70s.

It’s not my fault that things had been the way they’ve been.

And it’s a matter of fact that CP/M and its many clones ran on historical computers such as IMSAI 8080 or Altair 8800 or North Star Horizon.

These computers used the S-100 bus and became the first “personal computers” as we know them.

Little boxes with a serial glass terminal or a CRT device+ASCII keyboard.

The 6502 was great for toy computers such as VIC20 or the Famicom/NES, though.

It was used in a lot of kids’ toys and did its job very well.

The 6502-based Apple II was popular, too, of course.

It had more than 20 clones world wide, I think.

Here in Europe Apple II was popular before 1983 or so.

That’s when VC20/C64 took the place, I suppose.

With a Z80 “Soft Card”, the Apple II could run CP/M software, too.

There even was an 8088 card for running DOS, at some point.

“If your being OS centric…8080 Altair’s ran CPM before the Z-80 existed.”

»If you’ve being OS centric« – Oh my, how that sounds! 🙄

Pal, I’m not cherry picking here. CP/M was a big deal! Really big!

It was (among) the first operating systems for microcomputers and has its place in history.

It’s deeply rooted within the computer hobbyist movement of the mid-70s and the history of the development of the first PCs.

It was a software standard, too, allowing software interoperability between different computers.

It was the first time that software was “portable” between computers of different users.

There had been more than 20 CP/M compatible OSes that were CP/M binary compatible and could run CP/M programs.

The only requirement was that the processor could handle i8080 instructions (later: Z80 instructions).

»8080 Altair’s ran CPM before the Z-80« Sure. But the 8080 was replaced by Z80 soon. “A new star was born”, so to say.

Most CP/M programs were written for Z80.

Turbo Pascal v3 , for example. Very popular example.

Plain DR CP/M still ran on the basic i8080, but applications rarely did anymore. Developers used Z80 programming, and applications compiled with TP made use of Z80 registers.

There had been Z80 compatibles such as the NSC800, for 8080/8085 upgrade purposes.

“Before the IBM PC the common term was ‘micro computer’.”

The term “personal computer” did exist by the late 70s already.

Originally, people used the term if they meant a microcomputer with a typewriter keyboard, a good readable 80×24 or 80×25 screen (former terminal standard), a floppy drive or hard drive storage.

Something you could do work on. Something you did run on a minicomputer or mainframe just before.

The personal computer was a personal computer,

users no longer had to book computer time when sharing processing power on a big computer.

That’s exactly how CP/M came into play, the Control Program/Monitor.

With a personal computer running CP/M, you could use your microcomputer any time. The way you want.

No need to share it with others anymore.

Lesser computers were a “hobbyist computer”, “game computer”, “educational computer” or “home computer”.

The Commodore PET 2001 had almost been some sort of personal computer, thanks to its industry bus interface.

However, it had a datasette recorder and a chiclet keyboard initially.

It also had a 6502 instead of 8080 or Z80.

That was a contradiction of features, thus it was more like an inflated terminal with built-in Basic rather than a typical “PC”.

But that’s how real life is, simply, I guess.

Certain things are just hard to categorize.

It’s not for nothing that PET stood for “Personal Electronic Transactor”.

I think that terms like “personal computer” and “home computer” and “graphics workstation” are also device classes.

Thus, these apparatuses can be defined by their use case/application.

Hence I said “in a nutshell” before.

There were historic computers that were personal computers and simply had certain traits in common (such as the ability of running CP/M or DOS).

And users back then were referring to them as personal computers in daily life, simply.

The “IBM PC” is such a prominent case, simply.

I read the term “supermini” for the first time in “The Soul of a New Machine”. The Eclipse was one.

Interesting term. Historically, a “mini” was a minicomputer, a computer that had the size of a desk instead of a cabinet.

Likewise, a “micro” was a microcomputer, using a microprocessor, which did fit on a desk.

The British also used to describe home computers as “micros”, I think.

In the 1980s, at least. Again, very interesting, I think.

The 80386 was being called “a mainframe on-a-chip”, too.

Probably because of its power, but also its privilege model (rings 0 to 3), protected mode and powerful memory-management unit.

In this case, a SuperMini was a minicomputer with 32-bit capabilities and, possibly, virtual memory.

Also, my error: the Supermini was the Eagle; the Eclipse was the previous minicomputer from Data General.

“and the many years that the microcomputers which we have on our desks can last” .

This is really only recent history ‘performance-wise’. When we were running C64s, then x86s, then x86_64, it seemed like we were always wanting more ‘performance’ and changing out computers. Now that isn’t so for most of us. My 5900x and 5600x are still ‘screaming fast’ for the desktops and VMs. The only reason to jump to the latest is because either the current system dies, or because we just ‘want to’ for bragging rights. At least for me, I don’t have a ‘logical’ reason to justify buying a ‘new’ system (AI is not a valid reason nor silly games) for the foreseeable future.

I found it interesting that the RPI-1 was 4.5 times faster than the super computer CRAY-1 in 1978. The RPI-4 was around 50 times as fast. And of course the RPI-5 is way faster than the RPI-4. So a little $70 credit card sized computer only using 25W full bore. Compared to the Cray-1 at $7,000,000, 10,500 pounds and 115KW of power. Come a long ways…

As cool as the idea of super computing is, I still struggle to understand what use it would be for a hobbyist to dig into building their own. I remember seeing RPI cluster builds on this site a few years back. I have a friend who works with large GPU clusters for medical imaging, and I understand the typical examples for LLMs and weather modeling. Is there anything that can’t be achieved by a traditional computer that would interest a hobbyist or experimenter like me?

Beowulf clusters of space heaters.

I worked for a defected Russian and his wife on what the boss insisted was a “mainframe supercomputer” processor under “Starwars” contract in the early 80s. (I think the state department owed the boss a favor so they gave him a million to build his dream). His wife had a monthly column in the IEEE journal of supercomputing and they both were friends with Cray. At the time, Svetlana edited her papers on a ‘286 PS2.

This thing was built with horrible mechanical design (S100 boards soldered together into drawer frame panels). The whole thing was wirewrapped and used about 10k 74LS’ TTL ICs. We did have a single 16×16 bit flash multiply chip that used 5W. We used 52 x 50pin ribbon cables in each processor element. A whole drawer sized panel was nothing more than the single clock barrel shifter using MUXes.

It was “dynamic architecture” and consisted of 4 x 16bit processor elements designed to be linked into any combination of adjacent processors, i.e. 4x16bit, 2x32bit, 1x64bit, 1x48bit and 1x16bit etc.

They all ran the same instruction set regardless of configuration and could switch configs in a clock or two. A single 50 pin cable linked the processors together.
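For the curious, the possible groupings of four adjacent 16-bit elements can be enumerated with a short Python sketch. It assumes that only neighbouring elements can be joined and that every element belongs to exactly one group, which is one reading of the description above rather than anything taken from the original design; the names `configurations`, `ELEMENT_BITS` and `N_ELEMENTS` are invented for the example.

```python
from itertools import product

ELEMENT_BITS = 16  # width of one processor element
N_ELEMENTS = 4     # number of elements in the machine


def configurations(n=N_ELEMENTS, bits=ELEMENT_BITS):
    """Yield every way to group n adjacent elements, as tuples of word widths.

    Each of the n - 1 boundaries between neighbours is either linked or not.
    """
    for links in product((False, True), repeat=n - 1):
        widths = [bits]
        for linked in links:
            if linked:
                widths[-1] += bits   # extend the current group by one element
            else:
                widths.append(bits)  # start a new group
        yield tuple(widths)


for config in configurations():
    print(" + ".join(f"{w}-bit" for w in config))
```

Under those assumptions there are eight configurations in total, since each of the three boundaries between neighbours is independently linked or unlinked.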

Cool thing was we implemented the full, brand new, IEEE floating point double precision standard, which means we could do 128bit floating point math in hardware without any co-processor.

It took 50 full sized pages of schematic for each processor. (All 4 were identical except a single identity strap.)

It was Harvard architecture and used dual 16 word instruction prefetch pipelines to hide any branch clocks.

We used an Apple IIe to load the instructions into the instruction memory, and a signal generator as a clock. Most of the time we single clocked it to debug the logic. We did once get it running at 200kHz just for fun.

I called it the “Man from UNCLE” setup because we had LEDs on all 5 internal busses and the ability to force individual bits at any point. Each processor had around 256 LEDs working.

One way to get yelled at was to compare any part of it to a microprocessor. This was a MAINFRAME SUPERCOMPUTER! The boss used to say that his friend Cray used technology to freeze an IBM360 while we had the architecture.

The money ran out and the thing was relegated to a dusty warehouse somewhere, but I walked away with profound experience of implementing functions with “hard logic” and internal functions of a computer. To this day I can still switch between binary, Hex, and decimal with a glance.

A super computer is a computer designed and built without regard to cost with the target of maximum performance. Anything else is not a super computer. Other types of machines are built with cost or manufacturability in mind. Once cost control is part of the design or manufacturing, you are not building a super computer. If you look back at the CDC-6600 and following Cray supers, they follow that model. Crays pushed the boundaries of what was possible at the time, sometimes failing. The ETA super was an example of failure.

As a side to this, most early supers were designed for vector processing and running FORTRAN as fast as possible. They were not particularly fast at integer processing.

Nowadays, the super computers they build are massively parallel machines, which can scale pretty much as wide as you want or are willing to pay for.

No recognition of LEO – Lyons Electronic Office.

Noteworthy because it was the FIRST machine developed specifically for commercial application (not “Weaponry”, or “Science”).

Tea shops – 1950s etc. Overnight order fulfillment, optimized delivery routing (scatter out/gather in, etc.). Vacuum tube LEO I and LEO II (probably 15 machines built), transistor LEO III (yes, they used Roman numerals).

BTW, the term “cryptanalysis” would not have been recognized in the ’40s. The term “code breaking” would have.

Nice to see a photo of women working on something other than a legacy plain old telephone service (POTS) switchboard. The photo shows the ENIAC (1945-ish) being programmed by ‘physically rewiring it using patch cords and setting switches on plugboards.’ Of the 200 or so women hired as ‘computers’ (see: “Hidden Figures”), six were ENIAC’s primary programmers. https://en.wikipedia.org/wiki/ENIAC….

Per wikipedia: “ENIAC’s six primary programmers, Kay McNulty, Betty Jennings, Betty Snyder, Marlyn Wescoff, Fran Bilas and Ruth Lichterman, not only determined how to input ENIAC programs, but also developed an understanding of ENIAC’s inner workings.[43][44] The programmers were often able to narrow bugs down to an individual failed tube which could be pointed to for replacement by a technician.” So, not exactly a “Lily-Tomlin-as-Ernestine-telephone-operator” :-)