By some estimates, the English language contains over a million unique words. This is perhaps overly generous, but even conservative estimates generally put the number at over a hundred thousand. Regardless of where the exact number falls between those two extremes, it’s certainly many more words than could fit in the 64 kB of memory allocated to the spell checking program on some of the first Unix machines. This article by [Abhinav Upadhyay] takes a deep dive on how the early Unix engineers accomplished the feat despite the extreme limitations of the computers they were working with.

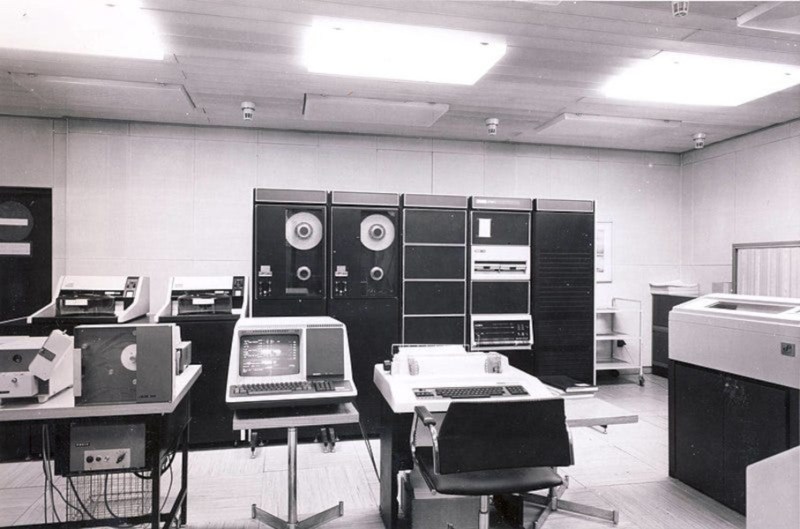

Perhaps the most obvious way to build a spell checker is by simply looking up each word in a dictionary. With modern hardware this wouldn’t be too hard, but disks in the ’70s were extremely slow and expensive. To move the dictionary into memory it was first whittled down to around 25,000 words by various methods, including using an algorithm to remove all affixes, and then using a Bloom filter to perform the lookups. The team found that this wasn’t a big enough dictionary size, and had to change strategies to expand the number of words the spell checker could check. Hash compression was used at first, followed by hash differences and then a special compression method which achieved an almost theoretically perfect compression.

Although most computers that run spell checkers today have much more memory as well as disks which are orders of magnitude larger and faster, a lot of the innovation made by this early Unix team is still relevant for showing how various compression algorithms can be used on data in general. Large language models, for one example, are proving to be the new frontier for text-based data compression.

What does this have to do with the article at all?

“By some estimates, the English language contains over a million unique words.”

I wouldn’t doubt it considering this stupidity. Just a few samples given.

146+ Animal Group Names: A Complete List From A-Z

https://www.goodgoodgood.co/articles/animal-group-names

Apes: a shrewdness

Asses: a pace

Badgers: a cete, a clan

Bats: a colony

Bears: a sloth, a sleuth

Boars: a singular

Ah yes – the appendix to The Book of Saint Albans — printed in 1486.

Demonstrating definitively that you don’t need the internet or AI to generate reams and reams of stupid content.

Note that the words in your “stupidity” list are also used for other things. The usage as a name for a group of animals is secondary.

You can fix a regular expression, to check if a word is valid (useable in Scrabble), into about 380 kilobytes, so it depends on what you mean by “unique”. If you use a character encoding other than ascii you can make it about 30% smaller again.

what was the ” a special compression method ” they ended up using? Lately I am interested in the compression of short strings and locality respecting hashing.

I didn’t understand many of the terms as they’re arcane, but one method they mentioned was sorting the dictionary in numerical order and then computing each word by adding on the difference to the previous word. read the article, it’s not long and there is a tldr section.

That’s smart for that time. Better than use a pure syllabic encoding approach to the problem.

If you need command-line compression try

gz. When comined withtaryou can make file archives.There were also some nice text file based tools in early UNIX for things which you could do a cat-grep on to get the information wanted. E.g. there was one called “units” so you could do something like “cat units | grep inch” and it would give you the conversion ratios between an inch and cm/mm

Reminds me of “Metric Converter”, aka metcnvrt, a graphical unit conversion utility written for Windows 2.x.

It’s merely from 1990, though and not nearly as old as the Unix utilities from the 1950s.

Unix:

https://en.wikipedia.org/wiki/Unix

Started development in 1969. There are no “Unix utilities from the 1950s.”

On the topic of old spell checkers, I remember a DOS-based standalone spell checker that was really smart (for its time): it would learn from your pattern of spelling errors (I assume things like: is this someone who just doesn’t know how to spell, or spells phonetically, or just has fingers slipping to neighboring keys) and make suggestions based on these patterns. I remember it getting noticeably better in its suggestions as it went over your texts. Does anyone remember its name?

The spelling checker in Borland Sidekick, or its successor program(s), don’t remember was/were extremely tight and very good. A lot of it was done with indexing.

In my version of the GameBoy Wordle clone (https://github.com/arpruss/gb-fiver), I was pleased to get 12972 five-letter words into 17871 bytes. That’s about 11 bits per word, which beats the spell checker’s 14 bits, but (a) they’re all exactly five-letter words and (b) there is no capitalization, hyphens, etc. My method for storing them was to alphabetize, break up into 26 lists, one for each starting letter, encode the remaining four letters as 20 bits, and then store the differences between successive words. Lookup is pretty slow, but it doesn’t matter for Wordle since you just need to look up one word per turn. And it’s lossless, so you’re not going to be able to cheat the game by finding a hash collision.

The strategy remains the same today in similar cases, use compression and find patterns/rules that avoid a pure brute force approach. ie: No English word (I believe), uses more than 2 ‘t’ letters in sequence. Knowing that you don’t need a list with the entries “cat” and “bat” to know that “cattt” and “batttt” or “batttery” are wrong.