Microsoft’s latest Phi4 LLM has 14 billion parameters that require about 11 GB of storage. Can you run it on a Raspberry Pi? Get serious. However, the Phi4-mini-reasoning model is a cut-down version with “only” 3.8 billion parameters that requires 3.2 GB. That’s more realistic and, in a recent video, [Gary Explains] tells you how to add this LLM to your Raspberry Pi arsenal.

The version [Gary] uses has four-bit quantization and, as you might expect, the performance isn’t going to be stellar. If you aren’t versed in all the LLM lingo, quantization is the way the weights are stored: fewer bits per weight means a smaller but less precise model. In general, the more parameters a model uses, the more things it can figure out.
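To put rough numbers on that, here is a minimal sketch of the storage arithmetic, assuming every weight takes exactly the stated number of bits. The function name is ours, not anything from the video. Real quantized files come out larger than this floor (the 3.2 GB quoted above versus roughly 1.9 GB here), since formats typically keep some tensors at higher precision and store scaling data alongside the weights.

```python
def quantized_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Lower-bound size of the weights alone, in gigabytes (10^9 bytes).

    Assumption: every parameter is stored with exactly `bits_per_weight`
    bits and nothing else is in the file.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Phi4-mini-reasoning has 3.8 billion parameters (figure from the article).
for bits in (16, 8, 4):
    size = quantized_size_gb(3.8e9, bits)
    print(f"3.8B parameters at {bits}-bit: about {size:.1f} GB")
```

Halving the bits per weight halves the floor, which is why four-bit builds are the ones that stand a chance on an 8 GB board.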

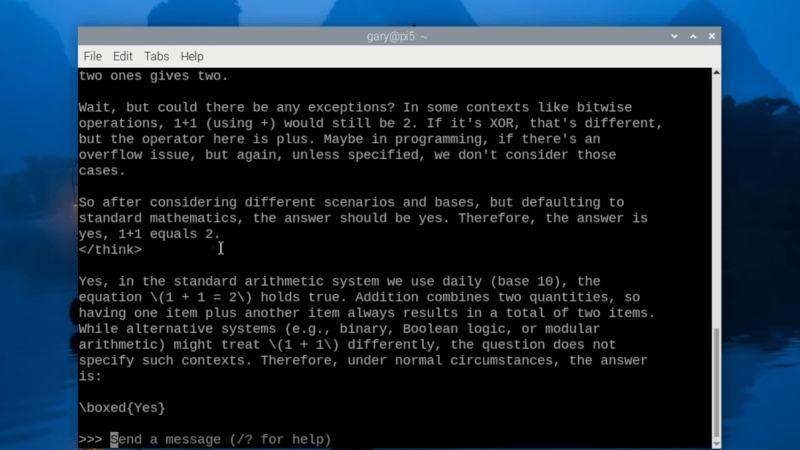

As a benchmark, [Gary] likes to use what he calls “the Alice question.” In other words, he asks for an answer to this question: “Alice has five brothers and she also has three sisters. How many sisters does Alice’s brother have?” While it probably took you a second to think about it, you almost certainly came up with the correct answer. With this model, a Raspberry Pi can answer it, too.

The first run seems fairly speedy, but it is running on a PC with a GPU. He notes that the answer to the same question takes about 10 minutes to pop up on a Raspberry Pi 5 with 4 cores and 8 GB of RAM.

We aren’t sure what you’d do with a very slow LLM, but it does work. Let us know what you’d use it for, if anything, in the comments.

There are some other small models if you don’t like Phi4.

There are more choices available for limited-memory systems. For its size, Qwen3 8B is remarkably competitive with that 14B and with the 70Bs.

Interestingly, Llama 3.3 70B is the only one of those I’ve tried that answered a few variations of the Alice question correctly the first time. GPT could do it, but only after being asked, “What about Alice?” Some failed entirely, even after multiple hints; they just kept insisting on their own correctness.

I see no point in even trying to make LLMs reason correctly. What for? Much better to force them to translate any reasoning problem they face into Prolog or SMT, offload the reasoning to a tool, then translate back the result. Even the smallest LLMs can do it well.

Just tested the smallest Gemma-3, Qwen3 and Phi-4 models with Prolog on the Alice question, and all managed to produce the right result (via a standard trial-and-error sandbox loop). Some took longer than others. Qwen yapped about its own reasoning for a while until it finally got to Prolog, but all got to the right answer.
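For a feel of what "offload the reasoning to a tool" means here, this is a small stand-in written in Python rather than Prolog. It is not the commenter's actual program; the function and the sibling names are made up for illustration. The idea is the same: state the family as facts, then count instead of guessing.

```python
def sisters_of_a_brother(alice_brothers: int, alice_sisters: int) -> int:
    """Count the sisters of any one of Alice's brothers.

    The family is modelled explicitly as sets of (hypothetical) names,
    so the answer falls out of the facts rather than out of intuition.
    """
    if alice_brothers < 1:
        raise ValueError("Alice needs at least one brother for the question to make sense")

    # Every girl in the family: Alice herself plus her sisters.
    girls = {"alice"} | {f"sister_{i}" for i in range(alice_sisters)}
    boys = {f"brother_{i}" for i in range(alice_brothers)}

    # Pick any brother; his sisters are all the girls except himself,
    # and since he is not a girl, that is every girl, Alice included.
    brother = sorted(boys)[0]
    return len(girls - {brother})


print(sisters_of_a_brother(alice_brothers=5, alice_sisters=3))
```

The step that trips models up is the first set: Alice belongs in `girls`, so her brothers have one more sister than she does.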

There is also a 0.6B model that’s really quite surprising!

Can I run any model on my computer locally?

for example https://huggingface.co/models

Yeah, check out LM Studio.

You can use any model that’ll fit into your RAM or VRAM, but if you want anything approaching “real time”, then it really needs to be VRAM. A 4060Ti 16GB is probably the best current gen mid-low end option.

You might be able to get Intel or AMD cards working, but it may be a nightmare. CUDA is very polished: anything GTX 900 series or later will run “straight out of the box”. I’ve even run Stable Diffusion on a 650GT without issue.

Arm-based Macs are also very popular for running LLMs since they have a unified pool of RAM with extremely high speed and low latency, while (for the higher RAM specs) costing around 10x less than a GPU with the same amount.

I find LM Studio’s Vulkan runtime kinda sucks. Running koboldcpp with no special tricks (literally just ./filefromgithubrepo and selecting my backend, model, and context size) on an AMD 780M iGPU mini PC with a Ryzen 7940HS and 2 sticks of no-name DDR5 RAM (64 GB total), kobold correctly realizes the iGPU can take half the total RAM for offload, whereas LM Studio either refuses to offload at all or crashes when it exceeds the 2 GB default allocation. It also runs slow as molasses, while koboldcpp zips along with input processing at 132 tokens a second and generation at just over 5 a second. It’s super usable in real time on koboldcpp; on LM Studio it’s an ask-a-question-and-come-back-in-15-minutes kinda experience.

On CUDA, though, LM Studio is easily the most polished experience you can have, no argument there.
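To translate throughput figures like those into waiting time, a rough sketch: total time is the prompt length divided by the input-processing rate plus the reply length divided by the generation rate. The 132 and 5 tokens-per-second rates are the ones quoted above; the token counts are invented for illustration, and the function name is ours.

```python
def response_seconds(prompt_tokens: int, output_tokens: int,
                     prompt_tps: float, gen_tps: float) -> float:
    """Estimate wall-clock seconds for one reply.

    Simplifying assumption: both rates stay constant for the whole run,
    and model load time is ignored.
    """
    return prompt_tokens / prompt_tps + output_tokens / gen_tps


# Hypothetical workload: a 264-token prompt and a 300-token reply.
seconds = response_seconds(264, 300, prompt_tps=132, gen_tps=5)
print(f"about {seconds:.0f} seconds")
```

Generation dominates: at these rates the prompt costs a couple of seconds, while the reply takes about a minute.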

https://hackaday.com/2025/01/08/running-ai-locally-without-spending-all-day-on-setup/ (lots of other choices in the comments of that post, too).

To my surprise, both ChatGPT and Gemini get the Alice question wrong and answer 3. Perplexity says 4.

This shouldn’t be surprising. AI models are physically incapable of performing mathematical operations; they only produce a sentence by selecting the next most-likely word.

If it produces the right answer, it’s chance, and you could fairly easily “persuade” it to output the right answer – or any arbitrary answer for that matter.

Hence the wolfram plugin for ChatGPT.

ChatGPT does get it wrong on my end too. Grok answers it correctly.

I’d use it to accompany me watching University Challenge, no matter how badly I do, I’d probably win.

Where are we on the bell curve regarding this AI/LLM thing?

First, search engines gave correct answers to wrongly understood questions; now we have a system giving incorrect answers to perfectly understood questions.

Here is something interesting:

I just asked Copilot the same question and it responded three. When I pointed out that the answer is four, because Alice is also his sister, it agreed that I was correct. When I asked ChatGPT, it took slightly longer to analyze the question, but it came back with the correct answer.

There is an ARM port of MS BitNet, and some of those models would fit entirely in RAM on a Pi.

Fascinating. The electricity required, 10 minutes to generate a response, is a good illustration of the resources LLMs require. I’d love to see the math, but I suspect Google and OpenAI are hemorrhaging cash to keep this train going.
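For the Pi side of that math, a back-of-the-envelope sketch: energy is just power times time. The 10-minute figure comes from the article; the 10 W draw is an assumed round number for a Pi 5 under load, not a measurement from the video, so swap in your own reading. This says nothing about datacenter costs.

```python
def energy_wh(power_watts: float, minutes: float) -> float:
    """Energy in watt-hours for a constant power draw over some minutes."""
    return power_watts * minutes / 60


ASSUMED_PI5_WATTS = 10.0  # assumption: rough load figure, not measured
ANSWER_MINUTES = 10.0     # from the article

wh = energy_wh(ASSUMED_PI5_WATTS, ANSWER_MINUTES)
print(f"about {wh:.1f} Wh per answer")
```

Under that assumption, one answer costs a little under 2 Wh.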

This has been my thought about AI from the beginning: is it worth the electric power (largely generated with air-polluting fossil fuels) to get a partially correct answer a little faster than a reasonably intelligent human can do running on glucose, a very clean fuel?

That’s why people are working on making things more efficient.

https://venturebeat.com/ai/alibabas-zerosearch-lets-ai-learn-to-google-itself-slashing-training-costs-by-88-percent/

I’ve been running 12B-14B LLMs on a 16 GB Pi 5 with a 1 TB NVMe drive. I set virtual memory to 16 GB for an effective 32 GB of RAM. The latest Ollama even does vision!

What if Alice is Alice Cooper?

In that case, he doesn’t have 3 sisters but only one, and no brothers. Took me 2 minutes to check Wikipedia, faster than the Pi’s working time.

‘she also had’

Although I only have a 5800XT, I do have an RTX Super.

Will Phi4 be able to compete with Gemini 2.5 Pro? I have that as my current LLM (paid version, so I use it on my Pixel 7a as well).

I’ve seen lots of videos saying Gemini 2.5 pro is heads above everything.

Even going head to head with ChatGPT 4, Gemini 2.5 pro just crushes it on everything.

Phi-4 is smaller, thus worse than Gemini 2.5 Pro. Compare on ArtificialAnalysis.ai

It’s impressive that a Raspberry Pi 5 can even run a 3.8B parameter LLM like Phi-4 mini, even if it takes 10 minutes to answer a simple question. While not practical for real-time use, it’s a great demo of how far edge computing has come—and a fun way to experiment with LLMs on a budget.

RPi 5 is rubbish compared to an average smartphone, which can run a small LLM locally in seconds, not minutes. You can try it in web browser using Candle Phi Wasm, ONNX Runtime Web or MediaPipe.

Just trying with Axelera AI cards on the Pi.

A timely post, considering I just today received my order of a couple of OrangePi RV2. RISC-V seems to be the future of vector instructions. I’m not sure what 2 TOPS gets me with little LLMs, but who doesn’t love having some new tech to poke and prod? They even have multiple LLM models available for it.

Update: Qwen2-int4-0.5b (477 MB) thinks for ~4 seconds, then outputs at 13 tokens per second. Really fast, not too smart though.

I did this last weekend, but with TinyLlama on an RPi 3B with 2 GB of swap, and it was answering questions a lot faster than 10 minutes. Amazing what an old hunk of junk can still achieve.