Imagine you have a projector pointing at a scene, which you’re photographing with a camera aimed from a different point. Using the techniques of modelling light transport, [okooptics] has shown us how you can capture an image from the projector’s point of view instead of the camera’s, and even synthetically light the scene however you might like.

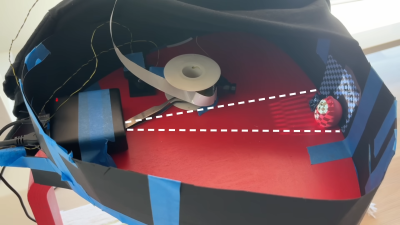

The concept involves capturing data about how light is transported from the projector to the scene. This could be achieved by lighting one pixel of the projector at a time while capturing an image with the camera. However, even for a low-resolution projector of, say, 256×256 pixels, this would require capturing 65536 individual images and take a very long time. Instead, [okooptics] explains how the same task can be achieved by projecting binary coded images, which allow the same data to be captured using just seventeen exposures.
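Where might seventeen come from? Here is one plausible breakdown, offered as a sketch rather than [okooptics]’ actual pattern set: 256 = 2^8, so 8 bit-plane images encode the column index, 8 more encode the row index, and we assume the seventeenth is an all-on reference frame. This also assumes each camera pixel mostly sees a single projector pixel; the function names below are hypothetical.

```python
import numpy as np

WIDTH = HEIGHT = 256   # projector resolution from the example above
BITS = 8               # log2(256) bits per axis

def make_patterns(width=WIDTH, height=HEIGHT, bits=BITS):
    """Assumed pattern set: one all-on reference frame, then one bit-plane
    per bit of the column index and per bit of the row index (MSB first)."""
    cols = np.arange(width)
    rows = np.arange(height)
    patterns = [np.ones((height, width), dtype=np.uint8)]  # reference frame
    for b in range(bits - 1, -1, -1):                       # column bits
        stripe = (cols >> b) & 1
        patterns.append(np.tile(stripe, (height, 1)).astype(np.uint8))
    for b in range(bits - 1, -1, -1):                       # row bits
        stripe = (rows >> b) & 1
        patterns.append(np.tile(stripe[:, None], (1, width)).astype(np.uint8))
    return patterns

def decode(bit_values, bits=BITS):
    """Rebuild the (x, y) projector pixel from the on/off sequence seen
    at one camera pixel (column bits first, then row bits)."""
    x = y = 0
    for v in bit_values[:bits]:
        x = (x << 1) | int(v)
    for v in bit_values[bits:2 * bits]:
        y = (y << 1) | int(v)
    return x, y

patterns = make_patterns()
print(len(patterns))     # 1 reference + 8 column + 8 row bit-planes = 17

# Simulate a camera pixel that happens to see projector pixel x=200, y=37
observed = [p[37, 200] for p in patterns[1:]]
print(decode(observed))  # (200, 37)
```

The point is the logarithmic scaling: each extra exposure doubles the number of pixels you can tell apart, versus one exposure per pixel for the brute-force approach.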

Once armed with this light transport data, it’s possible to do wild tricks. You can synthetically light the scene, as if the projector were displaying any novel lighting pattern of your choice. You can also construct a simulated photo taken from the projector’s perspective, and even do some rudimentary depth reconstruction. [okooptics] explains this tricky subject well, using visual demonstrations to indicate how it all works.
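The Dual Photography paper treats light transport as a matrix T, where entry T[c, p] says how much light from projector pixel p reaches camera pixel c. The toy sketch below assumes that model; the sizes and the random T are placeholders, and whether [okooptics] stores the data exactly this way isn’t stated. Relighting is a matrix-vector product, and the projector’s-eye view uses the transpose, relying on Helmholtz reciprocity.

```python
import numpy as np

rng = np.random.default_rng(0)

P = 4 * 4   # projector pixels (toy 4x4 projector)
C = 6 * 6   # camera pixels (toy 6x6 camera)

# Placeholder transport matrix; in practice this is what the capture measures.
T = rng.random((C, P))

def relight(T, pattern):
    """Camera image the scene would produce under any projector pattern."""
    return T @ pattern.ravel()

def dual_photo(T, illumination):
    """Image from the projector's viewpoint, as if the camera were the light
    source emitting `illumination` (reciprocity: use the transpose of T)."""
    return T.T @ illumination.ravel()

checker = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)  # a novel pattern
camera_img = relight(T, checker).reshape(6, 6)

flood = np.ones(C)                        # every camera "pixel" lit
projector_view = dual_photo(T, flood).reshape(4, 4)
print(camera_img.shape, projector_view.shape)  # (6, 6) (4, 4)
```

Because light adds linearly, any lighting you can express as a projector image can be simulated after the fact, without touching the real projector again.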

The work was inspired by the “Dual Photography” paper presented at SIGGRAPH 2005, a conference that continues to produce outrageously interesting work to this day.

Please also add the tag:

https://hackaday.com/tag/structured-light/

I mean, this is literally how an Xbox Kinect (and, I think, most face ID sensors) famously works, just in infrared.

Which Kinect? IIRC, the original Kinect uses a fixed constellation of infrared laser dots, and you probably couldn’t get an image from the PoV of this “projector” the way the OP is getting his. I think the new Kinect uses ToF.

You’re right that they’re similar in objective, but, at least to me, the way they do it is quite different.

I can see why there might be some confusion as they are both types of “structured light”. The main distinction is how they acquire the depth data.

The technique described in the article/video is “time-multiplexed structured light”, which uses a sequence of patterns rather than a single one to infer depth. It projects a series of simple patterns, like binary or Gray codes, over time. A camera captures an image for each pattern, and the depth is calculated by analyzing how each point on the object responds to the entire sequence. This method is highly accurate but requires a static scene.
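A quick sketch of why Gray codes are popular for this, using the standard binary-reflected Gray code (function names are hypothetical, and 256 columns is just an illustrative width): neighbouring columns differ in exactly one pattern, so a bit misread at a stripe edge tends to land one column away instead of far off.

```python
BITS = 8  # 256 projector columns (illustrative)

def to_gray(n: int) -> int:
    """Binary-reflected Gray code: adjacent integers differ in one bit."""
    return n ^ (n >> 1)

def from_gray(g: int) -> int:
    """Invert the Gray code by XOR-folding the higher bits down."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n

def column_patterns(width=256, bits=BITS):
    """One 1-D stripe pattern per bit, MSB first: patterns[k][x] is the
    projector value (0 or 1) at column x during exposure k."""
    return [[(to_gray(x) >> b) & 1 for x in range(width)]
            for b in range(bits - 1, -1, -1)]

def decode_column(observed_bits):
    """Assemble the on/off sequence seen at one camera pixel into a column."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return from_gray(g)

patterns = column_patterns()
x = 173
observed = [p[x] for p in patterns]
print(decode_column(observed))                     # 173

# 127 -> 128: plain binary flips all 8 bits, Gray code flips only one.
print(bin(127 ^ 128).count("1"))                   # 8
print(bin(to_gray(127) ^ to_gray(128)).count("1")) # 1
```

Once each camera pixel knows which projector column lit it, depth follows from triangulation between the two viewpoints.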

In contrast, “single-shot structured light” captures the entire depth map from a single image. The Kinect 1*, for example, projected a fixed infrared speckle pattern in which every local patch of dots was unique. Because each patch was identifiable, the system could calculate depth from a single snapshot by measuring how the pattern was distorted. This makes it ideal for capturing moving objects, but it can be less precise than the time-multiplexed approach (as points between the speckles are just interpolated).
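To make the single-shot idea concrete, here is a generic 1-D sketch, not the Kinect’s actual pipeline: it assumes a rectified projector-camera pair, a pseudo-random reference row, and made-up focal length and baseline values. A small window of the captured pattern is matched against the reference to find its shift (disparity), and depth follows from the usual triangulation Z = f·b/d.

```python
import numpy as np

def match_disparity(reference_row, captured_row, x, half=3, max_disp=20):
    """Shift d that best aligns the window around x in the captured row with
    the reference pattern (minimum sum of squared differences)."""
    window = captured_row[x - half:x + half + 1]
    best_d, best_cost = 0, np.inf
    for d in range(max_disp + 1):
        ref = reference_row[x - half + d:x + half + 1 + d]
        if len(ref) < len(window):
            break
        cost = np.sum((window - ref) ** 2)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(d_pixels, focal_px, baseline_m):
    """Triangulation for a rectified pair: Z = f * b / d."""
    return focal_px * baseline_m / d_pixels

rng = np.random.default_rng(1)
reference = rng.random(200)           # stand-in for a speckle pattern row
captured = np.roll(reference, -7)     # scene surface shifts the pattern by 7 px

d = match_disparity(reference, captured, x=100)
print(d)                              # 7
z = depth_from_disparity(d, focal_px=580.0, baseline_m=0.075)  # illustrative values
```

Matching only works where the window contains enough texture, which is one reason depth between speckles ends up interpolated.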

Essentially, one technique uses time to gather information, while the other uses a more complex, information-rich pattern in a single moment.

Also, because faces move, most face sensors use either “single-shot structured light” or “Time-of-Flight” (ToF) to capture depth**.

*- The Kinect 2 used ToF to measure depths.

**- All iPhone face sensors use “single-shot structured light”, while many Android phones’ face sensors use ToF.
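For comparison, ToF skips pattern matching entirely and times the light itself. The thread doesn’t say how any specific sensor does it, so these are just the textbook relations for pulsed and continuous-wave ToF; the 10 ns and 20 MHz figures are illustrative, and real sensors add calibration and phase unwrapping on top.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def pulsed_tof_depth(round_trip_s):
    """Pulsed ToF: light travels out and back, so halve the path length."""
    return C * round_trip_s / 2

def cw_tof_depth(phase_rad, mod_freq_hz):
    """Continuous-wave ToF: depth from the phase shift of modulated light,
    d = c * phi / (4 * pi * f). Unambiguous only up to c / (2 * f)."""
    return C * phase_rad / (4 * math.pi * mod_freq_hz)

print(pulsed_tof_depth(10e-9))      # about 1.5 m for a 10 ns round trip
print(C / (2 * 20e6))               # ambiguity range at 20 MHz, about 7.5 m
print(cw_tof_depth(math.pi, 20e6))  # half that range, about 3.75 m
```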

Note that this method hates specular reflections. Polarizing filters can improve its resistance to them, but the method is still quite noisy and relatively slow.

Aaah! I’ve been low-key searching for the “Dual Photography” paper for years, ever since I first saw it. I’d forgotten the terms to search for. Such cool research!