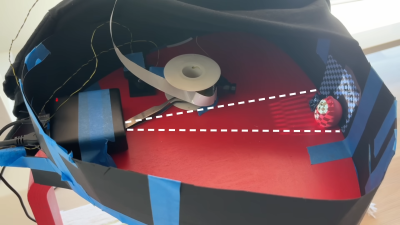

How do you go about making a mirror with 128 segments, each of which can be independently angled? That was the question that a certain bloke over at [Time Sink Studio] found himself pondering, ultimately settling on a whole batch of mini-actuators bought through AliExpress. These stepper-based actuators appear to be akin to those used in certain Oppo smartphones with a pop-up camera, and cost less than half a dollar each for a very compact and quite fast actuator.

The basic design is very much akin to a macro version of a micromirror device, as used in e.g. DLP projectors, with each segment relying on a kinematic mirror mount to enable precise alignment. With the small actuators travelling up to 8 mm each, the mirrors can cover 73 mm at a distance of 4 meters from a wall.
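To get a feel for the geometry involved, here is a minimal sketch rather than the project's actual code. It assumes the untilted mirror sends its beam perpendicular to the wall, and that each actuator pushes on the mirror at a fixed distance from the pivot. The names `mirror_tilt_rad`, `actuator_travel_m` and the `lever_arm_m` parameter are hypothetical. The one physical fact it leans on is that tilting a mirror by some angle swings the reflected beam by twice that angle.

```python
import math


def mirror_tilt_rad(spot_offset_m, wall_distance_m):
    """Mirror tilt needed to move the reflected spot sideways on the wall.

    Assumes the untilted beam hits the wall head-on. The reflected beam
    turns by twice the mirror tilt, hence the factor of 0.5.
    """
    return 0.5 * math.atan2(spot_offset_m, wall_distance_m)


def actuator_travel_m(tilt_rad, lever_arm_m):
    """Linear travel for a given tilt, with the actuator pushing at
    lever_arm_m from the pivot (a hypothetical dimension)."""
    return lever_arm_m * math.tan(tilt_rad)


if __name__ == "__main__":
    # Illustrative numbers only; the lever arm is not taken from the project.
    tilt = mirror_tilt_rad(spot_offset_m=0.05, wall_distance_m=4.0)
    travel = actuator_travel_m(tilt, lever_arm_m=0.02)
    print(f"tilt: {math.degrees(tilt):.3f} deg, travel: {travel * 1000:.3f} mm")
```

At a distance of several meters the tilt angles involved are tiny, which is why a few millimeters of actuator travel can be enough.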

With the required angle of the mirror being effectively just an application of the Pythagorean theorem, the biggest challenge was probably calibrating these linear motors. Since they’re open-loop devices, they are zeroed much like the steppers on 3D printers, by finding the end limit and counting steps from that known point. This doesn’t rule out drift, but for projecting light onto walls it’s clearly more than good enough.
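The zero-then-count approach can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's firmware: `SimulatedActuator` is a hypothetical stand-in for the real hardware, the step counts and `steps_per_mm` value are made up, and homing is assumed to work by driving into a mechanical end stop for more than the full travel.

```python
class SimulatedActuator:
    """Hypothetical stand-in for an open-loop stepper actuator."""

    def __init__(self, travel_steps, true_position):
        self.travel_steps = travel_steps
        self._true = true_position  # real position, unknown to the controller

    def step(self, direction):
        # Steps driven against a mechanical end stop are simply lost.
        self._true = max(0, min(self.travel_steps, self._true + direction))


class OpenLoopAxis:
    """Tracks position purely by counting steps from a homed zero."""

    def __init__(self, actuator, steps_per_mm):
        self.actuator = actuator
        self.steps_per_mm = steps_per_mm
        self.position_steps = None  # unknown until homed

    def home(self):
        # Overdrive toward the lower stop so that any starting position
        # ends up at zero, then declare that point the origin.
        for _ in range(self.actuator.travel_steps + 10):
            self.actuator.step(-1)
        self.position_steps = 0

    def move_to_mm(self, target_mm):
        if self.position_steps is None:
            raise RuntimeError("axis must be homed before moving")
        target = round(target_mm * self.steps_per_mm)
        target = max(0, min(self.actuator.travel_steps, target))
        direction = 1 if target > self.position_steps else -1
        while self.position_steps != target:
            self.actuator.step(direction)
            self.position_steps += direction
        return self.position_steps


if __name__ == "__main__":
    axis = OpenLoopAxis(SimulatedActuator(800, true_position=317), steps_per_mm=100)
    axis.home()
    print(axis.move_to_mm(2.5))
```

Because nothing ever measures the real position after homing, any missed step silently shifts the counted position away from the true one, which is the drift mentioned above. Re-homing now and then is the usual remedy.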
