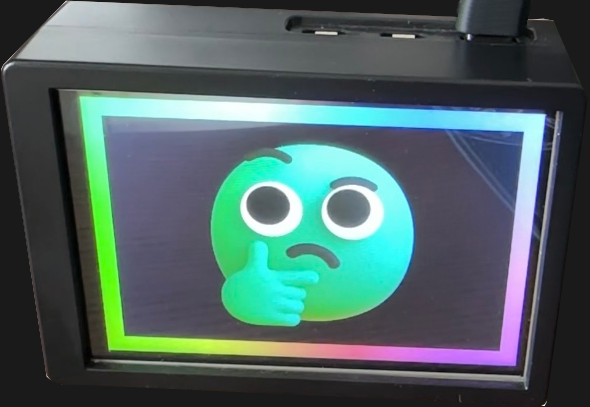

[Simone]’s AI assistant, dubbed Max Headbox, is a wakeword-triggered local AI agent capable of following instructions and doing simple tasks. It’s an experiment in many ways, but it is also a great demonstration of what is possible with the kinds of open tools and hardware available to a modern hobbyist, and a reminder of just how far some of these software tools have come in only a few short years.

Max Headbox is not just a local large language model (LLM) running on Pi hardware; the model is able to make tool calls in a loop, chaining them together to complete tasks. This means the system can break down a spoken instruction (for example, “find the weather report for today and email it to me”) into a series of steps to complete, utilizing software tools as needed throughout the process until the task is finished.
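To make the idea of "tool calls in a loop" concrete, here is a minimal sketch in Python. It is not taken from the Max Headbox code: the tool names (`get_weather`, `send_email`), the `fake_model` stand-in for the LLM, and the message format are all assumptions made up for illustration. A real agent would replace `fake_model` with a call to a locally hosted model.

```python
# Hypothetical tools; real ones would call a weather service or a mail server.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def send_email(body: str) -> str:
    return f"sent: {body}"

TOOLS = {"get_weather": get_weather, "send_email": send_email}

def fake_model(history):
    """Stand-in for an LLM: picks the next step from the conversation so far."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if len(tool_results) == 0:
        # Nothing done yet: ask for the weather first.
        return {"tool": "get_weather", "args": {"city": "Rome"}}
    if len(tool_results) == 1:
        # Weather is known: email it.
        return {"tool": "send_email", "args": {"body": tool_results[0]["content"]}}
    # Both steps are done: give a final answer and stop.
    return {"answer": "Done: " + tool_results[-1]["content"]}

def run_agent(instruction, model=fake_model, max_steps=5):
    """Call the model repeatedly, running each requested tool, until it answers."""
    history = [{"role": "user", "content": instruction}]
    for _ in range(max_steps):
        step = model(history)
        if "answer" in step:
            return step["answer"]
        result = TOOLS[step["tool"]](**step["args"])
        # Feed the tool output back so the next model call can build on it.
        history.append({"role": "tool", "name": step["tool"], "content": result})
    return "Gave up after too many steps"

if __name__ == "__main__":
    print(run_agent("find the weather report for today and email it to me"))
```

The `max_steps` cap is a design choice in this sketch: it keeps a confused model from looping forever, which matters on slow hardware.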

Watch Max in action in the video (also embedded just below). Max is a little slow, but not unusably so. As far as proofs of concept go, it demonstrates that a foundation for such systems is perfectly feasible on budget hardware running free, locally installed software. Check out the GitHub repository.

The name is, of course, a play on Max Headroom, the purportedly computer-generated TV personality of the ’80s who was actually an actor in a mask. The person behind what was probably the most famous broadcast TV hack of all time also wore a Max Headroom mask.

Thanks to [JasonK] for the tip!

I love it. The speed would make it unusable for me, but it’s still a great demo.

I have made an Alexa-like, totally local persona on my PC. It runs a speech-to-text model, a 7B LLM, and a text-to-speech model to say what it thinks.

All I can say is it’s extremely EERIE to talk to an LLM. It’s weird when it refers to itself as “I”. Let’s see how it behaves with the system prompt as “you’re talking to your lovely husband” and so…

Super unnerving.

Each model has an emergent personality which requires more tokens to prompt than you get in the response. This emergent voice is a statistical soup of choices and a smattering of human intervention via the devs to force behaviors (or block them). It surprised me to discover this; I thought it could contain numerous voices, but it seems to be a metavoice or constituent low-resolution sub-voices. Not so intelligent, but quite strange.

So you are saying it’s just technically ‘saving’ time only if you generalize the people it is talking to and amortize the cost of the prompts?

This is interesting because the number one use case people run into is customer service, exactly where individualized responses yield the best result. I’m shaking my head, possibly facepalming.

As a person who would rather have accurate results or no results, I find AI shoving inaccurate or ‘what it thinks I want to hear’ results at me super annoying.

It’s an automated reflexive nonlinear memory matrix. I would say a current LLM has the intelligence of a newborn: sensory input drives a response that mimics the environment. The LLM is pure memory, as its ability to learn and adapt is purely driven by human intervention (training).

It is fascinating, but is useful mainly for entertainment and not science or intelligent pursuits. A bank using an LLM for direct interaction with customers is begging for trouble. A non LLM system would mitigate all hallucination problems.

This is a common misconception that really worries me. AI does not have the ‘intelligence’ of a newborn. It has no intelligence in the sense of thought, agency or intrinsic motivations.

It can, however, very artfully IMITATE these abilities.

AI simply has no thought of or consideration for what you are providing, its meaning, nuance or motivations. It is using training data to make up something that sounds good.

AI is not thinking!

It has no understanding, and no comprehension of meaning.

It is doing its best impression of a human with an answer.

Your question is reduced to a series of base patterns, and an answer is generated based on patterns that it has been trained on to match with your pattern.

It simply is making up gibberish for an answer that ‘looks right’ based on what it was told in the past.

I wish people would stop mistaking these responses for anything other than a canned phrase from a doll’s pulled string.

That’s pure technobabble. The current LLMs don’t have any intelligence whatsoever. They’re Markov chains.

I’m pretty sure using it for customer service is primarily a choice because human CSRs are “too nice” and “too helpful”, while an LLM can be nicely confined to a very limited toolset.

Professional language and a removal of any moral responsibility for its actions…

The main function of an LLM in customer service is to meet and greet the customer, annoy them for a while with the usual unhelpful suggestions, and then put them onto the waiting list for actual customer service.

Have you documented this somewhere? I’d love to see details.

This comes from my direct use of LLMs, mainly Gemini via Google AI Studio. Outputs never exceed prompts in complexity (no thinking mode enabled, no Google search allowed). Prompts need to be on average 100 tokens to get 80 tokens of coherent and prompt-relevant responses. This is all from my personal experience using the LLM to write culturally relevant and franchise-accurate scripts for major IP (aka AI fanfic).

One good script requires up to a dozen prompt revisions, requiring me to reprobate the LLM every time. For a bank interacting with a customer via LLM, this means one good response needs 5 sample responses to distill into one hopefully non-hallucinated answer.

A reprobate is a person of ill repute. It is not a verb.

Kinda expected from someone trading writing practice and neurological computation for “using LLM to write culturally relevant and franchise accurate scripts for major IP”.

Right after you finish your job as a certified accumulated waste transition management engineer?

Meant to type reprompt and autocorrect switched it to reprobate on me. I chose this fanfic benchmark because supposedly great works of literature and commercial art have been fed to it. If the LLM had intelligent apparatus it should be able to write Shakespeare, but you end up getting low-resolution shakespeak instead. Ask an LLM to do something requiring creative faculty and memory capabilities, and in theory it should output something of quality. And yet it is an endless wasteland of slop you see in the outputs. No one needs to worry about their jobs right now, unless you make C programming learning modules for a living.

That’s because the output suffers from regression towards the mean. It’s likely to produce outputs that are common and likely, or in other words, mediocre. Even if the model was trained solely on Shakespeare, it’s unlikely to output the best of Shakespeare, and never better than Shakespeare, except by pure random luck.

No, what the LLM does for “creativity” is to increase the “temperature” or the random noise in the model, which does not result in higher quality outputs on average, but simply more varied outputs, and more hallucinations and nonsense.
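For anyone who wants to see what the “temperature” knob does mechanically, here is a small Python sketch. It assumes the common softmax-with-temperature formulation and uses made-up scores for three candidate tokens; it is not lifted from any particular model's code. Dividing the scores by a higher temperature flattens the probabilities, so unlikely tokens get picked more often, which gives more varied output rather than better output.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

At low temperature the top-scoring token dominates; at high temperature the three probabilities move closer together.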

My reply was to @shinsukke. I am interested in homebrew, standalone “assistants” that are not tied to a corporate mothership.

Sometimes the way the comment section on HAD places/staggers comments, it’s easy to lose sight of who is replying to whom. I guess I should have mentioned shinsukke by name.

I really need to start focusing my eyes, I read that as “woke-word” triggered. 🤣🤣🤣🤣

Would love to have an LLM running on an always-off-the-internet Raspberry Pi 400 that was trained for Python. Does anyone have recommendations, and is this even possible? Thx in advance.

The smallest LLMs are around 1B parameters or less, and are often good at specific tasks like generating C code. Not sure about performance; there could be very long wait times. Accessing an API via the command line would be more suitable and faster, but would require model API access.

Why bother? Why do people think they need an AI to help them out? Seems silly to me when one has a perfectly good brain in their own head. Never understood the draw. Seems like we are well on the way to Idiocracy… And some folks are excited about that! Go figure.

Running an LLM locally is secure, versus API access which puts your data on someone’s server. I don’t know what LLM will ultimately be relegated to for use cases. Why not try something new and interesting?

Try some books on AI and Excel. Plenty of answers to “why”.

My hobby Python program has taken a huge leap forward with help from Grok and ChatGPT. I’m looking to have some level of that capability, though, running offline for security reasons. That’s “why”.

Now we all know what a Pakled Tricorder looks like.

Briefly

Interrupted

Large

Language

Yokel

(B.I.L.L.Y.)

“the square of do hypotenoose is equal to the sum of the other two….uhhh..George Washington.”

Poor BILLY—he was the first to use DNA memory, but lost half his memory due to the donor’s use of thalidomide.

Can anyone tell what hardware is being used? What pi 5 is being used? Ram? SSD storage? AI accelerator?

The blog post and the GitHub link have all the info: no SSD or AI accelerator, just a bare-bones Pi (16GB/8GB), a fan, and a screen attached to it.