When we talk about HDTV, we’re typically talking about any one of a number of standards from when television made the paradigm switch from analog to digital transmission. At the dawn of the new millennium, high-definition TV was a step-change for the medium, perhaps the biggest leap forward since color transmissions began in the middle of the 20th century.

However, a higher-resolution television format did indeed exist well before the TV world went digital. Over in Japan, television engineers had developed an analog HD format that promised quality far beyond regular old NTSC and PAL transmissions. All this, decades before flat screens and digital TV were ever seen in consumer households!

Resolution

Japan’s efforts to develop a better standard of analog television were pursued by the Science and Technical Research Laboratories of NHK, the national public broadcaster. Starting in the 1970s, research and development focused on how to deliver a higher-quality television signal, as well as how to best capture, store, and display it.

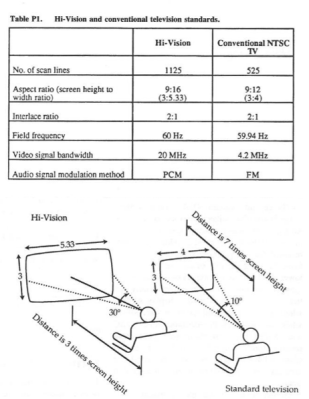

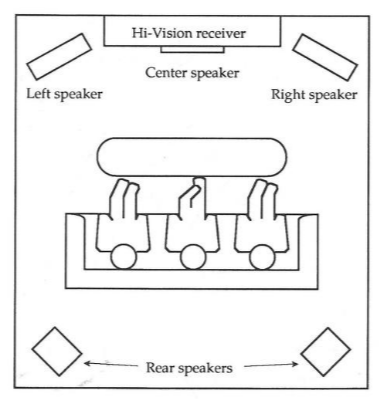

This work led to the development of a standard known as Hi-Vision, which aimed to greatly improve the resolution and quality of broadcast television. At 1125 lines, it offered more than double the vertical resolution of the prevailing 60 Hz NTSC standard in Japan. The precise number was chosen to meet the minimum image-quality requirements of a viewer with good vision while keeping a convenient integer ratio to NTSC’s 525 lines (15:7) and PAL’s 625 lines (9:5). Hi-Vision also shifted to a 16:9 aspect ratio from the more traditional 4:3 used in conventional analog television. The new standard brought improved audio, too, with four independent channels (left, center, right, and rear) in what was termed “3-1 mode.” This was not unlike the layout used by Dolby Surround systems of the mid-1980s, though the NHK spec suggests using multiple speakers behind the viewers to deliver the single rear sound channel.
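The line-count ratios quoted above are easy to check with exact arithmetic. This is a minimal Python sketch using the standard `fractions` module; the helper name `line_ratio` is our own illustration, not anything from an NHK specification.

```python
from fractions import Fraction

HI_VISION_LINES = 1125
SD_LINES = {"NTSC": 525, "PAL": 625}

def line_ratio(hd_lines: int, sd_lines: int) -> Fraction:
    """Return the HD:SD total-line ratio reduced to lowest terms."""
    return Fraction(hd_lines, sd_lines)

for name, lines in SD_LINES.items():
    ratio = line_ratio(HI_VISION_LINES, lines)
    # Prints "1125:525 (NTSC) = 15:7 (about 2.14x)" and "1125:625 (PAL) = 9:5 (about 1.80x)"
    print(f"{HI_VISION_LINES}:{lines} ({name}) = "
          f"{ratio.numerator}:{ratio.denominator} (about {float(ratio):.2f}x)")
```

The 15:7 figure is also where the “just over double” description of Hi-Vision relative to NTSC comes from.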

Hi-Vision referred most specifically to the video standard itself; the broadcast standard was called MUSE, short for Multiple sub-Nyquist Sampling Encoding. This was a method for dealing with the high bandwidth requirements of higher-quality television. Where an NTSC TV broadcast might need only 4.2 MHz of bandwidth, the Hi-Vision standard needed 20-25 MHz. That was impractical to fit in alongside the terrestrial broadcasts of the time, and even for satellite delivery it was considered too much. MUSE therefore offered a way to compress the high-resolution signal down to a more manageable 8.1 MHz, using a combination of dot interlacing and advanced multiplexing techniques. As a result, four frames were ultimately needed to make up a full image. Special motion-sensitive encoding techniques were also used to limit the blurring that the dot-interlaced method caused during camera pans. Meanwhile, the four-channel digital audio stream was squeezed into the vertical blanking period.
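To make the idea of needing several frames for one full image more concrete, here is a deliberately simplified Python sketch. It assumes a hypothetical 2x2 sampling lattice in which each successive field carries a different quarter of the pixels; the real MUSE sampling pattern, filtering, and motion handling are considerably more involved, and the names `PHASES`, `subsample_field`, and `reconstruct_static` are illustrative only.

```python
# Hypothetical sampling phases (row offset, column offset), one per field.
# This is a toy stand-in for MUSE's offset-sampling lattice, not the real one.
PHASES = [(0, 0), (1, 1), (0, 1), (1, 0)]

def subsample_field(frame, field_index):
    """Keep only the pixels on this field's sampling phase (1/4 of the total)."""
    dy, dx = PHASES[field_index % len(PHASES)]
    return {
        (y, x): frame[y][x]
        for y in range(dy, len(frame), 2)
        for x in range(dx, len(frame[0]), 2)
    }

def reconstruct_static(fields, height, width):
    """Merge four consecutive subsampled fields back into one full frame.

    This only reproduces the original if nothing moved during those four
    fields; with motion, samples taken at different instants get mixed.
    """
    frame = [[None] * width for _ in range(height)]
    for samples in fields:
        for (y, x), value in samples.items():
            frame[y][x] = value
    return frame

# Bandwidth figures quoted in the article, in MHz.
HI_VISION_MHZ = (20.0, 25.0)
MUSE_MHZ = 8.1
for raw in HI_VISION_MHZ:
    print(f"{raw} MHz -> {MUSE_MHZ} MHz: about {raw / MUSE_MHZ:.1f}x reduction")
```

For a static test pattern, feeding `subsample_field(frame, i)` for `i` in `range(4)` into `reconstruct_static` returns the original frame. Changing the frame between fields instead produces mixed-time artifacts, the kind of problem the motion-sensitive processing described above was meant to contain. The toy discards three quarters of the samples per field, while the article’s figures correspond to roughly a 2.5x to 3x bandwidth reduction, so treat it as an illustration of the principle only.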

MUSE broadcasts began on an experimental basis in 1989. NHK eventually began using the standard regularly on its BShi satellite service, with a handful of other Japanese broadcasters following suit. Broadcasts ran until 2007, when NHK finally shut down the service, by which time digital TV was well established.

An NHK station sign-on animation used from 1991 to 1994.

The technology wasn’t limited to higher-quality broadcasts, either. Recorded media capable of delivering higher-resolution content also reached the Japanese market. W-VHS (Wide-VHS) arrived in 1993 as a video cassette standard capable of recording Hi-Vision/MUSE broadcast material. The W moniker was initially chosen for its shorthand meaning of “double” in Japanese, since Hi-Vision’s 1125 lines were just over double the 525 lines of an NTSC broadcast.

Later, in 1994, Panasonic released its Hi-Vision LaserDisc player, with Pioneer and Sony eventually offering similar products. These players likewise offered 1125 lines (1035 visible) of resolution in a native 16:9 aspect ratio. The discs were read using a shorter-wavelength laser than standard LaserDiscs, which also offered improved read performance and reliability.

Sample video from a MUSE Hi-Vision LaserDisc. Note the extreme level of detail visible in the makeup palettes and skin, and the motion trails in some of the lens flares.

The hope was that Hi-Vision would become an international standard for HDTV, supplanting the ugly mix of NTSC, PAL, and SECAM formats around the world. Unfortunately, that never came to pass. While Hi-Vision and MUSE did offer a better-quality image, there simply wasn’t much content actually broadcast in the standard. Only a few channels were available in Japan, giving households little incentive to upgrade their existing sets, and the amount of recorded media available was similarly limited. The bandwidth requirements were also too great; even with MUSE squishing the signal down, the 8.1 MHz required was still considered too much for practical use in the US market. Meanwhile, being based on a 60 Hz standard meant the European industry was not interested.

Further worsening the situation was that by 1996, DVD technology had been released, offering better quality and all the associated benefits of a digital medium. Digital television technology was not far behind, and buildouts began in countries around the world by the late 1990s. These transmissions offered higher quality and the ability to deliver more channels with the same bandwidth, and would ultimately take over.

Only a handful of Hi-Vision displays still exist in the world.

Hi-Vision and MUSE offered a huge step up in image quality, but their technical limitations and broadcast difficulties meant that they would never compete with the new digital technologies that were coming down the line. There was simply not enough time for the technology to find a foothold in the market before something better came along. Still, it’s quite something to look back on the content and hardware from the late 1980s and early 1990s that was able, in many ways, to measure up in quality to the digital flat screen TVs that wouldn’t arrive for another 15 years or so. Quite a technical feat indeed, even if it didn’t win the day!

Pedantic side note: the name of the NHK channel mentioned in the article is indeed stylized “BShi”. If you guessed that it’s supposed to be pronounced like “BS high” (ビーエスハイ), since it broadcast in Hi-Vision, you catch on more quickly than I do.

I spent an embarrassingly long time trying to figure out why satellite channel 3 would have been called “B-Shi”, which sounds like “B-four” (and especially the pronunciation of “four” that’s avoided because it sounds like the word for “death”). Apparently I’ve spent too much time looking at programming variables lately and just expect everything capitalized to be in camel or Pascal case.

Hah, I saw “bshi” and thought “bishi” as in 美少女/美少年 (bishōjo/bishōnen, “pretty girl/pretty boy”)…

Too much sailor moon, clearly.

At five times the bandwidth, it’s no wonder it wasn’t widely adopted.

Well, that also depends on the country or region. Sure, it would affect transmission power requirements, but in many countries there were few available channels and most of the radio spectrum was unused. If I remember correctly, PAL used 6-8 MHz of total bandwidth, with 5-6 MHz for the video, so a slightly tweaked system would basically cut the number of available channels by a factor of three. For quite a lot of countries that wouldn’t be a huge issue, although simultaneously transmitting the basic signal alongside HD would increase usage to four channel bandwidths. The most likely candidates to actually adopt and invest in something new and expensive like this would be government broadcasters in countries with few channels. And it would have to work alongside the old system for at least a decade, if not more.

I’m too young to remember: how much of the TV broadcast spectrum actually got used? I’ve seen old TVs with dials showing 60 or 70 channels, but I’ve never been able to get more than a dozen channels with an antenna.

In the UK pretty much all the allocated spectrum got used, but it was split up into ‘channel groups’.

Each region had its own group (albeit with only four or five analogue terrestrial channels), so you’d buy a UHF antenna tuned for your local group or ‘colour’ (antennas usually had a colour-coded plastic cap or bung denoting the channel group).

The use of channel groups prevented neighbouring regions from suffering interference from other regions’ transmitters where coverage overlapped, or when ‘weird’ atmospheric propagation let signals travel further than predicted. (Tropospheric ducting, for example, occasionally allowed TV from mainland Europe to be watched, and TV DXing was a hobby quite a few people got into.)

It had the added bonus of allowing regional services to be spliced into your local transmitter feed, so the six o’clock news usually had half an hour of national news and half an hour of local news. Regions also got local-language programming (Welsh, Gaelic, etc.) where those languages were widely used.

I should add that channel groups are still used today for terrestrial digital services for similar reasons (though I think the regional programming is done slightly differently), so even now the allocated band still looks pretty empty.

In the USA in the early 2000s I was into broadcast HD (ATSC). Sadly, I’m right between the major urban centers and broadcast towers. The local PBS station was VHF but did temporary broadcasting on UHF. This matters because a broadside array with multiple dipoles and a mesh to reflect and intensify the signal is easy to build for UHF, and small and portable too.

After we lost the UHF channel I was not able to receive the channel again, you need a much larger antenna for VHF.

Worse were the big broadcasters around here mashing five channels of very poor quality into one transmission. Only Fox and CBS are UHF; NBC is decidedly VHF. I wish they had taken the chance to use the superior UHF band exclusively; it seems like a missed opportunity.

In North America, channels were 6 MHz wide, with the video signal using about 5 MHz.

In the US we used to have channels 2 through 83, but there were usually only a couple dozen stations at most available in the big cities. Channel 1 was only used for experimental broadcasts in the 1940s. A bunch of the channels were reallocated to cell phones in the early 1980s, and a lot more were sold off after the analog stations were shut down. Now they only go up to 36, and the VHF channels (2-13) have rarely been used since the digital transition. Digital TV allows multiple channels on one RF channel, so there can be quite a few available in some areas now.
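The 6 MHz channel plan with channels 2 to 83 mentioned in these comments can be written down compactly. This Python sketch uses the classic US analog VHF/UHF allocation; the helper names are hypothetical, and the dipole estimate is only the textbook free-space half-wavelength (ignoring end effects), which also illustrates why VHF antennas are so much larger than UHF ones.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
CHANNEL_WIDTH_MHZ = 6

# (first channel, last channel, lower edge of the first channel in MHz)
US_BANDS = [
    (2, 4, 54),     # low VHF
    (5, 6, 76),     # low VHF, after the 72-76 MHz gap
    (7, 13, 174),   # high VHF
    (14, 83, 470),  # UHF, original 2-83 plan
]

def us_channel_band(channel: int) -> tuple[int, int]:
    """Return the (lower, upper) band edges in MHz of a US analog TV channel."""
    for first, last, base in US_BANDS:
        if first <= channel <= last:
            lower = base + CHANNEL_WIDTH_MHZ * (channel - first)
            return lower, lower + CHANNEL_WIDTH_MHZ
    raise ValueError(f"channel {channel} is outside the 2-83 plan")

def half_wave_dipole_m(channel: int) -> float:
    """Free-space half-wavelength at the channel's centre frequency, in metres."""
    lower, upper = us_channel_band(channel)
    centre_hz = (lower + upper) / 2 * 1e6
    return SPEED_OF_LIGHT_M_PER_S / centre_hz / 2

for ch in (2, 13, 14, 36, 83):
    lo, hi = us_channel_band(ch)
    print(f"ch {ch:2d}: {lo}-{hi} MHz, half-wave dipole ~{half_wave_dipole_m(ch):.2f} m")
```

A channel 2 dipole comes out at roughly 2.6 m, against about 0.25 m for channel 36, which matches the experience above of needing a much larger antenna for VHF.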

I wish. Around here, some channels stayed on 2-13, requiring a much larger antenna.

Sub-Nyquist sampling was used by Sony in the late 1970s for their early experiments with digital recording of TV signals. It exploited the property that the spectrum of a TV luminance (brightness) signal is crowded around harmonics of the line scan frequency. In NTSC video, the color information is designed to fit into the gaps between these harmonics, but in PAL this information was offset to either side of these frequencies due to PAL’s alternation of the color burst phase. This, coupled with the relatively low bandwidth of chrominance (color) information, opened an opportunity to ‘fold’ the digitized spectrum without losing or corrupting information by sampling it at less than the Nyquist frequency. It’s a clever trick, but by 1979 it was redundant because Sony built a ‘component’ digital recorder, directly digitizing the video luminance and chrominance signals. This not only worked a lot better than the digitized composite signal but opened the door to processing video completely digitally, something we take for granted today. (It was just a research novelty back then because the parts simply didn’t exist: the prototype used a large box of discrete logic, some of it being worked at its operational limits. The on-tape recording streams, for example, were four parallel 50 Mbit/s streams; these were serialized from memory a line at a time, but the DRAM available at the time had a 350 µs (yes, microsecond) cycle time!)
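The folding trick described above can be illustrated with an idealised spectrum. This Python sketch assumes a luminance spectrum made only of lines at integer multiples of the line frequency f_H, band-limited below the sampling rate, and a hypothetical sampling rate of 181.5 f_H (an odd multiple of f_H/2). It is not Sony’s actual parameter choice, and real spectra also have energy between the harmonics, so a practical system would need filtering as well.

```python
from fractions import Fraction

# Work in units of the line-scan frequency f_H so luminance harmonics are integers.
FS = Fraction(363, 2)  # hypothetical sampling rate: 181.5 * f_H

def fold(freq: Fraction, fs: Fraction = FS) -> Fraction:
    """Apparent frequency of a real tone after sampling at fs, folded into 0..fs/2."""
    f = freq % fs
    return fs - f if f > fs / 2 else f

def lands_in_gap(harmonic: int) -> bool:
    """True if the aliased harmonic falls halfway between two baseband harmonics."""
    return fold(Fraction(harmonic)).denominator == 2

# Harmonics below fs/2 stay where they are, on integer multiples of f_H ...
print([float(fold(Fraction(n))) for n in (10, 50, 90)])
# ... while harmonics between fs/2 and fs fold onto half-integer multiples,
# interleaving with the baseband lines instead of landing on top of them.
print(all(lands_in_gap(n) for n in range(91, 182)))
```

Because the folded components sit in the gaps of the unfolded ones, a comb-style filter can in principle separate them again, which is what lets the sampling rate drop below twice the signal bandwidth without irrecoverable overlap in this idealised case.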

IIRC, the place where that extra signal went was called the “Fukinuki hole”. Thank heavens MPEG encoding won the day.

And the EBU developed HD-MAC, a slightly higher-resolution analogue system, in response to proposals that the Japanese standard be adopted as a world standard.

I saw it demonstrated at an IEEE talk about FLOF Teletext; it was really quite impressive, but doomed.

“being based on a 60 Hz standard meant the European industry was not interested”

I’m curious about this. Surely, by the 1990s, new TV and video equipment didn’t depend on the mains clock for timing? Is there some other reason TVs in Europe couldn’t have worked with video fields at 60Hz? Bearing in mind, we’d already be talking about new hardware in every part of the system.

Given the timing, with a digital transition being inevitable by then, I can see why this was DOA regardless. As amazing as those demos would have looked in 1989, we ended up with the same result plus more channels by waiting a decade, so it would’ve been a terrible investment.

New hardware, sure, but realistically a change would be gradual, over a decade or more in which the new and old had to work side by side. Some people would keep their old systems even if the old transmissions were shut down too soon, and new sets would have to handle the old standard from legacy VCRs, computers, and such. So a converter box would have to downsample the new signal to the old one for those. A TV receiver and screen that can work with both the new and old standards is probably far easier and cheaper to build if the new one is close to the old, and there might be interference patterns between 50 Hz mains and 60 Hz equipment. Shielding and such work, but cost money.

Unless you’re a dictator, change in a country or region has to be smooth and take a long time to actually be adopted. For a lot of applications, a completely new system is such a hurdle that competing technology might push the new tech aside if people have to buy new shit and change how they use it: think of whether cable is worth the hassle when you only ever got broadcasts, or the internet suddenly coming along.

Hello! In my family, in the 90s, the VCR was the star of the living room.

It had a programmable, digitally-controlled TV tuner and AV input.

The TV was merely working as a dumb video monitor.

The VCR tuner was vastly superior to the TV’s built-in tuner, too.

When we had a satellite tuner, it was attached to that VCR via SCART.

So in other words, the VCR already was a set-top box that controlled everything.

So using an HDTV tuner instead wouldn’t have been all that different.

When DVB-T (and DVB-T2) replaced analog terrestrial TV in most parts of Europe, it was basically the same thing.

People then had to install a set-top box.

The box also did down-convert to RF or SCART, if no HDMI monitor was available.

+1. I got a surplus Apple II color monitor and used it for years with a second-hand RCA VCR for television. The colors and fidelity were insanely high quality.

My parents didn’t let us kids have a TV in our rooms, much less buy multiple TVs in the first place. So as a teen I also discovered this VCR tuning ability and, for many years, used an old second-hand VCR connected to a colour composite “computer” monitor with headphones as a TV.

Actually, most of the reason for early B&W TV to be line-locked was to prevent traveling bars due to poor power supply filtering. With the advent of NTSC color, the field rate was changed to 59.94 Hz to avoid interference with the sound subcarrier, so there was no longer any dependence on line frequency.
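The numbers behind that change can be checked with exact arithmetic. This is a minimal Python sketch assuming the standard NTSC System M relationships: the 4.5 MHz sound carrier offset was kept from black-and-white, the line rate was set to 4.5 MHz / 286, and the colour subcarrier to 455/2 times the line rate. The constant names are our own.

```python
from fractions import Fraction

SOUND_OFFSET_HZ = Fraction(4_500_000)            # sound carrier 4.5 MHz above vision
LINE_RATE_HZ = SOUND_OFFSET_HZ / 286             # colour-era line rate, ~15,734.27 Hz
FIELD_RATE_HZ = LINE_RATE_HZ * 2 / 525           # 525 lines per frame, 2 fields per frame
SUBCARRIER_HZ = LINE_RATE_HZ * Fraction(455, 2)  # colour subcarrier, ~3.579545 MHz

# Beat between the sound carrier and the colour subcarrier, in units of the line rate.
beat_in_lines = (SOUND_OFFSET_HZ - SUBCARRIER_HZ) / LINE_RATE_HZ

print(f"line rate   {float(LINE_RATE_HZ):.2f} Hz")
print(f"field rate  {float(FIELD_RATE_HZ):.4f} Hz (exactly {FIELD_RATE_HZ})")
print(f"subcarrier  {float(SUBCARRIER_HZ):,.0f} Hz")
print(f"beat / f_H  {beat_in_lines}")
```

The field rate comes out at exactly 60000/1001, about 59.94 Hz, and the sound/subcarrier beat lands at 117/2 (58.5) times the line rate, an odd multiple of half the line frequency, so it interleaves with the picture’s line harmonics rather than forming a stationary pattern.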

Yes, incandescent lighting.

The strobing effect would have been unwatchable.

That’s right. But the 50/60 Hz flicker was noticeable and annoying anyway. 🥲

That’s why 100 Hz/120 Hz TVs were introduced by the late 1980s/early 1990s.

Today, of course, people no longer remember these.

The 100 Hz/120 Hz CRT TVs were usually higher end and not that mainstream.

On the IBM PC side, only MDA/Hercules graphics ran at 50 Hz.

– I guess that’s why it was so beloved here in the PAL/50 Hz land of Germany. ;)

The other IBM standards, such as CGA/EGA/VGA, ran at 60 Hz most of the time.

(Except in 400-line modes, where VGA ran at about 70 Hz.

Super VGA cards ran custom frequencies, depending on the video/mode driver.

European PCs with on-board CGA sometimes ran their CGA video at 50 Hz, too.)
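Those refresh rates follow directly from the dot clock and the total (visible plus blanking) raster size. This Python sketch uses commonly documented timing figures for MDA and standard VGA modes; treat the exact totals as assumptions, since clone cards and Super VGA drivers varied.

```python
# (dot clock in Hz, total dots per line, total lines per frame); commonly quoted values.
MODES = {
    "MDA 720x350": (16_257_000, 882, 370),
    "VGA 640x480": (25_175_000, 800, 525),
    "VGA 720x400 text": (28_322_000, 900, 449),
    "VGA 320x200 (400 scanned lines)": (25_175_000, 800, 449),
}

def refresh_hz(dot_clock_hz: float, dots_per_line: int, lines_per_frame: int) -> float:
    """Vertical refresh = dot clock / total dots per line / total lines per frame."""
    return dot_clock_hz / dots_per_line / lines_per_frame

for name, (clock, dots, lines) in MODES.items():
    h_khz = clock / dots / 1000
    print(f"{name:33s} H {h_khz:6.2f} kHz  V {refresh_hz(clock, dots, lines):5.2f} Hz")
```

With these figures MDA lands just under 50 Hz, VGA 640x480 at about 59.94 Hz, and both 400-line VGA modes at about 70.09 Hz, because they keep the same 31.5 kHz line rate but scan fewer total lines per frame.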

The decay/persistence of the phosphor in the tube was important.

Analog B&W on a good set was beautiful.

Oof. That’s a complicated matter, I’m afraid.

Yes, in principle most TVs in countries of “Western” Europe (political west) in the 1990s were capable of 60 Hz.

Video monitors such as the Commodore 1084S had knobs to adjust image frequencies manually,

while the then-new SCART TVs usually had auto-detect.

Then-new video recorders could play both NTSC and PAL tapes (sometimes SECAM), too.

That was important for viewing VHS imports (there was a market for this).

But then you had the ordinary people with their vintage SABA, Blaupunkt and Telefunken TV sets from the 1960s/1970s.

These things were very inflexible and had no AV or SCART inputs,

but merely an RF antenna jack on the back of the wooden chassis.

You could connect an NES or VCR to that, at very best. The VCR had the better tuner, in most cases.

Such traditional TVs had been in wide use up until the 2000s.

Some people in Europe still used black/white TVs in the 1990s.

Kids especially used such older TVs to play the NES/Dendy, Master System, and so on in their bedrooms.

They also watched cartoons on them, since the NES came with an automatic switchbox.

For example, in poor GDR (East Germany), up until the fall of the Berlin Wall in 1989 and the following reunification in 1990, color TVs were a luxury.

B/W TVs were normal, but still not cheap, either.

So I suppose they bought new color TVs in the mid-1990s.

The difference, I guess, is that in Europe TVs and the latest technology were not always as much of a status symbol as in the US.

Some people were glad to have a working TV, at all. And they were happy with VHS, too. Some didn’t want to give up using C64 or Amiga 500 etc.

In my country, I think, DVD wasn’t mainstream until 2002 or so.

But that’s okay. In the early 1990s, LaserDisc and VCD/CD-i (official releases) were already available to film fans.

Yes, quite niche media. But it was available to the people looking for better-than-VHS quality, at least.

So all in all it’s very difficult to make a statement, I think.

For example, terrestrial antenna reception was still normal in the 1990s.

People had these big UHF/VHF beam antennas on the roof.

It was still normal back then.

Same time, some people had cable TV. Especially in houses with many families.

Then you had the TV fans who had a satellite dish (parabolic mirror) to receive analog satellite TV via Astra satellite, for example. Or Hotbird etc.

Some of these people had had their satellite dishes since the 1980s already.

At the time, the satellite system had the capacity to transmit digital radio already.

Digital TV or MUSE would have been possible via these satellites, too.

So in principle, European users could have received HD signals (if there were any) if they had an appropriate satellite receiver.

The receiver could have done any conversion for an existing SD monitor, too.

A multisync or VGA monitor could have served as a cheap HD video monitor as early as 1987.

But these are just my two cents. :)

Back in the early 1990s, a large part of what is now the EU suffered from recession, and recovery dragged on through much of the decade. Completely binning the old TV system and building a new one from scratch was more risk than most could stomach.

The issue wasn’t that the TVs weren’t clocked to the mains – but lots of lighting (particularly discharge and fluorescent lighting) was. If you shoot 50Hz lighting at 60Hz you get 10Hz lighting flicker.

There was also the issue of compatibility with SD, particularly for transmission and production (early HD production often required numerous SD sources to be upconverted). Spatial upconversion is relatively straightforward. Frame rate conversion is difficult to do well and expensive.

Japan has both 50Hz and 60Hz mains frequencies and does, indeed, suffer badly from flicker in some situations when 50Hz lighting is shot at 60Hz.

Here in Europe the TV manufacturers tried to push analog HD TV, but it never got beyond plans because regulators pushed back and a standard was never adopted. I think this was in the ’90s. By then it was already pretty clear that digital would be the future before too long, and implementing analog HD TV for a period of 5 to 10 years would have led to an enormous transfer of money from consumers to equipment manufacturers. Good for the manufacturers, but not so good for the consumers, who would quickly see their expensive equipment go obsolete.

In those days most people also still remembered the VHS versus Betamax war.

It’s also quite amazing how short-lived some of these technologies were. DVDs were introduced in 1997 and took a few years to reach reasonable market adoption; DVD sales peaked in 2005, and 10 years later DVD was back to being a small niche market.

It seems rather unlikely that they pushed back when the EBU was directed to create an analogue HDTV standard, but who knows; in most democracies one arm of the state often has no idea what the other limbs are doing.

Funding some research and drawing conclusions from that and acting on those is very much different from “no idea what the other limbs are doing”.

And Video 2000! 😝

Yeah, people scared of format wars…

Why have three analogue TV standards (PAL, SECAM, and NTSC)…

When we can have FOUR digital ones (DVB, ATSC, ISDB, and DMB-T), not to mention the substandards for satellite/cable/terrestrial, or the mobile variants like DVB-H, and so on…

Aside from NTSC vs. everything else, which was somewhat of a special case where the former was definitely older and worse, the rest is a matter of protectionism/nationalism and geopolitics rather than meaningful technical superiority.

Even the (very useful) SCART connector was introduced in France, before home computers, consoles, and VCRs were a thing, with the sole aim of protecting French industry. (In that case it was a futile attempt, given that within a few years it became indispensable and everyone started building devices with it, no matter the nation involved.)

P.S. Back to MUSE: almost no one seems to remember that its aspect ratio was 5/3 (15/9 in gen Z jargon). I’m not sure when it was moved to 16/9, which was chosen in Europe for the HD-MAC standard (killed in the cradle by the upcoming digital TV).

Remembering its 165lbs/75kg heft while helping a delivery person position my parents’ first digital HD TV – a Sony Trinitron CRT – I thank the Ford that plasma flat screens didn’t take long to push HD CRTs of any sort to the curb. I like my intervertebral discs just the way they are.

It isn’t widely remembered that France had an HD system with 819 lines as long ago as 1949:

https://en.wikipedia.org/wiki/819_line

However, there were difficulties both with transmission (it needed an awful lot of bandwidth) and reception (it was hard to make sets that did justice to it), and importantly it was monochrome only, which by the 1960s was a serious disadvantage. It continued until the middle 1980s.

The problem was the bandwidth, I think. It limited the number of channels.

But not by that much, either. A nice b/w TV signal is 6 MHz (video only: 4), while the 819-line system needed about 14 MHz (video only: 10).

Unfortunately, people back then saw it differently and tried to keep things down.

Or perhaps they had issues with electrical band filters etc?

Maybe the receivers in those HD TVs had to use non-standard parts; not sure.

As for monochrome being a disadvantage, I’m not sure.

In the neighboring country, Germany, color TV started late in the 1970s.

And many citizens over here were not impressed by the poor color quality at first.

Quite a few preferred a good b/w TV set instead.

Also, color TVs needed much more repairs at the time.

B/w sets were less complicated, less expensive and less failure prone.

But that’s Germany and not France.

Not sure how French citizens had felt about it, they’re strange sometimes.

I merely know that they later used PAL in TV studios, because SECAM couldn’t be mixed with other video sources.

Not sure where you got your timeline Joshua, unless you are talking about the DDR (aka East Germany).

Both the UK and West Germany (BRD) started to go PAL colour in 1967 on their 625 services, and France similarly on their second channel, which was also 625, with SECAM. (The French first channel remained 819 black and white until around 1974 I believe, before 625 colour SECAM simulcasts started)

It’s hard to argue that DVDs looked better; they were not high definition. I’m not sure that was the case. I recall comments about slower adoption in Japan of rights-restricted digital HDTV, since the early adopters already had Hi-Vision and W-VHS, so they could freely record stuff if they wished. It didn’t help that Hi-Vision was listed as 1125 lines of resolution, so 1080 sounded like a small downgrade (although 1080-line material has higher actual resolution, since it isn’t doing all that subsampling).