It’s relatively easy to understand how optical microscopes work at low magnifications: one lens magnifies an image, the next magnifies the already-magnified image, and so on until it reaches the eye or sensor. At high magnifications, however, that model starts to fail when the feature size of the specimen nears the optical system’s diffraction limit. In a recent video, [xoreaxeax] built a simple microscope, then designed another microscope to overcome the diffraction limit without lenses or mirrors (the video is in German, but with automatic English subtitles).

The first part of the video goes over how lenses work and how they can be combined to magnify images. The first microscope was made from camera lenses and could resolve onion cells. The shorter the focal length of the objective lens, the stronger the magnification, and for a lens of a given diameter, a sphere gives the shortest focal length. [xoreaxeax] therefore made one by melting a bit of soda-lime glass with a torch. The resulting image was highly magnified, but indistinct.
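The ball-lens trick can be put into numbers with the standard paraxial formula for a glass sphere's effective focal length, EFL = nD / (4(n − 1)), measured from the sphere's centre. The sketch below assumes n ≈ 1.52 for soda-lime glass and the conventional 250 mm viewing distance used to quote magnifying power; the bead diameters are hypothetical, not measurements from the video. It also expresses the "each stage magnifies the previous image" model as a simple product of stage magnifications.

```python
import math

# Assumed values (not from the video): soda-lime glass index and the
# conventional 250 mm near-point distance used to quote magnifying power.
SODA_LIME_N = 1.52
NEAR_POINT_MM = 250.0


def ball_lens_efl(diameter_mm: float, n: float = SODA_LIME_N) -> float:
    """Paraxial effective focal length of a glass sphere (mm), measured from its centre."""
    return n * diameter_mm / (4.0 * (n - 1.0))


def simple_magnifier_power(focal_length_mm: float) -> float:
    """Conventional magnifying power of a single lens: 250 mm / f."""
    return NEAR_POINT_MM / focal_length_mm


def compound_magnification(*stages: float) -> float:
    """Each stage magnifies the image from the previous one, so the factors multiply."""
    return math.prod(stages)


if __name__ == "__main__":
    for d in (1.0, 2.0, 5.0):  # hypothetical bead diameters in mm
        f = ball_lens_efl(d)
        print(f"D = {d:.1f} mm: EFL = {f:.2f} mm, about {simple_magnifier_power(f):.0f}x")
    # e.g. a hypothetical 10x objective followed by a 5x relay stage
    print("compound:", compound_magnification(10.0, 5.0))
```

Under these assumptions a 1 mm bead has a focal length of roughly 0.73 mm (about 340x as a simple magnifier), which is why even a crude melted sphere magnifies so strongly, although a ball lens also suffers from severe spherical aberration.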

Besides the dodgy quality of a lens made by melting a shard of glass, at such high magnification some of the indistinctness was caused by the specimen acting as a diffraction grating and directing some light away from the objective lens. [xoreaxeax] visualized this by photographing a laser shining through a pinhole at a series of focal planes, thus getting cross sections of the light field emanating from the pinhole. Repeating the procedure with a section of onion skin showed that diffraction was strongly scattering the light, so some of it was being diffracted outside the lens’s acceptance angle, and the detail it carried was lost.
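The grating picture can be made quantitative. For a periodic structure of period d lit at normal incidence, diffraction order m leaves at sin θ_m = mλ/d, and an objective only collects orders with |sin θ_m| ≤ NA. Under on-axis coherent illumination the first orders must enter the lens for the periodic detail to appear at all, which puts the cutoff period at about λ/NA. The 650 nm wavelength and NA of 0.25 below are assumed, illustrative values, not figures from the video.

```python
import math


def diffraction_angles_deg(period_um, wavelength_um, max_order=5):
    """Propagating orders of a grating at normal incidence: sin(theta_m) = m * wavelength / period."""
    angles = {}
    for m in range(-max_order, max_order + 1):
        s = m * wavelength_um / period_um
        if abs(s) <= 1.0:  # |sin| > 1 means the order is evanescent and does not propagate away
            angles[m] = math.degrees(math.asin(s))
    return angles


def collected_orders(period_um, wavelength_um, na, max_order=5):
    """Orders that fall inside the objective's acceptance cone (|sin theta| <= NA)."""
    return [m for m in range(-max_order, max_order + 1)
            if abs(m * wavelength_um / period_um) <= na]


def coherent_cutoff_period_um(wavelength_um, na):
    """Smallest period whose first orders still enter the lens under on-axis coherent light."""
    return wavelength_um / na


if __name__ == "__main__":
    wavelength, na = 0.65, 0.25  # assumed red laser (um) and objective NA
    print("cutoff period:", coherent_cutoff_period_um(wavelength, na), "um")
    for period in (10.0, 2.0):  # hypothetical feature periods in um
        print(period, "um ->", collected_orders(period, wavelength, na))
```

With these numbers a 2 µm period sends its first orders out at about 19°, outside the roughly 14.5° half-angle of an NA 0.25 lens, so only the undiffracted light is collected and that detail is lost.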

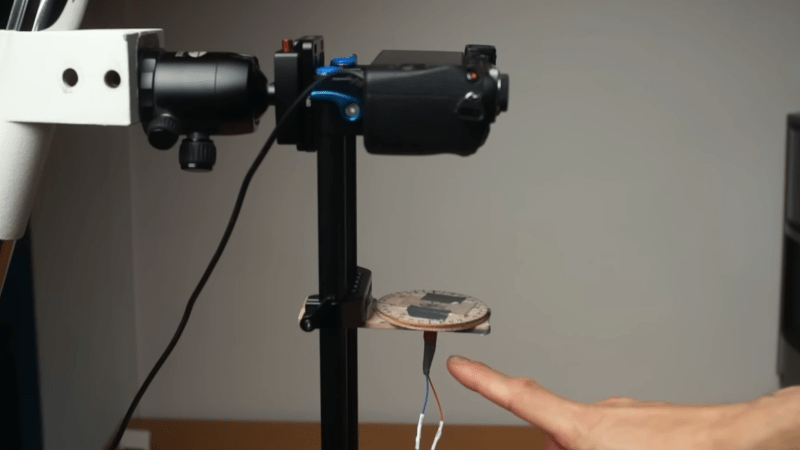

To recover the lost details, [xoreaxeax] eliminated the lenses entirely, captured the interference patterns produced by passing light through the sample, then wrote a ptychography algorithm to reconstruct the original structure from those patterns. This required many images of the subject under different lighting conditions, which a rotating illumination stage provided. The algorithm eventually recovered a rough image of the onion cells, though not a sharp one. That the lens-free setup produced any image at all is nonetheless impressive.
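As a rough illustration of how a lens-free reconstruction can work, the sketch below simulates a thin specimen, records only the intensity at a sensor a short distance behind it for several illumination tilts, and then recovers amplitude and phase by repeatedly propagating between sample and sensor, enforcing the measured amplitude each time. This is a plain multi-angle, Gerchberg-Saxton-style phase retrieval using angular-spectrum propagation, not the algorithm from the video and not a full ptychographic solver such as ePIE; the grid size, 1 µm pixel pitch, 0.65 µm wavelength, 50 µm sample-to-sensor gap, tilt angles, and specimen are all hypothetical.

```python
import numpy as np

# Assumed simulation parameters (hypothetical, not from the video); lengths in micrometres.
N = 64              # grid size in pixels
DX = 1.0            # sensor/sample pixel pitch
WAVELENGTH = 0.65   # red laser
Z = 50.0            # sample-to-sensor distance


def propagate(field, z, wavelength=WAVELENGTH, dx=DX):
    """Angular-spectrum free-space propagation over distance z (negative z propagates backwards)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=dx)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength ** 2 - fxx ** 2 - fyy ** 2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)  # drop evanescent components
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def tilt(kx, ky, n=N):
    """Unit-amplitude tilted plane wave; whole cycles across the grid keep it FFT-periodic."""
    i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    return np.exp(2j * np.pi * (kx * i + ky * j) / n)


def make_specimen(n=N):
    """Hypothetical thin specimen: one absorbing blob plus one phase-shifting blob."""
    i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    amplitude = 1 - 0.4 * np.exp(-((i - 20) ** 2 + (j - 30) ** 2) / (2 * 4.0 ** 2))
    phase = 0.8 * np.exp(-((i - 40) ** 2 + (j - 35) ** 2) / (2 * 6.0 ** 2))
    return amplitude * np.exp(1j * phase)


def data_error(obj, intensities, tilts):
    """Sum of squared differences between predicted and measured sensor amplitudes."""
    return sum(np.sum((np.abs(propagate(obj * p, Z)) - np.sqrt(meas)) ** 2)
               for meas, p in zip(intensities, tilts))


def reconstruct(intensities, tilts, sweeps=30):
    """Cycle through the tilts, enforcing each measured amplitude at the sensor in turn."""
    obj = np.ones((N, N), dtype=complex)  # flat starting guess
    for _ in range(sweeps):
        for meas, p in zip(intensities, tilts):
            psi = propagate(obj * p, Z)                        # sample -> sensor
            psi = np.sqrt(meas) * np.exp(1j * np.angle(psi))   # keep phase, use measured amplitude
            obj = propagate(psi, -Z) * np.conj(p)              # sensor -> sample, remove the tilt
    return obj


if __name__ == "__main__":
    truth = make_specimen()
    tilts = [tilt(kx, ky) for kx, ky in [(0, 0), (3, 0), (-3, 0), (0, 3), (0, -3)]]
    intensities = [np.abs(propagate(truth * p, Z)) ** 2 for p in tilts]  # what a camera would record
    flat = np.ones((N, N), dtype=complex)
    print("mismatch, flat guess:", data_error(flat, intensities, tilts))
    print("mismatch, reconstructed:", data_error(reconstruct(intensities, tilts), intensities, tilts))
```

The tilts are whole numbers of cycles across the grid so the plane waves stay periodic under the FFT. In this noise-free simulation the mismatch should end up lower than for the flat starting guess; real measurements add noise, angle errors, and imperfect illumination that the sketch ignores.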

For another take on ptychography, check out [Ben Krasnow’s] approach to increasing microscope resolution. With an electron microscope, ptychography can even image individual atoms.

“one lens magnifies an image, the next magnifies the already-magnified image”… this is possibly THE worst explanation of an optical assembly I have ever seen.

Nice to see interference imaging has reached the kitchen table. Some colleagues of mine are also working on a lens-free camera system: https://www.imec-int.com/en/expertise/health-technologies/lens-free-imaging

I take it they aren’t rotating a stage to map the diffraction pattern. How do they get near realtime images with this technique?

Awesome project! I wonder if there is a write-up.

Although interesting, I don’t really see a vaunting application. But perhaps combining this with a microlens array could work; there might be something there.

The underlying algorithm is kind of a big deal in transmission electron microscopy for single-atom resolution. It looks like tremendous fun was had, and that’s the important part here.

I liked this video! At its conclusion, our scientist / content creator talks about how his actual measurements don’t match his simulated results. He speculates that a better laser would make the difference. It is true that laser diodes aren’t great for interferometry (e.g. holography) because of their short coherence length (the distance along the beam over which you can expect monochromatic light to stay in phase with itself), but his onion skin subject is also very thin (short), so maybe a laser diode is adequate here (if not, cheap HeNe lasers are still available on eBay). I am reminded that software defined radio (SDR) algorithms are very sensitive to A2D “sample clock jitter.” Xoreaxeax’s manual rotary stage may be introducing analogous inaccuracies that are reducing his data quality. Using a stepper motor would clean up his angle-related measurements and improve reproducibility. Last but not least, Xoreaxeax’s homemade pinhole is introducing artifacts clearly visible in the video. IMHO, a laboratory-quality spatial filter plus an XY stage to center the laser beam on the pinhole would be well worth the investment.
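For scale, the coherence-length argument in the comment above can be estimated with the common order-of-magnitude formula L_c ≈ λ²/Δλ (prefactors differ between definitions). The linewidths below are assumed typical figures for a multimode red laser diode and a multimode HeNe tube, not measurements of the hardware in the video.

```python
def coherence_length_m(center_wavelength_m: float, linewidth_m: float) -> float:
    """Order-of-magnitude coherence length, L_c ~ wavelength**2 / linewidth."""
    return center_wavelength_m ** 2 / linewidth_m


if __name__ == "__main__":
    # Assumed, typical linewidths (not measured): ~1 nm for a multimode 650 nm diode,
    # ~0.002 nm for a multimode 632.8 nm HeNe tube.
    sources = {"laser diode": (650e-9, 1e-9), "HeNe": (632.8e-9, 0.002e-9)}
    for name, (lam, dlam) in sources.items():
        print(f"{name}: ~{coherence_length_m(lam, dlam) * 1e3:.2f} mm")
```

That works out to roughly 0.4 mm for the diode and about 20 cm for the HeNe. If the onion skin is on the order of tens of micrometres thick (an assumption), even the diode's coherence length is comfortably longer, which is consistent with the guess that a diode may be adequate for this sample.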